Is Robotization Destroying or Creating Jobs?

Solve Loading Dock Challenges With Robotic Unloading

Neuron EV Unveils the Future of Smart Travel and Residence

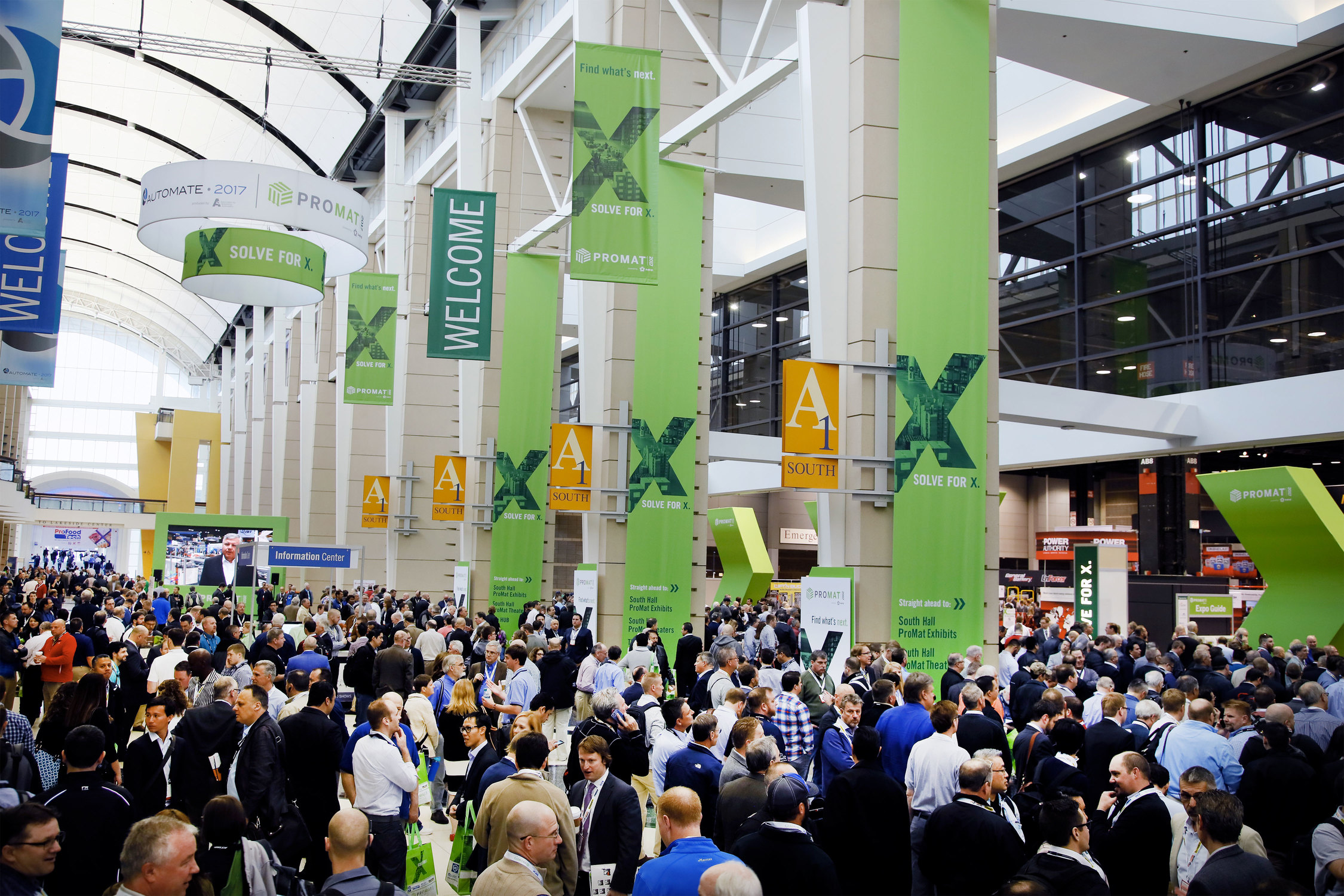

#290: AI Powered Robotic Picking at Promat 2019, with Vince Martinelli, Jim Liefer, Pete Blair, Sean Davis and Erik Nieves

In this episode, join our interviewer Andrew Vaziri at Promat 2019, the largest expo for manufacturing and supply chain professionals in North and South America. Andrew interviews a handful of companies which provide warehouse fulfillment robots that can autonomously pick and place items. Our guests explain how advances in AI have made autonomous picking possible. They also talk about the unique technologies they use to stand out in a crowded field of competing products.

The guests in the order they appear are:

Vince Martinelli, Head of Product and Marketing, RightHand Robotics

Jim Liefer, CEO, Kindred

Pete Blair, VP of Marketing, Berkshire Grey

Sean Davis, Technical Product Manager, Osaro

Erik Nieves, CEO, Plus One Robotics

See video of each robot picking items on our YouTube page:


Team programs a humanoid robot to communicate in sign language

Treating Heart Disease with Robotic Precision and Safety

Sharing control with robots may make manufacturing safer, more efficient

Brain Corp., maker of robot janitors for Walmart, moving into European market

First-ever Successful Mind-controlled Robotic Arm Without Brain Implants

IDS Imaging Case Study – Automatic Quality Control in Car Body Construction in Real Time

Robotics Zone Showcases Innovation at PACK EXPO Las Vegas and co-located Healthcare Packaging EXPO

Robots can play key roles in repairing our infrastructure

Pipeline inspection robot

I was on the phone recently with a large multinational corporate investor discussing the applications for robotics in the energy market. He expressed his frustration about the lack of products to inspect and repair active oil and gas pipelines, citing too many catastrophic accidents. His point was further endorsed by a Huffington Post article that reported that, over a twenty-year period, such tragedies led to 534 deaths, more than 2,400 injuries, and more than $7.5 billion in damages. The study concluded that an incident occurs every 30 hours across America’s vast transcontinental pipelines.

The global market for pipeline inspection robots is estimated to exceed $2 billion in the next six years, more than tripling today’s $600 million in sales. The Zion Market Research report states: “Robots are being used increasingly in various verticals in order to reduce human intervention from work environments that are dangerous … Pipeline networks are laid down for the transportation of oil and gas, drinking waters, etc. These pipelines face the problem of corrosion, aging, cracks, and various other types of damages…. As the demand for oil and gas is increasing across the globe, it is expected that the pipeline network will increase in length in the near future thereby increasing the popularity of the in-pipe inspection robots market.”
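As a rough check on what that forecast implies, the two figures quoted above ($600 million today, $2 billion in six years) can be turned into a compound annual growth rate. This is a back-of-the-envelope sketch, not a figure from the Zion report: the function name is illustrative, and because the report says the market will "exceed" $2 billion, the result is best read as a lower bound.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate that takes start_value to end_value in `years` years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the article, in millions of dollars.
TODAY_SALES_M = 600.0
FORECAST_SALES_M = 2000.0
FORECAST_YEARS = 6

rate = implied_cagr(TODAY_SALES_M, FORECAST_SALES_M, FORECAST_YEARS)
print(f"Implied annual growth: {rate:.1%}")
```

Under these assumptions the forecast works out to growth of roughly 22 percent a year, sustained for six years.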

Industry consolidation plays key role

Another big indicator of this burgeoning industry is growing consolidation. In December 2017, Pure Technologies was purchased by New York-based Xylem for more than $500 million. Xylem was already a leader in smart technology solutions for water and waste management pump facilities. Its acquisition of Pure enabled the industrial company to expand its footprint into the oil and gas market. Utilizing Pure’s digital inspection expertise with mechatronics, the combined companies are able to take a leading position in pipeline diagnostics.

Patrick Decker, Xylem president and chief executive, explained, “Pure’s solutions strongly complement the broader Xylem portfolio, particularly our recently acquired Visenti and Sensus solutions, creating a unique and disruptive platform of diagnostic, analytics and optimization solutions for clean and wastewater networks. Pure will also bring greater scale to our growing data analytics and software-as-a-service capabilities.”

According to estimates at the time of the merger, almost 25% of Pure’s business was in the oil and gas industry. Today, Pure offers a suite of products for above ground and inline inspections, as well as data management software. In addition to selling its machines, sensors and analytics to the energy sector, it has successfully deployed units in thousands of waterways globally.

This past February, Eddyfi (a leading provider of testing equipment) acquired Inuktun, a manufacturer of semi-autonomous robotic crawling systems. This was the sixth acquisition by fast-growing Eddyfi in less than three years. As Martin Thériault, Eddyfi’s CEO, elaborates: “We are making a significant bet that the combination of Inuktun robots with our sensors and instruments will meet the increasing needs from asset owners. Customers can now select from a range of standard Inuktun crawlers, cameras and controllers to create their own off-the-shelf, yet customized, solutions.”

Colin Dobell, president of Inuktun, echoed Thériault’s sentiments: “This transaction links us with one of the best! Our systems and technology are suitable to many of Eddyfi Technologies’ current customers and the combination of the two companies will strengthen our position as an industry leader and allow us to offer truly unique solutions by combining some of the industry’s best NDT [Non Destructive Testing] products with our mobile robotic solutions. The future opportunities are seemingly endless. It’s very exciting.” In addition to Xylem and Eddyfi, other entrants in this space include CUES, Envirosight, GE Inspection Robotics, IBAK Helmut Hunger, Medit (Fiberscope), RedZone Robotics, MISTRAS Group, RIEZLER Inspektions Systeme, and Honeybee Robotics.

Repairing lines with micro-robots

While most of the current technologies focus on inspection, the bigger opportunity could be in actively repairing pipelines with micro-bots. Last year, the government of the United Kingdom began a $35 million study with six universities to develop mechanical insect-like robots to automatically fix its large underground network. According to the government’s press release, the goal is to develop robots of one centimeter in size that will crawl, swim and quite possibly fly through water, gas and sewage pipes. The government estimates that underground infrastructure accounts for $6 billion annually in labor and business disruption costs.

One of the institutions charged with this endeavor is the University of Sheffield’s Department of Mechanical Engineering led by Professor Kirill Horoshenkov. Dr. Horoshenkov boasts that his mission is more than commercial as “Maintaining a safe and secure water and energy supply is fundamental for society but faces many challenges such as increased customer demand and climate change.”

Horoshenkov, a leader in acoustical technology, expands further on the research objectives of his team, “Our new research programme will help utility companies monitor hidden pipe infrastructure and solve problems quickly and efficiently when they arise. This will mean less disruption for traffic and general public. This innovation will be the first of its kind to deploy swarms of miniaturised robots in buried pipes together with other emerging in-pipe sensor, navigation and communication solutions with long-term autonomy.”

England is becoming a hotbed for robotic insects; last summer Rolls-Royce shared with reporters its efforts in developing mechanical bugs to repair airplane engines. The engineers at the British aerospace giant were inspired by Harvard professor Robert Wood’s research on an ambulatory microrobot for search and rescue missions. James Kell of Rolls-Royce proclaims this could be a game changer: “They could go off scuttling around reaching all different parts of the combustion chamber. If we did it conventionally it would take us five hours; with these little robots, who knows, it might take five minutes.”

Currently the Harvard robot is too large to buzz through jet engines, but Rolls-Royce is not waiting for Boston’s scientists: together with the University of Nottingham, it has established a Centre for Manufacturing and On-Wing Technologies “to design and build a range of bespoke prototype robots capable of performing jet engine repairs remotely.” The project lead, Dragos Axinte, is optimistic about the spillover effect of this work into the energy market: “The emergence of robots capable of replicating human interventions on industrial equipment can be coupled with remote control strategies to reduce the response time from several days to a few hours. As well as with any Rolls-Royce engine, our robots could one day be used in other industries such as oil, gas and nuclear.”

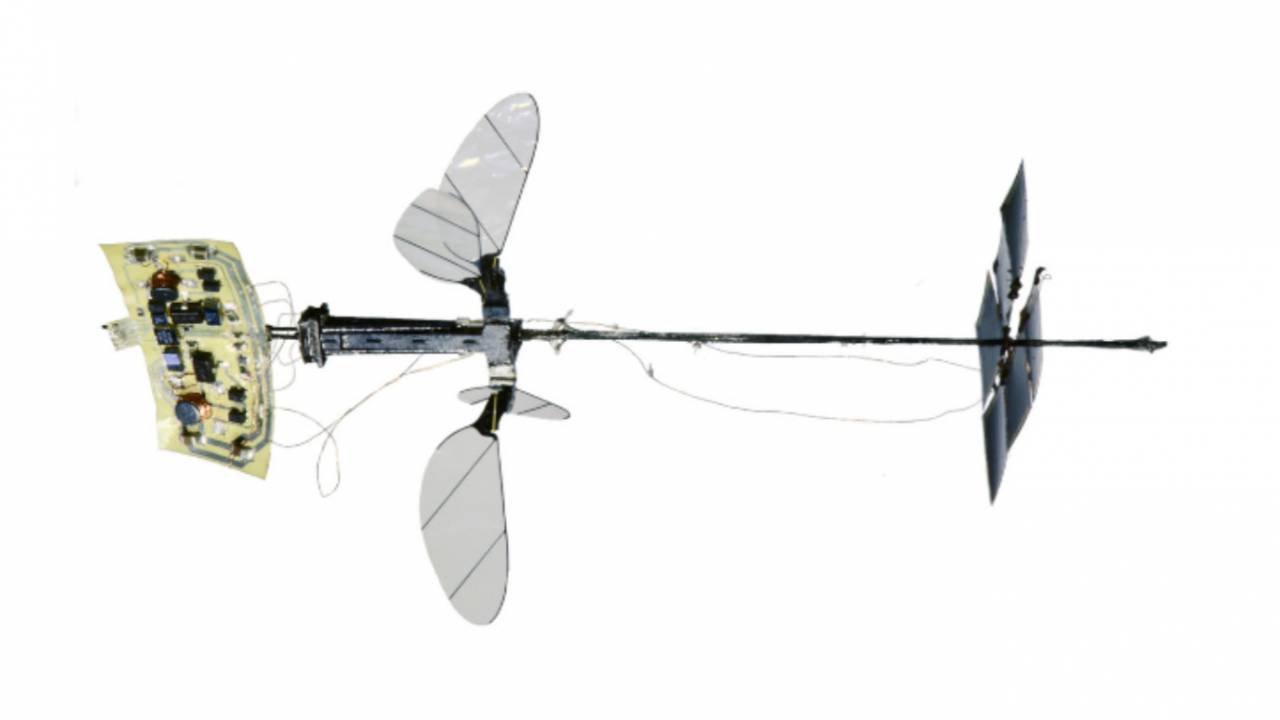

The RoboBee flies solo

By Leah Burrows

In the Harvard Microrobotics Lab, on a late afternoon in August, decades of research culminated in a moment of stress as the tiny, groundbreaking Robobee made its first solo flight.

Graduate student Elizabeth Farrell Helbling, Ph.D.’19, and postdoctoral fellow Noah T. Jafferis, Ph.D., from Harvard’s Wyss Institute for Biologically Inspired Engineering, the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS), and the Graduate School of Arts and Sciences, caught the moment on camera.

Helbling, who has worked on the project for six years, counted down: “Three, two, one, go.”

The bright halogens switched on and the solar-powered Robobee launched into the air. For a terrifying second, the tiny robot, still without on-board steering and control, careened towards the lights.

Off camera, Helbling exclaimed and cut the power. The Robobee fell dead out of the air, caught by its Kevlar safety harness.

“That went really close to me,” Helbling said, with a nervous laugh.

“It went up,” Jafferis, who has also worked on the project for about six years, responded excitedly from the high-speed camera monitor where he was recording the test.

And with that, Harvard University’s Robobee reached its latest major milestone — becoming the lightest vehicle ever to achieve sustained untethered flight.

“This is a result several decades in the making,” said Robert Wood, Ph.D., Core Faculty member of the Wyss Institute, the Charles River Professor of Engineering and Applied Sciences at SEAS, and principal investigator of the Robobee project. “Powering flight is something of a Catch-22 as the tradeoff between mass and power becomes extremely problematic at small scales where flight is inherently inefficient. It doesn’t help that even the smallest commercially available batteries weigh much more than the robot. We have developed strategies to address this challenge by increasing vehicle efficiency, creating extremely lightweight power circuits, and integrating high efficiency solar cells.”

The milestone is described in Nature.

To achieve untethered flight, this latest iteration of the Robobee underwent several important changes, including the addition of a second pair of wings. “The change from two to four wings, along with less visible changes to the actuator and transmission ratio, made the vehicle more efficient, gave it more lift, and allowed us to put everything we need on board without using more power,” said Jafferis. (The addition of the wings also earned this Robobee the nickname X-Wing, after the four-winged starfighters from Star Wars.)

That extra lift, with no additional power requirements, allowed the researchers to cut the power cord — which has kept the Robobee tethered for nearly a decade — and attach solar cells and an electronics panel to the vehicle.

The solar cells, the smallest commercially available, weigh 10 milligrams each and produce 0.76 milliwatts of power per milligram when the sun is at full intensity. The Robobee X-Wing needs the power of about three Earth suns to fly, making outdoor flight out of reach for now. Instead, the researchers simulate that level of sunlight in the lab with halogen lights. The solar cells are connected to an electronics panel under the bee, which converts the low voltage signals of the solar array into high voltage drive signals needed to control the actuators. The solar cells sit about three centimeters above the wings, to avoid interference.

In all, the final vehicle, with the solar cells and electronics, weighs 259 milligrams (about a quarter of a paper clip) and uses about 120 milliwatts of power, which is less power than it would take to light a single bulb on a string of LED Christmas lights.
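The figures above are enough for a rough power budget that shows why one sun is not enough. The sketch below uses only the numbers quoted in the article (10 milligrams per cell, 0.76 milliwatts per milligram at full sun, about three suns of light, about 120 milliwatts of demand). It assumes cell output scales linearly with light intensity and ignores losses in the electronics panel, so it is an estimate rather than the researchers' own calculation; the function names are illustrative.

```python
import math

# Figures quoted in the article.
CELL_MASS_MG = 10.0             # mass of one solar cell
POWER_DENSITY_MW_PER_MG = 0.76  # cell output per milligram at one sun
VEHICLE_POWER_MW = 120.0        # approximate power the vehicle uses
LAB_SUNS = 3.0                  # approximate intensity the halogens simulate


def cell_power_mw(suns):
    """Output of one cell, assuming it scales linearly with light intensity."""
    return CELL_MASS_MG * POWER_DENSITY_MW_PER_MG * suns


def cells_needed(required_mw, suns):
    """Smallest whole number of cells that covers the demand, ignoring losses."""
    return math.ceil(required_mw / cell_power_mw(suns))


for suns in (1.0, LAB_SUNS):
    n = cells_needed(VEHICLE_POWER_MW, suns)
    print(f"{suns:.0f} sun(s): {cell_power_mw(suns):.1f} mW per cell, "
          f"{n} cells, {n * CELL_MASS_MG:.0f} mg of cells")
```

Under these assumptions, the cells needed at one sun would weigh 160 milligrams, well over half the 259-milligram vehicle, while at three suns the same demand is met with 60 milligrams of cells.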

“When you see engineering in movies, if something doesn’t work, people hack at it once or twice and suddenly it works. Real science isn’t like that,” said Helbling. “We hacked at this problem in every which way to finally achieve what we did. In the end, it’s pretty thrilling.” The researchers will continue to hack away, aiming to bring down the power and add on-board control to enable the Robobee to fly outside.

“Over the life of this project we have sequentially developed solutions to challenging problems, like how to build complex devices at millimeter scales, how to create high-performance millimeter-scale artificial muscles, bioinspired designs, and novel sensors, and flight control strategies,” said Wood. “Now that power solutions are emerging, the next step is onboard control. Beyond these robots, we are excited that these underlying technologies are finding applications in other areas such as minimally-invasive surgical devices, wearable sensors, assistive robots, and haptic communication devices – to name just a few.”

Harvard has developed a portfolio of intellectual property (IP) related to the fabrication process for millimeter-scale devices. This IP, as well as related technologies, can be applied to microrobotics, medical devices, consumer electronics and a wide range of complex electromechanical systems. Harvard’s Office of Technology Development is exploring opportunities for commercial impact in these fields.

This research was co-authored by Michael Karpelson, Ph.D., Staff Electrical Engineer on the Institute’s Advanced Technology Team. It was supported by the National Science Foundation and the Office of Naval Research.

Study: Social robots can benefit hospitalized children

Image: Courtesy of the Personal Robots Group, MIT Media Lab

A new study demonstrates, for the first time, that “social robots” used in support sessions held in pediatric units at hospitals can lead to more positive emotions in sick children.

Many hospitals host interventions in pediatric units, where child life specialists provide clinical interventions to hospitalized children for developmental and coping support. This involves play, preparation, education, and behavioral distraction, both for routine medical care and before, during, and after difficult procedures. Traditional interventions include therapeutic medical play and normalizing the environment through activities such as arts and crafts, games, and celebrations.

For the study, published today in the journal Pediatrics, researchers from the MIT Media Lab, Boston Children’s Hospital, and Northeastern University deployed a robotic teddy bear, “Huggable,” across several pediatric units at Boston Children’s Hospital. More than 50 hospitalized children were randomly split into three groups of interventions that involved Huggable, a tablet-based virtual Huggable, or a traditional plush teddy bear. In general, Huggable improved various patient outcomes over those other two options.

The study primarily demonstrated the feasibility of integrating Huggable into the interventions. But results also indicated that children playing with Huggable experienced more positive emotions overall. They also got out of bed and moved around more, and emotionally connected with the robot, asking it personal questions and inviting it to come back later to meet their families. “Such improved emotional, physical, and verbal outcomes are all positive factors that could contribute to better and faster recovery in hospitalized children,” the researchers write in their study.

Although it is a small study, it is the first to explore social robotics in a real-world inpatient pediatric setting with ill children, the researchers say. Other studies have been conducted in labs, have studied very few children, or were conducted in public settings without any patient identification.

But Huggable is designed only to assist health care specialists — not replace them, the researchers stress. “It’s a companion,” says co-author Cynthia Breazeal, an associate professor of media arts and sciences and founding director of the Personal Robots group. “Our group designs technologies with the mindset that they’re teammates. We don’t just look at the child-robot interaction. It’s about [helping] specialists and parents, because we want technology to support everyone who’s invested in the quality care of a child.”

“Child life staff provide a lot of human interaction to help normalize the hospital experience, but they can’t be with every kid, all the time. Social robots create a more consistent presence throughout the day,” adds first author Deirdre Logan, a pediatric psychologist at Boston Children’s Hospital. “There may also be kids who don’t always want to talk to people, and respond better to having a robotic stuffed animal with them. It’s exciting knowing what types of support we can provide kids who may feel isolated or scared about what they’re going through.”

Joining Breazeal and Logan on the paper are: Sooyeon Jeong, a PhD student in the Personal Robots group; Brianna O’Connell, Duncan Smith-Freedman, and Peter Weinstock, all of Boston Children’s Hospital; and Matthew Goodwin and James Heathers, both of Northeastern University.

Boosting mood

First prototyped in 2006, Huggable is a plush teddy bear with a screen depicting animated eyes. While the eventual goal is to make the robot fully autonomous, it is currently operated remotely by a specialist in the hall outside a child’s room. Through custom software, a specialist can control the robot’s facial expressions and body actions, and direct its gaze. The specialists could also talk through a speaker — with their voice automatically shifted to a higher pitch to sound more childlike — and monitor the participants via camera feed. The tablet-based avatar of the bear had identical gestures and was also remotely operated.

During the interventions involving Huggable, with kids ages 3 to 10, a specialist would sing nursery rhymes to younger children through the robot and move its arms during the song. Older kids would play the I Spy game, where they have to guess an object in the room described by the specialist through Huggable.

Through self-reports and questionnaires, the researchers recorded how much the patients and families liked interacting with Huggable. Additional questionnaires assessed patients’ positive moods, as well as anxiety and perceived pain levels. The researchers also used cameras mounted in the child’s room to capture speech patterns, and used software to analyze them and characterize them as joyful or sad.

A greater percentage of children and their parents reported that the children enjoyed playing with Huggable more than with the avatar or traditional teddy bear. Speech analysis backed up that result, detecting significantly more joyful expressions among the children during robotic interventions. Additionally, parents noted lower levels of perceived pain among their children.

The researchers noted that 93 percent of patients completed the Huggable-based interventions, and found few barriers to practical implementation, as determined by comments from the specialists.

A previous paper based on the same study found that the robot also seemed to facilitate greater family involvement in the interventions, compared to the other two methods, which improved the intervention overall. “Those are findings we didn’t necessarily expect in the beginning,” says Jeong, also a co-author on the previous paper. “We didn’t tell family to join any of the play sessions — it just happened naturally. When the robot came in, the child and robot and parents all interacted more, playing games or in introducing the robot.”

An automated, take-home bot

The study also generated valuable insights for developing a fully autonomous Huggable robot, which is the researchers’ ultimate goal. They were able to determine which physical gestures are used most and least often, and which features specialists may want for future iterations. Huggable, for instance, could introduce doctors before they enter a child’s room or learn a child’s interests and share that information with specialists. The researchers may also equip the robot with computer vision, so it can detect certain objects in a room to talk about those with children.

“In these early studies, we capture data … to wrap our heads around an authentic use-case scenario where, if the bear was automated, what does it need to do to provide high-quality standard of care,” Breazeal says.

In the future, that automated robot could be used to improve continuity of care. A child would take home a robot after a hospital visit to further support engagement, adherence to care regimens, and monitoring well-being.

“We want to continue thinking about how robots can become part of the whole clinical team and help everyone,” Jeong says. “When the robot goes home, we want to see the robot monitor a child’s progress. … If there’s something clinicians need to know earlier, the robot can let the clinicians know, so [they’re not] surprised at the next appointment that the child hasn’t been doing well.”

Next, the researchers are hoping to zero in on which specific patient populations may benefit the most from the Huggable interventions. “We want to find the sweet spot for the children who need this type of extra support,” Logan says.