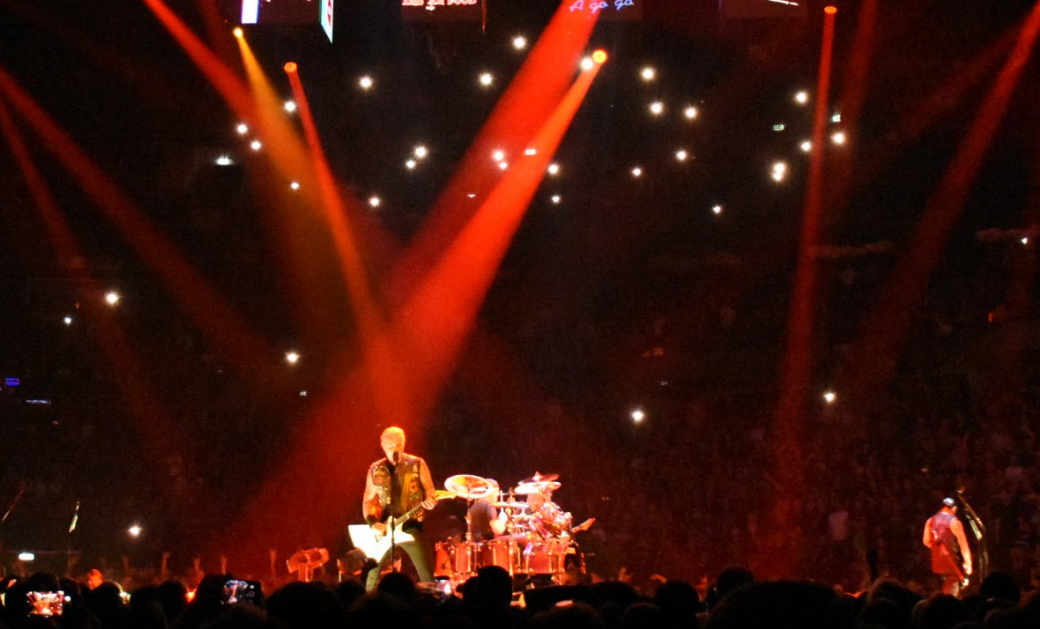

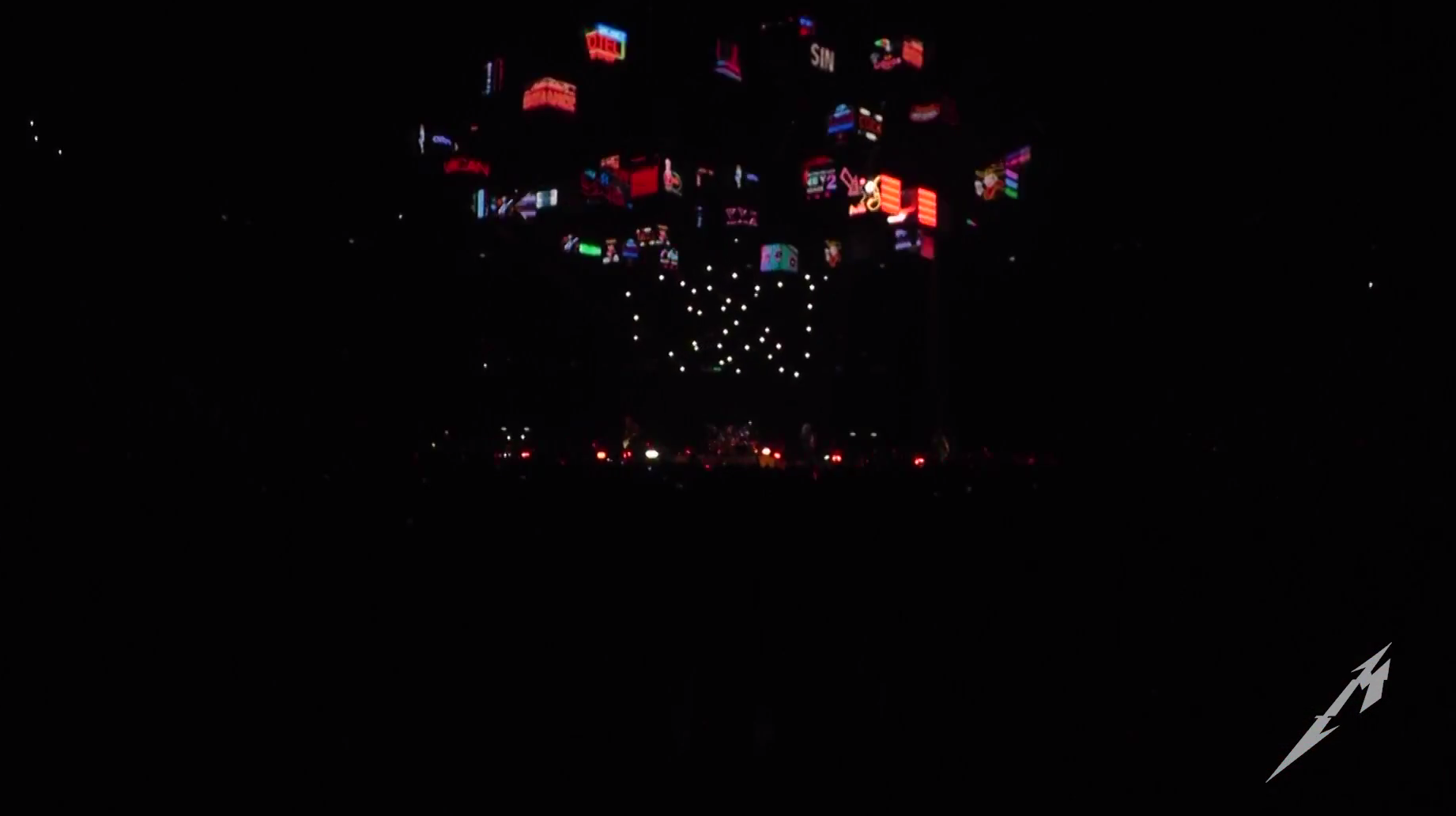

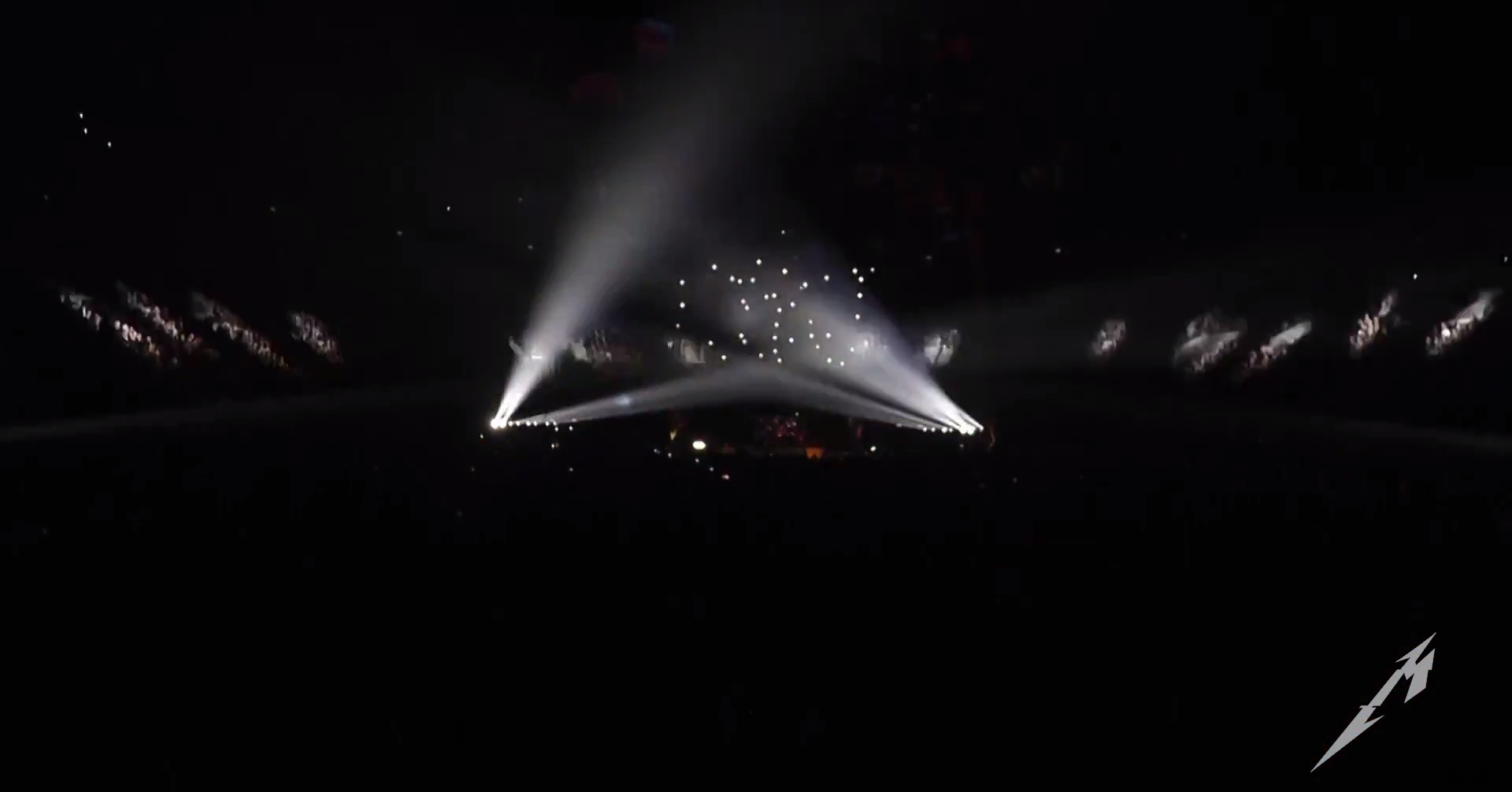

Metallica’s European WorldWired tour, which opened to an ecstatic crowd of 15,000 in Copenhagen’s sold-out Royal Arena this Saturday, features a swarm of micro drones flying above the band. Shortly after the band breaks into their hit single “Moth Into Flame”, dozens of micro drones start emerging from the stage, forming a large rotating circle above the stage. As the music builds, more and more drones emerge and join the formation, creating increasingly complex patterns, culminating in a choreography of three interlocking rings that rotate in position.

This show’s debut marks the world’s first autonomous drone swarm performance in a major touring act. Unlike previous drone shows, this performance features indoor drones, flying above performers and right next to throngs of concert viewers in a live event setting. Flying immediately next to audiences creates a more intimate effect than outdoor drone shows. The same closeness also allows the creation of moving, three-dimensional sculptures like the ones seen in the video — an effect further enhanced by Metallica’s 360-degree stage setup, with concert viewers on all sides.

Flying drones close to and around people in such a setting is challenging. Unlike outdoors, indoor drones cannot rely on GPS signals, which are severely degraded in indoor settings and do not offer the required accuracy for autonomous drone navigation on stage. The safety aspects of flying dozens of drones close to crowds in the high-pressure, live-event environment impose further challenges. The drone show system must also be robust to the uncertainties of a touring setting, where show conditions change, and to variation in the drone systems’ components and sensors, including the hundreds of motors powering the drones.

“It’s all about safety and reliability first”, says Raffaello D’Andrea, founder of the company behind the drones used in the Metallica show, Verity Studios (full disclosure: I’m a co-founder). D’Andrea knows what he is talking about: In work with his previous company, which was snatched up by e-commerce giant Amazon for an eye-watering 775M USD in 2012, D’Andrea and his team created fleets of autonomous warehousing robots, moving inventory through the warehouse around the clock. That company, which has since been renamed Amazon Robotics, now operates up to 10,000 robots — in a single warehouse.

How was this achieved?

In a nutshell: Verity Studios’ drone show system is an advanced show automation system that uses distributed AI, robotics, and sophisticated algorithms to achieve the level of robust performance and safety required by the live entertainment industry. With a track record of >7,000 autonomous flights on Broadway, achieved with its larger Stage Flyer drones during 398 live shows, Verity Studios is no newcomer to this industry.

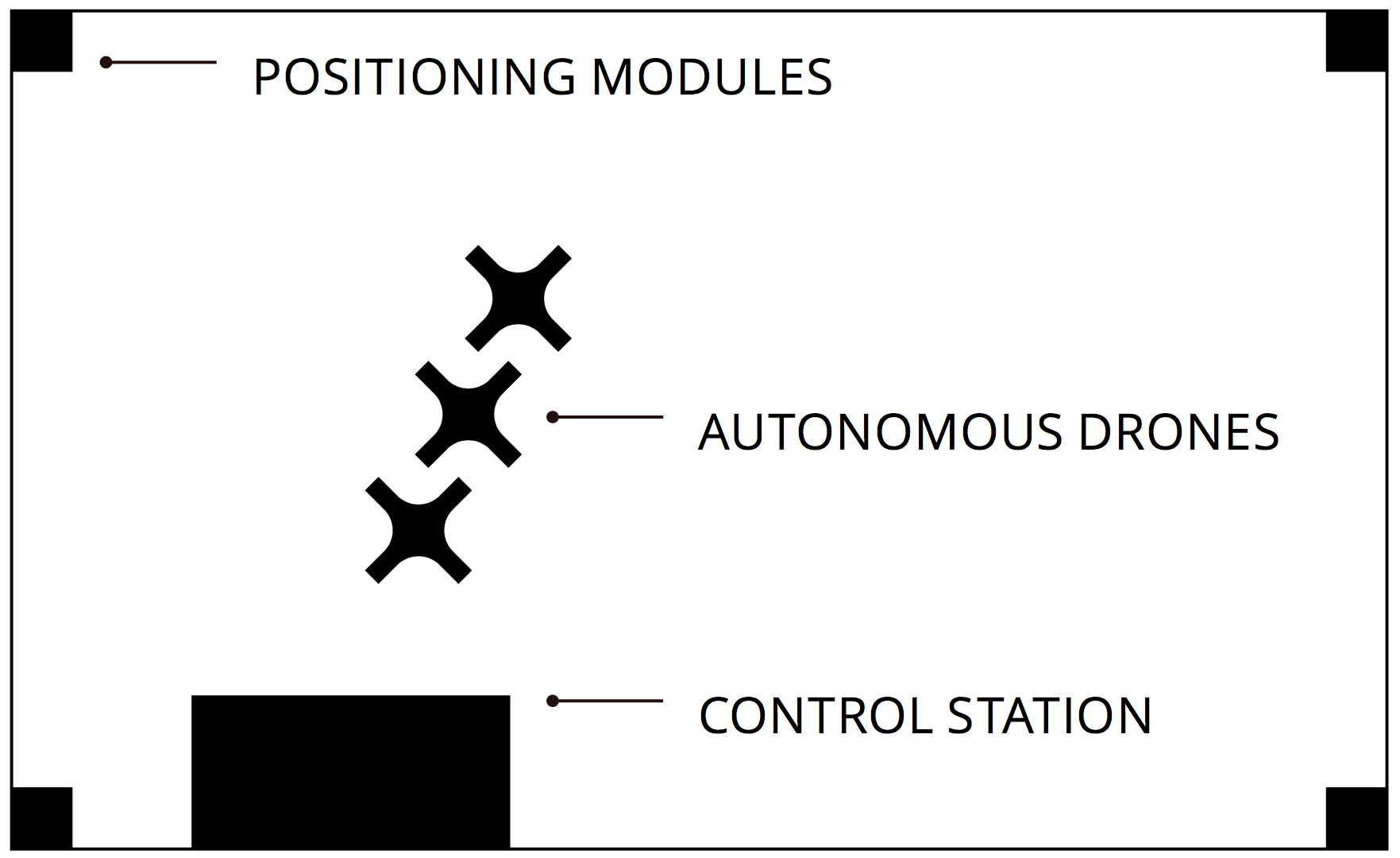

Many elements are needed to create a touring drone show; the drones themselves are just one aspect. Verity’s drones are autonomous, supervised by a human operator, who does not control drone motions individually. Instead, the operator only issues high-level commands such as “takeoff” or “land”, monitors the motions of multiple drones at a time, and reacts to anomalies. In other words, Verity’s advanced automation system takes over the role of multiple human pilots that would be required with standard, remote-controlled drones. The drones are flying mobile robots that navigate autonomously, piloting themselves, under human supervision. The autonomous drones’ motions and their lighting design are choreographed by Verity’s creative staff.

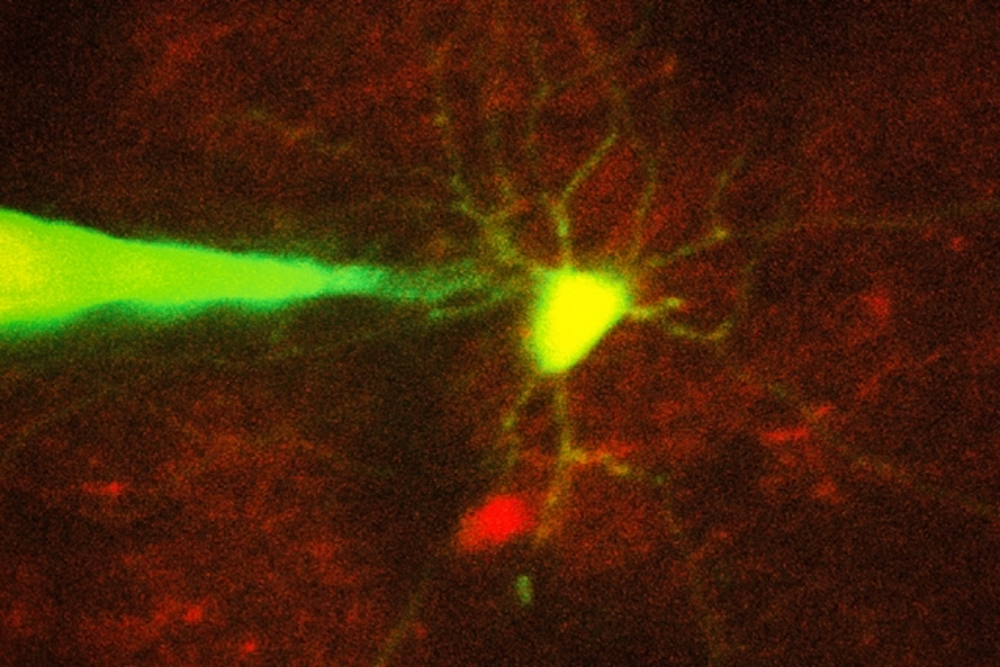

To navigate autonomously, drones require a reliable method for determining their position in space. As mentioned above, while drones can use GPS for their autonomous navigation in an outdoor setting, GPS is not a viable option indoors: GPS signals degrade close to large structures (e.g., tall buildings) and are usually not available, or severely degraded, in indoor environments. Since degraded GPS may result in unreliable or unsafe conditions for autonomous flight, the Verity drones use proprietary indoor localization technology.

It is the combination of a reliable indoor positioning system with intelligent autonomous drones and a suitable operator interface that allows the single operator of the Metallica show to simultaneously control the coordinated movement of many drones. This pilot-less approach is not merely a matter of increasing efficiency and effectiveness (who wants to have dozens of pilots on staff?), but also a key safety requirement: pilot errors have been an important contributing factor in dozens of documented drone accidents at live events. Safety risks increase rapidly with the number of drones, since more drones mean more complex flight plans and a higher risk of mid-air collisions. Autonomous control allows safer operation of multiple drones than remote control by human pilots, especially when operating in a reduced airspace envelope.

Verity’s system also had to be engineered for safety in spite of other potential failures, including wireless interference, hardware or software component failures, power outages, or malicious disruption/hacking attacks. In its 398-show run on Broadway, the biggest challenge to safety turned out to be another factor: human error. While operated by theater staff on Broadway, Verity’s system correctly identified human errors on five occasions and prevented the concerned drones from taking flight (on these occasions, the show continued with six or seven instead of the show’s planned eight drones; only one show proceeded without any drones as a safety precaution, i.e., the drone show’s “uptime” was 99.7%). As my colleagues and I have outlined in a recently published overview document on best practices for drone shows, when using drones at live events, safety is a hard requirement.
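
As a quick check of that uptime figure, assuming it counts a show as “up” whenever at least one drone flew, so that only the single drone-free show out of 398 counts against it:

$$
\text{uptime} = \frac{398 - 1}{398} = \frac{397}{398} \approx 0.9975 \approx 99.7\%
$$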

Drone authoring tools are another key element of Verity’s show creation process. Planning shows like the Metallica performance requires tools for the efficient creation of trajectories for large numbers of drones. The trajectories must account for the drones’ actual flight dynamics, considering actuator limitations, as well as for aerodynamic effects, such as air turbulence or lift. Drone motions generated by these tools need to be collision-free and allow for emergency maneuvers. To create compelling effects, drone authoring tools also need to make use of all the dynamic performance the drones are capable of. This is another area in which D’Andrea’s team gained considerable experience before founding Verity Studios, in this case as part of research at the Swiss Federal Institute of Technology’s Flying Machine Arena.
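
To give a feel for what “collision-free” means for a formation like a rotating ring, here is a minimal sketch. It is not Verity’s tooling: the function names (`ring_position`, `min_separation`, `is_collision_free`), the ring geometry, and the safety distances are all illustrative assumptions. It only samples positions at discrete times, and it ignores flight dynamics, actuator limits, and aerodynamic effects, all of which a real authoring tool would have to handle.

```python
import math
from functools import partial
from itertools import combinations


def ring_position(index, count, radius, height, angular_rate, t):
    """Position (x, y, z) in metres of one drone on a horizontal rotating ring.

    Hypothetical formation: `count` drones evenly spaced on a circle of the
    given radius, all rotating at `angular_rate` radians per second.
    """
    angle = 2 * math.pi * index / count + angular_rate * t
    return (radius * math.cos(angle), radius * math.sin(angle), height)


def min_separation(trajectories, times):
    """Smallest pairwise distance between any two drones over the sampled times."""
    smallest = math.inf
    for t in times:
        positions = [trajectory(t) for trajectory in trajectories]
        for a, b in combinations(positions, 2):
            smallest = min(smallest, math.dist(a, b))
    return smallest


def is_collision_free(trajectories, times, safety_distance):
    """True if no two drones come closer than `safety_distance` at any sample."""
    return min_separation(trajectories, times) >= safety_distance


# Assumed example: 12 drones on a 3 m ring, 5 m up, rotating at 0.5 rad/s.
ring = [partial(ring_position, i, 12, 3.0, 5.0, 0.5) for i in range(12)]
times = [k * 0.1 for k in range(100)]  # 10 seconds sampled every 0.1 s

print(f"minimum separation: {min_separation(ring, times):.2f} m")
print("safe at 1.0 m:", is_collision_free(ring, times, 1.0))
```

In this toy case the drones rotate together, so the closest pair is always two neighbours on the ring, and the minimum separation is just the chord between them. The check becomes more interesting with interlocking rings or drones joining a formation mid-flight, where sampling densely enough in time matters.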

Creating a compelling drone show requires more than the drone show system itself. For this tour, Verity Studios partnered with the world’s leading stage automation company, TAIT Towers, to integrate the drones into the stage floor and to tackle a series of other technical challenges related to this touring show.

While technology is the key enabler, the starting point and the key driver of Verity’s shows are non-technological. Instead, the show is driven by the show designers’ creative intent. This comprises defining the role of show drones for the performance at hand as well as determining their integration into the visual and musical motifs of the show’s creative concept. For Metallica, the drones’ flight trajectories and lighting were created by Verity’s choreography team, incorporating feedback from Metallica’s production team and the band.

Metallica’s WorldWired tour

Metallica’s WorldWired Tour is their first worldwide tour after the World Magnetic Tour six years ago. The tour’s currently published European leg runs until 11 May 2018, with all general tickets sold out.

Further Robohub reading

- Drone shows: Creative potential and best practices

- Ben Einstein’s great tear-down of D’Andrea’s Amazon robots (although I disagree with Ben’s conclusion that the bots are “cooler than a flying drone”; maybe Ben hasn’t seen Verity’s Lucie drones yet? You be the judge.)

- Verity’s 7,000 autonomous drone flights on Broadway

Some images for your viewing pleasure

Leon Kuperman is the CTO of CUJO IoT Security. He co-founded ZENEDGE, an enterprise web application security platform, and Truition Inc. He is also the CTO of BIDZ.com.
