Personalizing wearable devices

When it comes to soft, assistive devices, like the exosuit being designed by the Harvard Biodesign Lab, the wearer and the robot need to be in sync. But every human moves a bit differently, and tailoring the robot’s parameters for an individual user is a time-consuming and inefficient process.

Now, researchers from the Wyss Institute for Biologically Inspired Engineering and the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) have developed an efficient machine learning algorithm that can quickly tailor personalized control strategies for soft, wearable exosuits.

The research is described in Science Robotics.

“This new method is an effective and fast way to optimize control parameter settings for assistive wearable devices,” said Ye Ding, a Postdoctoral Fellow at SEAS and co-first author of the research. “Using this method, we achieved a huge improvement in metabolic performance for the wearers of a hip extension assistive device.”

When humans walk, we constantly tweak how we move to save energy, that is, to lower our metabolic cost.

“Before, if you had three different users walking with assistive devices, you would need three different assistance strategies,” said Myunghee Kim, Ph.D., postdoctoral research fellow at SEAS and co-first author of the paper. “Finding the right control parameters for each wearer used to be a difficult, step-by-step process because not only do all humans walk a little differently but the experiments required to manually tune parameters are complicated and time consuming.”

The researchers, led by Conor Walsh, Ph.D., Core Faculty member at the Wyss Institute and the John L. Loeb Associate Professor of Engineering and Applied Sciences, and Scott Kuindersma, Ph.D., Assistant Professor of Engineering and Computer Science at SEAS, developed an algorithm that can cut through that variability and rapidly identify the control parameters that work best for minimizing the energy used for walking.

The researchers used so-called human-in-the-loop optimization, which uses real-time measurements of human physiological signals, such as breathing rate, to adjust the control parameters of the device. As the algorithm homed in on the best parameters, it directed the exosuit when and where to deliver its assistive force to improve hip extension. The Bayesian optimization approach used by the team was first reported in a paper last year in PLOS ONE.
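To make the idea of Bayesian optimization concrete, here is a minimal sketch in Python. It is not the team's implementation: it assumes a single normalized control parameter (for example, the timing of peak assistance within the gait cycle), a Gaussian-process surrogate with a squared-exponential kernel, and expected improvement as the rule for choosing the next setting to try. `simulated_cost` is a hypothetical, noise-free stand-in for a real metabolic measurement.

```python
import math

import numpy as np


def rbf_kernel(a, b, length_scale=0.15):
    """Squared-exponential covariance between two 1-D arrays of parameter values."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)


def gp_posterior(x_obs, y_obs, x_query, noise=1e-4):
    """Posterior mean and std of a zero-mean GP fitted to standardized costs."""
    y_mean, y_std = y_obs.mean(), y_obs.std() or 1.0
    y = (y_obs - y_mean) / y_std
    K = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))  # jitter keeps K invertible
    K_s = rbf_kernel(x_obs, x_query)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    v = np.linalg.solve(L, K_s)
    mean = K_s.T @ alpha
    var = np.clip(1.0 - np.sum(v**2, axis=0), 1e-12, None)
    return mean * y_std + y_mean, np.sqrt(var) * y_std


def expected_improvement(mean, std, best):
    """Expected improvement for minimization: how much each candidate may beat `best`."""
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + np.array([math.erf(v / math.sqrt(2.0)) for v in z]))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    return (best - mean) * cdf + std * pdf


def bayes_opt(cost_fn, n_init=3, n_iter=10, seed=0):
    """Alternate between fitting the surrogate and measuring the most promising setting."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, 201)        # candidate normalized parameter values
    x_obs = rng.uniform(0.0, 1.0, n_init)    # a few initial settings to seed the model
    y_obs = np.array([cost_fn(x) for x in x_obs])
    for _ in range(n_iter):
        mean, std = gp_posterior(x_obs, y_obs, grid)
        ei = expected_improvement(mean, std, y_obs.min())
        x_next = grid[np.argmax(ei)]         # the setting to try on the wearer next
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, cost_fn(x_next))
    i = int(np.argmin(y_obs))
    return x_obs[i], y_obs[i]


def simulated_cost(x):
    """Hypothetical stand-in for a measured metabolic cost; lowest at x = 0.62."""
    return 1.0 + 4.0 * (x - 0.62) ** 2


x_best, cost_best = bayes_opt(simulated_cost)
print(f"best setting {x_best:.3f}, cost {cost_best:.3f}")
```

The appeal of this design for human experiments is that each evaluation is expensive (minutes of walking per setting), so the surrogate model decides which few settings are worth measuring rather than sweeping the whole range.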

The combination of the algorithm and suit reduced metabolic cost by 17.4 percent compared to walking without the device. This was a more than 60 percent improvement compared to the team’s previous work.
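The two percentages measure different things, which a short worked equation makes explicit. Here $C$ is metabolic cost and $r$ the percent reduction. The second line assumes the "60 percent improvement" is a relative gain in the reduction itself; the article does not state the earlier value $r_{\text{prev}}$, so the bound only follows under that reading.

```latex
\begin{aligned}
r &= \frac{C_{\text{without}} - C_{\text{with}}}{C_{\text{without}}} \times 100\%,
  \qquad r = 17.4\% \;\Rightarrow\; C_{\text{with}} = 0.826\, C_{\text{without}} \\
\frac{r - r_{\text{prev}}}{r_{\text{prev}}} &> 0.6
  \;\Rightarrow\; r_{\text{prev}} < \frac{17.4\%}{1.6} \approx 10.9\%
\end{aligned}
```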

“Optimization and learning algorithms will have a big impact on future wearable robotic devices designed to assist a range of behaviors,” said Kuindersma. “These results show that optimizing even very simple controllers can provide a significant, individualized benefit to users while walking. Extending these ideas to consider more expressive control strategies and people with diverse needs and abilities will be an exciting next step.”

“With wearable robots like soft exosuits, it is critical that the right assistance is delivered at the right time so that they can work synergistically with the wearer,” said Walsh. “With these online optimization algorithms, systems can learn how to achieve this automatically in about twenty minutes, thus maximizing benefit to the wearer.”

Next, the team aims to apply the optimization to a more complex device that assists multiple joints, such as hip and ankle, at the same time.

“In this paper, we demonstrated a high reduction in metabolic cost by just optimizing hip extension,” said Ding. “This goes to show what you can do with a great brain and great hardware.”

This research was supported by the Defense Advanced Research Projects Agency, Warrior Web Program, Harvard’s Wyss Institute for Biologically Inspired Engineering, and the Harvard John A. Paulson School of Engineering and Applied Sciences.

Self-driving cars have power consumption problems

I recently chaired a UJA Tech Talk on “The Future Of Autonomous Cars” with former General Motors Vice-Chairman Steve Girsky. The auto executive enthusiastically shared his vision for the next 15-25 years of driving – a congestion-free world of automated wheeled capsules zipping commuters to and from work.

Girsky stated that connected cars with safety assist (autonomy-lite) features are moving much faster toward mass adoption than fully autonomous vehicles (sans steering wheels and pedals). In his opinion, the largest roadblocks toward a consumer-ready robocar are the current technical inefficiencies of prototypes on the road today, which burn huge amounts of energy supporting enhanced computing and arrays of sensors. This puts their sticker price closer to that of a 1972 Ferrari than a 2018 Prius.

As Main Street adoption relies heavily on converting combustion engines to electric at accessible prices, Girsky’s sentiment was shared by many CES 2018 participants. NVIDIA, the leading chip manufacturer for autonomous vehicles, unveiled its latest technology, Xavier, with auto industry partner Volkswagen in Las Vegas. Xavier promises to be 15 times more energy-efficient than previous chip generations, delivering 30 trillion operations per second while drawing only 30 watts of power.

After the Xavier CES demonstration, Volkswagen CEO Herbert Diess exclaimed, “Autonomous driving, zero-emission mobility, and digital networking are virtually impossible without advances in AI and deep learning. Working with NVIDIA, the leader in AI technology, enables us to take a big step into the future.”

NVIDIA is becoming the industry standard as Volkswagen joins more than 320 companies and organizations working with the chip manufacturer on autonomous vehicles. While NVIDIA is leading the pack, Intel and Qualcomm are not far behind with their low-power solutions. Electric vehicle powerhouse Tesla is developing its own internal chip for the next generation of Autopilot. While these new chips represent a positive evolution in processors, there is still much work to be done, as current self-driving prototypes draw close to 2,500 watts.
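Because a watt is already a rate (one joule per second), the energy cost of these figures comes from multiplying power by time. The sketch below uses the numbers quoted above; it assumes a constant power draw and a hypothetical one-hour drive, and it compares a single chip's quoted draw with the quoted prototype figure only to show scale.

```python
def tops_per_watt(tera_ops_per_second, watts):
    """Throughput delivered per watt of power drawn."""
    return tera_ops_per_second / watts


def energy_kwh(watts, hours):
    """Energy (kWh) used by a load drawing a constant `watts` for `hours`."""
    return watts * hours / 1000.0


XAVIER_TOPS = 30        # trillion operations per second, as quoted for Xavier
XAVIER_WATTS = 30       # power draw quoted for Xavier
PROTOTYPE_WATTS = 2500  # approximate draw quoted for current prototypes
DRIVE_HOURS = 1.0       # hypothetical one-hour drive

print(tops_per_watt(XAVIER_TOPS, XAVIER_WATTS))   # 1.0 (TOPS per watt)
print(energy_kwh(PROTOTYPE_WATTS, DRIVE_HOURS))   # 2.5 (kWh)
print(energy_kwh(XAVIER_WATTS, DRIVE_HOURS))      # 0.03 (kWh)
```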

Power Consumption a Tradeoff for Self-Driving Cars

The power-consumption problem was highlighted recently in a report published by the University of Michigan Center for Sustainable Systems. Its lead author, Greg Keoleian, questions whether the current autonomous car models will slow the overall adoption of electric vehicles. Keoleian’s team simulated a number of self-driving Ford Fusion models with different-sized computer configurations and engine designs. In sharing his findings, Keoleian said, “We knew there was going to be a tradeoff in terms of the energy and greenhouse gas emissions associated with the equipment and the benefits gained from operational efficiency. I was surprised that it was so significant.”

Keoleian’s conclusions challenged the premise of self-driving cars accelerating the adoption of renewable energy. For years, advocates of autonomous vehicles have claimed that smart driving will reduce greenhouse gas emissions through the platooning of vehicles on highways and at intersections, the decrease of aerodynamic drag on freeways, and the overall reduction in urban congestion.

However, the University of Michigan tests only showed a “six to nine percent net energy reduction” over the vehicle’s lifecycle when running in autonomy mode. This went down by five percent when using a large Waymo rooftop sensor package, as it increased the aerodynamic drag. The report also stated that the greatest net efficiencies were in cars with gas drivetrains, which benefit the most from smart driving. Waymo currently uses a hybrid Chrysler Pacifica to run its complex fusion of sensors and processing units.

Keoleian told IEEE Spectrum that his modeling actually “overstates real impacts from future autonomous vehicles.” While he anticipates reductions in computing load and sensor drag, he is concerned that the impact of 5G communications has not been fully explored. The increased bandwidth will lead to greater data streams and boost power consumption for onboard systems and processors. In addition, he thinks that self-driving systems will lead to greater distances traveled as commuters move farther away from city centers with the advent of easier commutes. Keoleian explains, “There could be a rebound effect. They could induce travel, adding to congestion and fuel use.” Keoleian points to a confusing conclusion by the U.S. National Renewable Energy Laboratory that presents two possible outcomes of full autonomy:

- A sixty percent reduction in greenhouse gas emissions with greater ride-sharing options

- A two hundred percent increase with longer driving distances

According to Wilko Stark, Mercedes-Benz’s Vice President of Strategy, it only makes sense for autonomous vehicles to be electric as the increased power requirements will go to the computers instead of the motors. “To put such a system into a combustion-engined car doesn’t make any sense, because the fuel consumption will go up tremendously,” explains Stark.

Girsky shares Stark’s view, predicting that the first large-scale use cases for autonomy will be fleets of souped-up golf carts running low-speed, pre-planned shuttle routes. Also on view at CES were complimentary autonomous shared taxi rides around Las Vegas, courtesy of French startup Navya. Today, Navya boasts 60 operating shuttles in more than 10 cities, including around the University of Michigan.

Fully autonomous cars might not be far behind, as Waymo has seen a ninety percent drop in component costs by bringing its sensor development in-house. The autonomous powerhouse recently passed the four-million-mile mark on public roads and is planning to ditch its safety driver later this year in its Phoenix, Arizona, test program. According to Dmitri Dolgov, Waymo’s Vice President of Engineering, “Sensors on our new generation of vehicles can see farther, sharper, and more accurately than anything available on the market. Instead of taking components that might have been designed for another application, we engineered everything from the ground up, specifically for the task of Level 4 autonomy.”

With rising traffic fatalities and CO2 emissions, the world can’t wait much longer for affordable, energy-efficient autonomous transportation. Girsky and others remind us there is still a long road ahead, and industry experts estimate that the current gas-burning Waymo Chrysler Pacifica cruising around Arizona costs more than one hundred times the sticker price of the minivan. I guess until then there is always Citibike.

Power transmission line inspection robots

In 2010 I wrote that there were three sponsored research projects to solve the problem of safely inspecting and maintaining high voltage transmission lines using robotics. Existing 2010 methods ranged from humans crawling the lines, to helicopters flying close-by and scanning, to cars and jeeps with people and binoculars attempting to scan with the human eye. (2010 article)

In 2014 I described the progress since 2010, including the Japanese start-up HiBot and its inspection robot Expliner, which seemed promising. This project was derailed by the Fukushima disaster, which forced Tepco to redirect all its funding, attention, and resources to the disaster. HiBot later sold its IP to Hitachi High-Tech, which, thus far, hasn’t reported any progress or offered any products. (2014 article)

Also in 2014, Canada’s Hydro-Québec Research Institute was working on its transmission line robot, LineScout, and in America, the Electric Power Research Institute (EPRI) was researching robots and drones for line inspection.

Now, in 2018, Canada’s MIR Innovations (the product arm of Hydro-Québec) is promoting its new LineRanger inspection robot and its LineDrone flying corrosion sensor as finished products, while both Hitachi High-Tech and EPRI have so far been silent about their research progress.

The progress of these three electrical power research projects toward a very real need shows how deep the pockets must be to solve real problems with robotic solutions, and how long that research process often takes. This is not atypical. I observed the same kind of delays on two recent visits to robot startups where original concepts have morphed into totally different ones that now, after many development iterations, seem close to acceptably solving the original problems, yet with no scale-up production plans in sight, again after years of funding and research.

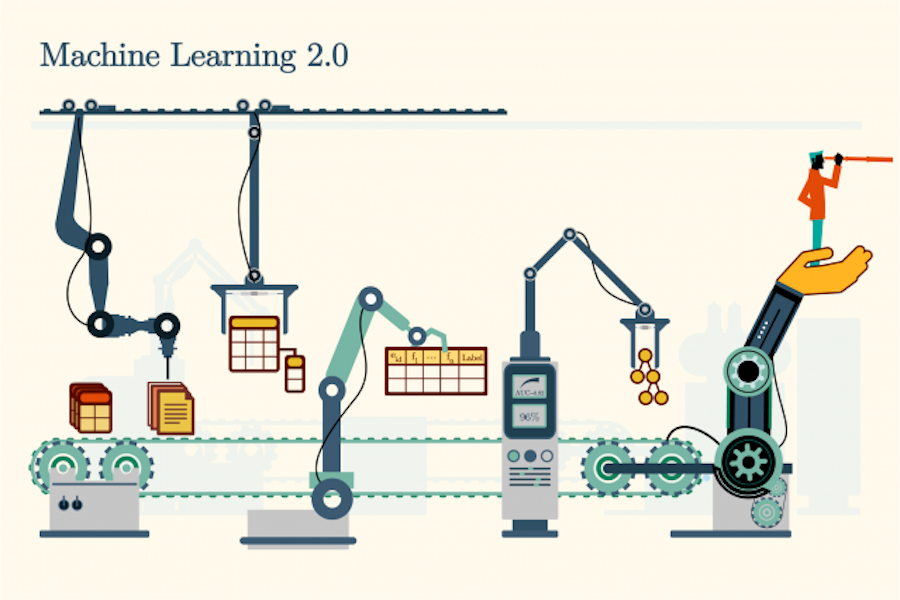

ML 2.0: Machine learning for many

Courtesy of the Laboratory for Information and Decision Systems

Today, when an enterprise wants to use machine learning to solve a problem, they have to call in the cavalry. Even a simple problem requires multiple data scientists, machine learning experts, and domain experts to come together to agree on priorities and exchange data and information.

This process is often inefficient, and it takes months to get results. It also only solves the immediate problem at hand. The next time something comes up, the enterprise has to do the same thing all over again.

One group of MIT researchers wondered, “What if we tried another strategy? What if we created automation tools that enable the subject matter experts to use ML, in order to solve these problems themselves?”

For the past five years, Kalyan Veeramachaneni, a principal research scientist at MIT’s Laboratory for Information and Decision Systems, along with Max Kanter and Ben Schreck who began working with Veeramachaneni as MIT students and later co-founded machine learning startup Feature Labs, has been designing a rigorous paradigm for applied machine learning.

The team first divided the process into a discrete set of steps. For instance, one step involves searching for buried patterns with predictive power, known as “feature engineering.” Another is called “model selection,” in which the best modeling technique is chosen from the many available options. They then automated these steps, releasing open-source tools to help domain experts efficiently complete them.
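Automated feature engineering of this kind often works by aggregating related records up to the entity being predicted. The sketch below is a toy illustration of that idea in plain Python, not the Featuretools API: the function `aggregate_features`, the project and task tables, and the `days_late` field are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


def aggregate_features(parent_ids, child_rows, key, numeric_fields, child_name="tasks"):
    """Build COUNT/MEAN/MAX features for each parent entity from its related child rows."""
    grouped = defaultdict(list)
    for row in child_rows:
        grouped[row[key]].append(row)      # group child rows by their parent id

    features = {}
    for pid in parent_ids:
        rows = grouped.get(pid, [])
        feats = {f"COUNT({child_name})": len(rows)}
        for field in numeric_fields:
            values = [r[field] for r in rows]
            # Parents with no children get None rather than a misleading zero.
            feats[f"MEAN({child_name}.{field})"] = mean(values) if values else None
            feats[f"MAX({child_name}.{field})"] = max(values) if values else None
        features[pid] = feats
    return features


# Hypothetical data: software projects (parents) and their tasks (children).
projects = ["p1", "p2"]
tasks = [
    {"project_id": "p1", "days_late": 2},
    {"project_id": "p1", "days_late": 6},
]
print(aggregate_features(projects, tasks, "project_id", ["days_late"]))
```

Stacking a few such aggregation primitives over several related tables is how a tool can propose thousands of candidate features from raw data, leaving domain experts to judge which ones matter.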

In their new paper, “Machine Learning 2.0: Engineering Data Driven AI Products,” the team brings together these automation tools, turning raw data into a trustworthy, deployable model over the course of seven steps. This chain of automation makes it possible for subject matter experts — even those without data science experience — to use machine learning to solve business problems.

“Through automation, ML 2.0 frees up subject matter experts to spend more time on the steps that truly require their domain expertise, like deciding which problems to solve in the first place and evaluating how predictions impact business outcomes,” says Schreck.

Last year, Accenture joined the MIT and Feature Labs team to undertake an ambitious project — build an AI project manager by developing and deploying a machine learning model that could predict critical problems ahead of time and augment seasoned human project managers in the software industry.

This was an opportunity to test ML 2.0’s automation tool, Featuretools, an open-source library funded by DARPA’s Data-Driven Discovery of Models (D3M) program, on a real-world problem.

Veeramachaneni and his colleagues closely collaborated with domain experts from Accenture along every step, from figuring out the best problem to solve, to running through a robust gauntlet of testing. The first model the team built was to predict the performance of software projects against a host of delivery metrics. When testing was completed, the model was found to correctly predict more than 80 percent of project performance outcomes.

Using Featuretools involved a series of human-machine interactions. In this case, Featuretools first recommended 40,000 features to the domain experts. Next, the humans used their expertise to narrow this list down to the 100 most promising features, which they then put to work training the machine-learning algorithm.

Next, the domain experts used the software to simulate using the model and to test how well it would work as new, real-time data came in. This method also extends the “train-test-validate” protocol typical of contemporary machine-learning research, making it more applicable to real-world use. The model was then deployed, making predictions for hundreds of projects on a weekly basis.
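One common way to simulate data arriving over time is a walk-forward evaluation: for each week, train only on earlier weeks, then score that week. The sketch below assumes a hypothetical weekly record schema (week, one risk score, outcome) and a toy threshold model; it illustrates the evaluation pattern, not the ML 2.0 tooling or the Accenture model.

```python
from typing import List, Tuple

# Hypothetical schema: (week number, one risk score, 1 if the project ran into trouble).
Record = Tuple[int, float, int]


def fit_threshold(train: List[Record]) -> float:
    """Toy model: a cutoff halfway between the average score of each outcome class."""
    pos = [x for _, x, y in train if y == 1]
    neg = [x for _, x, y in train if y == 0]
    if not pos:
        return float("inf")    # never seen a positive: always predict 0
    if not neg:
        return float("-inf")   # never seen a negative: always predict 1
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def walk_forward_accuracy(records: List[Record], first_test_week: int) -> float:
    """Refit on all earlier weeks, then score the next week, as if data arrived live."""
    test_weeks = sorted({w for w, _, _ in records if w >= first_test_week})
    correct = total = 0
    for week in test_weeks:
        train = [r for r in records if r[0] < week]   # past data only: no look-ahead
        test = [r for r in records if r[0] == week]
        cutoff = fit_threshold(train)
        for _, x, y in test:
            correct += int((x > cutoff) == (y == 1))
            total += 1
    return correct / total if total else float("nan")


records = [
    (1, 0.20, 0), (1, 0.90, 1),
    (2, 0.30, 0), (2, 0.80, 1),
    (3, 0.25, 0), (3, 0.70, 1),
    (4, 0.60, 0), (4, 0.95, 1),
]
print(walk_forward_accuracy(records, first_test_week=2))  # 0.8333333333333334
```

Unlike a random train/test split, this never lets the model see records from the week it is predicting, which is closer to how a deployed model meets new data.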

“We wanted to apply machine learning (ML) to critical problems that we face in the technology services business,” says Sanjeev Vohra, global technology officer, Accenture Technology. “More specifically, we wanted to see for ourselves if MIT’s ML 2.0 could help anticipate potential risks in software delivery. We are very happy with the outcomes, and will be sharing them broadly so others can also benefit.”

In a separate joint paper, “The AI Project Manager,” the teams walk through how they used the ML 2.0 paradigm to achieve fast and accurate predictions.

“For 20 years, the task of applying machine learning to problems has been approached as a research or feasibility project, or an opportunity to make a discovery,” says Veeramachaneni. “With these new automation tools it is now possible to create a machine learning model from raw data and put it to use within weeks.”

The team intends to keep honing ML 2.0 in order to make it relevant to as many industry problems as possible. “This is the true idea behind democratizing machine learning. We want to make ML useful to a broad swath of people,” he adds.

In the next five years, we are likely to see an increase in the adoption of ML 2.0. “As the momentum builds, developers will be able to set up an ML apparatus just as they set up a database,” says Max Kanter, CEO at Feature Labs. “It will be that simple.”

LineRanger robot: Innovative live-line inspection solution

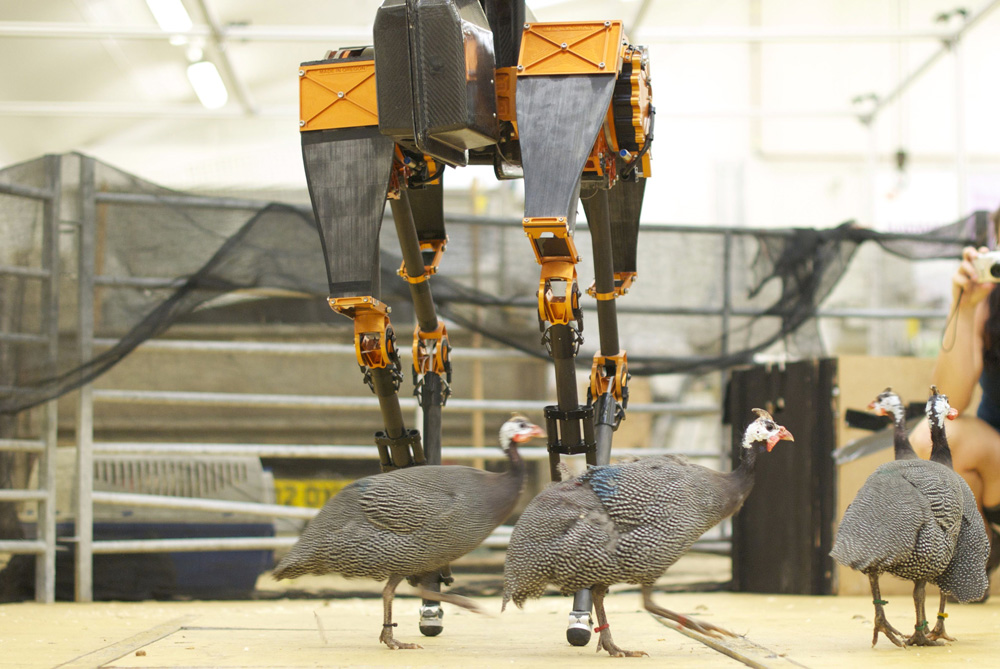

#255: Learning about Legged Locomotion from Birds, with Monica Daley

In this episode, Audrow Nash speaks with Monica Daley about learning from birds about legged locomotion. To do this, Daley analyzes the gaits of guineafowl in various experiments to understand the mechanical principles underlying gaits, such as energetic economy, mechanical limits, and how the birds avoid injury. She then tests her ideas about legged locomotion on legged robots with collaborators, including Jonathan Hurst from Oregon State University. Daley also speaks about her experience with interdisciplinary collaborations.

Monica Daley

Monica Daley earned an HBSc in Biology with a Chemistry minor at the University of Utah, where she was inspired to pursue an academic career through her research on human locomotor-ventilatory integration with Dennis Bramble and David Carrier. Daley then spent a year as a research technician at the University of Utah, investigating motor control of singing in zebra finches in the lab of Franz Goller. These experiences initiated a long-standing fascination with the interplay of mechanics and neural control.

Daley went on to Harvard University, where she earned her MA and PhD in Organismic and Evolutionary Biology. Her research on muscle-tendon dynamics and biomechanics of avian bipedal locomotion was supported by a prestigious Predoctoral Fellowship award from the Howard Hughes Medical Institute and supervised by Andrew Biewener at the Concord Field Station of Harvard University.

After completing her PhD, Daley was awarded a Research Fellowship by the U.S. National Science Foundation to develop models of the dynamics and control of bipedal locomotion, working with Dan Ferris in the Human Neuromechanics Lab at University of Michigan, in collaboration with Auke Ijspeert in the Biologically Inspired Robotics Group at the Swiss Federal Institute of Technology in Lausanne.

Daley is a faculty member of the Structure and Motion Lab, where she leads research in Comparative Neuromechanics, a field that seeks to understand the interplay of morphology, mechanics and sensorimotor control that influences how animals move through their environment.

Soft robots that can sense touch, pressure, movement and temperature

By Leah Burrows

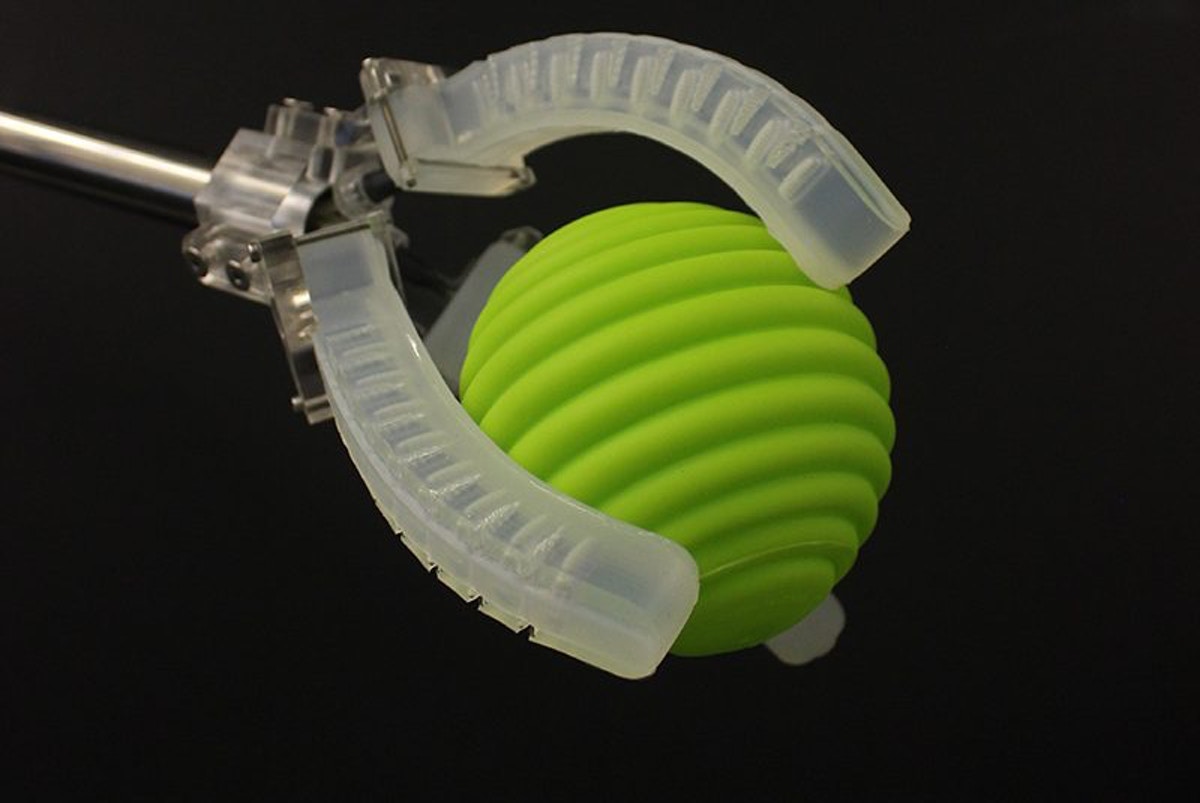

Researchers at Harvard University have built soft robots inspired by nature that can crawl, swim, grasp delicate objects and even assist a beating heart, but none of these devices has been able to sense and respond to the world around them.

That’s about to change.

Inspired by our bodies’ sensory capabilities, researchers at the Wyss Institute for Biologically Inspired Engineering and the Harvard John A. Paulson School of Engineering and Applied Sciences have developed a platform for creating soft robots with embedded sensors that can sense movement, pressure, touch, and even temperature.

The research is published in Advanced Materials.

“Our research represents a foundational advance in soft robotics,” said Ryan Truby, first author of the paper and recent Ph.D. graduate at SEAS. “Our manufacturing platform enables complex sensing motifs to be easily integrated into soft robotic systems.”

Integrating sensors within soft robots has been difficult in part because most sensors, such as those used in traditional electronics, are rigid. To address this challenge, the researchers developed an organic ionic liquid-based conductive ink that can be 3D printed within the soft elastomer matrices that comprise most soft robots.

“To date, most integrated sensor/actuator systems used in soft robotics have been quite rudimentary,” said Michael Wehner, former Postdoctoral Fellow at SEAS and co-author of the paper. “By directly printing ionic liquid sensors within these soft systems, we open new avenues to device design and fabrication that will ultimately allow true closed loop control of soft robots.”

Wehner is now an Assistant Professor at the University of California, Santa Cruz.

To fabricate the device, the researchers relied on an established 3D printing technique developed in the lab of Jennifer Lewis, Sc.D., Core Faculty Member of the Wyss Institute and the Hansjörg Wyss Professor of Biologically Inspired Engineering at SEAS. The technique — known as embedded 3D printing — seamlessly and quickly integrates multiple features and materials within a single soft body.

“This work represents the latest example of the enabling capabilities afforded by embedded 3D printing – a technique pioneered by our lab,” said Lewis.

“The function and design flexibility of this method is unparalleled,” said Truby. “This new ink combined with our embedded 3D printing process allows us to combine both soft sensing and actuation in one integrated soft robotic system.”

To test the sensors, the team printed a soft robotic gripper composed of three soft fingers, or actuators. The researchers tested the gripper’s ability to sense inflation pressure, curvature, contact, and temperature. They embedded multiple contact sensors, so the gripper could sense light and deep touches.
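One simple way multiple contact sensors could separate light from deep touches is by embedding them at different depths. The sketch below assumes each sensor is read out as an electrical resistance, that a light touch disturbs only a sensor near the surface while a firm press also reaches a deeper one, and a hypothetical 2 percent detection threshold; the paper's actual readout and calibration may differ.

```python
def relative_change(reading_ohms: float, baseline_ohms: float) -> float:
    """Fractional change of a sensor's resistance from its unloaded baseline."""
    return (reading_ohms - baseline_ohms) / baseline_ohms


def classify_touch(shallow_ohms, deep_ohms, shallow_baseline, deep_baseline, threshold=0.02):
    """Label a contact as 'none', 'light', or 'deep' from a shallow and a deeper sensor.

    Assumes a light touch only disturbs the sensor near the surface, while a
    firm press also reaches the deeper one. The 2% threshold is hypothetical.
    """
    shallow_hit = abs(relative_change(shallow_ohms, shallow_baseline)) >= threshold
    deep_hit = abs(relative_change(deep_ohms, deep_baseline)) >= threshold
    if deep_hit:
        return "deep"
    if shallow_hit:
        return "light"
    return "none"


print(classify_touch(1000, 2000, 1000, 2000))  # none
print(classify_touch(1050, 2000, 1000, 2000))  # light
print(classify_touch(1080, 2100, 1000, 2000))  # deep
```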

“Soft robotics are typically limited by conventional molding techniques that constrain geometry choices, or, in the case of commercial 3D printing, material selection that hampers design choices,” said Robert Wood, Ph.D., Core Faculty Member of the Wyss Institute and the Charles River Professor of Engineering and Applied Sciences at SEAS, and co-author of the paper. “The techniques developed in the Lewis Lab have the opportunity to revolutionize how robots are created — moving away from sequential processes and creating complex and monolithic robots with embedded sensors and actuators.”

Next, the researchers hope to harness the power of machine learning to train these devices to grasp objects of varying size, shape, surface texture, and temperature.

The research was coauthored by Abigail Grosskopf, Daniel Vogt and Sebastien Uzel. It was supported in part by Harvard MRSEC and Harvard’s Wyss Institute for Biologically Inspired Engineering.

Robotics fundings, acquisitions, IPOs, failures: February 2018

Nineteen different startups were funded in February, raising $377 million, a substantial two-month start for the new year. Five acquisitions were reported during the month, while the IPO front had no activity whatsoever.

CB Insights said that China dominated the funding of AI-related startups during 2017. So far in 2018, China’s share of funding appears to be below its 48% share in 2017.

Robotics Fundings

1. PROCEPT BioRobotics, a Redwood Shores, Calif.-based surgical robotics company, raised $118 million in financing. Viking Global Investors LP led the round, and was joined by investors including Perceptive Advisors and CPMG Inc.

2. Aurora Innovation, a Silicon Valley self-driving vehicle startup, raised $90 million in funding. Investors include Greylock Partners and Index Ventures.

3. Rani Therapeutics, a Silicon Valley medical device startup, raised $53 million, bringing its total funding to $142 million. The round included China’s GeneScience Pharmaceuticals, Shire, Bossa Ventures, Cathay Venture, GV, Novartis, AstraZeneca, Ping An Ventures, Virtus Ventures and others.

4. DecaWave, a Dublin, Ireland-based fabless semiconductor company specializing in precise location and connectivity applications, raised $30 million in funding. Atlantic Bridge Ventures led the round, and was joined by investors including Enterprise Ireland, China Ireland Growth Technology Fund, ACT Venture Capital and ZZ Ventures.

5. Magazino, a Munich startup providing mobile picking robots for small and medium-sized boxes, cases and pods, raised $24.8 million in a round led by the Körber Group with participation by Cellcom Resources, Zalando and Fiege Logistics.

6. CommonSense Robotics, an Israeli startup integrating robotics with online grocery fulfilment, raised $20 million in a Series A funding led by Playground Global, with participation from Aleph VC and Eric Schmidt’s Innovation Endeavors. CommonSense employs robots to power custom warehouses or micro-fulfilment centers that are small enough to be placed in urban areas. The robots are designed to store products and bring the right ones to humans who then pack a customer’s order. More robots are then used to get the packaged order out to dispatch.

7. Prophesee SA, a Paris-based creator of a bio-inspired vision system, raised $19 million in funding. Investors include 360 Capital Partners, Supernova Invest, iBionext, Intel Capital, Renault Group and Robert Bosch Venture Capital.

8. Citadel Defense Company, a San Diego-based drone system developer, raised $12 million in Series A funding. Investors include Lightspeed Venture Partners.

9. Drone Base, a Los Angeles startup that connects drone users with commercial tasks, raised $12 million in a Series B funding round co-led by Upfront Ventures and Union Square Ventures. DJI, Hearst Ventures and Pritzker Group also participated in the round.

10. May Mobility, an Ann Arbor-based startup developing self-driving shuttles for large campuses and central business districts, raised $11.5 million in seed funding from Toyota AI Ventures and BMW i Ventures.

11. Miso Robotics, a Pasadena, CA food handling robot startup, raised $10 million in a Series B round led by Acacia Research Corporation, with participation from Levy Restaurants, OpenTable CTO Joseph Essas, and Cali Group, owner of CaliBurger. Miso Robotics is one of a number of companies trying to use robots to replace or augment fast food workers.

12. inVia Robotics, a Westlake Village, Calif.-based provider of robotics warehouse automation solutions for e-commerce fulfillment centers, raised $9 million in funding. Upfront Ventures led the round and was joined by investors including Embark Ventures.

13. EdgyBees, a CA startup providing augmented reality for drones and other moving platforms, raised $5.5 million in a seed round led by OurCrowd with participation from 8VC, Verizon Ventures, Aspect Ventures, Motorola Solutions Ventures and NFX.

14. Fourier Intelligence, a Chinese startup providing exoskeletons for rehab and prosthetics, raised $4.7 million in a Series A round led by Prosperico Venture with participation from Volcanics Venture, IDG Capital Partners and Qianhai Fund of Funds.

15. Invert Robotics, a NZ RaaS (robotics as a service) startup providing inspection of industrial equipment across a range of applications, raised $4.6 million (NZ$6.4 million) from Powerhouse Ventures, NZ Venture Investment Fund and Allan Moss.

16. American Robotics, a Boston-based startup developing a drone system for farmers, has raised $2 million in a second seed round (they raised $1.1 million last year). Investors included BRC Innovation and Brain Robot Capital.

17. Dash Robotics, a Silicon Valley startup developing futuristic, app-controlled toy robots, raised $2.7 million in a Series A funding round led by Ironfire Ventures.

18. Planys Technologies, an Indian marine robotics startup, raised $1 million from Kris Gopalakrishnan along with Pratithi Investment Trust and MEMG (Manipal Education and Medical Group).

19. Solinftec, a Brazilian ag tech startup, raised an undisclosed amount in Series B funding from AgFunder and TPG ART.

Acquisitions

1. KBR, a large engineering and construction company formerly owned by Halliburton, acquired Stinger Ghaffarian Technologies, an integrator of space and defense systems for the government, for $355 million.

2. Fanuc acquired Japanese co-bot maker Life Robotics for an undisclosed amount.

3. Humatics, a Cambridge, MA startup developing a localization platform, has acquired 5D Robotics, a Carlsbad, CA provider of mobility products using radio waves for distancing, and 5D’s subsidiary, Time Domain, an Alabama provider of ultra-wide-band chips, for an undisclosed amount.

4. Johnson & Johnson Medical Devices acquired Orthotaxy, a French developer of software-enabled surgery technologies, including a differentiated robotic-assisted surgery solution for total and partial knee replacement. Financial terms of the transaction were not disclosed.

5. FarmShots, a North Carolina startup providing imagery and analytics for farmers, was acquired by Syngenta for an undisclosed amount. FarmShots collects data for 8 million acres.

IPOs

Nada. Zip. Zero!

Failures

1. New Matter, a Pasadena-based maker of 3D printers, is closing down after running through the $6.5 million it had raised. It will continue to maintain printers it has sold to consumers and schools through the summer of 2018.

2. RoboBusiness Europe, a trade conference and expo scheduled to be held in February by the same group that produces RoboBusiness in America, was cancelled due to a lack of funding.

3. Innorobo, a service robotics expo and conference scheduled to be held in June, has also been cancelled due to a lack of funding.