Using the fossilized skeleton and footprints of a 300-million-year-old animal, scientists from EPFL and Humboldt-Universität zu Berlin have identified the extinct animal’s most likely gaits and designed a robot that can recreate its walk. The study can help researchers better understand how vertebrate locomotion evolved over time.

How did vertebrates walk 300 million years ago? Could they already stand upright on their legs? Did they move in a balanced, energy-efficient way? Scientists at EPFL’s Biorobotics Laboratory – supported by the National Center of Competence in Research (NCCR) Robotics – and the Interdisciplinary Laboratory Image Knowledge Gestaltung at Humboldt-Universität zu Berlin set out to answer these questions. Using the fossilized skeleton and footprints of Orobates pabsti – a vertebrate that, on the evolutionary tree, comes between amphibians on one hand and reptiles and mammals on the other – the scientists created computer simulations and a robot. Drawing on experimental studies of four living amphibian and reptile species, they used these tools to gauge how plausible different ways of walking were for the fossilized animal.

“Orobates is an ideal candidate for understanding how land vertebrates evolved because it is in the lineage leading to modern amniotes. These animals formed in eggs laid on land and became largely independent of water,” says John Nyakatura, a professor at Humboldt-Universität. What’s more, Orobates is the oldest-known vertebrate for which scientists have been able to link a fossil with its fossilized footprints. “This combination is what enabled us to carry out our unique quantitative study, which paves the way to replicating the walk of other fossilized animals,” says NCCR Robotics Professor Auke Ijspeert. The researchers’ findings appear in Nature.

A motion-based model and then a robotic one

To better understand how Orobates walked and pinpoint just how advanced its locomotion was, the scientists at Humboldt-Universität developed a digital model of its skeleton based on the animal’s fossil and the biomechanics of modern animals with sprawling postures. They used this model to carry out the first kinematic computer simulation of Orobates’ gait as it walks on its digitalized footprints. This simulation focuses on movements (rather than forces) and identifies gaits where the animal’s bones do not collide or come out of their joints.

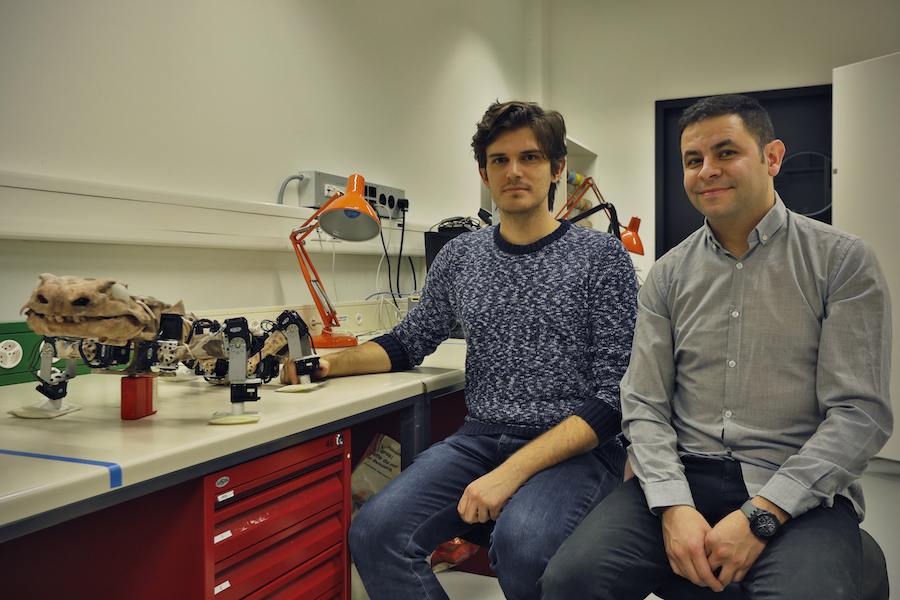

In parallel, two scientists at EPFL’s Biorobotics Laboratory – post-doctoral researcher Kamilo Melo and PhD student Tomislav Horvat, both members of NCCR Robotics at the time of the study – used the fossilized animal’s anatomy to build a robot called OroBOT. Designed and scaled to match the shape and movements of the extinct animal, OroBOT was used to calculate the physics of how Orobates walked. “We tested our hypotheses about the animal’s locomotion dynamics with our robotic model, which factors in the real-world physics of the animal’s gait,” says Melo.

Testing hundreds of different gaits, based on contemporary animals

The interdisciplinary team of scientists tested hundreds of different gaits with their robot in order to determine which ones Orobates could have used – and which ones it clearly did not. The gaits they tested were based on biomechanical principles extracted from similar modern-day animals such as caimans, salamanders, iguanas and skinks, which they analyzed through X-ray videos and force measurements. “We studied the biomechanics of their movements and determined which mechanical principles they all followed,” says Nyakatura. The research team looked at three features in particular: how erect the animal stood on its legs; how its backbone bent; and how much its elbow or shoulder joints bent as it walked. These three features determine what the researchers call the animal’s “sprawling gait space”. They created a powerful interactive website where fellow scientists – and the wider public – can explore the universe of movements that Orobates could have used.

With these results, they came up with the most likely ways that Orobates may have walked. They scored the gaits based on how much energy was required, how stable the movements were, how the leg forces compared with those of other sprawling animals, and how closely the movements aligned with the fossilized footprints. The gaits with good scores appear quite athletic and most closely resemble the movements of caimans. This suggests that Orobates probably already held itself a little upright on its legs – unlike salamanders and skinks. Its locomotion was thus more advanced – more upright, balanced and mechanically power-saving – than had been previously thought.
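For readers who want a feel for how scoring on several criteria can single out a best gait, here is a minimal Python sketch. It is not the researchers’ method: the gait names, the numbers, the min-max normalization and the equal weighting of the four criteria are all assumptions made purely for illustration, and none of the values come from the study.

```python
# Illustrative only: toy multi-criteria ranking of candidate gaits.
# All names and numbers below are hypothetical, not data from the study.

# The four criteria named in the article, each expressed here as a cost
# (lower is better). The key names are assumptions for this sketch.
METRICS = ("energy", "instability", "force_mismatch", "footprint_error")

# Hypothetical candidate gaits with made-up cost values.
TOY_CANDIDATES = {
    "low_sprawl": {"energy": 5.0, "instability": 0.2,
                   "force_mismatch": 0.6, "footprint_error": 0.5},
    "raised":     {"energy": 3.0, "instability": 0.3,
                   "force_mismatch": 0.2, "footprint_error": 0.1},
    "very_erect": {"energy": 3.5, "instability": 0.8,
                   "force_mismatch": 0.5, "footprint_error": 0.4},
}


def normalize(values):
    """Min-max scale a list of costs to the range 0..1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All candidates are equal on this metric, so it cannot separate them.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def rank_gaits(candidates):
    """Return (name, score) pairs sorted from best to worst.

    Each metric is normalized across candidates, turned into a benefit
    (1 - cost), and averaged with equal weights (an assumption).
    """
    names = list(candidates)
    scores = {name: 0.0 for name in names}
    for metric in METRICS:
        normed = normalize([candidates[n][metric] for n in names])
        for name, cost in zip(names, normed):
            scores[name] += (1.0 - cost) / len(METRICS)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


ranking = rank_gaits(TOY_CANDIDATES)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

In this toy setup the moderately raised gait comes out on top because it does well on all four criteria at once, which mirrors the idea of looking for gaits that are plausible on every count rather than optimal on just one.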

The study concludes that advanced locomotion, as in Orobates, may have evolved before the common ancestor of reptiles and mammals lived. The novel approach developed for this study can be applied by other scientists in their work, and it could be modified to study other evolutionary transitions, such as the origins of flight or galloping gaits in mammals. Last but not least, being able to select the most efficient gait for any given morphology is of fundamental importance for the walking robots that NCCR Robotics researchers are developing, in particular for search-and-rescue applications.


Literature

John A. Nyakatura, Kamilo Melo, Tomislav Horvat, Kostas Karakasiliotis, Vivian R. Allen, Amir Andikfar, Emanuel Andrada, Patrick Arnold, Jonas Lauströer, John R. Hutchinson, Martin S. Fischer and Auke J. Ijspeert. “Reverse-engineering the locomotion of a stem amniote”, Nature, 17 January 2019.

By Rob Matheson
