System detects errors when medication is self-administered

From swallowing pills to injecting insulin, patients frequently administer their own medication. But they don’t always get it right. Improper adherence to doctors’ orders is commonplace, accounting for thousands of deaths and billions of dollars in medical costs annually. MIT researchers have developed a system to reduce those numbers for some types of medications.

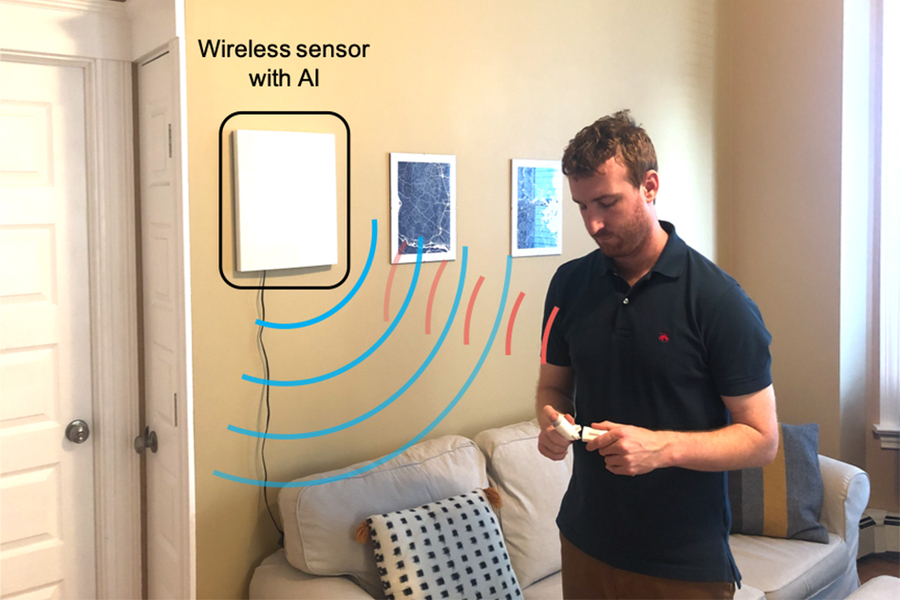

The new technology pairs wireless sensing with artificial intelligence to determine when a patient is using an insulin pen or inhaler, and flags potential errors in the patient’s administration method. “Some past work reports that up to 70% of patients do not take their insulin as prescribed, and many patients do not use inhalers properly,” says Dina Katabi, the Andrew and Erna Viteri Professor at MIT, whose research group has developed the new solution. The researchers say the system, which can be installed in a home, could alert patients and caregivers to medication errors and potentially reduce unnecessary hospital visits.

The research appears today in the journal Nature Medicine. The study’s lead authors are Mingmin Zhao, a PhD student in MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), and Kreshnik Hoti, a former visiting scientist at MIT and current faculty member at the University of Prishtina in Kosovo. Other co-authors include Hao Wang, a former CSAIL postdoc and current faculty member at Rutgers University, and Aniruddh Raghu, a CSAIL PhD student.

Some common drugs entail intricate delivery mechanisms. “For example, insulin pens require priming to make sure there are no air bubbles inside. And after injection, you have to hold for 10 seconds,” says Zhao. “All those little steps are necessary to properly deliver the drug to its active site.” Each step also presents an opportunity for error, especially when there’s no pharmacist present to offer corrective tips. Patients might not even realize when they make a mistake, so Zhao’s team designed an automated system that can catch it.

Their system can be broken down into three broad steps. First, a sensor tracks a patient’s movements within a 10-meter radius, using radio waves that reflect off their body. Next, artificial intelligence scours the reflected signals for signs of a patient self-administering an inhaler or insulin pen. Finally, the system alerts the patient or their health care provider when it detects an error in the patient’s self-administration.

The researchers adapted their sensing method from a wireless technology they’d previously used to monitor people’s sleeping positions. It starts with a wall-mounted device that emits very low-power radio waves. When someone moves, they modulate the signal and reflect it back to the device’s sensor. Each unique movement yields a corresponding pattern of modulated radio waves that the device can decode. “One nice thing about this system is that it doesn’t require the patient to wear any sensors,” says Zhao. “It can even work through occlusions, similar to how you can access your Wi-Fi when you’re in a different room from your router.”

The new sensor sits in the background at home, like a Wi-Fi router, and uses artificial intelligence to interpret the modulated radio waves. The team developed a neural network to key in on patterns indicating the use of an inhaler or insulin pen. They trained the network to learn those patterns by performing example movements, some relevant (e.g. using an inhaler) and some not (e.g. eating). Through repetition and reinforcement, the network successfully detected 96 percent of insulin pen administrations and 99 percent of inhaler uses.

Once it mastered the art of detection, the network also proved useful for correction. Every proper medicine administration follows a similar sequence — picking up the insulin pen, priming it, injecting, etc. So, the system can flag anomalies in any particular step. For example, the network can recognize if a patient holds down their insulin pen for five seconds instead of the prescribed 10 seconds. The system can then relay that information to the patient or directly to their doctor, so they can fix their technique.
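The step-by-step check described above can be illustrated with a minimal sketch. This is not the researchers' neural network: it assumes some upstream detector has already turned the radio signal into a list of named steps with durations, and it only compares that list against a protocol. The `Step` class, the step names, and the `flag_anomalies` function are hypothetical; the only value taken from the article is the 10-second hold.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One detected step of a self-administration (hypothetical format)."""
    name: str
    duration_s: float


# Hypothetical protocol: (step name, minimum duration in seconds).
# Only the 10-second hold comes from the article; the zeros are placeholders.
INSULIN_PEN_PROTOCOL = [
    ("pick_up", 0.0),
    ("prime", 0.0),
    ("inject", 0.0),
    ("hold", 10.0),
]


def flag_anomalies(observed, protocol=INSULIN_PEN_PROTOCOL):
    """Return a list of human-readable issues found in the observed steps.

    Flags steps that are missing or shorter than the protocol minimum.
    It does not check step order, which a fuller version would need to do.
    """
    issues = []
    observed_by_name = {step.name: step for step in observed}
    for name, min_duration in protocol:
        step = observed_by_name.get(name)
        if step is None:
            issues.append(f"missing step: {name}")
        elif step.duration_s < min_duration:
            issues.append(
                f"{name} too short: {step.duration_s:g}s < {min_duration:g}s"
            )
    return issues


if __name__ == "__main__":
    # A patient who holds the pen for five seconds instead of ten.
    session = [
        Step("pick_up", 1.0),
        Step("prime", 2.0),
        Step("inject", 1.5),
        Step("hold", 5.0),
    ]
    for issue in flag_anomalies(session):
        print(issue)
```

In this sketch the flagged issues are plain strings; a real deployment would presumably route them to the patient or clinician, as the article describes.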

“By breaking it down into these steps, we can not only see how frequently the patient is using their device, but also assess their administration technique to see how well they’re doing,” says Zhao.

The researchers say a key feature of their radio wave-based system is its noninvasiveness. “An alternative way to solve this problem is by installing cameras,” says Zhao. “But using a wireless signal is much less intrusive. It doesn’t show people’s appearance.”

He adds that their framework could be adapted to medications beyond inhalers and insulin pens — all it would take is retraining the neural network to recognize the appropriate sequence of movements. Zhao says that “with this type of sensing technology at home, we could detect issues early on, so the person can see a doctor before the problem is exacerbated.”

Chad Jenkins’ talk – That Ain’t Right: AI Mistakes and Black Lives (with video)

In this technical talk, Chad Jenkins from the University of Michigan posed the following question: “who will pay the cost for the likely mistakes and potential misuse of AI systems?” As he states, “we are increasingly seeing how AI is having a pervasive impact on our lives, both for good and for bad. So, how do we ensure equal opportunity in science and technology?”

Abstract

It would be great to talk about the many compelling ideas, innovations, and new questions emerging in robotics research. I am fascinated by the ongoing NeRF Explosion, prospects for declarative robot programming by demonstration, and potential for a reemergence of probabilistic generative inference. However, there is a larger issue facing our intellectual enterprise: who will pay the cost for the likely mistakes and potential misuse of AI systems? My nation is poised to invest billions of dollars to remain the leader in artificial intelligence as well as quantum computing. This investment is critically needed to reinvigorate the science that will shape our future. In order to get the most from this investment, we have to create an environment that will produce innovations that are not just technical advancements but will also benefit and uplift everybody in our society. We are increasingly seeing how AI is having a pervasive impact on our lives, both for good and for bad. So, how do we ensure equal opportunity in science and technology? It starts with how we invest in scientific research. Currently, when we make investments, we only think about technological advancement. Equal opportunity is a non-priority and, at best, a secondary consideration. The fix is simple really, and something we can do almost immediately: we must start enforcing existing civil rights statutes for how government funds are distributed in support of scientific advancement. This will mostly affect universities, as the springwell that generates the intellectual foundation and workforce for other organizations that are leading the way in artificial intelligence.

This talk will explore the causes of systemic inequality in AI, the impact of this inequity within the field of AI and across society today, and offer thoughts for the next wave of AI inference systems for robotics that could provide introspectability and accountability. Ideas explored build upon the BlackInComputing.org open letter and “Before we put $100 billion into AI…” opinion. Equal opportunity for anyone requires equal opportunity for everyone.

Biography

Odest Chadwicke Jenkins, Ph.D., is a Professor of Computer Science and Engineering and Associate Director of the Robotics Institute at the University of Michigan. Prof. Jenkins earned his B.S. in Computer Science and Mathematics at Alma College (1996), M.S. in Computer Science at Georgia Tech (1998), and Ph.D. in Computer Science at the University of Southern California (2003). He previously served on the faculty of Brown University in Computer Science (2004-15). His research addresses problems in interactive robotics and human-robot interaction, primarily focused on mobile manipulation, robot perception, and robot learning from demonstration. Prof. Jenkins has been recognized as a Sloan Research Fellow and is a recipient of the Presidential Early Career Award for Scientists and Engineers (PECASE). His work has also been supported by Young Investigator awards from the Office of Naval Research (ONR), the Air Force Office of Scientific Research (AFOSR) and the National Science Foundation (NSF). Prof. Jenkins is currently serving as Editor-in-Chief for the ACM Transactions on Human-Robot Interaction. He is a Fellow of the American Association for the Advancement of Science and Association for the Advancement of Artificial Intelligence, and Senior Member of the Association for Computing Machinery and the Institute of Electrical and Electronics Engineers. He is an alumnus of the Defense Science Study Group (2018-19).

Featuring guest panelists: Sarah Brown, Hadas Kress-Gazit, Aisha Walcott

The next technical talk will be delivered by Raia Hadsell from DeepMind, and it will take place on March 26 at 3pm EST. Keep up to date on this website.