A microrobotic opto-electro-mechanical device able to steer a laser beam with high speed and a large range of motion could enhance the possibilities of minimally invasive surgeries

By Benjamin Boettner

Minimally invasive surgeries, in which surgeons gain access to internal tissues through natural orifices or small external incisions, are common practice in medicine. They are performed for purposes as diverse as delivering stents through catheters, treating abdominal complications, and performing transnasal operations at the skull base in patients with neurological conditions.

The ends of devices for such surgeries are highly flexible (or “articulated”) to enable the visualization and specific manipulation of the surgical site in the target tissue. In the case of energy-delivering devices that allow surgeons to cut or dry (desiccate) tissues, and stop internal bleeds (coagulate) deep inside the body, a heat-generating energy source is added to the end of the device. However, presently available energy sources delivered via a fiber or electrode, such as radio frequency currents, have to be brought close to the target site, which limits surgical precision and can cause unwanted burns in adjacent tissue sections and smoke development.

Laser technology, which is already widely used in a number of external surgeries, such as those performed on the eye or skin, would be an attractive solution. For internal surgeries, the laser beam needs to be precisely steered, positioned, and quickly repositioned at the distal end of an endoscope, which cannot be accomplished with the relatively bulky technology currently available.

Responding to an unmet need for a robotic surgical device that is flexible enough to access hard-to-reach areas of the G.I. tract while causing minimal peripheral tissue damage, researchers at the Wyss Institute and Harvard SEAS have developed a laser-steering device that has the potential to improve surgical outcomes for patients. Credit: Wyss Institute at Harvard University

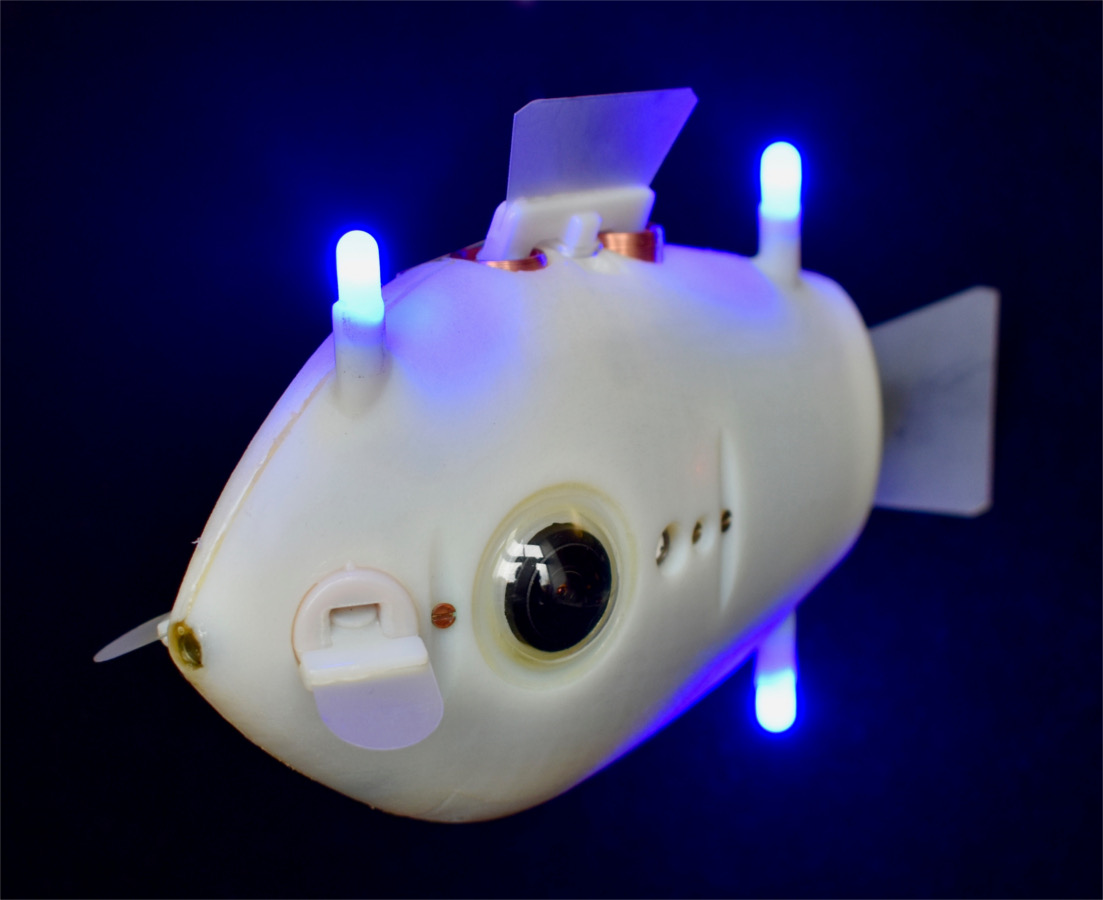

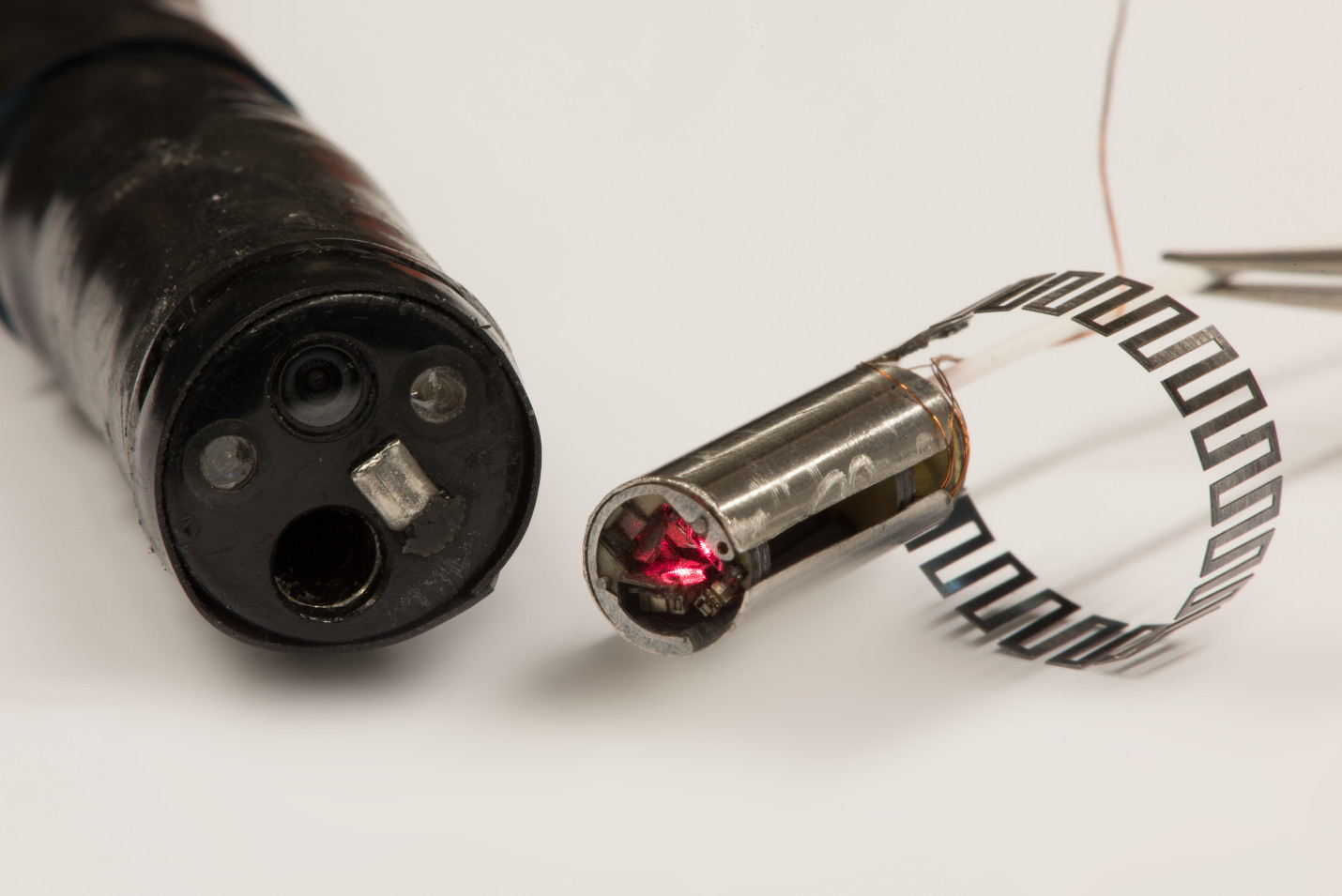

Now, robotics engineers led by Wyss Associate Faculty member Robert Wood, Ph.D., and postdoctoral fellow Peter York, Ph.D., at Harvard University’s Wyss Institute for Biologically Inspired Engineering and John A. Paulson School of Engineering and Applied Sciences (SEAS) have developed a laser-steering microrobot in a miniaturized 6×16 millimeter package that operates with high speed and precision, and can be integrated with existing endoscopic tools. Their approach, reported in Science Robotics, could help significantly enhance the capabilities of numerous minimally invasive surgeries.

“To enable minimally invasive laser surgery inside the body, we devised a microrobotic approach that allows us to precisely direct a laser beam at small target sites in complex patterns within an anatomical area of interest,” said York, the first and corresponding author on the study and a postdoctoral fellow on Wood’s microrobotics team. “With its large range of articulation, minimal footprint, and fast and precise action, this laser-steering end-effector has great potential to enhance surgical capabilities simply by being added to existing endoscopic devices in a plug-and-play fashion.”

The team needed to overcome the basic challenges in design, actuation, and microfabrication of the optical steering mechanism that enables tight control over the laser beam after it has exited from an optical fiber. These challenges, along with the need for speed and precision, were exacerbated by the size constraints – the entire mechanism had to be housed in a cylindrical structure with roughly the diameter of a drinking straw to be useful for endoscopic procedures.

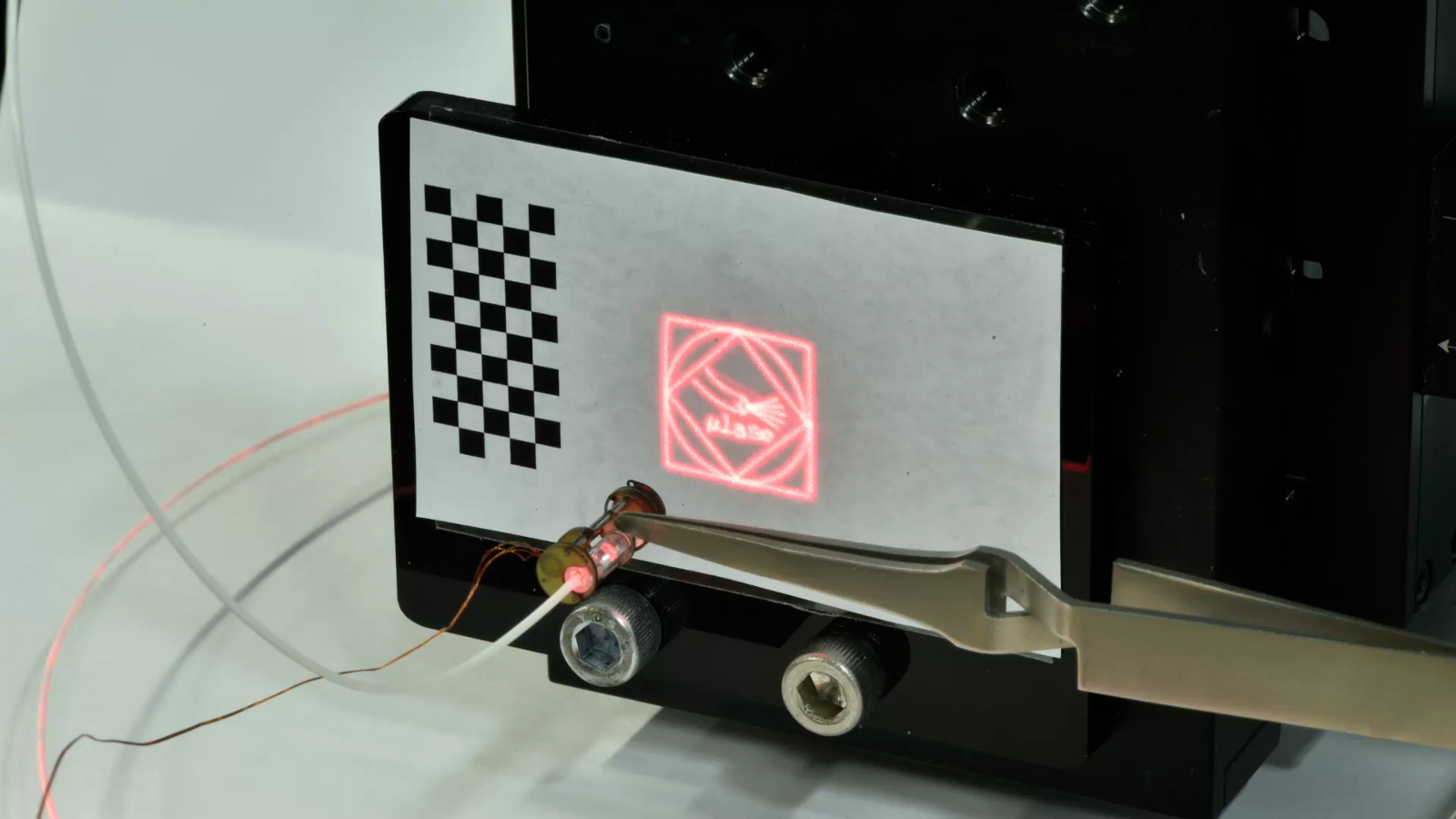

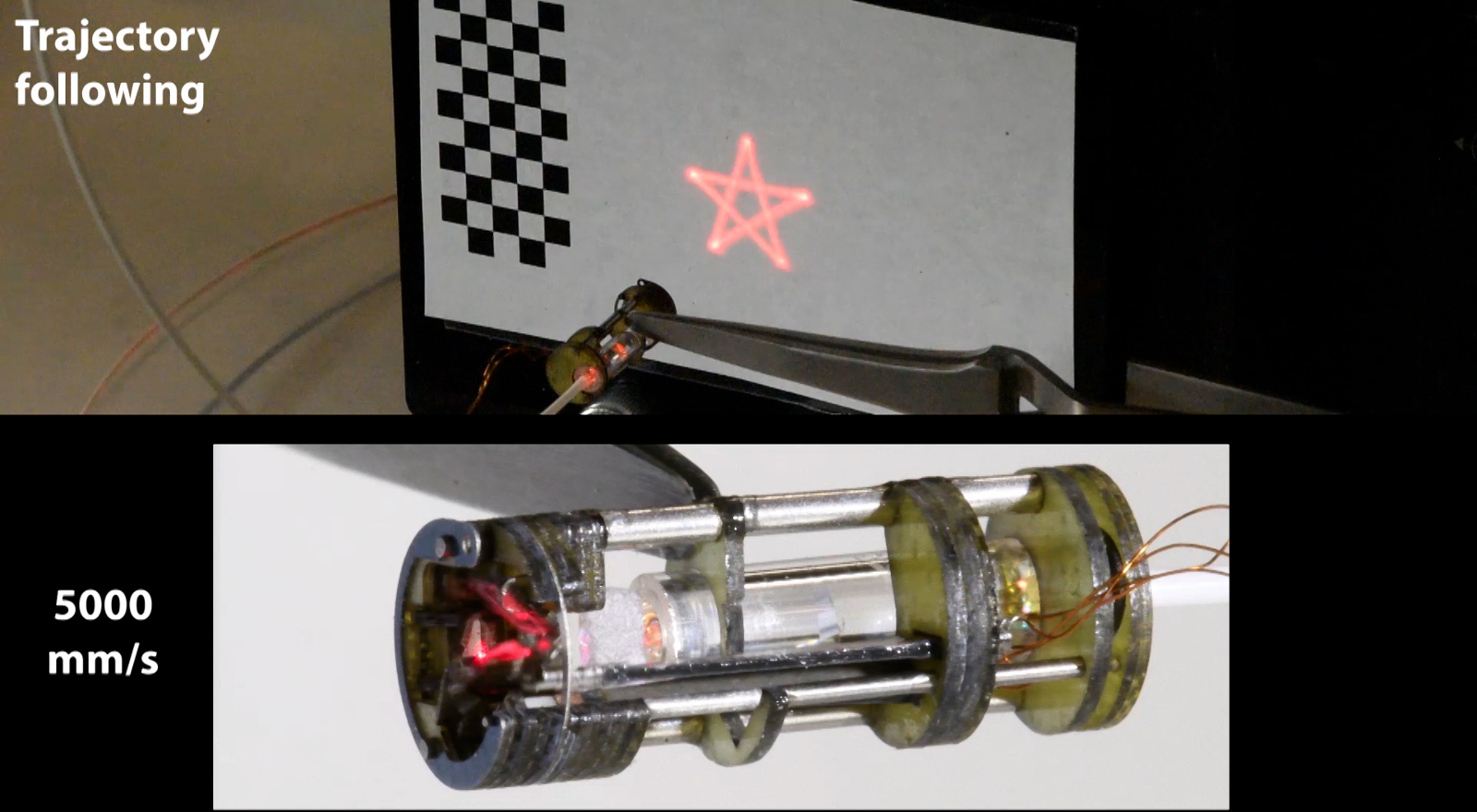

“We found that for steering and re-directing the laser beam, a configuration of three small mirrors that can rapidly rotate with respect to one another in a small ‘galvanometer’ design provided a sweet spot for our miniaturization effort,” said second author Rut Peña, a mechanical engineer with micro-manufacturing expertise in Wood’s group. “To get there, we leveraged methods from our microfabrication arsenal in which modular components are laminated step-wise onto a superstructure on the millimeter scale – a highly effective fabrication process when it comes to iterating on designs quickly in search of an optimum, and delivering a robust strategy for mass-manufacturing a successful product.”

The team demonstrated that their laser-steering end-effector, miniaturized to a cylinder measuring merely 6 mm in diameter and 16 mm in length, was able to map out and follow complex trajectories in which multiple laser ablations could be performed with high speed, over a large range, and be repeated with high accuracy.

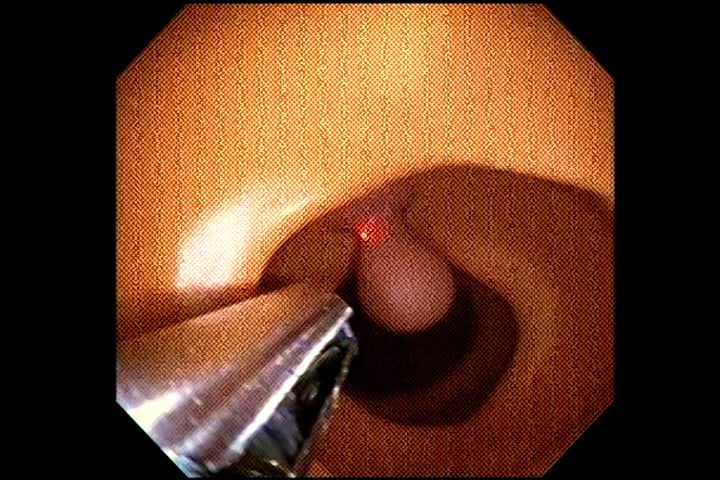

To further show that the device, when attached to the end of a common colonoscope, could be applied to a life-like endoscopic task, York and Peña, advised by Wyss Clinical Fellow Daniel Kent, M.D., successfully simulated the resection of polyps by navigating their device via tele-operation in a benchtop phantom tissue made of rubber. Kent is also a resident physician in general surgery at the Beth Israel Deaconess Medical Center.

“In this multi-disciplinary approach, we managed to harness our ability to rapidly prototype complex microrobotic mechanisms that we have developed over the past decade to provide clinicians with a non-disruptive solution that could allow them to advance the possibilities of minimally invasive surgeries in the human body with life-altering or potentially life-saving impact,” said senior author Wood, Ph.D., who is also the Charles River Professor of Engineering and Applied Sciences at SEAS.

Wood’s microrobotics team, together with technology translation experts at the Wyss Institute, has patented its approach and is now further de-risking its medical technology (MedTech) as an add-on for surgical endoscopes.

“The Wyss Institute’s focus on microrobotic devices and this new laser-steering device developed by Robert Wood’s team working across disciplines with clinicians and experts in translation will hopefully revolutionize how minimally invasive surgical procedures are carried out in a number of disease areas,” said Wyss Founding Director Donald Ingber, M.D., Ph.D., who is also the Judah Folkman Professor of Vascular Biology at Harvard Medical School and Boston Children’s Hospital, and Professor of Bioengineering at SEAS.

The study was funded by the National Science Foundation under award #CMMI-1830291, and the Wyss Institute for Biologically Inspired Engineering.