Robots for deep-sea recovery missions in sci-fi and reality

A science fiction/science fact review of Three Miles Down by Harry Turtledove, the fictionalized version of the Hughes Glomar Explorer expedition 50 years before the OceanGate Titan tragedy.

My new science fiction/science fact article for Science Robotics is out on why deep ocean robotics is hard, especially when trying to bring up a sunken submarine 3 miles underwater, which the CIA actually did in 1974. It’s even harder if you’re trying to bring up an alien spaceship, which is the plot of Harry Turtledove’s new sci-fi novel Three Miles Down. It’s a delightful Forrest Gump version of that 1974 Hughes Glomar Explorer expedition. Though the expedition took place 50 years before the OceanGate Titan tragedy, the same challenges exist for today’s robots. The robotics science in the book is very real; the aliens, not so much.

In 1974, the CIA deployed a 3-mile-long, 6-million-pound robot manipulator to recover a Soviet submarine. The cover story was that Howard Hughes was deep-sea mining for manganese nodules, which accidentally started everyone else investing in deep-sea mining.

The Glomar Explorer was also a breakthrough in computer control, as the ship had to stay on station and move the arm to the sub in the presence of wind, waves, and currents. All of this ran on an array of Honeywell computers with 16-bit microprocessors, 5 MHz clocks, and 32K words of core memory. Consider that a late-model iPhone uses a 64-bit microprocessor, a 3 GHz clock, 6 GB of RAM, and a GPU.
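To put those numbers side by side, here is a rough back-of-the-envelope sketch. It assumes that each word of core memory is 16 bits (matching the processor), that 1K means 1024 and that 1 GB means 2^30 bytes; none of those details are stated above, and the comparison ignores everything else that separates the two machines, such as word size, core count, and the GPU.

```python
# Figures quoted in the text; unit conventions below are assumptions.
GLOMAR_CLOCK_HZ = 5_000_000            # 5 MHz
GLOMAR_MEMORY_BYTES = 32 * 1024 * 2    # 32K words, assuming 2 bytes per 16-bit word
PHONE_CLOCK_HZ = 3_000_000_000         # 3 GHz
PHONE_MEMORY_BYTES = 6 * 2**30         # 6 GB, assuming binary gigabytes

clock_ratio = PHONE_CLOCK_HZ / GLOMAR_CLOCK_HZ
memory_ratio = PHONE_MEMORY_BYTES / GLOMAR_MEMORY_BYTES

print(f"Clock speed: about {clock_ratio:,.0f} times faster")
print(f"Memory: about {memory_ratio:,.0f} times larger")
```

Under those assumptions the phone's clock is about 600 times faster and its memory roughly 100,000 times larger than that of a single 1974 shipboard computer.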

Turtledove takes one major liberty with the otherwise hard science retrospective: the CIA recovering the Soviet sub was in turn a cover story masking the real mission to salvage the alien spaceship that apparently collided with the sub!

The dry humor and attention to scientific details make for an entertaining sci-fi compare-and-contrast between deep sea robotics and computers in the 1970s and the present day. It’s a fun read, and not just for roboticists and computer scientists.

For further robotics science reading:

- about ROVs: https://rov.org/history/

- about the automatic station keeping system: The Jennifer Project

For further sci-fi reading, check out:

Do humans get lazier when robots help with tasks?


By Angharad Brewer Gillham, Frontiers science writer

‘Social loafing’ is a phenomenon which happens when members of a team start to put less effort in because they know others will cover for them. Scientists investigating whether this happens in teams which combine work by robots and humans found that humans carrying out quality assurance tasks spotted fewer errors when they had been told that robots had already checked a piece, suggesting they relied on the robots and paid less attention to the work.

Now that improvements in technology mean that some robots work alongside humans, there is evidence that those humans have learned to see them as team-mates, and teamwork can have negative as well as positive effects on people’s performance. People sometimes relax, letting their colleagues do the work instead. This is called ‘social loafing’, and it’s common where people know their contribution won’t be noticed or they’ve acclimatized to another team member’s high performance. Scientists at the Technical University of Berlin investigated whether humans engage in social loafing when they work with robots.

“Teamwork is a mixed blessing,” said Dietlind Helene Cymek, first author of the study in Frontiers in Robotics and AI. “Working together can motivate people to perform well but it can also lead to a loss of motivation because the individual contribution is not as visible. We were interested in whether we could also find such motivational effects when the team partner is a robot.”

A helping hand

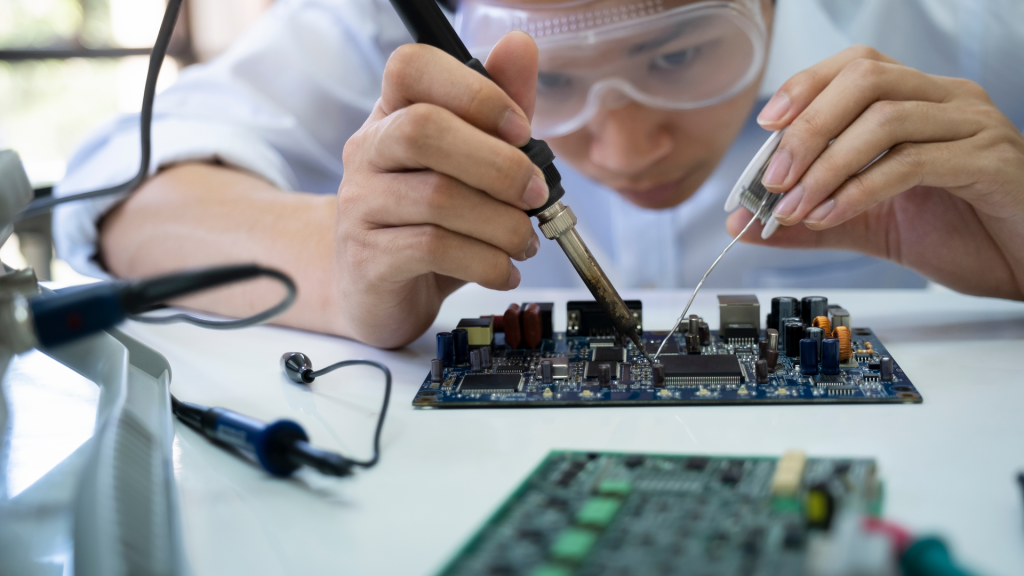

The scientists tested their hypothesis using a simulated industrial defect-inspection task: looking at circuit boards for errors. The scientists provided images of circuit boards to 42 participants. The circuit boards were blurred, and the sharpened images could only be viewed by holding a mouse tool over them. This allowed the scientists to track participants’ inspection of the board.

Half of the participants were told that they were working on circuit boards that had been inspected by a robot called Panda. Although these participants did not work directly with Panda, they had seen the robot and could hear it while they worked. After examining the boards for errors and marking them, all participants were asked to rate their own effort, how responsible for the task they felt, and how they performed.

Looking but not seeing

At first sight, it looked as if the presence of Panda had made no difference — there was no statistically significant difference between the groups in terms of time spent inspecting the circuit boards and the area searched. Participants in both groups rated their feelings of responsibility for the task, effort expended, and performance similarly.

But when the scientists looked more closely at participants’ error rates, they realized that the participants working with Panda were catching fewer defects later in the task, when they’d already seen that Panda had successfully flagged many errors. This could reflect a ‘looking but not seeing’ effect, where people get used to relying on something and engage with it less mentally. Although the participants thought they were paying an equivalent amount of attention, subconsciously they assumed that Panda hadn’t missed any defects.

“It is easy to track where a person is looking, but much harder to tell whether that visual information is being sufficiently processed at a mental level,” said Dr Linda Onnasch, senior author of the study.

The experimental set-up with the human-robot team. Image supplied by the authors.

Safety at risk?

The authors warned that this could have safety implications. “In our experiment, the subjects worked on the task for about 90 minutes, and we already found that fewer quality errors were detected when they worked in a team,” said Onnasch. “In longer shifts, when tasks are routine and the working environment offers little performance monitoring and feedback, the loss of motivation tends to be much greater. In manufacturing in general, but especially in safety-related areas where double checking is common, this can have a negative impact on work outcomes.”

The scientists pointed out that their test has some limitations. While participants were told they were in a team with the robot and shown its work, they did not work directly with Panda. Additionally, social loafing is hard to simulate in the laboratory because participants know they are being watched.

“The main limitation is the laboratory setting,” Cymek explained. “To find out how big the problem of loss of motivation is in human-robot interaction, we need to go into the field and test our assumptions in real work environments, with skilled workers who routinely do their work in teams with robots.”

Bidirectional reflectivity measurements for ground-based objects

Using large language models to enable open-world, interactive and personalized robot navigation

Robot maker dreams of turning sci-fi into reality

How robots can help find the solar energy of the future

Running on fumes? AI isn’t possible without proper data management

Robot Talk Episode 59 – Ffion Llewellyn

Claire chatted to Ffion Llewellyn from Oshen about sea-faring robots and ocean sensing.

Ffion Llewellyn graduated from Imperial College London in 2022 with a master’s in Aeronautical Engineering. Following this, she joined Oshen, which is building low-cost, autonomous micro-vessels for remote ocean sensing. Ffion has been focused on the integration and testing of sensors onto Oshen’s autonomous micro-vessels, including metocean sensors and hydrophones for the monitoring of marine mammals. Her role also includes the design and manufacture of the micro-vessels, conducting sea trials and analysing the data collected.

Engineers develop breakthrough ‘robot skin’

Study shows same movement patterns used by wide range of organisms, with implications for cognition and robotics

Introducing the trimmed helicoid, a soft robot arm that’s based on an elephant’s trunk

Implementing the Most Efficient Picking Paths to Meet Crucial KPIs

Japan show provides glimpse of robots as future of rescue efforts

Code to Joy: Why Everyone Should Learn a Little Programming – Interview with Michael Littman

Code to Joy: Why Everyone Should Learn a Little Programming is a new book from Michael Littman, Professor of Computer Science at Brown University and a founding trustee of AIhub. We spoke to Michael about what the book covers, what inspired it, and how we are all familiar with many programming concepts in our daily lives, whether we realize it or not.

Could you start by telling us a bit about the book, and who the intended audience is?

The intended audience is not computer scientists, although I have been getting a very warm reception from computer scientists, which I appreciate. The idea behind the book is to try to help people understand that telling machines what to do (which is how I view much of computer science and AI) is something that is really accessible to everyone. It builds on skills and practices that people already have. I think it can be very intimidating for a lot of people, but I don’t think it needs to be. I think that the foundation is there for everybody and it’s just a matter of tapping into that and building on top of it. What I’m hoping, and what I’m seeing happening, is that machine learning and AI are helping to meet people part way. The machines are getting better at listening as we try to get better at telling them what to do.

What made you decide to write the book, what was the inspiration behind it?

I’ve taught large introductory computer science classes and I feel like there’s an important message in there about how a deeper knowledge of computing can be very empowering, and I wanted to bring that to a larger audience.

Could you talk a bit about the structure of the book?

The meat of the book talks about the fundamental components that make up programs, or, in other words, that make up the way that we tell computers what to do. Each chapter covers a different one of those topics – loops, variables, conditionals, for example. Within each chapter I talk about the ways in which this concept is already familiar to people, the ways that it shows up in regular life. I point to existing pieces of software or websites where you can make use of that one particular concept to tell computers what to do. Each chapter ends with an introduction to some concepts from machine learning that can help create that particular programming construct. For example, in the chapter on conditionals, I talk about the ways that we use the word “if” in regular life all the time. Weddings, for example, are very conditionally structured, with statements like “if anyone has anything to say, speak now or forever hold your peace”. That’s kind of an “if-then” statement. In terms of tools to play with, I talk about interactive fiction. Partway between video games and novels is this notion that you can make a story that adapts itself while it’s being read. What makes that interesting is this notion of conditionals – the reader can make a choice and that will cause a branch. There are really wonderful tools for being able to play with this idea online, so you don’t have to be a full-fledged programmer to make use of conditionals. The machine learning concept introduced there is decision trees, which is an older form of machine learning where you give a system a bunch of examples and then it outputs a little flowchart for decision making.
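As an editorial illustration of the decision-tree idea mentioned in this answer, the sketch below takes a handful of examples and produces a small flowchart of nested "if" questions. It is not taken from the book: the weather examples are invented, and the learner is a deliberately simplified stand-in that picks each question by counting misclassified examples rather than using the entropy-based criteria of classic decision-tree algorithms.

```python
from collections import Counter

def majority(labels):
    """Most common label (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]

def errors_after_split(examples, feature):
    """Examples misclassified if each branch predicts its majority label."""
    total = 0
    for value in {feats[feature] for feats, _ in examples}:
        labels = [label for feats, label in examples if feats[feature] == value]
        total += len(labels) - Counter(labels).most_common(1)[0][1]
    return total

def learn_tree(examples, features):
    """Return a label (leaf) or a (feature, {value: subtree}) question node."""
    labels = [label for _, label in examples]
    if len(set(labels)) == 1 or not features:
        return majority(labels)
    best = min(features, key=lambda f: errors_after_split(examples, f))
    remaining = [f for f in features if f != best]
    branches = {}
    for value in sorted({feats[best] for feats, _ in examples}):
        subset = [(feats, label) for feats, label in examples if feats[best] == value]
        branches[value] = learn_tree(subset, remaining)
    return (best, branches)

def decide(tree, feats):
    """Walk the flowchart; labels must not be tuples, which mark question nodes."""
    while isinstance(tree, tuple):
        feature, branches = tree
        tree = branches[feats[feature]]  # KeyError for a value never seen in training
    return tree

# Invented examples: what to take on a walk.
examples = [
    ({"raining": True, "windy": False}, "umbrella"),
    ({"raining": True, "windy": True}, "raincoat"),
    ({"raining": False, "windy": True}, "nothing"),
    ({"raining": False, "windy": False}, "nothing"),
]
tree = learn_tree(examples, ["raining", "windy"])
print(tree)
print(decide(tree, {"raining": True, "windy": True}))
```

The learned tree reads as a flowchart: first ask "is it raining?"; if not, take nothing; if so, ask "is it windy?" and choose between the raincoat and the umbrella.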

Do you touch on generative AI in the book?

The book was already in production by the time ChatGPT came out, but I was ahead of the curve, and I did have a section specifically about GPT-3 (pre-ChatGPT) which talks about what it is, how machine learning creates it, and how it itself can be helpful in making programs. So, you see it from both directions. You get the notion that this tool actually helps people tell machines what to do, and also the way that humanity created this tool in the first place using machine learning.

Did you learn anything while you were writing the book that was particularly interesting or surprising?

Researching the examples for each chapter caused me to dig into a whole bunch of topics. This notion of interactive fiction, and that there’s tools for creating interactive fiction, I found pretty interesting. When researching another chapter, I found an example from a Jewish prayer book that was just so shocking to me. So, Jewish prayer books (and I don’t know if this is true in other belief systems as well, but I’m mostly familiar with Judaism), contain things you’re supposed to read, but they have little conditional markings on them sometimes. For example, one might say “don’t read this if it’s a Saturday”, or “don’t read this if it’s a full moon”, or “don’t read if it’s a full moon on a Saturday”. I found one passage that actually had 14 different conditions that you had to check to decide whether or not it was appropriate to read this particular passage. That was surprising to me – I had no idea that people were expected to do so much complex computation during a worship activity.

Why is it important that everybody learns a little programming?

It’s really important to keep in mind the idea that at the end of the day what AI is doing is making it easier for us to tell machines what to do, and we should share that increased capability with a broad population. It shouldn’t just be the machine learning engineers who get to tell computers what to do more easily. We should find ways of making this easier for everybody.

Because computers are here to help, but it’s a two-way street. We need to be willing to learn to express what we want in a way that can be carried out accurately and automatically. If we don’t make that effort, then other parties, companies often, will step in and do it for us. At that point, the machines are working to serve someone else’s interest instead of our own. I think it’s become absolutely essential that we restore a healthy relationship with these machines before we lose any more of our autonomy.

Any final thoughts or takeaways that we should bear in mind?

I think there’s a message here for computer science researchers, as well. When we tell other people what to do, we tend to combine a description or a rule, something that’s sort of program-like, with examples, something that’s more data-like. We just intermingle them when we talk to each other. At one point when I was writing the book, I had a dishwasher that was acting up and I wanted to understand why. I read through its manual, and I was struck by how often it was the case that in telling people what to do with the dishwasher, the authors would consistently mix together a high-level description of what they are telling you to do with some particular, vivid examples: a rule for what to load into the top rack, and a list of items that fit that rule. That seems to be the way that people want to both convey and receive information. What’s crazy to me is that we don’t program computers that way. We either use something that’s strictly programming, all rules, no examples, or we use machine learning, where it’s all examples, no rules. I think the reason that people communicate this way with each other is because those two different mechanisms have complementary strengths and weaknesses and when you combine the two together, you maximize the chance of being accurately understood. And that’s the goal when we’re telling machines what to do. I want the AI community to be thinking about how we can combine what we’ve learned about machine learning with something more programming-like to make a much more powerful way of telling machines what to do. I don’t think this is a solved problem yet, and that’s something that I really hope that people in the community think about.
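As an editorial illustration only, here is one very small way that mixing a rule with examples could look in code. It is a toy sketch, not a proposal from the interview and not a solution to the open problem described above: the `Instruction` class, the top-rack rule, and the listed items are all invented, and named examples are simply treated as exceptions that override the rule.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

@dataclass
class Instruction:
    """Hypothetical instruction pairing a general rule with named examples."""
    rule: Callable[[Dict[str, object]], bool]
    examples: Dict[str, bool] = field(default_factory=dict)

    def applies(self, name: str, properties: Dict[str, object]) -> bool:
        # A named example settles the case directly, even against the rule.
        if name in self.examples:
            return self.examples[name]
        # Anything not listed falls back on the general rule.
        return self.rule(properties)

# Invented for illustration; not taken from any real dishwasher manual.
top_rack = Instruction(
    rule=lambda p: p["height_cm"] <= 15 and not p["heavy"],
    examples={"wine glass": True, "cast-iron pan": False, "wooden spoon": False},
)

# Listed example: allowed even though the rule alone would reject it.
print(top_rack.applies("wine glass", {"height_cm": 20, "heavy": False}))
# Unlisted items: decided by the rule.
print(top_rack.applies("mug", {"height_cm": 10, "heavy": False}))
print(top_rack.applies("stock pot", {"height_cm": 25, "heavy": True}))
```

The rule covers items nobody thought to list, while the examples pin down the cases where the rule alone would mislead, which is the complementary relationship the answer describes.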

Code to Joy: Why Everyone Should Learn a Little Programming is available to buy now.

Michael L. Littman is a University Professor of Computer Science at Brown University, studying machine learning and decision making under uncertainty. He has earned multiple university-level awards for teaching and his research on reinforcement learning, probabilistic planning, and automated crossword-puzzle solving has been recognized with three best-paper awards and three influential paper awards. Littman is co-director of Brown’s Humanity Centered Robotics Initiative and a Fellow of the Association for the Advancement of Artificial Intelligence and the Association for Computing Machinery. He is also a Fellow of the American Association for the Advancement of Science Leshner Leadership Institute for Public Engagement with Science, focusing on Artificial Intelligence. He is currently serving as Division Director for Information and Intelligent Systems at the National Science Foundation.