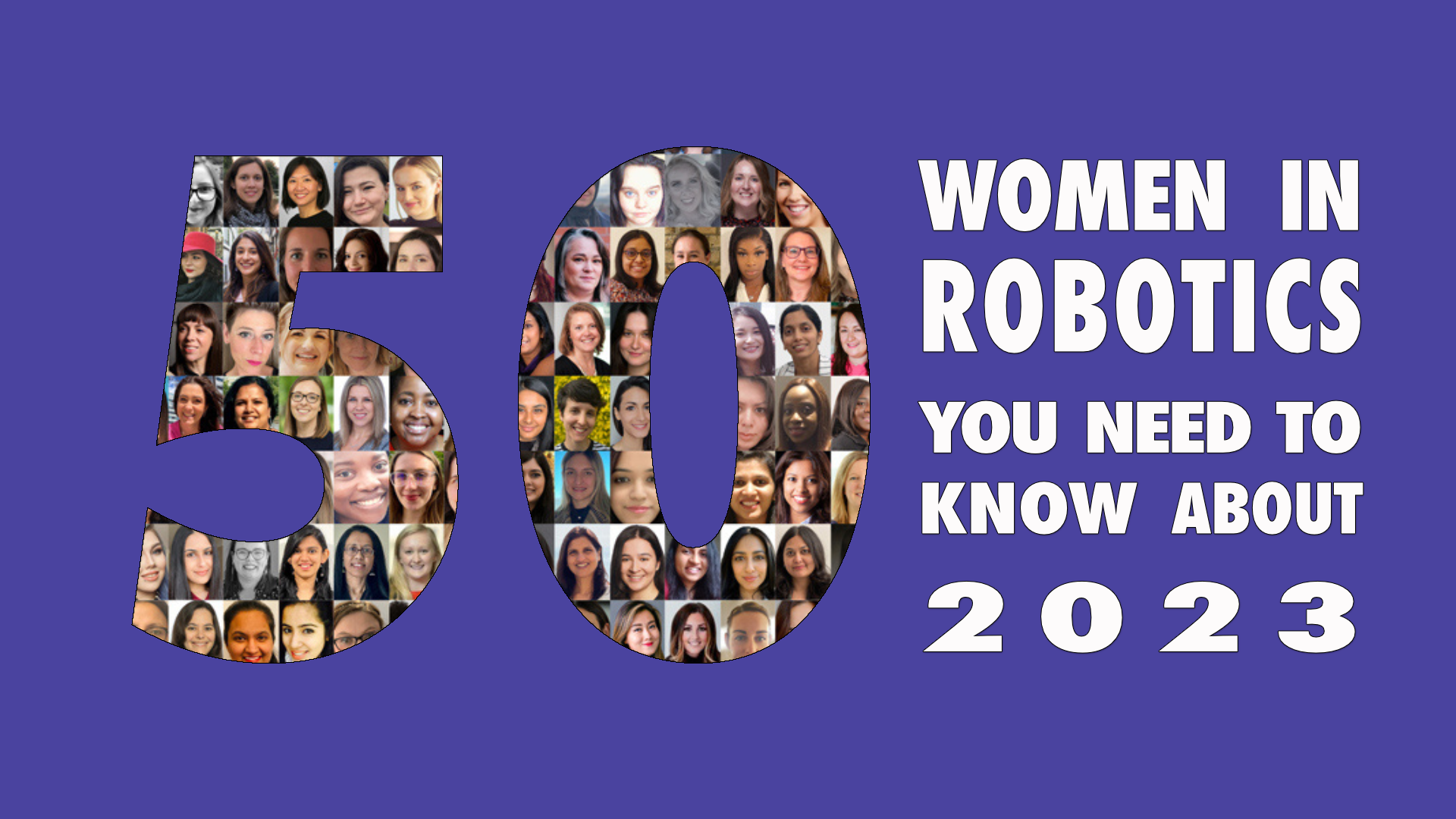

50 women in robotics you need to know about 2023

In celebration of the launch of International Women in Robotics Day, the Women in Robotics organization is proud to release another “50 women in robotics you need to know about” collection of stories. With a growing robotics industry there are many opportunities for everyone to get involved. This is why we showcase the wide range of roles that women play in robotics today.

Since 2012, the Women in Robotics organization has released a list of women building the future in robotics. The list has covered all ages, career stages, types of occupation and experience. We’ve featured more than 350 women already and we’ve shown that women have always been working in the robotics industry, in the earliest robotics research labs and companies, although those stories have often been forgotten.

This year’s collection includes Nancy Cornelius, co-founder of Boston Dynamics and the first engineer hired. Cornelius remained an integral part of Boston Dynamics until the company was sold to Google in 2013. Vandi Verma is the head of NASA’s rover (robot) program. Joanna Buttler is the head of the Global Autonomous Technology Group for Daimler Truck. And Whitney Rockley founded a venture capital company investing exclusively in ‘industrial internet’ companies like Clearpath Robotics.

For the first time, we feature an Indigenous (Ojibwe) American roboticist, Danielle Boyer. Boyer started a non-profit, The STEAM Connection, to combat the difficulties that many kids have getting access to robotics. She created an affordable robot kit that’s been distributed to thousands of students, and is proudest of the SKOBOT project: personalized robots that keep culture and language traditions alive. Boyer epitomizes the motto “Building the Future”.

We also try to feature women from all regions of the world, and this year’s collection represents Nigeria, India, China, Australia, Japan, Switzerland, Croatia, Korea, Denmark, Singapore, Italy, Romania, the United States, Sweden, Spain, Canada, the UK, Israel, Austria, Belgium, Mexico, Argentina and Brazil. There is an active Latinx community in Women in Robotics engaged in translating more robotics information into Spanish, hoping to create more connections between the global robotics community and the roboticists, and potential roboticists, of Latin America.

There have always been women doing great things in robotics! And we’re pleased to present another collection of strong female role models for young and upcoming roboticists (of any gender).

You can also join in the Women in Robotics celebrations today and throughout October, with events listed on the Women in Robotics site, like Diversity Cocktails at the IROS conference in Detroit, or the launch of the Los Angeles Women in Robotics chapter. Women in Robotics is a global community organization for women and non-binary people working in robotics and those who’d like to work in robotics. Learn more at https://womeninrobotics.org

Join our events, host your own, and share our celebration on social media!