The Standard Platform Soccer League in action.

This is the second of our daily digests from RoboCup2024 in Eindhoven, The Netherlands. If you missed the first digest, which gives some background to RoboCup, you can find it here.

Competitions continued across all the leagues today, with participants vying for a place in Sunday’s finals.

The RoboCup@Work league focusses on robots in work-related scenarios, utilizing ideas and concepts from other RoboCup competitions to tackle open research challenges in industrial and service robotics.

I arrived at the arena in time to catch the advanced navigation test. Robots have to autonomously navigate, picking up and placing objects at different work stations. In this advanced test, caution tape is added to the arena floor, which the robots should avoid travelling over. There is also a complex placing element where teams have to put an object that they’ve collected into a slot – get the orientation or placement of the object slightly wrong and it won’t fall into the slot.

The RoboCup@Work arena just before competition start.

Eight teams are taking part in the league this year. Executive Committee member Asad Norouzi said that there are plans to introduce a sub-league which would provide an entry point for new teams or juniors to get into the league proper.

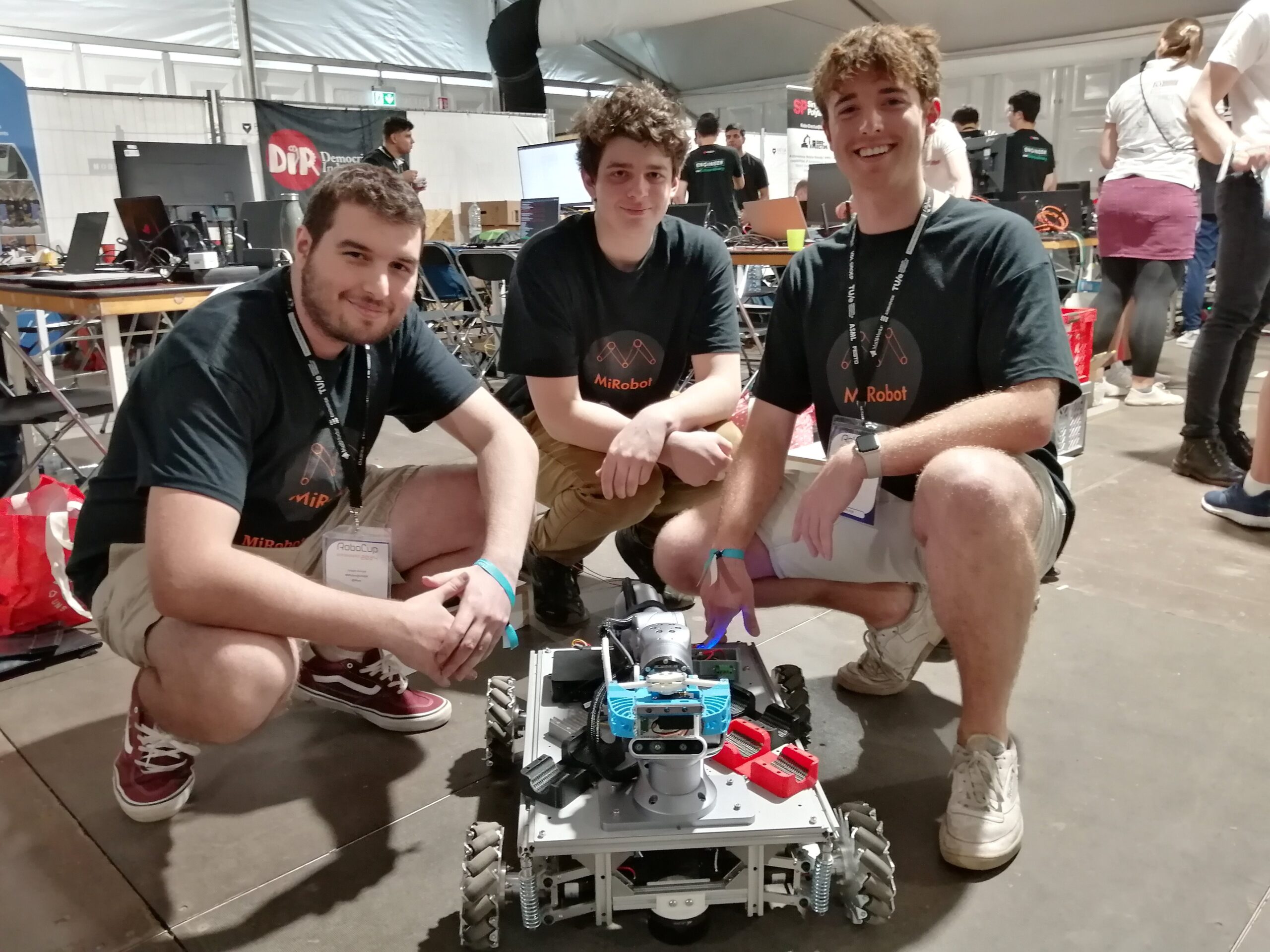

I caught up with Harrison Burns, Mitchell Torok and Jasper Arnold from Team MiRobot. They are based at the University of New South Wales and are attending RoboCup for the first time.

Team MiRobot from UNSW.

The team actually only started six months ago, so final preparations have been a bit stressful. However, the experience has been great fun, and the competition has gone well so far. Like most teams, they’ve had to make many refinements as the competition has progressed, leading to some late nights.

One notable feature of the team’s robot is the bespoke, in-house-designed grasping mechanism on the end of the arm. The team note that “it has good flexible jaws, so when it grabs round objects it actually pulls the object directly into it. Because it uses a linear motion, compared to a lot of other rotating jaws, it has a lot better reliability for picking up objects”.

Here is some footage from the task, featuring Team b-it-bots and Team Singapore.

Team b-it-bots take on the RoboCup@Work advanced navigation test, picking up a drill bit #RoboCup2024 pic.twitter.com/QfijZpxaOK

— AIhub (@aihuborg) July 20, 2024

Team Singapore placing an object in the RoboCup@Work advanced navigation test pic.twitter.com/SvgLBOaVo7

— AIhub (@aihuborg) July 20, 2024

In the Middle Size Soccer league (MSL), teams of five fully autonomous robots play with a regular-size FIFA ball. Teams are free to design their own hardware, but all sensors have to be on board, and the robots are subject to a maximum size and a weight limit of 40kg. The research focus is on mechatronics design, control and multi-agent cooperation at plan and perception levels. Nine teams are competing this year.

Action from the Middle Size League at #RoboCup2024.

Falcons vs Robot Club Toulon pic.twitter.com/GHcNLOx2nV

— AIhub (@aihuborg) July 20, 2024

I spoke to António Ribeiro, who is a member of the technical committee and part of Team LAR@MSL from the University of Minho, Portugal. The team started in 1998, but António and most of his colleagues on the current team have only been involved in the MSL since September 2022. The robots have evolved as the competition has progressed, and further improvements are in progress. Refinements so far have included communication, the detection system, and the control system. They are pleased with the improvements from the previous RoboCup. “Last year we had a lot of hardware issues, but this year the hardware seems pretty stable. We also changed our coding architecture and it is now much easier and faster for us to develop code because we can all work on the code at the same time on different modules”.

António cited versatility and cost-effective solutions as strengths of the team. “Our robot is actually very cheap compared to other teams. We use a lot of old chassis, and our solutions always go to the lowest cost possible. Some teams have multiple thousand dollar robots, but, for example, our vision system is around $70-80. It works pretty well – we need to improve the way we handle it, but it seems stable”.

Team LAR@MSL

The RoboCup@Home league aims to develop service and assistive robot technology with high relevance for future personal domestic applications. A set of benchmark tests is used to evaluate the robots’ abilities and performance in a realistic non-standardized home environment setting. These tests include helping to prepare breakfast, clearing the table, and storing groceries.

I arrived in time to watch the “stickler for the rules” challenge, where robots have to navigate different rooms and make sure that the people inside (“guests” at a party) are sticking to four rules: 1) there is one forbidden room – if a guest is in there, the robot must alert them and ask them to follow it into another room, 2) everyone must have a drink in their hand – if not, the robot directs them to a shelf with drinks, 3) no shoes are to be worn in the house, 4) there should be no rubbish left on the floor.
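To make the four rules concrete, here is a minimal Python sketch of the checking logic only. It is an illustration, not any team's software: the `Guest` record, the function names and the room names are all hypothetical, and it assumes that perception (spotting guests, drinks, shoes and rubbish) has already been done.

```python
from dataclasses import dataclass


@dataclass
class Guest:
    # Hypothetical record of what the robot has observed about one guest.
    name: str
    room: str
    has_drink: bool
    wearing_shoes: bool


def check_guest(guest, forbidden_room):
    """Return the rules (1 to 3) that this guest is currently breaking."""
    violations = []
    if guest.room == forbidden_room:
        violations.append("in forbidden room")   # rule 1
    if not guest.has_drink:
        violations.append("no drink in hand")    # rule 2
    if guest.wearing_shoes:
        violations.append("wearing shoes")       # rule 3
    return violations


def rooms_with_rubbish(rubbish_seen):
    """Rule 4: list the rooms where rubbish was spotted on the floor."""
    return sorted(room for room, seen in rubbish_seen.items() if seen)
```

In a real run, each violation would trigger an action such as asking the guest to follow the robot or pointing them to the drinks shelf; the sketch only covers the decision step.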

After watching an attempt from the LAR@Home robot, Tiago from the team told me a bit about the robot. “The goal is to develop a robot capable of multi general-purpose tasks in home and healthcare environments.” With the exception of the robotic arm, all of the hardware was built by the team. The robot has two RGBD cameras, two LIDARs, a tray (where the robot can store items that it needs to carry), and two emergency stop buttons that deactivate all moving parts. Four omnidirectional wheels allow the robot to move in any direction at any time. The wheels have independent suspension systems which guarantee that they can all be on the ground at all times, even if there are bumps and cables on the venue floor. There is a tablet that acts as a visual interface, and a microphone and speakers to enable communication between humans and the robot, which is all done via speaking and listening.
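For readers curious how four omnidirectional wheels let a base move in any direction, here is a rough Python sketch of the textbook inverse kinematics for an omni-wheel base. It is not the LAR@Home team's code. The wheel placement (45, 135, 225 and 315 degrees around the chassis), the 0.25 m distance from the centre to each wheel, and the function name are assumptions made purely for illustration; the article does not describe the actual layout.

```python
import math

# Assumed layout: four omni wheels evenly spaced around the chassis,
# each mounted so that it rolls tangentially to the body.
WHEEL_ANGLES = [math.radians(a) for a in (45, 135, 225, 315)]


def wheel_speeds(vx, vy, omega, radius=0.25):
    """Rim speed (m/s) each wheel needs for a desired body velocity.

    vx, vy : desired velocity of the robot in its own frame (m/s)
    omega  : desired rotation rate (rad/s)
    radius : assumed distance from robot centre to each wheel (m)
    """
    return [
        -math.sin(t) * vx + math.cos(t) * vy + radius * omega
        for t in WHEEL_ANGLES
    ]
```

Because each wheel's speed is a simple sum of a forward, a sideways and a rotational term, any combination of the three can be commanded at once, which is what allows this kind of base to translate and turn simultaneously.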

Tiago told me that the team have talked to a lot of healthcare practitioners to find out the main problems faced by elderly people, and this inspired one of their robot features. “They said that the two main injury sources are from when people are trying to sit down or stand up, and when they are trying to pick something up from the floor. We developed a torso that can pick objects from the floor one metre away from the robot”.

The LAR@Home team.

You can keep up with the latest news direct from RoboCup here.

Click here to see all of our content pertaining to RoboCup.

The main soccer arena.

Some of the pitches in the main soccer arena.

Standard platform league. Round 5 champions cup match between SPQR Team vs rUNSWift.

Action from the Humanoid AdultSize League.

RoboCup Rescue arena from above.