Researchers enhance human-robot interaction by merging mixed reality and robotics

Pallet Moving Robots Are Game Changers – And Easy to Implement Fast

New implant offers promise for the paralyzed

Michel Roccati stands up and walks in Lausanne. © EPFL / Alain Herzog 2021

The images made headlines around the world in late 2018. David Mzee, who had been left paralyzed by a partial spinal cord injury suffered in a sports accident, got up from his wheelchair and began to walk with the help of a walker. This was the first proof that Courtine and Bloch’s system – which uses electrical stimulation to reactivate spinal neurons – could work effectively in patients.

Fast forward three years, and a new milestone has just been reached. The research team led by both Courtine, a professor at EPFL and member of NCCR Robotics, and Bloch, a professor and neurosurgeon at CHUV, has enhanced their system with more sophisticated implants controlled by artificial-intelligence software. These implants can stimulate the region of the spinal cord that activates the trunk and leg muscles. Thanks to this new technology, three patients with complete spinal cord injury were able to walk again outside the lab. “Our stimulation algorithms are still based on imitating nature,” says Courtine. “And our new, soft implanted leads are designed to be placed underneath the vertebrae, directly on the spinal cord. They can modulate the neurons regulating specific muscle groups. By controlling these implants, we can activate the spinal cord like the brain would do naturally to have the patient stand, walk, swim or ride a bike, for example.”

Patient with complete spinal cord injury (left) and incomplete spinal cord injury (right) walking in Lausanne, Switzerland. ©NeuroRestore – Jimmy Ravier

The new system is described in an article appearing in Nature Medicine that was also co-authored by Silvestro Micera, who leads the NCCR Robotics Wearable Robotics Grand Challenge. “Our breakthrough here is the longer, wider implanted leads with electrodes arranged in a way that corresponds exactly to the spinal nerve roots,” says Bloch. “That gives us precise control over the neurons regulating specific muscles.” Ultimately, it allows for greater selectivity and accuracy in controlling the motor sequences for a given activity.

Extensive training is obviously necessary for patients to get comfortable using the device. But the pace and scope of rehabilitation is amazing. “All three patients were able to stand, walk, pedal, swim and control their torso movements in just one day, after their implants were activated!” says Courtine. “That’s thanks to the specific stimulation programs we wrote for each type of activity. Patients can select the desired activity on the tablet, and the corresponding protocols are relayed to the pacemaker in the abdomen.”

Read the full story on the EPFL website.

Vision Language models: towards multi-modal deep learning

Unilever Konya HPC Factory Case Study

How to help humans understand robots

HEIDENHAIN In Action at OMIC

Hands on ground robot & drone design series part I: mechanical & wheels

This is a new series looking at the detailed design of various robots. To start, we will look at the design of two different robots that were used for the DARPA Subterranean Challenge. Both robots were designed for operating in complex subterranean environments, including caves, mines, and urban environments, and both are from the Carnegie Mellon University Explorer team. While I am writing these posts, this was a team effort that required many people to be successful. (If anyone on Team Explorer is reading this, thank you for everything, you are all awesome.)

These posts are skipping the system requirements step of the design process. See here for more details on defining system requirements.

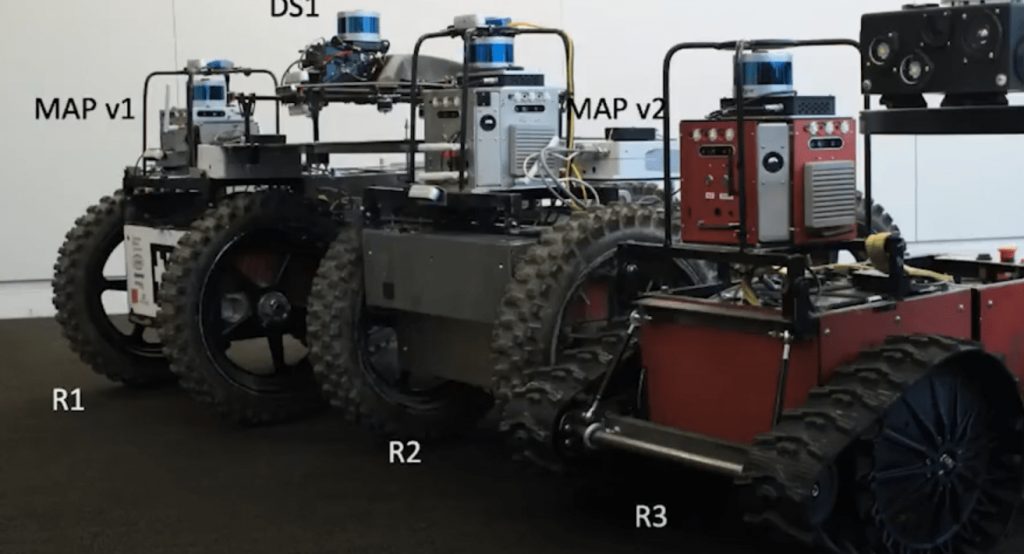

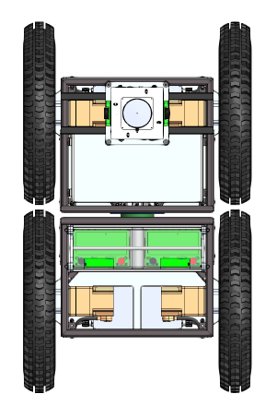

Team Explorer R1 Ground Robot and DS Drone [Source]

R3 Ground robot (UGV)

For the SubT challenge, three ground vehicles were developed, all of a similar design. The ground robots were known by the moniker R#, where # is the order we built them in. The primary differences between the three versions are:

R1 – Static Chassis, so the chassis has minimal ground compliance when driving over obstacles and uneven surfaces. R1 was initially supposed to have a differencing mechanism for compliance, however due to time constraints it was left out from this first version. R1 is pictured above.

R2 – Has the differencing mechanism and was designed as initially planned.

R3 – Is almost identical to R2, but smaller. This robot was built for navigating smaller areas and also to be able to climb up and down steps. It also uses different motors for driving the wheels.

DS drone

Team Explorer's original drones were called D1, D2, etc. This let a UGV + drone combination go by joint designations such as R2D2. Early on, the team switched to a smaller drone design that was referred to as DS1, DS2, etc., where DS is short for Drone Small.

The drone design posts are split into two sections. The first is about the actual drone platform, and the second is about the payload that sat on top of the drone.

Mechanical & wheels

Robot size decision

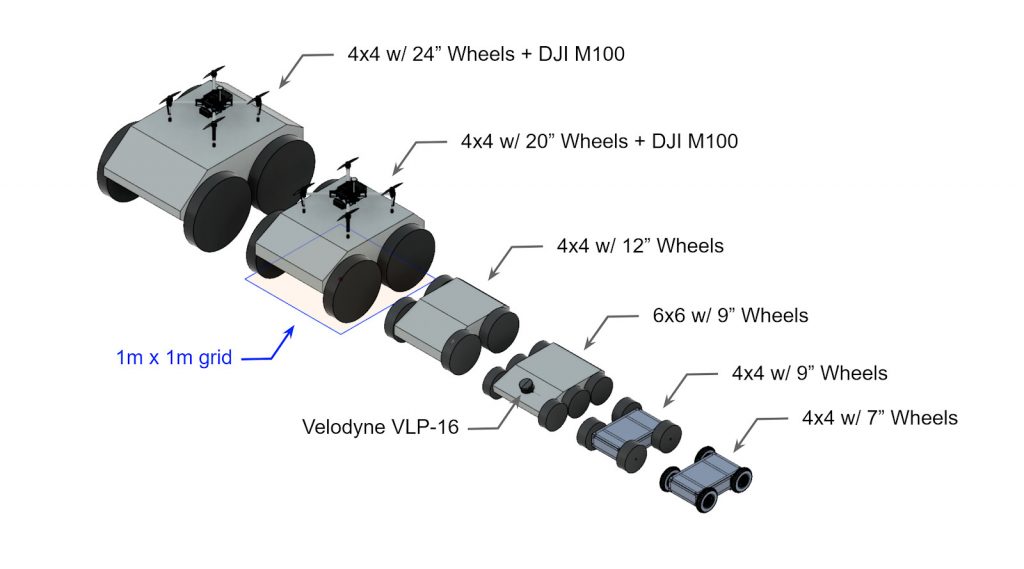

After we have the list of system requirements we start with the design of the mechanical structure of the robot. In this case we decided that a wheeled robot would be best. We wanted to have the largest wheels possible to help climb over obstacles, however, we also needed to keep our sensors at the top of the vehicle above the wheels and be able to fit in openings 1 x 1 meters. These requirements set the maximum size of the robot as well as the maximum size of the wheels.

The final dimensions of the first two vehicles (R1 and R2) were around (L x W x H) 1.2 x 0.8 x 0.8 meters (3.9 x 2.6 x 2.6 ft). The third, smaller vehicle was around (L x W) 1 x 0.6 m (3.3 x 2.0 ft) and designed to fit through 0.7 x 0.7 m openings.
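As a rough illustration of the sizing constraint, the sketch below computes how much room a vehicle cross-section leaves inside a square opening. The function name `opening_margins` is hypothetical, and the model assumes the robot drives straight through, centered, on flat ground.

```python
def opening_margins(width_m, height_m, opening_m):
    """Return (side, top) clearance in meters for a square opening.

    Assumes the robot is centered in the opening and sitting on the
    ground, so the width margin is split across two sides while the
    height margin is all overhead.
    """
    side = (opening_m - width_m) / 2
    top = opening_m - height_m
    return side, top


# R1/R2 cross-section (W x H) from the text: 0.8 x 0.8 m, 1 x 1 m opening.
side, top = opening_margins(0.8, 0.8, 1.0)
print(f"R1/R2: {side:.2f} m per side, {top:.2f} m overhead")
```

For R3 the text only gives length and width, so only the side margin can be estimated: (0.7 - 0.6) / 2 = 0.05 m per side.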

Steering approach

Early on we also needed to determine the method of driving. Do we want wheels or tracks? Do we want to steer with Ackermann steering, rocker-bogie, skid steer, etc.?

See here for more details on steering selection.

We chose a four-wheel-drive skid-steer approach for the simplicity of control and the ability to turn in place (point turns). At the start of the competition we were not focused on stair climbing, which might have changed some of our design decisions.
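To show why skid steering is simple to control, here is a minimal sketch that maps a commanded forward speed and turn rate to left and right wheel speeds. It uses the ideal differential-drive model and ignores the wheel slip that real skid-steer vehicles rely on, so it is not the team's controller. The class name and the 0.7 m track width are assumptions; the 0.55 m wheel diameter is the R1/R2 value given later in the post.

```python
from dataclasses import dataclass


@dataclass
class SkidSteerBase:
    track_width_m: float      # distance between left and right wheel centers (assumed)
    wheel_diameter_m: float   # wheel diameter

    def wheel_speeds(self, v_m_s, omega_rad_s):
        """Return (left, right) wheel angular speeds in rad/s.

        Ideal no-slip model: both wheels on one side turn at the same
        speed, and a turn is just a speed difference between the sides.
        """
        half_track = self.track_width_m / 2
        v_left = v_m_s - omega_rad_s * half_track
        v_right = v_m_s + omega_rad_s * half_track
        radius = self.wheel_diameter_m / 2
        return v_left / radius, v_right / radius


base = SkidSteerBase(track_width_m=0.7, wheel_diameter_m=0.55)

# Point turn: zero forward speed, so the two sides spin in opposite directions.
left, right = base.wheel_speeds(0.0, 1.0)
print(f"point turn: left={left:.2f} rad/s, right={right:.2f} rad/s")
```

In this model a point turn falls out of the same two equations as straight driving, which is the control simplicity mentioned above.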

Suspension

The next step was to determine the suspension type. A suspension is needed so that all four of the wheels make contact with the ground. If the robot had a static fixed frame only three of the wheels might make contact with the ground when on uneven surfaces. This would reduce our stability and traction.

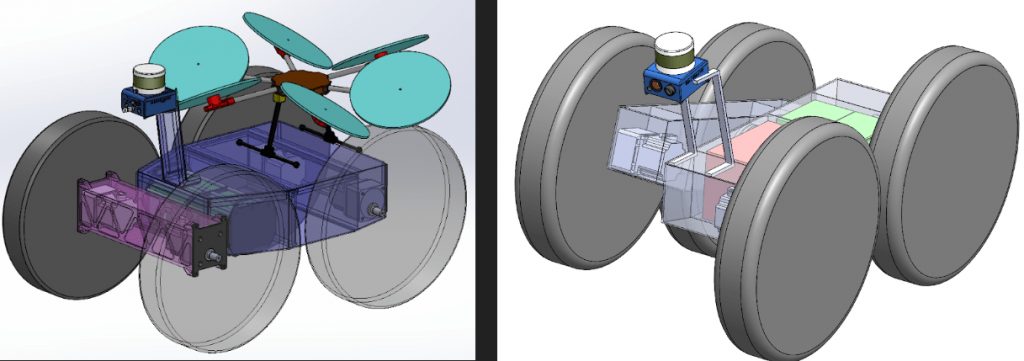

We decided early on that we wanted a passive suspension for the simplicity of not having active components. With a passive suspension we were looking at different types of body averaging. We roughly had two choices: front-pivot or side-to-side.

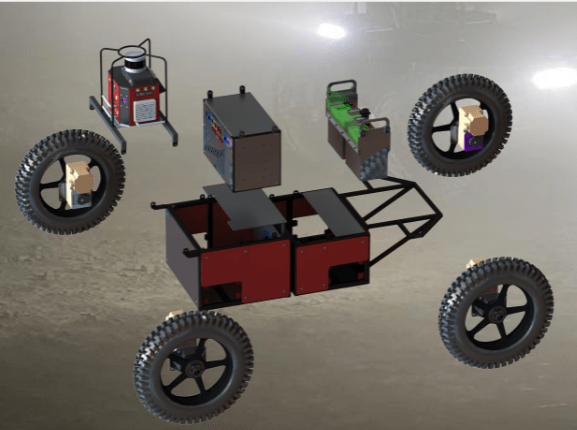

Left image shows a front-pivot approach. Right image shows a side-to-side differencing method.

We chose the front-pivot method; however, we placed the pivot roughly at the center of the vehicle. This allowed us to put all of the electronics in the front and the batteries in the rear. We felt the front-pivot method would be better for climbing up stairs and for climbing over obstacles on level’ish terrain. Importantly, this approach also made it easier to carry a drone on the ground vehicle.

Chassis design

At this point we started designing the chassis. This was an important step so that we could estimate the total weight in order to spec the drive-train. Ideas for the chassis ranged from building with 80/20, to building an aluminum frame and populating it with components, to a solid welded chassis. We chose a welded steel tube chassis for its strength. We needed a robot that could survive anything we did to it. This proved to be a wise decision when the robot crashed or fell over cliffs. The downside of the steel was increased mass.

For the pivot we found a large crossed roller bearing that we were able to use to attach the two steel boxes together. The large bore in the middle was useful for passing wires/cables through for batteries, motors, etc…

Part of the chassis design was also determining where all of the components should mount. Having the batteries (green boxes in image above) in the rear helps us climb obstacles. Other goals were to keep the ground clearance as high as possible while keeping the center of gravity (CG) as low as possible. Since those are competing goals, part of the design process was to develop a happy medium.

In order to maintain modularity for service, each wheel module had the motor controller, motor, gear box, and bearing block as a solid unit that could be swapped between robots if there were any issues. This also allowed most of the wiring to be part of that block. The only cables that needed to be connected to each of the modules from the robot were power, CAN communications, and the emergency stop line, all of which were connectorized.

For electronics on R1 and R2 we built an electronics box that was separate from the robot and could be removed from the robot as needed. On R3 we built the electronics into the robot itself. This modular approach was very useful when we had to do some welding to the chassis post-build for modifications. The downside of the modular approach for electronics was that working in the electronics box was more difficult than in the open R3. Also, the time for fabricating and wiring the R1/R2 electronics boxes was considerably more than for the open R3 electronics. We also had several failures during testing related to the connectors from the electronics boxes.

Wheel design

We debated a lot about what type of wheel to use. Ultimately we used motorcycle wheels due to the simplicity of obtaining them and mounting them. The wheel diameter we desired also lined up very well with motorcycle wheels. We liked the wider tires because they gave better traction and a better ability to climb over obstacles.

R1 and R2 had a wheel diameter of 0.55m, R3 had a wheel diameter of 0.38m. This gave R1 and R2 a ground clearance of 0.2m, and R3 a ground clearance of 0.12m.

The wheel hubs ended up being a different story. We found solid metal rims that we had to machine large amounts of metal out of in order to balance the strength and the weight.

The R1 and R2 robots were around 180kg (400lb)*, but the wheels were designed for a significantly heavier vehicle. As such, we put a small amount of pressure in the tires to keep them from falling off; however, we tried to keep the pressure low to increase the ground compliance of the wheels. This method added only a very small amount of compliance. We tried removing some of the rubber from the sidewalls, but were not able to find a happy medium between limiting wheel deformation during point turns and increasing ground compliance.

We were also concerned about how the motorcycle tires would do when point turning and whether we would rip the tires from the rims. To counter this we installed a beadlock system into each of the wheels. The beadlock was a curved segment installed in multiple places to sandwich the tire to the rim. We never had a tire separate from the rim, so our approach definitely worked; however, it was a pain to install.

*R3 was around 90 kg (200 lbs). We tried using different wheels and tracks to get R3 to climb stairs well. However that story is for another post…

The black rims were solid metal that we machined the wedges into in order to lightweight them. The 3 metal posts in those wedges are the beadlock tensioning bolts. You can also see the castle nut and pin that holds the wheel to the axle. This image is from R2, you can see the gap between the front and rear sections of the robot where the pivot is.

Drive-train selection

Now that we had a mass estimate and system requirements for speed and obstacle clearance, we could start to spec the drive-train. The other piece of information that we needed, and had to discuss with the electrical team, was the voltage of the battery. Different bus voltages greatly affect the motors available for a given speed and torque. We decided on a 51.2 V nominal bus voltage. This presented a problem since it was very hard to find the speed/torques we wanted at that voltage. We ended up selecting a 400W 1/2HP motor+gearbox from Oriental Motor with a parallel 100:1 gearbox that allows us to drive at a maximum speed of 2.5m/s.

The part numbers of the motors and gearbox on R1 and R2 were BLVM640N-GFS + GFS6G100FR.

The part numbers of the motors and gearbox on the smaller R3 were Maxon EC 90 Flat + GP81A.
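As a back-of-the-envelope illustration of how mass, wheel size, and target speed feed into drive-train sizing, the sketch below estimates the wheel speed needed for a given ground speed and the per-wheel torque needed to hold the robot on a slope. The 30 degree slope, the even load split across four wheels, and the function names are assumptions for illustration only; friction, obstacle loads, and efficiency losses are ignored, so real requirements would be higher.

```python
import math

G_M_S2 = 9.81  # standard gravity


def wheel_rpm(speed_m_s, wheel_diameter_m):
    """Wheel rotational speed (rev/min) for a given ground speed, no slip."""
    return speed_m_s / (math.pi * wheel_diameter_m) * 60.0


def climb_torque_per_wheel(mass_kg, slope_deg, wheel_diameter_m, driven_wheels=4):
    """Static torque per wheel (N*m) to hold the robot on a slope.

    Assumes the load is shared equally by all driven wheels.
    """
    force_n = mass_kg * G_M_S2 * math.sin(math.radians(slope_deg))
    return force_n * (wheel_diameter_m / 2) / driven_wheels


# R1/R2 figures from the text: 180 kg, 0.55 m wheels, 2.5 m/s top speed.
print(f"wheel speed at 2.5 m/s: {wheel_rpm(2.5, 0.55):.1f} rpm")
print(f"torque per wheel on an assumed 30 deg slope: "
      f"{climb_torque_per_wheel(180, 30, 0.55):.1f} N*m")
```

With these inputs the wheel speed comes out near 87 rpm and the static torque near 61 N*m per wheel, which gives a feel for the speed/torque pair a motor and gearbox combination has to cover.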

Next steps

Now that we know the mechanics of the robot we can start building it. In the next post we will start looking at the electronics and motor controls. While the nature of the blog makes it seem that this design is a serial process, in reality lots of things are happening in parallel. While the mechanical team is designing the chassis, the electrical team is finding the electrical components needed so that the mechanical team knows what needs to be mounted.

It is also important to work with the electrical team to figure out wire routing while the chassis is being developed.

Editor's note: This post has been merged from the posts “Hands On Ground Robot & Drone Design Series” and “Mechanical & Wheels – Hands On Ground Robot Design”.

New approach to flexible robotics and metamaterials design mimics nature, encourages sustainability

Researchers develop car driving system that can ‘look into the future’ with smart eco mode

Self-healing materials for robotics made from ‘jelly’ and salt

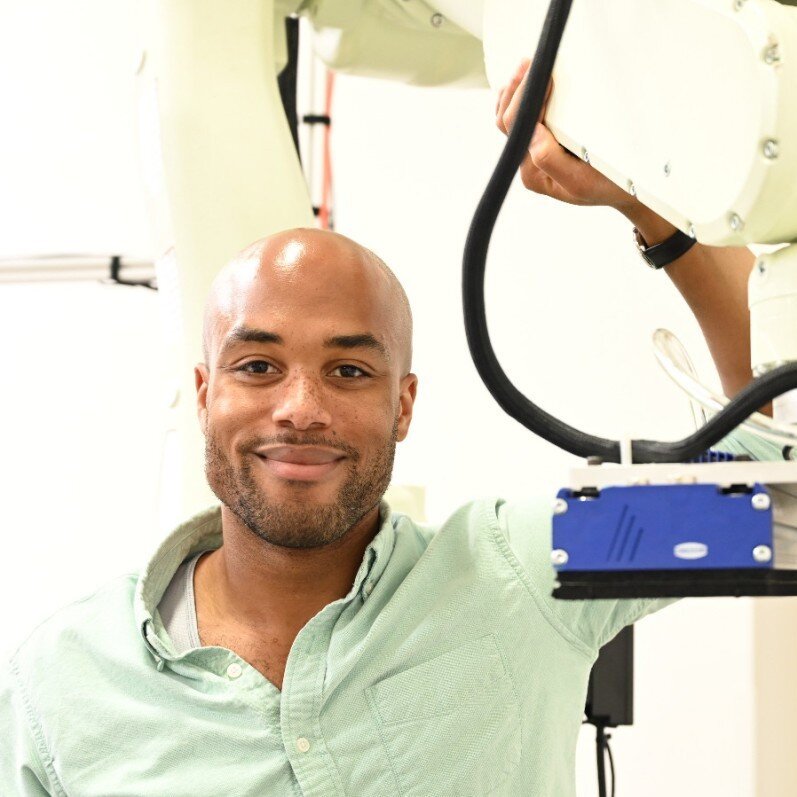

Black in Robotics ‘Meet The Members’ series: Andrew Dupree

Inside of the development studios of San Francisco-based Dexterity, Inc. there is a robot arm that stands as tall as a human. It is placed between a conveyor belt and several wooden pallets, all of which are typical of most warehouse packing facilities. But this is no typical warehouse facility.

This is the location where most warehouse packing facilities would have teams of people manually picking up boxes from the conveyor belt and carefully placing them onto the pallets for wrapping and shipping, but there are no such people here. Instead, as the packages come down the belt, this robot recognizes them, picks them up, and then deposits them onto the target pallet with a gentle touch. It can do all of this on its own, and when a human does enter its vicinity, the robot knows to avoid the human’s area thanks to a “light curtain” sensor.

Andrew Dupree is one of the product managers developing technology like this at Dexterity (p.s. they are hiring!) and, in this ‘Meet the Members’ post, we will look at his journey from pure engineering and working with nonprofits in Haiti to doing product development in the Bay Area for several budding hardware startups.

Andrew’s trajectory

Andrew described his early childhood as that of a typical “blerd” (aka a black nerd). His father was an early adopter of things like personal computers and Andrew vividly remembers his first computer, a Gateway machine, being delivered to the house.

He spent tons of time poring over the device and creating websites with his brother using Angelfire and HTML. This passion for technology would later be combined with a drive to have a social impact at the University of Maryland – College Park. The plan was to do “a Computer Engineering degree focusing on low-cost computers”, but while Andrew worked through courses like Signal Theory, he noted that something seemed to be missing from his experience.

His first attempt to find that “missing piece” was to join a laboratory at UC Berkeley for an internship at their TIER Lab. When the internship was finished and he returned to the East Coast, he remained connected to the local Information and Communications Technology for Developing Countries (ICT4D) community. It was at one of the community’s meetups that he was introduced to a nonprofit working in Haiti and “they actually knew the professor that I’d worked with in Berkeley because it’s a small scene.”

Andrew leveraged this connection to reach out to them with an offer:

I reached out to them and said “Look, I’ve done this internship and I’ve done this work. Are you hiring?”

And they were like “No.”

Then I said, “Are you sure?”

And they said “We’re sure.”

Then I asked “What if I volunteer?”

And they responded “Let’s talk.”

And thus, he had locked in an eight-month experience with the nonprofit Inveneo focusing on setting up computer labs and implementing computer literacy programs in Haiti. There was only one detail that he needed to work out: figuring out what to do with the graduate school admissions offers that had begun to come in. He began to ask around about deferring his admission for a year, with surprising results.

“All of the other grad schools that I asked about deferring [said “No”, but] Stanford actually really bent over backwards to help me defer. They don’t have a proper deferral, but they [found a way] and really hooked me up.”

— Andrew

While Stanford’s graduate school offer was being worked out, he dove into the world of Haiti’s Inveneo which was filled with ups and downs. Andrew humbly summarizes his emotions with “Fixing the world is really hard. I had good days where I felt like ‘I’m really making an impact’ and I had bad days where I felt like ‘My work is doing nothing here. This is a tough challenge.’” When he eventually returned to the United States for his Master’s at Stanford, he decided to focus more on the engineering skills that he had not had the time to focus on while working at the nonprofit. In his engineering classes again, he felt like he was missing something, not accessing a part of himself that he really wanted to.

“… I ended up doing a bunch of design classes. And [specifically the] business management for engineers class, that last one was a huge eye opener for me. Because I realized we were doing case studies, and analyzing business strategies [and more]. I really enjoyed that material.”

— Andrew

Andrew did so well in the class that the professor strong-armed him into TA’ing it the following year. Curious about how he could combine his love for business management with his engineering expertise, Andrew asked this professor what careers would best mix these two worlds. “That’s easy,” the professor explained. “You become a product manager.”

So, as Andrew began his career, he sought out experiences to work with or become a product manager. After working on several important hardware engineering teams, he was able to successfully make the jump and has held the title of product manager (in some form) at multiple Silicon Valley hardware-focused tech companies.

He is currently thrilled to be a product manager at the high growth start-up Dexterity, Inc. which develops “full-stack, end-to-end systems for kitting, picking, packing, counting, sorting, palletizing, and order fulfillment.”

“… Working at a small, high-growth startup is a lot of fun … and I think that robotics in Supply Chain/Logistics is huge. It’s going to be even bigger. … I’ve worked in a bunch of different industries at this point and this is my first experience working in an industry where customers truly have a huge problem and they need you to help them solve it.”

— Andrew

In addition to his work at Dexterity, Andrew is excited to return to his passion for social impact. After reading and reflecting on his multiple experiences (both in industry and with ICT4D), he wants “to continue to think about tangibly [making] steps towards social impact and making the world a better place. I’m not sure what that looks like yet, but I’m excited to figure that out.”

Andrew’s challenges

Discovering Andrew’s inner “product manager” might have been accelerated if he had been more receptive to the idea earlier on. He recalls colleagues teasing him while at the University of Maryland with “Oh, you’re just gonna be a business manager at some point.” The culture of valuing technical expertise above all else encouraged people like him to reject the idea of becoming a business manager or leader. It’s a problem that still exists today and that Andrew would like to counter:

“I think what I would’ve internalized earlier is that there are a lot of ways to contribute in technical areas/technical disciplines [like robotics]. For example, it’s critical to understand the problem that you’re trying to solve, to define that clearly, to communicate that clearly and also to keep engineering efforts focused on an impactful outcome.”

— Andrew

Another challenge that Andrew encountered was attempting to get a Product Manager position with only engineering experience on his resumé. Realizing that he needed to convince potential recruiters of his capabilities, he:

- Showcased his understanding of hardware and business via blog posts on his website,

- Discussed relevant product management events and ideas with others on Twitter, and

- Moonlit as a product manager while also performing his current role (as a project manager)

Feel free to check out his website, where he has authored dozens of blog posts on topics ranging from circular buffers to conducting user research to understanding the options for hardware housings. Using this approach, Andrew successfully showcased an understanding of product management that won him the attention of several interesting hardware teams.

Andrew’s words of wisdom

We asked Andrew to share some of the intangible lessons he has learned through the challenges mentioned above and he had many. Some of the important ones are described below.

One of the lessons that has served Andrew well is the importance of self-help books. As he puts it: “A lot of people have been doing this ‘human’ thing for a while. There’s probably no need to reinvent the wheel.” He specifically picks up books that are pervasive or challenge his current ideas about the world. Some examples of books that have previously landed on his reading list are:

- The 7 Habits of Highly Effective People, by Stephen R. Covey

- Winners Take All: The Elite Charade of Changing the World, by Anand Giridharadas

“You’ve got to separate out some of the nonsense, but being proactive about your personal growth … is something that I’ve been intentional about.”

— Andrew

His plan for becoming a Product Manager was one of the things that he had been intentional about and he established a strategy that was able to get him into the career of his choice!

Andrew also advises against pressuring yourself to always be in a rush, whether it be in robotics or in life.

“One of the things that’s surprised me in hindsight is that there’s a lot of time. There’s no huge rush. It feels like if you have turned twenty-six and haven’t made a certain impact, then [there’s a problem.] But looking back, I see that I’ve worked across a bunch of different industries from Academic Research to Consumer Tech. A couple of different flavors of industrial stuff. And I have had at least three or four different job functions entirely. There really doesn’t need to be a rush.”

— Andrew

More from Andrew

To see more from Andrew, please feel free to go to his website.

Also, Dexterity is growing and might need a Black roboticist like you! Reach out to Andrew on our Slack (or through his contact info above) if you’re interested in a role there!

Acknowledgements

Drafts of this article were corrected and improved by Andrew Dupree, Nosa Edoimioya, and Nailah Seale. All current errors are the fault of Kwesi Rutledge. Please reach out to him if you spot any!

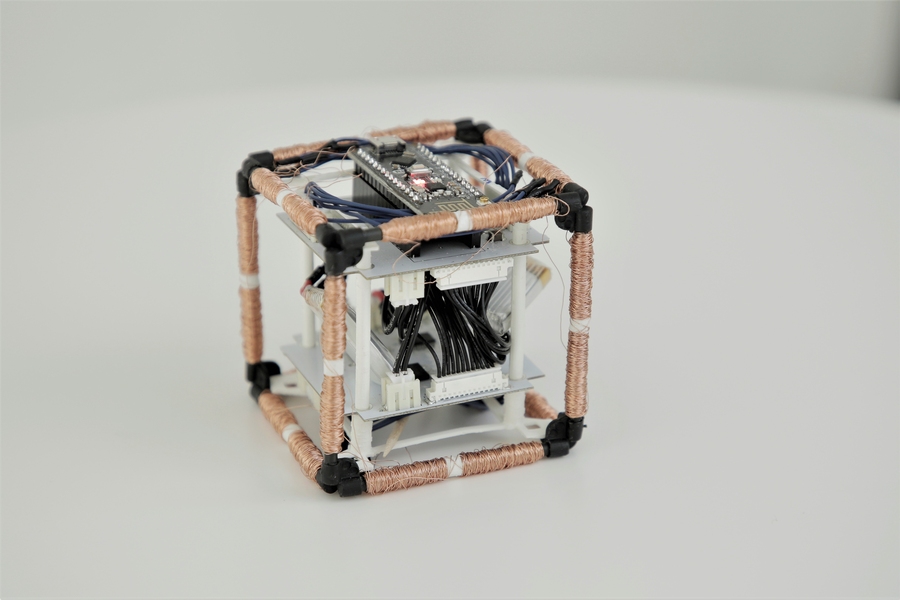

Robotic cubes shapeshift in outer space

MIT PhD student Martin Nisser tests self-reconfiguring robot blocks, or ElectroVoxels, in microgravity. Photo: Steve Boxall/ZeroG

By Rachel Gordon | MIT CSAIL

If faced with the choice of sending a swarm of full-sized, distinct robots to space, or a large crew of smaller robotic modules, you might want to enlist the latter. Modular robots, like those depicted in films such as “Big Hero 6,” hold a special type of promise for their self-assembling and reconfiguring abilities. But for all of the ambitious desire for fast, reliable deployment in domains extending to space exploration, search and rescue, and shape-shifting, modular robots built to date are still a little clunky. They’re typically built from a menagerie of large, expensive motors to facilitate movement, calling for a much-needed focus on more scalable architectures — both up in quantity and down in size.

Scientists from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) called on electromagnetism — electromagnetic fields generated by the movement of electric current — to avoid the usual stuffing of bulky and expensive actuators into individual blocks. Instead, they embedded small, easily manufactured, inexpensive electromagnets into the edges of the cubes that repel and attract, allowing the robots to spin and move around each other and rapidly change shape.

The “ElectroVoxels” have a side length of about 60 millimeters, and the magnets consist of a ferrite core (they look like little black tubes) wrapped with copper wire, totaling a whopping cost of just 60 cents. Inside each cube are tiny printed circuit boards and electronics that send current through the right electromagnet in the right direction.

Unlike traditional hinges that require mechanical attachments between two elements, ElectroVoxels are completely wireless, making them much easier to maintain and manufacture for a large-scale system.

ElectroVoxels are robotic cubes that can reconfigure using electromagnets. The cubes don’t need motors or propellant to move, and can operate in microgravity.

To better visualize what a bunch of blocks would look like while interacting, the scientists used a software planner that visualizes reconfigurations and computes the underlying electromagnetic assignments. A user can manipulate up to a thousand cubes with just a few clicks, or use predefined scripts that encode multiple, consecutive rotations. The system really lets the user drive the fate of the blocks, within reason: you can change the speed, highlight the magnets, and display necessary moves to avoid collisions. You can instruct the blocks to take on different shapes (like a chair to a couch, because who needs both?).

The cheap little blocks are particularly auspicious for microgravity environments, where any structure that you want to launch to orbit needs to fit inside the rocket used to launch it. After initial tests on an air table, ElectroVoxels found true weightlessness when tested in a microgravity flight, with the overall impetus of better space exploration tools like propellant-free reconfiguration or changing the inertia properties of a spacecraft.

By leveraging propellant-free actuation, for example, there’s no need to launch extra fuel for reconfiguration, which addresses many of the challenges associated with launch mass and volume. The hope, then, is that this reconfigurability method could aid myriad future space endeavors: augmentation and replacement of space structures over multiple launches, temporary structures to help with spacecraft inspection and astronaut assistance, and (in future iterations) cubes acting as self-sorting storage containers.

“ElectroVoxels show how to engineer a fully reconfigurable system, and exposes our scientific community to the challenges that need to be tackled to have a fully functional modular robotic system in orbit,” says Dario Izzo, head of the Advanced Concepts Team at the European Space Agency. “This research demonstrates how electromagnetically actuated pivoting cubes are simple to build, operate, and maintain, enabling a flexible, modular and reconfigurable system that can serve as an inspiration to design intelligent components of future exploration missions.”

To make the blocks move, they have to follow a sequence, like little homogeneous Tetris pieces. In this case, there are three steps to the polarization sequence: launch, travel, and catch, with each phase having a traveling cube (for moving), an origin one (where the traveling cube launches), and destination (which catches the traveling cube). Users of the software can specify which cube to pivot in what direction, and the algorithm will automatically compute the sequence and address of electromagnetic assignments required to make that happen (repel, attract, or turn off).
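The launch, travel, and catch sequence can be pictured as a small lookup structure: three phases, three cube roles, and one of three commands per role. The sketch below is only an illustration of that shape. The names (`Command`, `build_sequence`) are hypothetical, and the specific command chosen for each role in each phase is a placeholder, not the assignment used by the researchers' planner.

```python
from enum import Enum


class Command(Enum):
    REPEL = "repel"
    ATTRACT = "attract"
    OFF = "off"


PHASES = ("launch", "travel", "catch")
ROLES = ("traveling", "origin", "destination")

# Placeholder table: these per-phase commands are illustrative guesses,
# not the real electromagnet assignments from the ElectroVoxels work.
EXAMPLE_TABLE = {
    "launch": {"traveling": Command.REPEL, "origin": Command.REPEL,
               "destination": Command.OFF},
    "travel": {"traveling": Command.OFF, "origin": Command.OFF,
               "destination": Command.OFF},
    "catch": {"traveling": Command.ATTRACT, "origin": Command.OFF,
              "destination": Command.ATTRACT},
}


def build_sequence(cubes, table=EXAMPLE_TABLE):
    """Expand one pivot into an ordered list of (phase, {cube_id: Command}).

    `cubes` maps each role name to the id of the cube playing that role.
    """
    missing = set(ROLES) - set(cubes)
    if missing:
        raise ValueError(f"missing roles: {sorted(missing)}")
    sequence = []
    for phase in PHASES:
        step = {cubes[role]: table[phase][role] for role in ROLES}
        sequence.append((phase, step))
    return sequence


for phase, step in build_sequence({"traveling": 7, "origin": 3, "destination": 4}):
    print(phase, {cube: cmd.value for cube, cmd in step.items()})
```

Keeping the table separate from the sequencing logic means the per-phase commands can be swapped for the real ones without touching the code that orders the phases.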

For future work, moving from space to Earth is the natural next step for ElectroVoxels, which would require doing more detailed modeling and optimization of these electromagnets to do reconfiguration against gravity here.

“When building a large, complex structure, you don’t want to be constrained by the availability and expertise of people assembling it, the size of your transportation vehicle, or the adverse environmental conditions of the assembly site. While these axioms hold true on Earth, they compound severely for building things in space,” says MIT CSAIL PhD student Martin Nisser, the lead author on a paper about ElectroVoxels. “If you could have structures that assemble themselves from simple, homogeneous modules, you could eliminate a lot of these problems. So while the potential benefits in space are particularly great, the paradox is that the favorable dynamics provided by microgravity mean some of those problems are actually also easier to solve — in space, even tiny forces can make big things move. By applying this technology to solve real near-term problems in space, we can hopefully incubate the technology for future use on earth too.”

Nisser wrote the paper alongside Leon Cheng and Yashaswini Makaram of MIT CSAIL; Ryo Suzuki, assistant professor of computer science at the University of Calgary; and MIT Professor Stefanie Mueller. They will present the work at the 2022 International Conference on Robotics and Automation. The work was supported, in part, by The MIT Space Exploration Initiative.