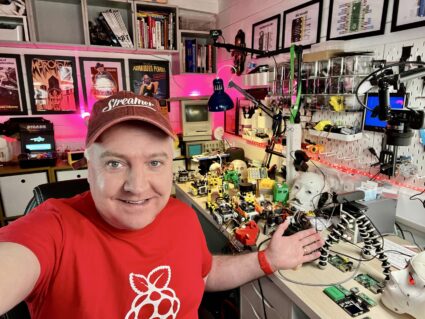

Robot Talk Episode 134 – Robotics as a hobby, with Kevin McAleer

Claire chatted to Kevin McAleer from kevsrobots about how to get started building robots at home.

Kevin McAleer is a hobbyist robotics fanatic who likes to build robots, share videos about them on YouTube and teach people how to do the same. Kev has been building robots since 2019, when he got his first 3D printer and wanted to make more interesting builds. Kev has a degree in Computer Science, and because his day job is relatively hands-off, this hobby gives his creativity an outlet. Kev is a huge fan of Python and MicroPython for embedded devices, and has a website, kevsrobots.com, where you can learn more about how to get started in robotics.

New Encoder Display from US Digital

How modified robotic prosthetics could help address hip and back problems for amputees

How we’re bringing AI image verification to the Gemini app

Build with Nano Banana Pro, our Gemini 3 Pro Image model

Introducing Nano Banana Pro

Designing Human-Centric Automation: The Next Evolution of Robotics in the Supply Chain

Real-world helper exoskeletons come closer to reality with AI training

Microrobots overcome navigational limitations with the help of ‘artificial spacetimes’

Expert comment: How concerned should we be about ‘carebots?’

Decorating plastics with robotic precision

ACM SIGAI Autonomous Agents Award 2026 open for nominations

Nominations are solicited for the 2026 ACM SIGAI Autonomous Agents Research Award. This award is made for excellence in research in the area of autonomous agents. It is intended to recognize researchers in autonomous agents whose current work is an important influence on the field. The award is an official ACM award, funded by an endowment created by ACM SIGAI from the proceeds of previous Autonomous Agents conferences. The recipient of the award will receive a monetary prize and a certificate, and will be invited to present a plenary talk at the AAMAS 2026 conference.

How to nominate

Anyone can make a nomination. Nominations should be made by filling out this Google form, and should consist of a short (< 1 page) statement that emphasizes not only the research contributions that merit the award but also how the individual’s current work is an important influence on the field.

Note: a candidate can only be considered for the award if they are explicitly nominated. If you believe that someone deserves the award, then nominate them – don’t assume that somebody else will!

Nomination link is here.

Important Dates

15 December 2025 – Deadline for nominations

1 February 2026 – Announcement of winner

25-29 May 2026 – AAMAS 2026 conference in Paphos, Cyprus

For any questions, please contact Edith Elkind (Award Chair) or Louise Dennis (SIGAI Vice Chair).