A Generalist Agent

BYOL tutorial: self-supervised learning on CIFAR images with code in Pytorch

A reconfigurable robotic system for cleaning and maintenance

ABB Robotics unveils world’s first robot-painted art car

Is AI-generated art really creative? It depends on the presentation

A new robotic system for automated laundry

Intelligent Robotics for Laundries Closes Automation Gap

Swiss Robotics Day showcases innovations and collaborations between academia and industry

As the next edition of the Swiss Robotics Day is in preparation in Lausanne, let’s revisit the November 2021 edition, where the vitality and richness of Switzerland’s robotics scene was on full display at StageOne Event and Convention Hall in Zurich. It was the first edition of NCCR Robotics’s flagship event after the pandemic, and it surpassed the scale of previous editions, drawing in almost 500 people. You can see the photo gallery here.

Welcome notes from ETH President Joël Mesot and NCCR Robotics Director Dario Floreano opened a dense conference programme chaired by NCCR Robotics co-Director Robert Riener. It included scientific presentations from Marco Hutter (ETH Zurich), Stéphanie Lacour and Herb Shea (both from EPFL), as well as the industry perspective from ABB’s Marina Bill, Simon Johnson from the Drone Industry Association and Hocoma co-founder Gery Colombo. A final roundtable – including Robert Riener, Hocoma’s Serena Maggioni, Liliana Paredes from Rehaklinik and Georg Rauter from the University of Basel – focused on the potential and the challenges of innovation in healthcare robotics.

Over 50 exhibitors – including scientific laboratories as well as start-ups and large companies – filled the 3,300 square-meter venue, demonstrating technologies ranging from mobile robots to wearable exoskeletons, from safe delivery drones to educational robots and much more. Sixteen young companies presented their innovations in a start-up carousel. Dozens of professional meetings took place throughout the day, allowing a diverse audience of entrepreneurs, funders, academics and policy makers to network and explore possible collaborations. A crowd of young researchers participated in a mentoring session where Marina Bill (ABB), Auke Ijspeert (EPFL) and Iselin Frøybu (Emovo Care) provided advice on academic and industrial careers. Sixteen students participated in the Cybathlon @school competition, experimenting with robotic technologies for disability, and the competition closed with the official announcement of CYBATHLON 2024.

During the event, the next chapter for Swiss robotics was also announced: the launch of the NTN Innovation Booster on robotics, which will run from 2022 to 2025 and will be led by EPFL’s Aude Billard. Funded by Innosuisse, the NTN will act as a platform for new ideas and partnerships, supporting innovation through “idea generator bubbles” and specific funding calls.

The 2021 Swiss Robotics Day marked the beginning of NCCR Robotics’s final year. The project, launched in 2010, is on track to meet all its scientific goals in the three areas of wearable, rescue and educational robotics, while continuing to focus on supporting spin-offs, advancing robotics education and improving equality of opportunities for all robotics researchers. The conclusion of NCCR Robotics will be marked by the next edition of the Swiss Robotics Day, a larger, two-day public event that will take place in Lausanne on 4 and 5 November 2022.

Wearable robotics

The goal of the NCCR Grand Challenge on Wearable Robotics is to develop a novel generation of wearable robotic systems, which will be more comfortable for patients and more extensively usable in a clinical environment. These new technological solutions will help in the recovery of movement and grasping after cardiovascular accidents and spinal cord lesions. They can be used to enhance physiotherapy by improving training, thus encouraging the brain to repair networks (neurorehabilitation). And they can be used as assistive devices (e.g. prosthetic limbs and exoskeletons) to support paralysed people in daily life situations.

While current wearable robots are making huge advances in the lab, there is some way to go before they become part of everyday life for people with disabilities. In order to be functional, robots must work with the user and not cause damage or irritation (in the case of externally worn devices) or be rejected by the host (in the case of implants); they must have their own energy source that does not need to be constantly plugged in or recharged; and they need to be affordable.

Rescue robotics

After a natural disaster such as an earthquake or flood, it is often very dangerous for teams of rescue workers to go into affected areas to look for victims and survivors.

The idea behind robots for rescue activities is to create robust robots that can travel into areas too dangerous for humans and rescue dogs. Robots can be used to assess the situation and to locate people who may be trapped and to relay the location back to the rescue teams, so that all efforts can be concentrated on areas where victims are known to be. Robots are also being developed to carry medical supplies and food, thereby focusing resources where they are most needed.

The main research issues within the field of mobile robotics for search and rescue missions are the durability and usability of robots: how to design robots that are easily transported, can function efficiently in all weather conditions and have long-lasting power, and robots that can navigate by themselves and have sensors effective enough to pick out victims.

Educational robotics

In the 1970s and 1980s, robots were typically introduced in schools as a tool for teaching robotics or other Science, Technology, Engineering and Mathematics (STEM) subjects. However, this specificity held back their adoption for wider educational purposes. This early failure to adopt robots in classrooms happened because they were unreliable, expensive and limited in their applications.

Nowadays, with robots being cheaper and more easily deployable, applications in education have become easier. In the past fifteen years, there has been an increasing number of extracurricular robotics activities, showing the popularity of robotics in an informal educational context. However, robots are still underused in schools for formal education. Although there is no agreement over the exact reasons for this situation, it seems clear, from different studies, that teachers play a key role in the introduction of technology in schools.

During the first two phases of NCCR Robotics, two products were developed: the Thymio robot, a mobile robot increasingly used to teach robotics and programming, and Cellulo, a small, inexpensive and robust robot that kids can move with their hands and use in groups.

Current research focuses on two aspects. The first one is inventing new forms of interactions between learners and tangible swarms based on the Cellulo robot, and studying the learning outcomes enabled by these interactions.

The second aspect is investigating teacher adoption of robotics from two points of view: platform usability and teacher training. Research will show how to train teachers and exploit Thymio and Cellulo in their daily activities and how to minimize their orchestration load. Activities relating to computational thinking skills are the main target, with school topics outside the STEM domains also included.

This robot lives with an Antarctica penguin colony, monitoring their every move

XAG promotes drones in Vietnam to boost rice farming while cutting fertilizer use

Afreez Gan: Open Source Robot Dog, Kickstarter, and Home Robots | Sense Think Act Podcast #18

In this episode, Audrow Nash speaks to Afreez Gan, who is the founder and CEO of MangDang; MangDang is a Chinese startup that makes Minipupper, an open source robot dog that uses the Robot Operating System (ROS). Minipupper was inspired by and built with lessons learned from the Stanford Pupper, an open source robot dog. In this interview, Afreez speaks about Minipupper’s hardware, software, and documentation, about manufacturing Minipupper, and about his eventual goal of building a home robot dog.

Episode Links

- Download the episode

- Afreez’s LinkedIn

- MangDang’s website

- Minipupper’s Kickstarter

- MangDang’s Github

Podcast info

How Does the "Goods-To-Person" Method Improve Warehouse Efficiency?

GelBot – A new 3D printing method to tackle sustainability in soft robots

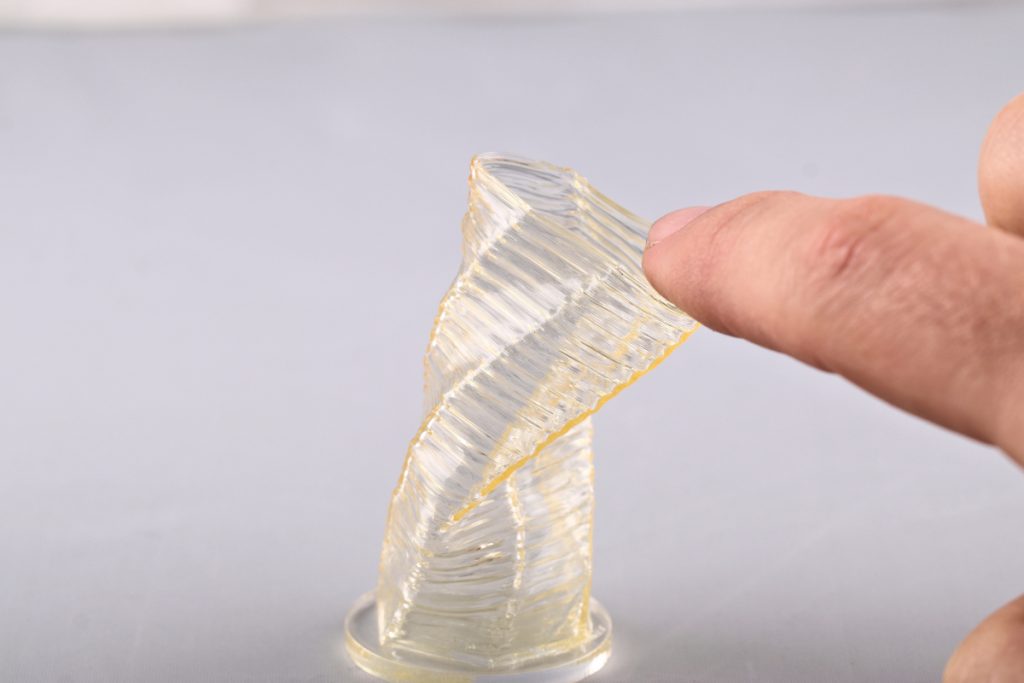

3D-printed vase deformed by human finger touch.

Future generations of robots will work very differently from those that assemble entire vehicles or solder electronics onto circuit boards at lightning speed on factory floors today. They will leave the factory halls and start working with people, handing them a tool at the right moment or assisting them in assembling heavy components. They will appear in agriculture, helping harvest the fields or process the fruits. And they will increasingly be found in living rooms, supporting and entertaining people there or simply making them feel less alone.

Of course, these robots will also look different from the enormous metallic contraptions found in today’s industrial plants. Their appearance will change along with their new functions. Whenever they come into contact with people, they will be gentle and soft so that they won’t hurt anyone – and “soft” here actually means that they are made of conformable materials; that their surface is elastic, flexible and stretchable. Of course, at the same time they are equipped with comprehensive sensor technology that immediately registers every touch and every approach, in order to be able to react appropriately. Today, the development of these soft electronics and robotics mostly relies on synthetic materials such as silicone elastomers – a rubber with very good elastic properties but of fossil origin. This also means that if soft robots become as omnipresent in the future as smartphones are today, the tech-waste grows substantially once again. This raises the question: Where are the biodegradable alternatives? And if they exist, how can we make truly empowering robots that move, sense, and react to their environment?

Martin Kaltenbrunner, head of the Soft Materials Laboratory at the Johannes Kepler University in Linz, Austria, investigates such sustainable alternatives for our future technologies. His team focuses on technologies that interface with the human body and, therefore, are equally soft and conformable. Wearable electronics, stretchable energy supplies, and biomimetic robots are just a few examples. And by addressing sustainability, his team adds a new twist to these soft technologies.

The scientific breakthrough was achieved in 2020, when Kaltenbrunner and his team discovered a frugal way to make biodegradable gels (biogels) extremely resilient and durable, yet still vanish when disposed of. Based on the abundant biopolymer gelatin, their material had properties and performance similar to those of non-degradable silicone rubbers, paving the way for its use in soft robots.

Now, the doctoral students Andreas Heiden and David Preninger have built a system to 3D print this biogel into complex shapes. They have printed finger-like robots that use intricate sensor networks to sense their own deformation and also objects in their surroundings. Together with Florian Hartmann, materials engineer at EPFL, they have published their research in the renowned journal Science Robotics.

Nature as a source of inspiration

Soft robotics greatly benefits from nature as a source of inspiration, introducing innate means of safe interaction between robotic appliances and living organisms. Kaltenbrunner soon realized that despite soft robotics being largely inspired by nature, an inherent “feature” of nature’s creations was missing: biodegradability. Once a soft robot has reached its end-of-life, there is often no simple way to recycle its components or treat the waste in an environmentally friendly manner.

Introducing biodegradable materials to soft robotics seems to be the logical solution, but existing materials were just not durable enough or were difficult to process. Kaltenbrunner and his team were driven to engineer bioderived materials, such as gels based on the biopolymer gelatin, that can match the performance of conventional synthetic elastomers yet degrade fully after their intended use, leaving essentially no trace of having existed. About two years ago, in work published in the journal Nature Materials, they optimized such gels for use in on-skin electronics and soft robots. Built from naturally occurring, degradable yet durable building blocks, the result is a broadly applicable gelatin-based biogel that unites the challenging needs of resilient yet sustainable (soft) robots in a single platform. It is highly stretchable and elastic, and it is thermoplastic: an attribute that lets the material melt when heated and makes it perfectly suitable for 3D printing.

Complex shapes through 3D printing

The deformable structure of soft robots poses challenges in fabrication and assembly. Unlike conventional robots that are screwed together from individual parts, soft robots are manufactured as monolithic blocks. To this end, 3D printing is a versatile fabrication strategy that also allows complex objects to be produced. Heiden and Preninger designed a custom system based on Fused Deposition Modeling (FDM) to print their biogel. FDM is one of the most common 3D printing methods nowadays and is based on fusing melted polymers that become solid again when cooled. For printing the biogel, the material is melted in a medical syringe and squeezed through the tip, which leads to the deposition of a biogel “thread” that solidifies quickly after extrusion. In this manner, several two-dimensional layers are drawn one after another and stacked on top of each other to form the three-dimensional object.
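To make the layer-by-layer idea concrete, here is a minimal sketch of how a toolpath for a simple hollow cylinder could be built as a stack of two-dimensional rings. It is not the researchers' printing software: the function name `cylinder_layers`, the dimensions and the layer height are all hypothetical values chosen for illustration.

```python
import math


def cylinder_layers(radius_mm, height_mm, layer_height_mm, points_per_layer=36):
    """Return a list of layers for a hollow cylinder.

    Each layer is a list of (x, y, z) points tracing one circular perimeter.
    All parameters are illustrative assumptions, not values from the article.
    """
    if radius_mm <= 0 or height_mm <= 0 or layer_height_mm <= 0:
        raise ValueError("all dimensions must be positive")

    # Number of stacked 2D layers needed to reach the requested height.
    n_layers = max(1, round(height_mm / layer_height_mm))

    layers = []
    for i in range(n_layers):
        z = (i + 1) * layer_height_mm  # height of the top of this layer
        ring = []
        for k in range(points_per_layer):
            angle = 2 * math.pi * k / points_per_layer
            ring.append((radius_mm * math.cos(angle),
                         radius_mm * math.sin(angle),
                         z))
        layers.append(ring)
    return layers


if __name__ == "__main__":
    path = cylinder_layers(radius_mm=5.0, height_mm=10.0, layer_height_mm=0.5)
    print(f"{len(path)} layers, {len(path[0])} points per layer")
```

A real printer would additionally need extrusion rates, temperatures and travel moves; the sketch only shows how a three-dimensional shape reduces to a sequence of flat outlines at increasing heights.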

XYZ calibration cube and gummybear model printed from gelatin-based biogel ink.

But what happens if a print fails? Usually, you would throw away your print and restart. Having a biodegradable solution, you do not even have to worry about waste production. Alongside this eco-friendly fabrication approach using biodegradable materials, we introduced an additional reuse cycle where the biogel is reprinted up to 5 times, maintaining more than 70% of the initial performance metrics. Utilizing such approaches for a circular economy will enable more sustainable solutions for materials that are less degradable.

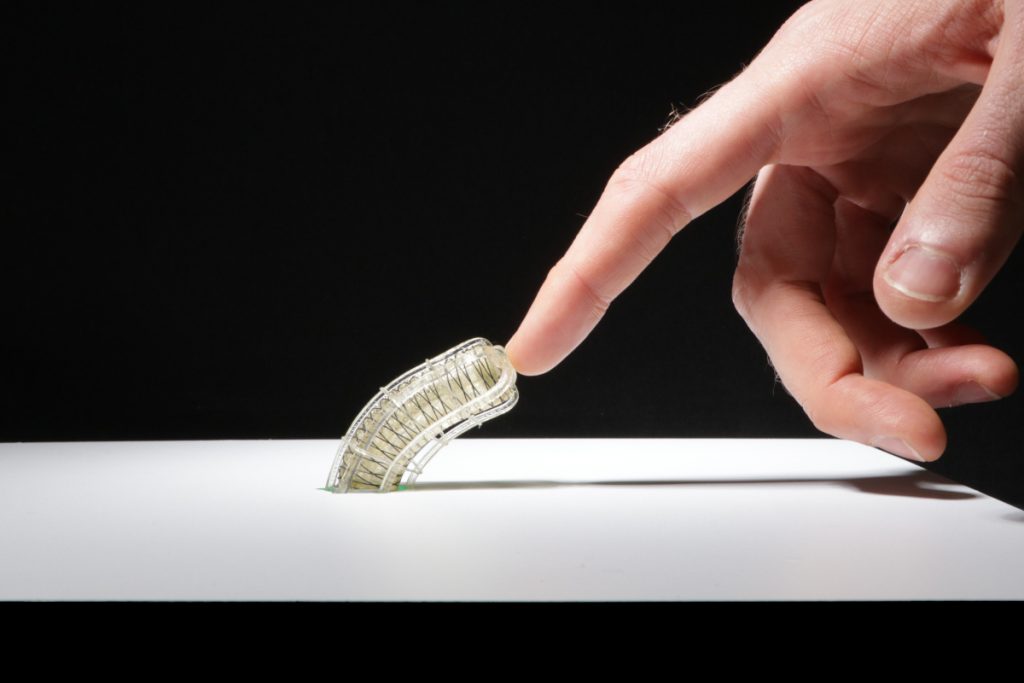

Omnidirectional actuators with perception

By extending the fabrication of biodegradable gels to 3D printing, the researchers were able to produce versatile soft actuators in various complex shapes and even include integrated sensor networks to let them interact with their environment. In their Science Robotics publication, they demonstrated a finger-like actuator that is powered by pressurized air and can bend in any direction, similar to an elephant's trunk or an octopus tentacle. A combination of three inflatable chambers within the actuator and the use of a cotton-textile reinforcement make this motion possible.
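One way to picture how three chambers can produce bending in any direction is the simplified model sketched below. It rests on assumptions that do not come from the article: the chambers are taken to sit 120° apart around the actuator's axis, inflating a chamber is assumed to lengthen that side so the actuator bends away from it, and the function name `chamber_pressures` and the pressure limit are hypothetical.

```python
import math

# Assumed layout: three chambers spaced 120 degrees apart around the axis.
CHAMBER_ANGLES = [0.0, 2 * math.pi / 3, 4 * math.pi / 3]


def chamber_pressures(bend_direction_rad, bend_amount, max_pressure_kpa=50.0):
    """Map a desired bending direction to three chamber pressures.

    bend_amount is a fraction in [0, 1]; max_pressure_kpa is a made-up limit.
    """
    if not 0.0 <= bend_amount <= 1.0:
        raise ValueError("bend_amount must be between 0 and 1")

    # Assumption: the actuator bends away from the inflated side,
    # so we push from the direction opposite to the desired bend.
    push_direction = bend_direction_rad + math.pi

    # How well each chamber is aligned with the push direction.
    weights = [math.cos(a - push_direction) for a in CHAMBER_ANGLES]

    # Shift so the least useful chamber stays at zero pressure. Because the
    # three chamber directions cancel out, this shift does not change the
    # direction of the net push. Dividing by sqrt(3) keeps every pressure
    # within max_pressure_kpa.
    lowest = min(weights)
    scale = bend_amount * max_pressure_kpa / math.sqrt(3)
    return [scale * (w - lowest) for w in weights]


if __name__ == "__main__":
    for degrees in (0, 90, 180, 270):
        pressures = chamber_pressures(math.radians(degrees), 1.0)
        print(degrees, [round(p, 1) for p in pressures])
```

In this model, at most two chambers are pressurized at any time, and sweeping `bend_direction_rad` through a full circle moves the net push smoothly around the axis.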

Fully actuated 3-chamber actuator with integrated sensor network detects human finger touch.

Additionally, the actuator features a distributed sensor network that is based on light transmission through transparent materials. These sensors acquire information on the actuator’s own bending state, and also on impact with objects in its surroundings. Without having eyes, this robot is able to detect an obstacle and remove it from its vicinity. The function of this single robotic element demonstrates that both motion and sensing can be achieved with sustainable material and fabrication solutions, without making large compromises on performance. And once it is no longer of use, it can simply be disposed of. Immersing it in water triggers the swelling and dissolution of the biogel and, in the presence of enzymes, complete decomposition.
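As a rough illustration of sensing through light transmission, the sketch below flags samples where the measured light intensity departs noticeably from a resting baseline. The article does not describe the researchers' signal processing, so the function name `detect_events`, the normalized readings and the 15% threshold are all hypothetical.

```python
def detect_events(readings, baseline, threshold=0.15):
    """Return the indices of readings that deviate from the baseline.

    readings  : transmitted-light intensities (arbitrary units, assumed)
    baseline  : intensity measured with the actuator at rest (assumed)
    threshold : relative deviation that counts as bending or contact
                (a made-up value for illustration)
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return [
        i for i, value in enumerate(readings)
        if abs(value - baseline) / baseline > threshold
    ]


if __name__ == "__main__":
    samples = [1.0, 0.98, 0.7, 0.95, 1.3]
    print(detect_events(samples, baseline=1.0))  # prints [2, 4]
```

Telling apart the actuator's own bending from contact with an object would require more than a single threshold, for example comparing several sensors along the actuator; the sketch only covers the basic idea of detecting a change in transmitted light.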