Versatile building blocks make structures with surprising mechanical properties

By David L. Chandler

Researchers at MIT’s Center for Bits and Atoms have created tiny building blocks that exhibit a variety of unique mechanical properties, such as the ability to produce a twisting motion when squeezed. These subunits could potentially be assembled by tiny robots into a nearly limitless variety of objects with built-in functionality, including vehicles, large industrial parts, or specialized robots that can be repeatedly reassembled in different forms.

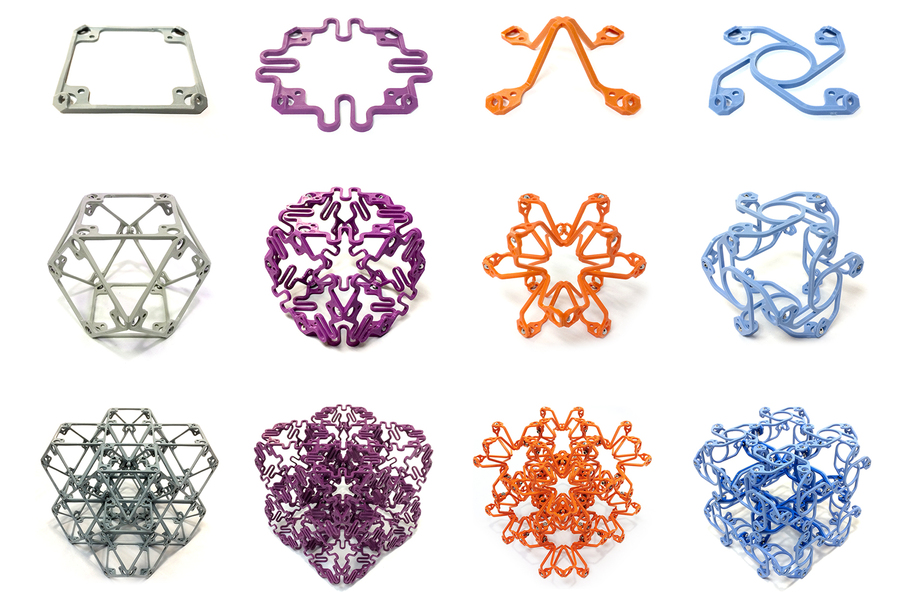

The researchers created four different types of these subunits, called voxels (a 3D variation on the pixels of a 2D image). Each voxel type exhibits special properties not found in typical natural materials, and in combination they can be used to make devices that respond to environmental stimuli in predictable ways. Examples might include airplane wings or turbine blades that respond to changes in air pressure or wind speed by changing their overall shape.

The findings, which detail the creation of a family of discrete “mechanical metamaterials,” are described in a paper published in the journal Science Advances, authored by recent MIT doctoral graduate Benjamin Jenett PhD ’20, Professor Neil Gershenfeld, and four others.

“This remarkable, fundamental, and beautiful synthesis promises to revolutionize the cost, tailorability, and functional efficiency of ultralight, materials-frugal structures,” says Amory Lovins, an adjunct professor of civil and environmental engineering at Stanford University and founder of Rocky Mountain Institute, who was not associated with this work.

Metamaterials get their name because their large-scale properties are different from the microlevel properties of their component materials. They are used in electromagnetics and as “architected” materials, which are designed at the level of their microstructure. “But there hasn’t been much done on creating macroscopic mechanical properties as a metamaterial,” Gershenfeld says.

With this approach, engineers should be able to build structures incorporating a wide range of material properties — and produce them all using the same shared production and assembly processes, Gershenfeld says.

The voxels are assembled from flat frame pieces of injection-molded polymers, then combined into three-dimensional shapes that can be joined into larger functional structures. They are mostly open space and thus provide an extremely lightweight but rigid framework when assembled. Besides the basic rigid unit, which provides an exceptional combination of strength and light weight, there are three other variations of these voxels, each with a different unusual property.

The “auxetic” voxels have a negative Poisson ratio, a strange property: when a cube of the material is compressed, its sides bulge inward instead of outward. This is the first demonstration of such a material produced through conventional and inexpensive manufacturing methods.

There are also “compliant” voxels, with a zero Poisson ratio. This is somewhat similar to the auxetic property, but in this case the sides do not change shape at all when the material is compressed. Few known materials exhibit this property, which can now be produced through this new approach.
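Both behaviors are usually described with Poisson's ratio, defined as the negative ratio of lateral strain to axial strain, ν = -ε_lateral / ε_axial. A conventional material squeezed along one axis bulges out sideways (ν > 0), an auxetic one pulls in (ν < 0), and a zero-Poisson one keeps its width (ν = 0). The sketch below is only an illustration of that definition, not code from the study; the function names and the strain values in the example are hypothetical.

```python
def poisson_ratio(axial_strain: float, lateral_strain: float) -> float:
    """Return Poisson's ratio nu = -lateral_strain / axial_strain."""
    if axial_strain == 0:
        raise ValueError("axial strain must be nonzero")
    return -lateral_strain / axial_strain


def classify(nu: float, tol: float = 1e-9) -> str:
    """Label the lateral response implied by a Poisson's ratio."""
    if nu < -tol:
        return "auxetic"        # sides move inward when compressed
    if abs(nu) <= tol:
        return "zero-Poisson"   # sides keep their shape when compressed
    return "conventional"       # sides bulge outward when compressed


if __name__ == "__main__":
    # Hypothetical 1% compression (negative axial strain) with three lateral responses.
    for lateral in (0.003, 0.0, -0.002):
        nu = poisson_ratio(-0.01, lateral)
        print(f"lateral strain {lateral:+.3f}: nu = {nu:+.2f} ({classify(nu)})")
```

A small tolerance is used in `classify` because measured or floating-point strains are rarely exactly zero.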

Finally, “chiral” voxels respond to axial compression or stretching with a twisting motion. Again, this is an uncommon property; research that produced one such material through complex fabrication techniques was hailed last year as a significant finding. This work makes this property easily accessible at macroscopic scales.

“Each type of material property we’re showing has previously been its own field,” Gershenfeld says. “People would write papers on just that one property. This is the first thing that shows all of them in one single system.”

To demonstrate the real-world potential of large objects constructed in a LEGO-like manner out of these mass-produced voxels, the team, working in collaboration with engineers at Toyota, produced a functional super-mileage race car, which they demonstrated on a race track during an international robotics conference earlier this year.

They were able to assemble the lightweight, high-performance structure in just a month, Jenett says, whereas building a comparable structure using conventional fiberglass construction methods had previously taken a year.

During the race, the track was slick from rain, and the race car ended up crashing into a barrier. To the surprise of everyone involved, the car’s lattice-like internal structure deformed and then bounced back, absorbing the shock with little damage. A conventionally built car, Jenett says, would likely have been severely dented if it were made of metal, or shattered if it were made of composite.

The car provided a vivid demonstration of the fact that these tiny parts can indeed be used to make functional devices at human-sized scales. And, Gershenfeld points out, in the structure of the car, “these aren’t parts connected to something else. The whole thing is made out of nothing but these parts,” except for the motors and power supply.

Because the voxels are uniform in size and composition, they can be combined in any way needed to provide different functions for the resulting device. “We can span a wide range of material properties that before now have been considered very specialized,” Gershenfeld says. “The point is that you don’t have to pick one property. You can make, for example, robots that bend in one direction and are stiff in another direction and move only in certain ways. And so, the big change over our earlier work is this ability to span multiple mechanical material properties, that before now have been considered in isolation.”

Jenett, who carried out much of this work as the basis for his doctoral thesis, says “these parts are low-cost, easily produced, and very fast to assemble, and you get this range of properties all in one system. They’re all compatible with each other, so there’s all these different types of exotic properties, but they all play well with each other in the same scalable, inexpensive system.”

“Think about all the rigid parts and moving parts in cars and robots and boats and planes,” Gershenfeld says. “And we can span all of that with this one system.”

A key factor is that a structure made up of one type of these voxels will behave exactly the same way as the subunit itself, Jenett says. “We were able to demonstrate that the joints effectively disappear when you assemble the parts together. It behaves as a continuum, monolithic material.”

Whereas robotics research has tended to be divided between hard and soft robots, “this is very much neither,” Gershenfeld says, because of its potential to mix and match these properties within a single device.

One of the possible early applications of this technology, Jenett says, could be building the blades of wind turbines. As these structures become ever larger, transporting the blades to their operating site becomes a serious logistical issue; if instead they are assembled from thousands of tiny subunits, that job can be done at the site, eliminating the transportation issue. Similarly, the disposal of used turbine blades is already becoming a serious problem because of their large size and lack of recyclability. But blades made up of tiny voxels could be disassembled on site, and the voxels then reused to make something else.

In addition, the blades themselves could be more efficient, because they could have a mix of mechanical properties designed into the structure that would allow them to respond dynamically and passively to changes in wind strength, he says.

Overall, Jenett says, “Now we have this low-cost, scalable system, so we can design whatever we want to. We can do quadrupeds, we can do swimming robots, we can do flying robots. That flexibility is one of the key benefits of the system.”

Stanford’s Lovins says that this technology “could make inexpensive, durable, extraordinarily lightweight aeronautical flight surfaces that passively and continuously optimize their shape like a bird’s wing. It could also make automobiles’ empty mass more nearly approach their payload, as their crashworthy structure becomes mostly air. It may even permit spherical shells whose crush strength allows a vacuum balloon (with no helium) buoyant in the atmosphere to lift a couple of dozen times the net payload of a jumbo jet.”

He adds, “Like biomimicry and integrative design, this new art of cellular metamaterials is a powerful new tool for helping us do more with less.”

The research team included Filippos Tourlomousis, Alfonso Parra Rubio, and Megan Ochalek at MIT, and Christopher Cameron at the U.S. Army Research Laboratory. The work was supported by NASA, the U.S. Army Research Laboratory and the Center for Bits and Atoms Consortia.

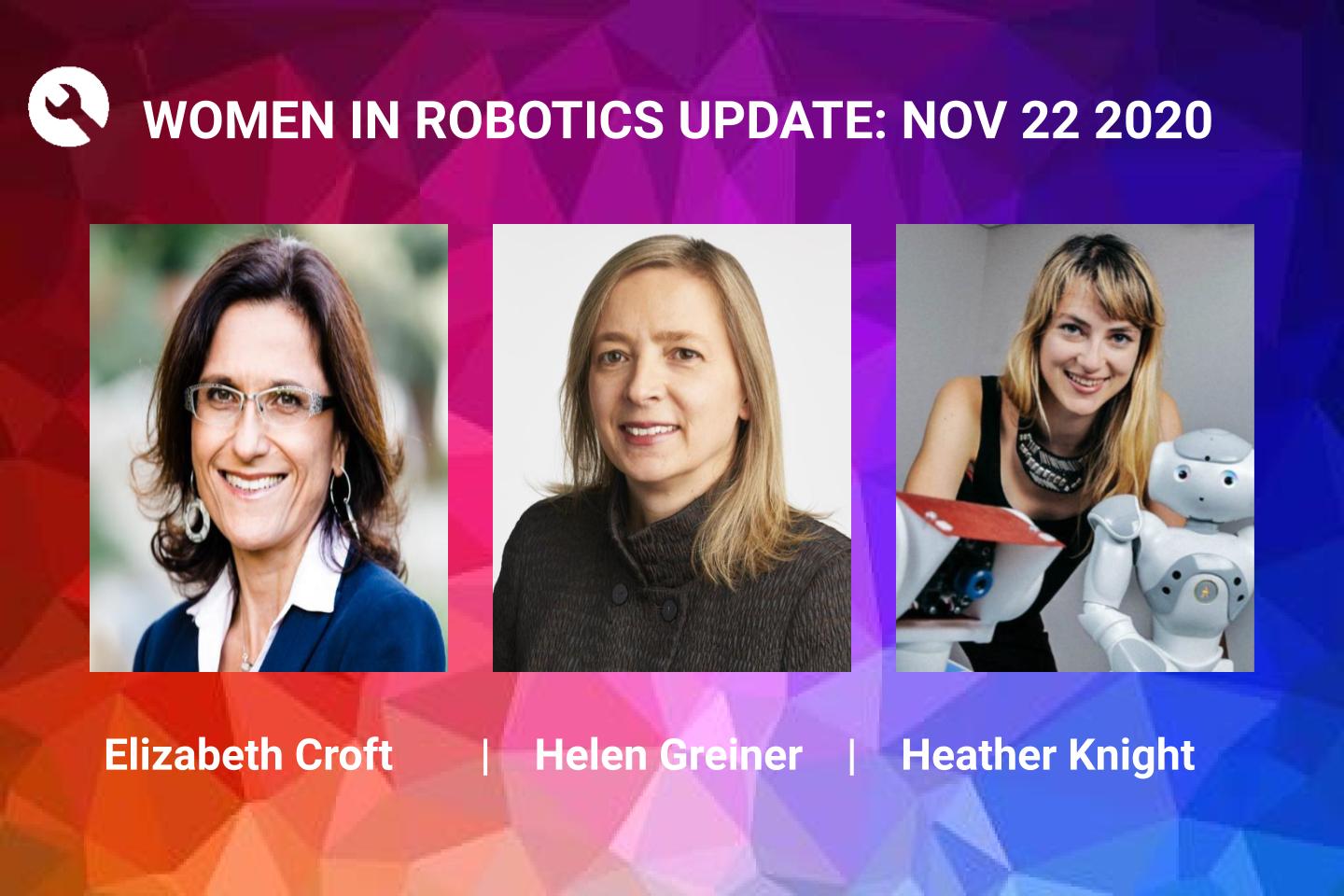

Women in Robotics Update: Elizabeth Croft, Helen Greiner, Heather Knight

“Fearless Girl should provide the spark to inspire more female engineers,” writes Elizabeth Croft, Dean of Engineering at Monash University.

“Girls are natural engineers, highly capable in maths and physics. We need to show them that these tools can be used to design a better world. So far, we’ve done a poor job of communicating to girls the very powerful impact they can make through an engineering career,” Croft continues. Her words inspired us to introduce the second installment of our new series of Women in Robotics Updates, featuring Elizabeth Croft, Helen Greiner and Heather Knight from our first “25 women in robotics you need to know about” list in 2013.

Elizabeth Croft

Dean of Engineering at Monash University

Elizabeth Croft (featured 2013) is now Dean of Engineering at Monash University in Australia, advancing human-robot interaction, industrial robotics, trajectory generation and diversity in STEM. Previously she was the Founding Director of the Collaborative Advanced Robotics and Intelligent Systems Laboratory at the University of British Columbia (UBC). As the Marshall Bauder Professor in Engineering Economics, Business and Management Training from 2015 to 2017, she launched the Master of Engineering Leadership degrees at UBC. Recognized by the Women’s Executive Network in 2014 as one of the 100 most powerful women in Canada, Croft also received the RA McLachlan Peak Career Award for Professional Engineering in the Province of British Columbia in 2018. She is a fellow of the ASME, Engineers Australia, Engineers Canada, and the Canadian Academy of Engineering. Her other awards include the Wendy MacDonald Award (Diversity Champion) from the Vancouver Board of Trade in 2016 and the Just Desserts Award from the University of British Columbia’s Alma Mater Society in 2015. She has more than 200 research publications and almost 6,000 citations. An advocate for women in engineering, she has an exceptional record of increasing women’s representation and participation in the field. As the Natural Sciences and Engineering Research Council Chair for Women in Science and Engineering (2010-2015), she worked with partners in funding agencies, industry, academia and the education system on comprehensive strategies to improve women’s participation and retention in STEM disciplines at all levels. During this period, Croft successfully increased female enrollment in engineering to 30%.

Helen Greiner

CEO of Tertill, Cofounder of iRobot and Founder of CyPhy Works

Helen Greiner (featured in 2013) is now a founder of Tertill, in addition to founding iRobot and CyPhy Works (aka Aria Insights). Tertill is a solar-powered, weed-snipping robot that patrols home gardens throughout the day. It looks somewhat like an outdoor Roomba, the iRobot product that became the world’s first commercially successful consumer robot. Greiner has received numerous awards and accolades, including being named an “Innovator for the Next Century” by Technology Review magazine. She received the DEMO God Award at the DEMO Conference in 2014 and was named a Presidential Ambassador for Global Entrepreneurship (PAGE) by US President Barack Obama and US Secretary of Commerce Penny Pritzker. She was recognized by the National Academy of Engineering for leadership in the design, development, and application of practical robots, and was named “woman of the year” at Wentworth Institute of Technology in 2018. In 2018, she was also sworn in as a Highly Qualified Expert for the US Army. You can hear her speak in 2021 at the finals of the $2m GoFly competition. As a child, Greiner became fascinated by the robots of Star Wars, particularly the spunky, three-foot-tall R2-D2. Says Greiner: “He had moods, emotions, and dare I say, his own agenda. This was exciting to me—he was a creature, an artificial creature.” Having consistently pioneered helper robots for dull, dirty and dangerous jobs, and having worked to launch robotics into the consumer market since 2008, she says, “If we don’t take robots to the next level, we’ll have a lot of explaining to do to our grandchildren.”

Heather Knight

Assistant Professor at Oregon State University

Heather Knight (featured 2013) is now an Assistant Professor in the Computer Science department at Oregon State University, where she directs the CHARISMA Research Lab. There, she operationalizes methods from the performing arts to make more emotive and engaging robots, exploring minimal social robots, robot ethics, charismatic machines, and multi-robot/multi-human social interaction. In 2010, Knight gave a TED talk, “Silicon-Based Comedy,” in which she demonstrated a stand-up comedian robot named Data; the talk has been viewed almost 1 million times. She was named to Forbes’s 30 Under 30 list in Science and was one of AdWeek’s top 100 creatives in 2017. In 2017, she was also a Robotic Artist in Residence at X, the Moonshot Factory. Her installations have been featured at the Smithsonian-Cooper Hewitt Design Museum, TED, Pop!Tech, LACMA, SIGGRAPH, and the Fortezza da Basso in Florence, Italy. She is also the Assistant Director of Robotics at Humanity+, a fellow at the Hybrid Realities Institute, and a National Science Foundation (NSF) Fellow. In addition, she is a founder of Marilyn Monrobot, a robot theater company performing comedy, dance and even Rube Goldberg machine installations. Through it, she organizes the annual Robot Film Festival, which awards “Botskers” to robot films and robot film stars. The film archives make for great viewing.

Want to keep reading? There are 180 more stories on our 2013 to 2020 lists. Why not nominate someone for inclusion next year?

And we encourage #womeninrobotics and women who’d like to work in robotics to join our professional network at http://womeninrobotics.org

Robotics sector brings robotics to the public in annual European showcase

European Robotics Week 2020 (ERW2020) began on Thursday and hundreds of interactive robotics events for the public have been announced. These will take place in countries across Europe and beyond, to show how robots will impact the way we work, live, and learn.

In a year when humanity has faced a global pandemic crisis, robotics companies and researchers across Europe have been able to demonstrate how robotics helps societies and economies keep operating in a world affected by Covid-19.

With the opportunities arising from Europe’s digital transformation, driven by new technologies like artificial intelligence, robotics, cloud computing and blockchain, the demand for ICT specialists continues to grow. In the future, 9 out of 10 jobs will require digital skills (source). Yet fewer women than men take up ICT-related jobs and education: for every 1000 women, only 24 graduate in digital fields (source). European Robotics Week (ERW) promotes digital skills for everyone, for use everywhere, whether at school, at university or on the job, with an emphasis on inspiring girls to pursue STEM-related careers.

What is happening in Europe?

Since 2011, European Robotics Week has been encouraging robotics researchers, universities and industry to open their doors to the public to raise awareness of the contribution that robots can make to our society. In 2020, despite the limits on physical events imposed by restrictions to tackle the spread of Covid, organisers have been resourceful in arranging hundreds of activities throughout Europe and beyond.

See the full list of activities: www.robotics-week.eu

ERW Central Event 23-25 November, Sarajevo, Bosnia and Herzegovina (BiH), ONLINE

Every year the ERW chooses a location to host the Central Event, with an opening ceremony and an ecosystem of engaging activities. This year’s Central Event is hosted in Sarajevo, Bosnia and Herzegovina, from 23 to 25 November. The ERW2020 Central Event will be delivered entirely digitally, meaning that a great many people both within BiH and beyond, including children, will be able to take part in ERW2020’s online fair, workshops, webinars, lectures and competitions.

Under the theme Robotics for Humanity, the Central Event of ERW2020 in Bosnia and Herzegovina will focus on the positive effects of robotics and technology on everyday life, the economy and health, taking particular account of the challenges arising from the global COVID-19 pandemic and technology’s potential to address them.

The event will also mark the 10th anniversary of European Robotics Week, and it will coincide with the European Researchers’ Night celebrated on 27 November. It will be run by the Association for promotion of culture, science and cinematography BALKANFILM and the Info Centre of the European Union to Bosnia and Herzegovina.

Background of European Robotics Week

ERW grew out of the European robotics community’s desire to bring robotics research and development closer to the public and to build the future Robotics Society. More than 550,000 people across Europe have taken part in ERW since its first edition in 2011. The European Robotics Week is organised under SPARC, the public-private partnership for robotics between euRobotics and the European Commission.

European Robotics Week 2020 (ERW2020) takes place across Europe and beyond during 19-29 November 2020.

Social media

- Hashtag #ERW2020

- Twitter: twitter.com/eu_Robotics twitter.com/SPARCrobotics

- Facebook: www.facebook.com/euRobotics www.facebook.com/SPARCrobotics

- LinkedIn: www.linkedin.com/company/eurobotics-aisbl

- Youtube: www.youtube.com/user/euRobotics