Meta’s Ray-Ban Smart Glasses: A Bold Leap Towards a Connected Future

The advent of Meta’s Ray-Ban smart glasses marks a transformative moment in wearable technology. Equipped with live AI and real-time translation, these gadgets are more than eyewear; they’re a window into the future of augmented reality (AR). Meta’s innovation signals an exciting convergence of AI, AR, and wearable tech, setting the stage for what’s to...

The post Meta’s Ray-Ban Smart Glasses: A Bold Leap Towards a Connected Future appeared first on 1redDrop.

Crossing the Uncanny Valley: Researchers develop technology for lifelike facial expressions in androids

Accelerate data preparation and AI collaboration at scale

Speed, scale, and collaboration are essential for AI teams — but limited structured data, compute resources, and centralized workflows often stand in the way.

Whether you’re a DataRobot customer or an AI practitioner looking for smarter ways to prepare and model large datasets, new tools like incremental learning, optical character recognition (OCR), and enhanced data preparation will eliminate roadblocks, helping you build more accurate models in less time.

Here’s what’s new in the DataRobot Workbench experience:

- Incremental learning: Efficiently model large data volumes with greater transparency and control.

- Optical character recognition (OCR): Instantly convert unstructured scanned PDFs into usable data for predictive and generative AI use cases.

- Easier collaboration: Work with your team in a unified space with shared access to data prep, generative AI development, and predictive modeling tools.

Model efficiently on large data volumes with incremental learning

Building models with large datasets often leads to surprise compute costs, inefficiencies, and runaway expenses. Incremental learning removes these barriers, allowing you to model on large data volumes with precision and control.

Instead of processing an entire dataset at once, incremental learning runs successive iterations on your training data, using only as much data as needed to achieve optimal accuracy.

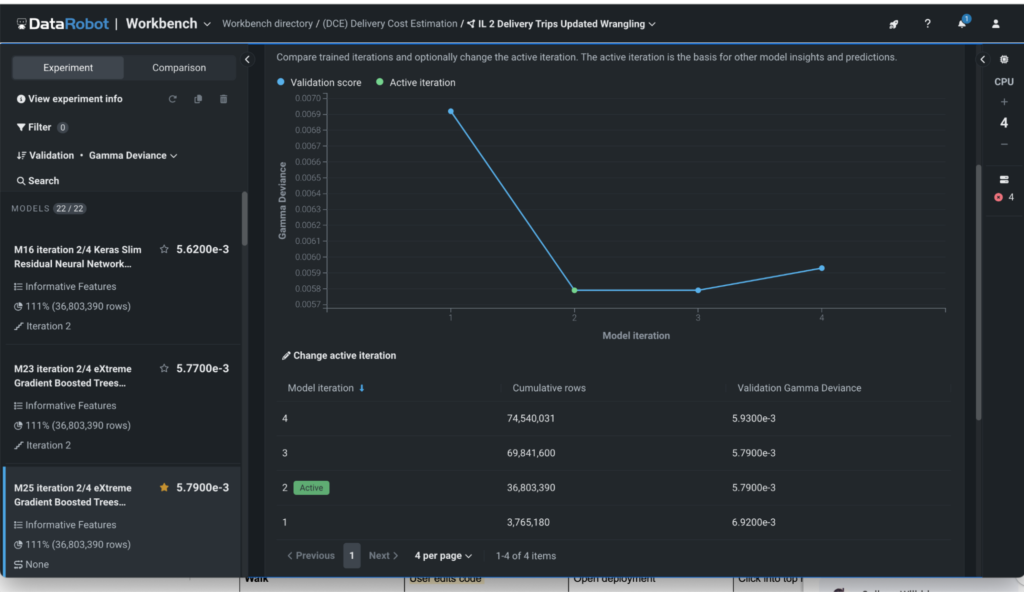

Each iteration is visualized on a graph (see Figure 1), where you can track the number of rows processed and accuracy gained — all based on the metric you choose.
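The loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DataRobot's implementation: the function names, the toy linear model, the synthetic data, and the `min_gain` threshold are all hypothetical. Each iteration adds one chunk of rows, rescores the model on a holdout set, and stops once the improvement falls below the threshold.

```python
import random

def fit_line(stats):
    """Least-squares slope and intercept from running sums (n, sum x, sum y, sum x^2, sum xy)."""
    n, sx, sy, sxx, sxy = stats
    slope = (n * sxy - sx * sy) / (n * sxx - sx * sx)
    return slope, (sy - slope * sx) / n

def rmse(model, rows):
    """Root-mean-square error of a (slope, intercept) model on (x, y) rows."""
    slope, intercept = model
    return (sum((y - slope * x - intercept) ** 2 for x, y in rows) / len(rows)) ** 0.5

def incremental_fit(chunks, holdout, min_gain=0.05):
    """Add one chunk per iteration; stop when the holdout gain drops below min_gain."""
    stats = [0, 0.0, 0.0, 0.0, 0.0]
    history = []  # one (rows_used, holdout_rmse) entry per iteration
    for chunk in chunks:
        for x, y in chunk:
            stats[0] += 1
            stats[1] += x
            stats[2] += y
            stats[3] += x * x
            stats[4] += x * y
        score = rmse(fit_line(stats), holdout)
        history.append((stats[0], score))
        # Diminishing returns: the latest chunk barely improved the metric.
        if len(history) > 1 and history[-2][1] - score < min_gain:
            break
    return fit_line(stats), history

# Synthetic data (hypothetical): y = 2x + 1 plus Gaussian noise.
rng = random.Random(0)

def make_rows(n):
    rows = []
    for _ in range(n):
        x = rng.uniform(0, 10)
        rows.append((x, 2 * x + 1 + rng.gauss(0, 1)))
    return rows

holdout = make_rows(500)
chunks = [make_rows(500) for _ in range(20)]
model, history = incremental_fit(chunks, holdout)
for rows_used, score in history:
    print(f"{rows_used:>6} rows  holdout RMSE = {score:.4f}")
```

The `history` list plays the role of the per-iteration graph: it records how many rows were processed and what the chosen metric looked like at each step.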

Key advantages of incremental learning:

- Only process the data that drives results

Incremental learning stops jobs automatically when diminishing returns are detected, ensuring you use just enough data to achieve optimal accuracy. In DataRobot, each iteration is tracked, so you’ll clearly see how much data yields the strongest results. You are always in control and can customize and run additional iterations to get it just right.

- Train on just the right amount of data

Incremental learning helps prevent overfitting by iterating on smaller samples, so your model learns patterns rather than memorizing the training data.

- Automate complex workflows

Keep data provisioning fast and error-free. Advanced code-first users can go one step further and streamline retraining by using saved weights to process only new data. This avoids the need to rerun the entire dataset from scratch, reducing errors from manual setup.

When to best leverage incremental learning

There are two key scenarios where incremental learning drives efficiency and control:

- One-time modeling jobs

You can customize early stopping on large datasets to avoid unnecessary processing, prevent overfitting, and ensure data transparency.

- Dynamic, regularly updated models

For models that react to new information, advanced code-first users can build pipelines that add new data to training sets without a complete rerun.

Unlike other AI platforms, DataRobot uses incremental learning to give you control over large data jobs, making them faster, more efficient, and less costly.
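For the regularly updated scenario, the idea of resuming from saved state and processing only new rows can be sketched as follows. This is an illustrative stand-in, not DataRobot's pipeline: the "weights" here are the running sums of a simple least-squares line, and the file name, function names, and sample rows are all hypothetical.

```python
import json
import os
import tempfile

def new_state():
    """Empty running sums for a one-feature least-squares model."""
    return {"n": 0, "sx": 0.0, "sy": 0.0, "sxx": 0.0, "sxy": 0.0}

def update(state, rows):
    """Fold new (x, y) rows into the running sums; earlier rows are not revisited."""
    for x, y in rows:
        state["n"] += 1
        state["sx"] += x
        state["sy"] += y
        state["sxx"] += x * x
        state["sxy"] += x * y
    return state

def coefficients(state):
    """Least-squares slope and intercept implied by the running sums."""
    n, sx, sy = state["n"], state["sx"], state["sy"]
    slope = (n * state["sxy"] - sx * sy) / (n * state["sxx"] - sx * sx)
    return slope, (sy - slope * sx) / n

old_rows = [(1, 3), (2, 5), (3, 7)]  # rows seen in the first training run
new_rows = [(4, 9), (5, 11)]         # rows that arrived later

# First run: train, then persist the model state.
path = os.path.join(tempfile.mkdtemp(), "model_state.json")
with open(path, "w") as f:
    json.dump(update(new_state(), old_rows), f)

# Later run: reload the saved state and process only the new rows.
with open(path) as f:
    resumed = update(json.load(f), new_rows)

# Reference: a full retrain over every row yields the same model.
full = update(new_state(), old_rows + new_rows)
print(coefficients(resumed), coefficients(full))
```

The resumed model matches the full retrain because the saved state already summarizes the old rows, which is the property that makes a complete rerun unnecessary.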

How optical character recognition (OCR) prepares unstructured data for AI

Lack of access to large quantities of usable data can be a barrier to building accurate predictive models and powering retrieval-augmented generation (RAG) chatbots. This is especially true because 80-90% of company data is unstructured, which can be challenging to process. OCR removes that barrier by turning scanned PDFs into a usable, searchable format for predictive and generative AI.

How it works

OCR is a code-first capability within DataRobot. By calling the API, you can transform a ZIP file of scanned PDFs into a dataset of text-embedded PDFs. The extracted text is embedded directly into the PDF document, ready to be accessed by document AI features.
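The API call itself is not shown here, since its signature is not given in this post. What can be sketched safely is the input-preparation step: bundling a folder of scanned PDFs into a single ZIP file. The helper name, folder layout, and placeholder files below are assumptions for illustration only.

```python
import tempfile
import zipfile
from pathlib import Path

def bundle_pdfs(source_dir, zip_path):
    """Write every .pdf under source_dir into one ZIP archive; return the names added."""
    source = Path(source_dir)
    added = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for pdf in sorted(source.rglob("*.pdf")):
            name = pdf.relative_to(source).as_posix()
            archive.write(pdf, arcname=name)
            added.append(name)
    return added

# Demo with placeholder files (hypothetical names); real input would be scanned PDFs.
workdir = Path(tempfile.mkdtemp())
scans = workdir / "scans"
scans.mkdir()
for filename in ("invoice_001.pdf", "invoice_002.pdf", "notes.txt"):
    (scans / filename).write_bytes(b"placeholder")

bundled = bundle_pdfs(scans, workdir / "scans.zip")
print(bundled)
```

Non-PDF files are skipped, so the archive contains only documents the OCR step is meant to process.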

How OCR can power multimodal AI

Our new OCR functionality isn’t just for generative AI or vector databases. It also simplifies the preparation of AI-ready data for multimodal predictive models, enabling richer insights from diverse data sources.

Multimodal predictive AI data prep

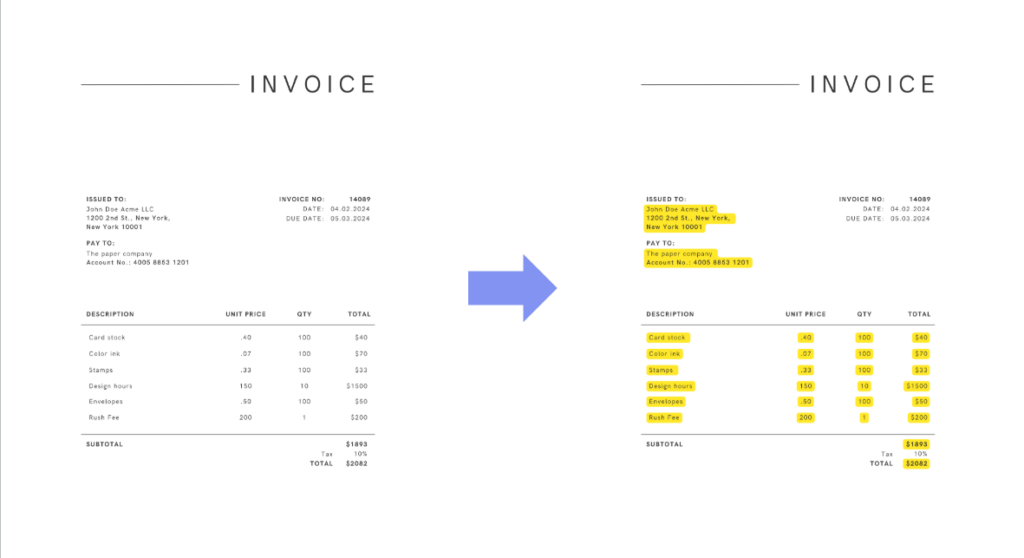

Rapidly turn scanned documents into a dataset of PDFs with embedded text. This allows you to extract key information and build features for your predictive models using document AI capabilities.

For example, say you want to predict operating expenses but only have access to scanned invoices. By combining OCR, document text extraction, and an integration with Apache Airflow, you can turn these invoices into a powerful data source for your model.

Powering RAG LLMs with vector databases

Large vector databases support more accurate retrieval-augmented generation (RAG) for LLMs, especially when supported by larger, richer datasets. OCR plays a key role by turning scanned PDFs into text-embedded PDFs, making that text usable as vectors to power more precise LLM responses.

Practical use case

Imagine building a RAG chatbot that answers complex employee questions. Employee benefits documents are often dense and difficult to search. By using OCR to prepare these documents for generative AI, you can enrich an LLM, enabling employees to get fast, accurate answers in a self-service format.
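The retrieval step behind such a chatbot can be illustrated with a toy example. This sketch assumes the text has already been extracted from the documents, and it substitutes bag-of-words counts and an in-memory list for a real embedding model and vector database. The passages, the question, and the function names are invented for illustration.

```python
import math
import re
from collections import Counter

def vectorize(text):
    """Bag-of-words term counts; a stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, index, top_k=1):
    """Return the top_k passages most similar to the query."""
    q = vectorize(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:top_k]]

# Hypothetical passages, as if extracted from benefits documents.
passages = [
    "Dental coverage includes two cleanings per year.",
    "Employees accrue vacation days each month.",
    "The retirement plan matches contributions up to a limit.",
]
index = [(p, vectorize(p)) for p in passages]

question = "How many dental cleanings are covered?"
context = retrieve(question, index)
prompt = f"Answer using only this context:\n{context[0]}\n\nQuestion: {question}"
print(prompt)
```

In a real system the retrieved passage would be passed to the LLM as context, which is why richer, searchable source text tends to produce more precise answers.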

WorkBench migrations that boost collaboration

Collaboration can be one of the biggest blockers to fast AI delivery, especially when teams are forced to work across multiple tools and data sources. DataRobot’s NextGen WorkBench solves this by unifying key predictive and generative modeling workflows in one shared environment.

This migration means that you can build both predictive and generative models using both a graphical user interface (GUI) and code-based notebooks and codespaces, all in a single workspace. It also brings powerful data preparation capabilities into the same environment, so teams can collaborate on end-to-end AI workflows without switching tools.

Accelerate data preparation where you develop models

Data preparation often takes up to 80% of a data scientist’s time. The NextGen WorkBench streamlines this process with:

- Data quality detection and automated data healing: Identify and resolve issues like missing values, outliers, and format errors automatically.

- Automated feature detection and reduction: Automatically identify key features and remove low-impact ones, reducing the need for manual feature engineering.

- Out-of-the-box visualizations of data analysis: Instantly generate interactive visualizations to explore datasets and spot trends.

Improve data quality and visualize issues instantly

Data quality issues like missing values, outliers, and format errors can slow down AI development. The NextGen WorkBench addresses this with automated scans and visual insights that save time and reduce manual effort.

Now, when you upload a dataset, automatic scans check for key data quality issues, including:

- Outliers

- Multicategorical format errors

- Inliers

- Excess zeros

- Disguised missing values

- Target leakage

- Missing images (in image datasets only)

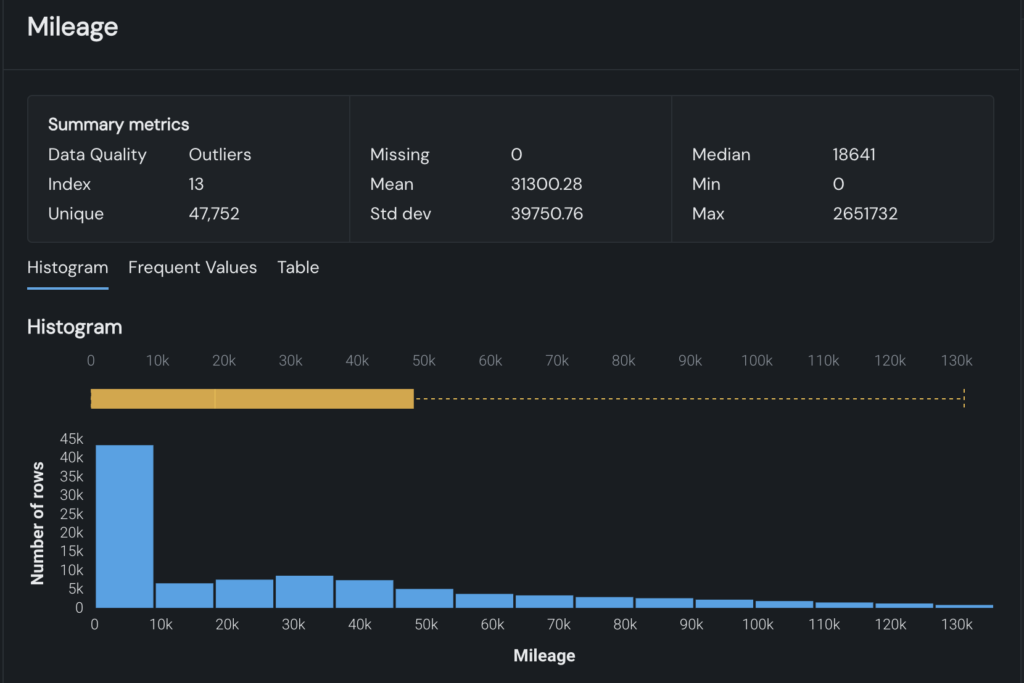

- PII

These data quality checks are paired with out-of-the-box EDA (exploratory data analysis) visualizations. New datasets are automatically visualized in interactive graphs, giving you instant visibility into data trends and potential issues, without having to build charts yourself. Figure 3 demonstrates how quality issues are highlighted directly within the graph.
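To make a few of the checks listed above concrete, here is a rough sketch of how disguised missing values, excess zeros, and outliers might be flagged. The placeholder tokens, thresholds, and the interquartile-range rule are assumptions chosen for illustration, not the rules DataRobot applies.

```python
import statistics

# Placeholder tokens often used in place of a real missing value (assumed list).
DISGUISED_MISSING = {"", "n/a", "na", "null", "none", "unknown", "-999"}

def disguised_missing(values):
    """Return entries that look like placeholders rather than real data."""
    return [v for v in values if str(v).strip().lower() in DISGUISED_MISSING]

def has_excess_zeros(values, max_share=0.5):
    """Flag a numeric column when zeros exceed max_share of its rows."""
    return sum(1 for v in values if v == 0) / len(values) > max_share

def iqr_outliers(values, k=1.5):
    """Return values lying more than k interquartile ranges outside the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    low, high = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    return [v for v in values if v < low or v > high]

# Small hypothetical columns to exercise each check.
print(disguised_missing(["42", "N/A", "-999", "17"]))
print(has_excess_zeros([0, 0, 0, 5, 0]))
print(iqr_outliers([10, 12, 11, 13, 12, 11, 10, 95]))
```

Each check returns either the offending values or a flag, which is the kind of result a visualization layer could then highlight.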

Automate feature detection and reduce complexity

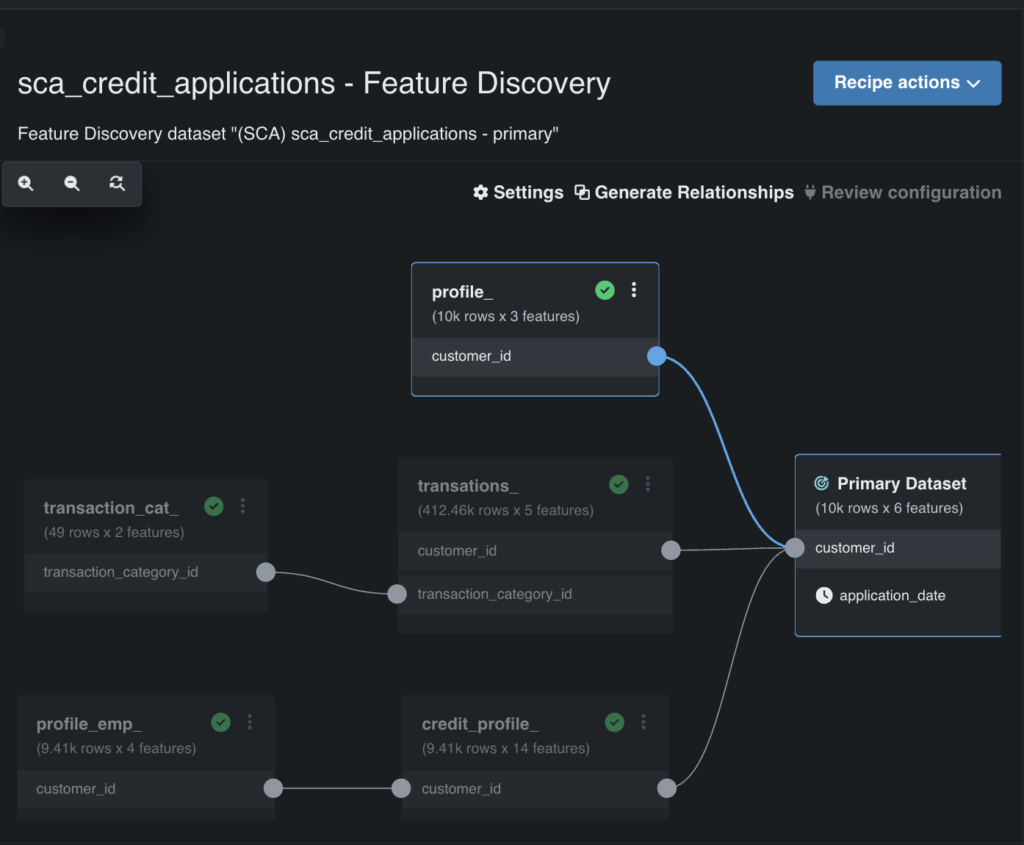

Automated feature detection helps you simplify feature engineering, making it easier to join secondary datasets, detect key features, and remove low-impact ones.

This capability scans all your secondary datasets to find similarities — like customer IDs (see Figure 4) — and enables you to automatically join them into a training dataset. It also identifies and removes low-impact features, reducing unnecessary complexity.

You maintain full control, with the ability to review and customize which features are included or excluded.
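The join-and-prune idea can be sketched with plain Python dictionaries. This is a simplified illustration with hypothetical tables and helper names: join keys are guessed from shared column names with overlapping values, and "low impact" is approximated by dropping columns that never vary, which is far cruder than a real feature-importance measure.

```python
def shared_key_columns(primary, secondary):
    """Columns present in both tables whose values overlap; candidate join keys."""
    common = set(primary[0]) & set(secondary[0])
    return sorted(
        col for col in common
        if {row[col] for row in primary} & {row[col] for row in secondary}
    )

def left_join(primary, secondary, key):
    """Attach the matching secondary row (if any) to each primary row."""
    lookup = {row[key]: row for row in secondary}
    return [{**row, **lookup.get(row[key], {})} for row in primary]

def drop_constant_features(rows, keep=()):
    """Remove columns with a single distinct value (a simple proxy for low impact)."""
    constant = [c for c in rows[0] if c not in keep and len({r.get(c) for r in rows}) == 1]
    return [{c: v for c, v in r.items() if c not in constant} for r in rows]

# Hypothetical primary and secondary datasets.
customers = [
    {"customer_id": 1, "region": "EU", "churned": 0},
    {"customer_id": 2, "region": "EU", "churned": 1},
    {"customer_id": 3, "region": "EU", "churned": 0},
]
orders = [
    {"customer_id": 1, "order_count": 4},
    {"customer_id": 2, "order_count": 1},
    {"customer_id": 3, "order_count": 7},
]

keys = shared_key_columns(customers, orders)
training = drop_constant_features(left_join(customers, orders, keys[0]), keep=("churned",))
print(keys, training)
```

The `keep` argument mirrors the review step: it lets you protect columns, such as the target, from automatic removal.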

Don’t let slow workflows slow you down

Data prep doesn’t have to take 80% of your time. Disconnected tools don’t have to slow your progress. And unstructured data doesn’t have to be out of reach.

With NextGen WorkBench, you have the tools to move faster, simplify workflows, and build with less manual effort. These features are already available to you — it’s just a matter of putting them to work.

If you’re ready to see what’s possible, explore the NextGen experience in a free trial.

The post Accelerate data preparation and AI collaboration at scale appeared first on DataRobot.

Top Viewed Articles of 2024

ChatGPT Getting Smarter — Again

New Upgrade Promised for Early 2025

ChatGPT’s maker OpenAI is promising to release yet another upgrade to one of the AI engines that helps power the chatbot — dubbed OpenAI o3.

The new AI engine performs three times better than its predecessor, OpenAI o1, and that’s when OpenAI o3 is running on its ‘low compute’ setting.

The new AI engine is even more impressive when it runs on its ‘high compute’ setting, according to OpenAI.

Lead writer Maxwell Zeff reports that ChatGPT-maker OpenAI plans to release the new AI engine in early 2025.

In other news and analysis on AI writing:

*In-Depth Guide: Google’s New Deep Research Tool: AI expert Paul O’Malley offers a crystal-clear, easy-paced, step-by-step video of how to use a powerful new AI research tool from Google.

Dubbed Google ‘Deep Research,’ the new tool begins its research for you by developing an in-depth, research game-plan for any topic you fancy.

Once you approve the plan — which you can edit beforehand — the AI scurries off to the Web, visiting and analyzing hundreds — or more — Web sites to put together a deeply reasoned, well-written report on its findings and analysis.

One caveat: Some reviewers have found that while Deep Research is accurate overall, the tool sometimes misconstrues nuances and its accuracy is not 100% reliable.

*Google Deep Research: Another Believer: Add writer Ryan Morrison to the growing number of AI experts thrilled with Google’s new Deep Research tool.

In practice, Deep Research returns an in-depth report for you on any topic, complete with citations and references.

“You can link back to any source it gathered the information from and even ask a follow-up question and have it refine the report.

“This isn’t a quick process: It can take several minutes to complete the search and provide the final report — which you can export to (Google) Docs.”

*Google’s New AI Reasoning Released: Including a Peek Inside Its Genius: Determined to match ChatGPT maker OpenAI blow-for-blow, Google has released an update to its AI that specializes in reasoning — Google Gemini 2.0 Flash.

Observes writer Carl Franzen: “Unlike competitor reasoning models o1 and o1 mini from OpenAI, Gemini 2.0 enables users to access its step-by-step reasoning through a dropdown menu, offering clearer, more transparent insight into how the model arrives at its conclusions.

“By allowing users to see how decisions are made, Gemini 2.0 addresses longstanding concerns about AI functioning as a “black box,” and brings this model — licensing terms still unclear — to parity with other open-source models fielded by competitors.”

*Oops: Apple’s News Service Accidentally ‘Kills-Off’ CEO Killer: This week’s ‘Egg-on-Face’ Award for irresponsible use of AI goes to Apple.

Its newly forged news service, powered by Apple Intelligence, falsely reported that the New York CEO killer shot himself — and credited the BBC as the news source.

Ouch.

Observes BBC writer Graham Fraser: “This week, the AI-powered summary falsely made it appear BBC News had published an article claiming Luigi Mangione — the man arrested following the murder of healthcare insurance CEO Brian Thompson in New York — had shot himself.

“He has not.”

Not surprisingly, the BBC is none too pleased.

*Why Teach Writing? Let AI Handle It: While scores of educators anguish over the widespread use of ChatGPT and similar tools to cheat on homework, high school teacher Stephen Lane is not one of them.

In fact, Lane — a history and economics teacher — looks forward to the day when K-12 educators will offload the entire process of writing instruction to AI.

Observes Lane: “AI is in the classroom. And teachers need to teach students how to use it.

“The challenge is to uphold the bedrock value of academic integrity at the same time. The best way to do so may be to separate writing from scholarship.”

*Me Too: Google Crashes the Text-to-Video Party: As Hollywood’s filmmakers warily eye the widespread release of OpenAI’s text-to-video tool ‘Sora,’ Google has jumped in with a resounding ‘Me Too’ with Veo 2.

Observes writer Chance Townsend: “In its press release, Google Deepmind states that Veo 2 can generate 4K videos and handle complex prompts — like specific camera lenses or cinematic shots.

“Further, the company says that, unlike earlier models that often “hallucinate” strange visuals (like extra limbs), Veo 2 reduces these quirks, making results more natural.”

*Jasper Studio: Perfect for Marketers Looking to Create Their Own AI Overlords: Expanding beyond automated writing, Jasper has released a new ‘Jasper Studio’ add-on to its service for marketers looking to create their own AI apps and workflows within the Jasper platform.

An example app that can be designed with the new studio would be an app that can generate brand-specific product descriptions at scale.

Meanwhile, a business-to-business marketing team might develop an app to recommend key accounts and personalized product suggestions.

With the new, no-code programming studio, marketers can also customize Jasper’s more than 90 pre-built applications and tailor them to meet needs such as character limits, image specifications, content formatting or brand compliance.

*Google Gemini: The Complete Rundown: If you’re looking for a complete, in-depth update on the AI engine Gemini, one of the fiercest competitors to the AI engines powering ChatGPT, this is your one-stop answer.

Writers Kyle Wiggers and Maxwell Zeff do a great job of illuminating every facet of the Gemini phenomenon.

And they offer up an insightful frame-of-reference for how Gemini fits into the grand-scheme-of-AI.

*AI Big Picture: Microsoft CEO: AI Agents Will Eat Software Apps: In this head-turning video, Microsoft CEO Satya Nadella predicts software apps are doomed to disappear as AI agents rise in popularity.

Makes sense: ChatGPT users are already creating their own AI-powered proofreading prompts in a few seconds, for example. Those prompts compete directly with Grammarly, a software proofreading app that took traditional programmers years to code.

Interestingly, Nadella points to one of Microsoft’s own stalwart software apps — ‘Excel’ — as one of many software apps that may face the chopping block.

Nadella’s reasoning: Why reach for a software app to slice-and-dice your data, when one or more AI agents — that you can quickly create — can do the same thing?

Share a Link: Please consider sharing a link to https://RobotWritersAI.com from your blog, social media post, publication or emails. More links leading to RobotWritersAI.com help everyone interested in AI-generated writing.

–Joe Dysart is editor of RobotWritersAI.com and a tech journalist with 20+ years’ experience. His work has appeared in 150+ publications, including The New York Times and the Financial Times of London.

The post ChatGPT Getting Smarter — Again appeared first on Robot Writers AI.

Best Smartphones to Click Christmas Pictures in 2024

Christmas is fast approaching, and the holiday spirit brings with it countless photo-worthy moments. From twinkling lights adorning the tree to the cozy gatherings of friends and family, capturing these scenes perfectly is a priority for many. The right smartphone can make all the difference in preserving these memories. Below, we explore the best smartphones...

The post Best Smartphones to Click Christmas Pictures in 2024 appeared first on 1redDrop.

Robot mimics traditional Chinese massage techniques for therapeutic use

Apple’s Lightning Era Nears Its End: What the USB-C Mandate Means for the Future

Apple Pulls iPhone SE, 14, and 14 Plus from EU Stores

Apple has initiated a significant shift by removing the iPhone SE, iPhone 14, and iPhone 14 Plus from its Swiss online store, with similar moves expected across the European Union (EU). This decision comes in response to the impending EU regulation requiring all smartphones...

The post Apple’s Lightning Era Nears Its End: What the USB-C Mandate Means for the Future appeared first on 1redDrop.

AI-driven approach reveals hidden hazards of chemical mixtures in rivers

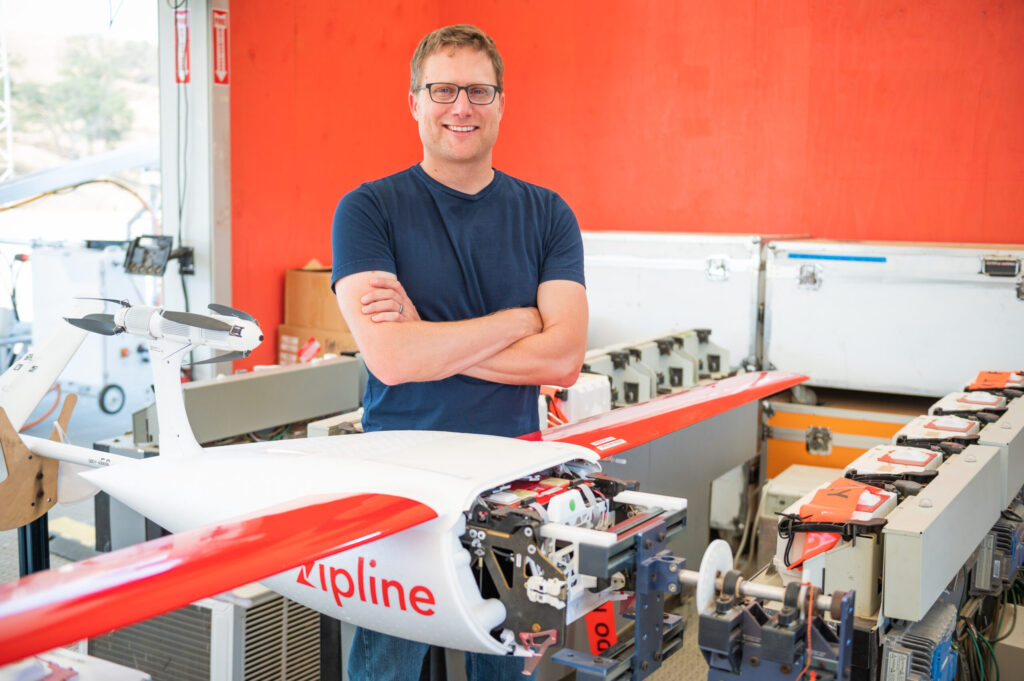

Robot Talk Episode 103 – Keenan Wyrobek

Claire chatted to Keenan Wyrobek from Zipline about drones for delivering life-saving medicine to remote locations.

Keenan Wyrobek is co-founder and head of product and engineering at Zipline, the world’s first drone delivery service whose focus is delivering life-saving medicine to the most difficult to reach places on earth. Prior to Zipline, Keenan was a co-founder and director of the Personal Robotics Program at Willow Garage. He was involved in launching the Robot Operating System (ROS) and shipping PR2, the first personal robot for software R&D. Keenan has spent years delivering high tech products to market across a range of fields including consumer electronics and medical robotics.

The next wave of humanoid robotics: how emerging tech is reshaping the future of automation

Top 10 Artificial Intelligence Technology Trends


A 30-Sec Summary

- Artificial intelligence technology trends are among the most widely discussed topics in technology and business circles. Many industry analysts and experts argue that AI is the future, but when we look around, we are convinced that it is not the future; it is the present.

- Artificial intelligence in general is showing promise for individuals who need to analyze data, perform repetitive tasks and enhance workflow with a hybrid system of AI bots and real employees.

- The AI market is predicted to reach $390.9 billion by 2025, and artificial intelligence is anticipated to address approximately 20% of unmet healthcare demand.

- AI has become a significant part of numerous business operations, helping companies better understand and enhance management functions and, ultimately, customer experience.

Artificial intelligence is the technology story of the 2010s. Over the past decade, machines that can genuinely be considered “intelligent”, capable of thinking, learning and performing like humans, have begun to become a reality outside of science fiction.

Get to know More About: What is Artificial Intelligence? And what are the Examples of AI?

As we start the new decade, advanced technology is changing at a rapid pace. A prediction engine that could chart the course of AI over the next decade has not yet been built, but some developments in the coming years look fairly certain. Spending on research, development and deployment continues to increase, and debate over the broader social issues is raging.


Today, we are here to help you get an idea of the AI technology trends that will shape 2025 and the decade ahead:

Let’s dig in!

Top 10 Artificial Intelligence Technology Trends We Will Be Watching in 2025 Decade

#1 AI Monitors and Refines business processes

While the first robots in organizations mainly automated manual tasks such as production lines and manufacturing, today’s AI-based software robots take on the repetitive but essential work that we do on computers.

Repetitive tasks such as form filling, report generation, and the creation of documents and instructions will be fully automated in the coming years by machines that learn to do them for us in a quicker, more streamlined way.

This automation is called ‘Robotic Process Automation’. It saves time and money, leaving humans to spend more time on strategic, interpersonal, creative, and complex tasks.

#2 AI Becomes More Useful As Data Becomes More Accessible & Accurate

The quality of available data is often a barrier for organizations and businesses that want to move towards automated, AI-driven decision making. But as technologies and methods for simulating real-world mechanisms and processes in the digital space have improved in recent years, accurate data has become increasingly available.

Simulators have advanced to the point where car manufacturers and others developing autonomous vehicles can obtain thousands of hours of driving data without vehicles ever leaving the lab, which dramatically reduces cost and increases data quality. This decade will see a rise in the availability and accuracy of real-world simulations, which will lead to more accurate and robust AI.

#3 More Personalization Takes Place In Real-Time

This artificial intelligence technology trend is driven by the success of internet giants such as Google, Alibaba, Facebook, and Amazon and their ability to provide personalized recommendations and experiences.

Artificial intelligence enables providers of goods and services to quickly and accurately build a 360-degree view of customers in real time as they interact through mobile apps and online portals, learning with ever-increasing accuracy how their offerings fit our needs and requirements.

For example, Domino’s knows when we are likely to want pizza and makes sure the “Order Now” button is in front of us when we feel hungry. Other industries will create solutions to offer similarly personalized consumer experiences.

#4 More Usage of AI in Games, Music and Films

The influence of AI on entertainment media is likely to increase. We recently saw Robert De Niro de-aged with the assistance of artificial intelligence in Martin Scorsese’s epic. The use of AI in creating visual effects and computer graphics is likely to become increasingly common in films.

Likewise, AI-generated music will be a trend in 2020, and listeners will enjoy this machine-generated music in different ways. Fully AI-created music may not be to everyone’s taste, but AI excels at creating dynamic soundtracks: imagine smart playlists on platforms like Google Music or Spotify that match the tempo and mood of our daily lives.

In video games, AI is used to create human-like opponents for players to compete against and to adjust gameplay and difficulty dynamically, so that games continue to offer a compelling challenge for gamers of all skill levels.

#5 Human Interaction Increases with AI

Most of us are accustomed to the idea of working with AI-powered bots and tools in our everyday lives. Increasingly, tools are being built that let us make the most of the human skills AI cannot yet manage, such as strategy, design, and interpersonal skills, while enhancing them with super-fast analytics drawn from vast datasets updated in real time.

For most of us, this means learning new methods, or learning new ways to use our human skills alongside these new software-based and robotic tools.

This AI trend is evident throughout 2020, and if your employer does not invest in artificial intelligence tools and training, it is worth considering how well it will grow in the upcoming years.

Good to know more about: 10 Best Artificial Intelligence Applications

#6 More and More Devices Are Implementing AI-Powered Technology

As the expertise and hardware needed to implement AI become cheaper and more accessible, we will be using it in a growing number of devices, gadgets, and tools. In 2019 we were already accustomed to running applications that provide AI-powered predictions on phones, computers, and even watches.

As the 2020s progress and hardware costs decline, AI tools will increasingly be incorporated into our automobiles, office equipment and home appliances. Alongside technologies such as augmented and virtual reality displays, the cloud, and the IoT, the coming years will see more and more devices of all shapes and sizes begin to think and learn for themselves.

#7 AI increasingly at the “edge”

Most of the AI technology we interact with in our everyday lives runs “in the cloud”. When we search the web or browse recommendations on entertainment platforms like Netflix, data-driven algorithms run on high-powered processors in remote data centers, while the devices on our desks and in our hands simply relay the results.

However, as these algorithms become increasingly capable of running on more efficient, low-power devices, artificial intelligence is moving to the edge, closer to where data is collected and used.

Custom processors designed to perform analytics on the fly are becoming part of the technology we interact with daily, and they mostly work even when we have patchy or non-existent internet connections.

#8 Deepfakes Get Better, Er, and Even Worse

Deepfakes are another AI technology trend that has made tremendous progress in recent years. 2019 saw viral, thankfully humorous deepfakes on many social media networks such as Facebook, WhatsApp, Instagram, and Twitter.

But this technology is becoming more sophisticated over time. This opens the door to very worrying consequences that could damage or destroy reputations in the real world.

Since deepfakes are already so hard to distinguish from real video, how can we tell whether something is fake or real? The question is critical because deepfakes can readily be used to spread cyber threats, political misinformation, and corporate sabotage.

Facebook and Google are trying to counter this threat by releasing thousands of deepfake videos that can be used to train systems to identify AI-generated content.

#9 AI Is Even More Prevalent In Cyber Security

As hacking, phishing, and social engineering attacks become more sophisticated and are themselves powered by artificial intelligence algorithms, smart technology plays a vital role in protecting our data and our daily lives from these intrusion attempts.

The rollout of 5G and other high-speed wireless technology brings enormous opportunities for enterprises to offer services in innovative and creative ways. Still, it also opens the door to more advanced cyber attacks. Spending on cyber security continues to rise, and people with the relevant skills are highly sought after.

#10 AI Recognizes Us Even If We Do Not

Facial recognition technology is likely to become more widespread now that we have entered the 2020s. Governments and corporations across the world are investing heavily in these methods of identifying people and characterizing their behavior and activities.

Because this has some negative effects, San Francisco banned the use of facial recognition by municipal and police agencies in 2019, and other cities are likely to follow this year.

But the question of whether people will eventually accept these intrusions into their lives in exchange for increased convenience and security is likely to become a topic of discussion in the coming year.

Not sure which path to choose? Recommended reading: Top Artificial intelligence Companies in US, India & Europe

CONCLUSION

Artificial intelligence technology has the potential to redefine and reshape the way humans work and live. We can all expect more AI-enabled solutions in the workplace as AI adoption grows through the 2025 decade. These AI tools help us create new customer experiences, deliver better results, and ensure that we achieve our goals in an efficient and timely manner.

We all know that AI has already had a tremendous impact on various markets, transforming the way businesses work and the way they approach their users. Companies that have experienced these important changes will have the choice to embrace them and become market leaders or to stand in awe of change.

Boost Your Business Growth by Using Artificial Intelligence

USM Business Systems has vast experience and established best practices in using AI and related technologies to deliver high-level AI solutions and apps.

Contact us today to tell us about your business needs, and we will get back to you with a solution.

Thanks for reading the blog!
