A flexible lens controlled by light-activated artificial muscles promises to let soft machines see

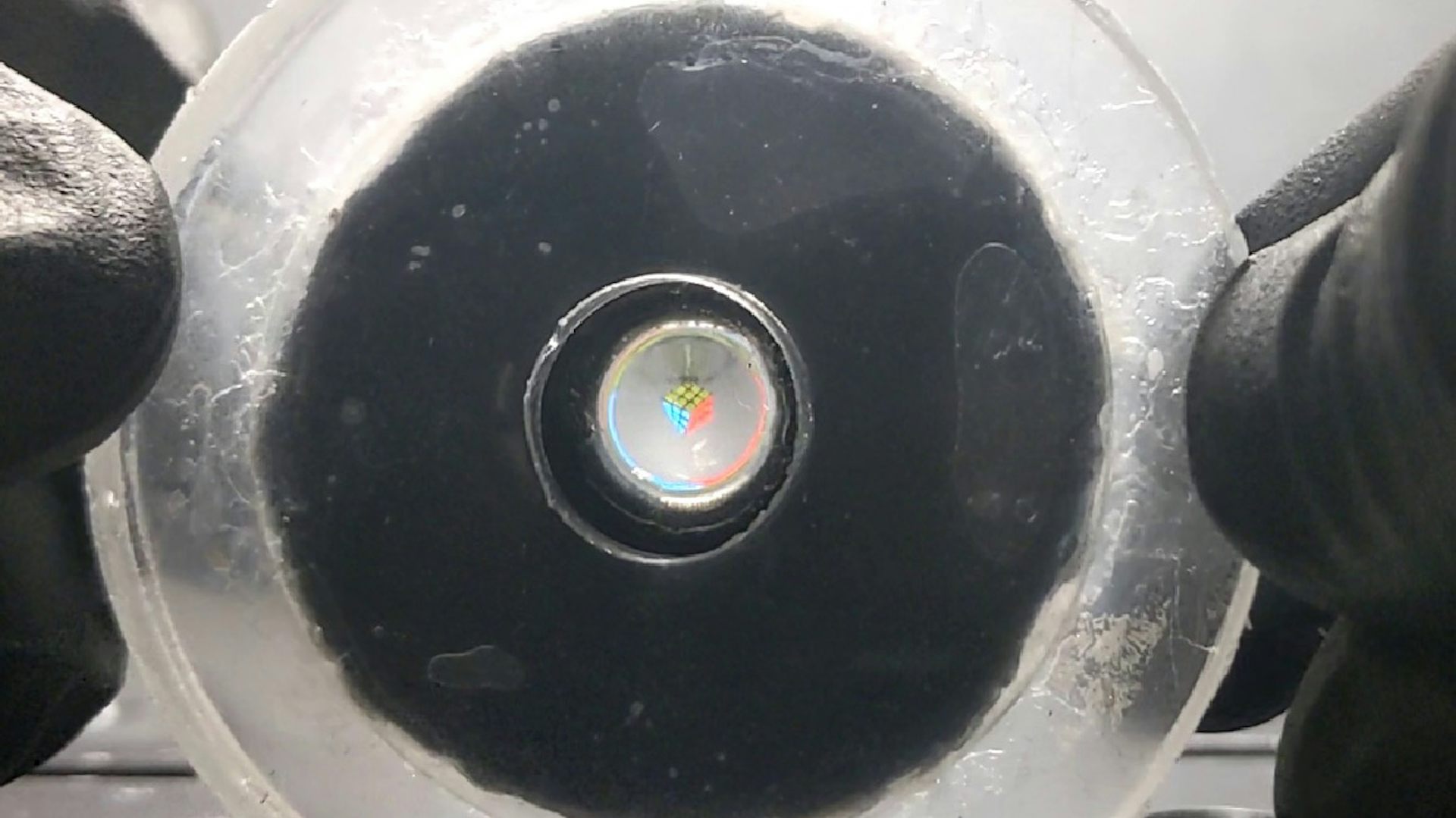

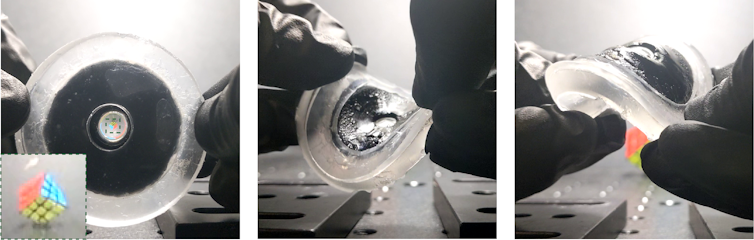

This rubbery disc is an artificial eye that could give soft robots vision. Image credit: Corey Zheng/Georgia Institute of Technology.

By Corey Zheng, Georgia Institute of Technology and Shu Jia, Georgia Institute of Technology

Inspired by the human eye, our biomedical engineering lab at Georgia Tech has designed an adaptive lens made of soft, light-responsive, tissuelike materials.

Adjustable camera systems usually require a set of bulky, moving, solid lenses and a pupil in front of a camera chip to adjust focus and intensity. In contrast, human eyes perform these same functions using soft, flexible tissues in a highly compact form.

Our lens, called the photo-responsive hydrogel soft lens, or PHySL, replaces rigid components with soft polymers acting as artificial muscles. These polymers are hydrogels, which are water-based polymer materials. The hydrogel muscle changes the shape of a soft lens to alter the lens’s focal length, a mechanism analogous to the ciliary muscles in the human eye.
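To illustrate why a change in lens shape shifts focus, here is a minimal sketch built on two textbook optics formulas: the thin-lens lensmaker's equation for a lens with one flat face, f = R / (n - 1), and the thin-lens imaging equation, 1/f = 1/d_o + 1/d_i. It is not a model of PHySL itself. The plano-convex geometry, the refractive index of 1.35 (an assumption for a water-rich gel, chosen to be close to water's 1.33), the 12 mm lens-to-sensor spacing, the radii and the function names are all hypothetical and used only for illustration.

```python
import math

# Illustrative optics only: textbook thin-lens formulas with hypothetical
# numbers. None of these values are measurements of the PHySL lens.
ASSUMED_HYDROGEL_INDEX = 1.35  # assumption: water-rich gel, near water's 1.33


def plano_convex_focal_length(radius_mm: float, n: float = ASSUMED_HYDROGEL_INDEX) -> float:
    """Lensmaker's equation for a thin lens with one flat face: f = R / (n - 1)."""
    if radius_mm <= 0:
        raise ValueError("radius of curvature must be positive")
    if n <= 1:
        raise ValueError("refractive index must exceed 1 for a converging lens")
    return radius_mm / (n - 1)


def in_focus_object_distance(f_mm: float, sensor_distance_mm: float) -> float:
    """Solve the thin-lens equation 1/f = 1/d_o + 1/d_i for the object distance d_o.

    Returns math.inf when the sensor sits exactly at the focal plane.
    """
    inv_d_o = 1.0 / f_mm - 1.0 / sensor_distance_mm
    if inv_d_o < 0:
        raise ValueError("sensor is closer than f; no real object can be brought into focus")
    if inv_d_o == 0:
        return math.inf
    return 1.0 / inv_d_o


if __name__ == "__main__":
    SENSOR_DISTANCE_MM = 12.0  # hypothetical fixed lens-to-sensor spacing
    # A muscle-driven change in lens shape is modeled simply as a change in R.
    for radius in (3.5, 4.0):
        f = plano_convex_focal_length(radius)
        d_o = in_focus_object_distance(f, SENSOR_DISTANCE_MM)
        print(f"R = {radius} mm -> f = {f:.2f} mm, in focus at {d_o:.0f} mm")
```

With these made-up numbers, the script prints `f = 10.00 mm, in focus at 60 mm` for R = 3.5 mm and `f = 11.43 mm, in focus at 240 mm` for R = 4.0 mm. A half-millimetre change in curvature, with no moving sensor or extra lens elements, moves the in-focus plane by a factor of four. That is the kind of shape-for-focus trade a soft, muscle-driven lens exploits.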

The hydrogel material contracts in response to light, allowing us to control the lens without touching it by projecting light onto its surface. This property also allows us to finely control the shape of the lens by selectively illuminating different parts of the hydrogel. By eliminating rigid optics and structures, our system is flexible and compliant, making it more durable and safer in contact with the body.

Why it matters

Artificial vision using cameras is commonplace in a variety of technological systems, including robots and medical tools. However, the optics needed to form a visual system still typically rely on rigid materials and electric power. This limitation presents a challenge for emerging fields, such as soft robotics and biomedical tools, that integrate soft materials into flexible, low-power and autonomous systems. Our soft lens is particularly suitable for this task.

Soft robots are machines made with compliant materials and structures, taking inspiration from animals. This additional flexibility makes them more durable and adaptive. Researchers are using the technology to develop surgical endoscopes, grippers for handling delicate objects and robots for navigating environments that are difficult for rigid robots.

The same principles apply to biomedical tools. Tissuelike materials can soften the interface between body and machine, making biomedical tools safer by allowing them to move with the body. Examples include skinlike wearable sensors and hydrogel-coated implants.

What other research is being done in this field

This work merges concepts from tunable optics and soft “smart” materials. These materials are often used to create soft actuators (the parts of machines that move), such as grippers or propulsors, but their application in optical systems has faced challenges.

Many existing soft lens designs depend on liquid-filled pouches or actuators requiring electronics. These factors can increase complexity or limit their use in delicate or untethered systems. Our light-activated design offers a simpler, electronics-free alternative.

What’s next

We aim to improve the performance of the system using advances in hydrogel materials. New research has yielded several types of stimuli-responsive hydrogels with faster and more powerful contraction. We plan to incorporate these material developments to improve the physical capabilities of the photo-responsive hydrogel soft lens.

We also aim to show its practical use in new types of camera systems. In our current work, we developed a proof-of-concept, electronics-free camera using our soft lens and a custom light-activated microfluidic chip. We plan to incorporate this system into a soft robot to give it electronics-free vision. This would be a significant demonstration of our design's potential to enable new types of soft visual sensing.

The Research Brief is a short take on interesting academic work.

Corey Zheng, PhD Student in Biomedical Engineering, Georgia Institute of Technology and Shu Jia, Assistant Professor of Biomedical Engineering, Georgia Institute of Technology

This article is republished from The Conversation under a Creative Commons license. Read the original article.