Novel smart fabrics give robots a delicate grip

SIMA 2: An Agent that Plays, Reasons, and Learns With You in Virtual 3D Worlds

Deep Dive into integration challenges of ONVIF-compliant GigE cameras and how ONVIF enhances interoperability

How can people hand over packages comfortably to delivery robots?

How High-Precision Reducers Drive the Development of Intelligent Manufacturing Technology

Humanoids – Safety Standards for the Next Wave of Robots

Teaching AI to see the world more like we do

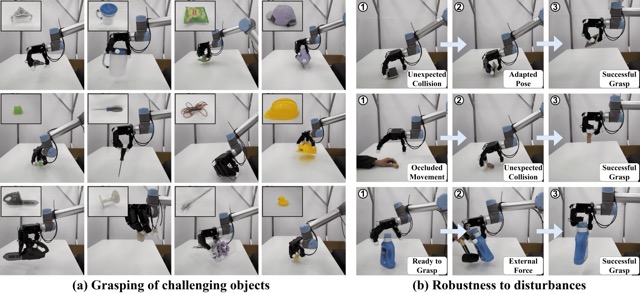

CoRL2025 – RobustDexGrasp: dexterous robot hand grasping of nearly any object

The dexterity gap: from human hand to robot hand

Observe your own hand. As you read this, it’s holding your phone or clicking your mouse with seemingly effortless grace. With over 20 degrees of freedom, the human hand possesses extraordinary dexterity: it can grip a heavy hammer, rotate a screwdriver, or instantly adjust when something slips.

With a similar structure to human hands, dexterous robot hands offer great potential:

Universal adaptability: Handling various objects from delicate needles to basketballs, adapting to each unique challenge in real time.

Fine manipulation: Executing complex tasks like key rotation, scissor use, and surgical procedures that are impossible with simple grippers.

Skill transfer: Their similarity to human hands makes them ideal for learning from vast human demonstration data.

Despite this potential, most current robots still rely on simple “grippers” because dexterous manipulation is so difficult. These pliers-like grippers are capable only of repetitive tasks in structured environments. This “dexterity gap” severely limits robots’ role in our daily lives.

Among all manipulation skills, grasping stands as the most fundamental. It is the gateway through which many other capabilities emerge. Without reliable grasping, robots cannot pick up tools, manipulate objects, or perform complex tasks. Therefore, we focus on equipping dexterous robots with the capability to robustly grasp diverse objects in this work.

The challenge: why dexterous grasping remains elusive

While humans can grasp almost any object with minimal conscious effort, the path to dexterous robotic grasping is fraught with fundamental challenges that have stymied researchers for decades:

High-dimensional control complexity. With 20+ degrees of freedom, dexterous hands present an astronomically large control space. Each finger’s movement affects the entire grasp, making it extremely difficult to determine optimal finger trajectories and force distributions in real time. Which finger should move? How much force should be applied? How should the grasp adjust as conditions change? These seemingly simple questions reveal the extraordinary complexity of dexterous grasping.

Generalization across diverse object shapes. Different objects demand fundamentally different grasp strategies. For example, spherical objects require enveloping grasps, while elongated objects need precision grips. The system must generalize across this vast diversity of shapes, sizes, and materials without explicit programming for each category.

Shape uncertainty under monocular vision. For practical deployment in daily life, robots must rely on single-camera systems—the most accessible and cost-effective sensing solution. Furthermore, we cannot assume prior knowledge of object meshes, CAD models, or detailed 3D information. This creates fundamental uncertainty: depth ambiguity, partial occlusions, and perspective distortions make it challenging to accurately perceive object geometry and plan appropriate grasps.

Our approach: RobustDexGrasp

To address these fundamental challenges, we present RobustDexGrasp, a novel framework that tackles each challenge with targeted solutions:

Teacher-student curriculum for high-dimensional control. We trained our system through a two-stage reinforcement learning process: first, a “teacher” policy learns ideal grasping strategies with privileged information (full object shape and tactile sensors) through extensive exploration in simulation. Then, a “student” policy learns from the teacher using only real-world perception (single-view point cloud, noisy joint positions) and adapts to real-world disturbances.

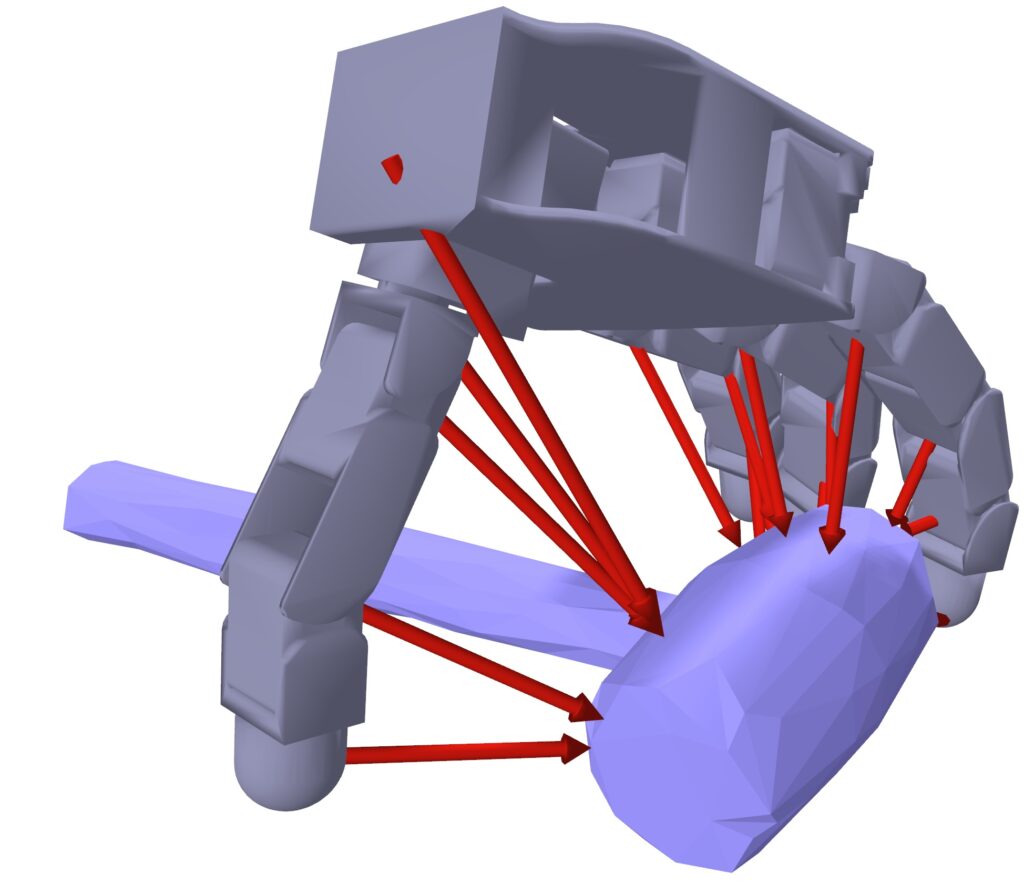
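The teacher-student idea can be illustrated with a deliberately tiny toy. This is a sketch under stated assumptions, not the training code from the paper: the two-stage reinforcement learning is replaced here by plain least-squares imitation, and the one-dimensional "gap" state, the 0.8 gain, and all function names are hypothetical.

```python
import random


def teacher_action(true_gap_m):
    """Teacher: sees the exact finger-to-surface gap (privileged) and closes most of it."""
    return 0.8 * true_gap_m  # hypothetical gain, chosen only for illustration


def collect_demonstrations(n_samples, noise_std_m, rng):
    """Pair what the student would observe with what the teacher did."""
    demos = []
    for _ in range(n_samples):
        true_gap = rng.uniform(0.0, 0.10)                      # metres, simulator ground truth
        observed_gap = true_gap + rng.gauss(0.0, noise_std_m)  # noisy student-side estimate
        demos.append((observed_gap, teacher_action(true_gap)))
    return demos


def fit_student(demos):
    """Least-squares fit of action = gain * observed_gap + bias."""
    n = len(demos)
    mean_x = sum(x for x, _ in demos) / n
    mean_y = sum(y for _, y in demos) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in demos)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in demos)
    gain = cov_xy / var_x
    return gain, mean_y - gain * mean_x


rng = random.Random(0)
gain, bias = fit_student(collect_demonstrations(2000, noise_std_m=0.001, rng=rng))
print(f"student gain={gain:.3f}, bias={bias:.4f}")
```

The student never sees the true gap, yet it recovers a policy close to the teacher's because the teacher's actions act as labels for the noisy observations.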

Hand-centric “intuition” for shape generalization. Instead of capturing complete 3D shape features, our method creates a simple “mental map” that only answers one question: “Where are the surfaces relative to my fingers right now?” This intuitive approach ignores irrelevant details (like color or decorative patterns) and focuses only on what matters for the grasp. It’s the difference between memorizing every detail of a chair versus just knowing where to put your hands to lift it—one is efficient and adaptable, the other is unnecessarily complicated.

Multi-modal perception for uncertainty reduction. Instead of relying on vision alone, we combine the camera’s view with the hand’s “body awareness” (proprioception—knowing where its joints are) and reconstructed “touch sensation” to cross-check and verify what it’s seeing. It’s like how you might squint at something unclear, then reach out to touch it to be sure. This multi-sense approach allows the robot to handle tricky objects that would confuse vision-only systems—grasping a transparent glass becomes possible because the hand “knows” it’s there, even when the camera struggles to see it clearly.

The results: from laboratory to reality

Trained on just 35 simulated objects, our system demonstrates excellent real-world capabilities:

Generalization: It achieved a 94.6% success rate across a diverse test set of 512 real-world objects, including challenging items like thin boxes, heavy tools, transparent bottles, and soft toys.

Robustness: The robot could maintain a secure grip even when a significant external force (equivalent to a 250g weight) was applied to the grasped object, showing far greater resilience than previous state-of-the-art methods.

Adaptation: When objects were accidentally bumped or slipped from its grasp, the policy dynamically adjusted finger positions and forces in real time to recover, showcasing a level of closed-loop control previously difficult to achieve.
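For a sense of scale, the 250 g figure in the robustness result can be converted to a force. This is a back-of-the-envelope estimate that assumes standard gravity, $g \approx 9.81\ \mathrm{m/s^2}$, which the post does not state:

$$F = m g \approx 0.25\ \mathrm{kg} \times 9.81\ \mathrm{m/s^2} \approx 2.45\ \mathrm{N}$$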

Beyond picking things up: enabling a new era of robotic manipulation

RobustDexGrasp represents a crucial step toward closing the dexterity gap between humans and robots. By enabling robots to grasp nearly any object with human-like reliability, we’re unlocking new possibilities for robotic applications beyond grasping itself. We demonstrated how it can be seamlessly integrated with other AI modules to perform complex, long-horizon manipulation tasks:

Grasping in clutter: Using an object segmentation model to identify the target object, our method enables the hand to pick a specific item from a crowded pile despite interference from other objects.

Task-oriented grasping: With a vision language model as the high-level planner and our method providing the low-level grasping skill, the robot hand can execute grasps for specific tasks, such as cleaning up the table or playing chess with a human.

Dynamic interaction: Using an object tracking module, our method can successfully control the robot hand to grasp objects moving on a conveyor belt.

Looking ahead, we aim to overcome current limitations, such as handling very small objects (which requires a smaller, more anthropomorphic hand) and performing non-prehensile interactions like pushing. The journey to true robotic dexterity is ongoing, and we are excited to be part of it.

Read the work in full

- RobustDexGrasp: Robust Dexterous Grasping of General Objects, Hui Zhang, Zijian Wu, Linyi Huang, Sammy Christen, Jie Song.

Popular AI models aren’t ready to safely power robots, study warns

How AI is giving Northern Ireland teachers time back

Q&A with Uncrewed & Autonomous Systems 2025

Introduction to ROS

China’s Out With a New Killer AI Creative Writer

Writers looking for an AI built from the ground up to write brilliantly may want to check out a new AI engine from China.

Dubbed Kimi K2 Thinking, the new tool promises the ability to navigate hundreds of steps on its way to auto-generating writing that is “shockingly good,” according to writer Grant Harvey.

Observes Harvey: “We co-wrote a YA novel called “The Salt Circus”—and the AI actually revised itself, scrapped bad ideas and showed genuine creative judgment.”

In other news and analysis on AI writing:

*Major Web Host Promises ‘Hour-a-Day’ Savings with AI-Powered Email: Hostinger is out with a new AI-powered email suite designed to save you serious time each day with your email.

Key features of the email suite include:

–AI email writer

–Automated smart email replies

–AI email summarizer

–AI writing stylizer

Warning: AI forged in China is often coded with the ability to forward the data you input to the Chinese Communist Party.

For more on saving time with AI while boosting writing prowess, check out “Bringing in ChatGPT for Email,” by Joe Dysart.

*Use AI or You’re Fired: In another sign that the days of ‘AI is Your Buddy’ are fading fast, increasing numbers of businesses have turned to strong-arming employees when it comes to AI.

Observes Wall Street Journal writer Lindsay Ellis: “Rank-and-file employees across corporate America have grown worried over the past few years about being replaced by AI.

“Something else is happening now: AI is costing workers their jobs if their bosses believe they aren’t embracing the technology fast enough.”

*Auto-Write a Non-Fiction Book in an Hour: AI startup StoryOne says it has cracked the code on using AI to crank out a full-length non-fiction book in about an hour.

StoryOne promises that anyone can use its software to transform ideas, podcasts, interviews, research or draft manuscripts into a high-quality, fact-based, non-fiction book in about an hour.

The software has been endorsed by Michael Reinartz, chief innovation officer, Vodafone Germany.

*ChatGPT-Maker Books One Millionth Business Customer: OpenAI recently booked its one millionth customer – making it the fastest-growing business app in history, according to the company.

Observes writer Mike Moore: “This goes along with its 800 million weekly users using ChatGPT in some form — which has helped make the platform synonymous with the constantly growing appetite for AI in our daily lives.

“The company has revealed a host of new tools in recent months to help boost adoption, including ‘company knowledge,’ where ChatGPT can reason across tools like Slack, SharePoint, Google Drive, GitHub and more to get answers.”

*AI Has a Hit Song: While AI’s ability to write and record songs has been an ongoing nag for music creators, the stakes just got much higher: AI now has its own hit song.

Dubbed “How Was I Supposed to Know?” the tune is currently charting at number thirty on Billboard’s Adult R&B Airplay Survey.

The powerhouse singer behind the hit is also AI-generated: Xania Monet, who was ‘signed’ as an artist at Hallwood Media.

*Microsoft Copilot Gets an AI Research Boost: Writers looking for yet another new option in AI research may want to check out ‘Researcher with Computer Use.’

It’s a new feature embedded in Microsoft’s answer to ChatGPT – Copilot.

The new tool includes an AI agent that uses a secure, virtual computer to navigate public, gated and interactive Web content.

Users can also green-light the tool to access databases inside their enterprises.

*Study: AI Agents Virtually Useless at Completing Freelance Assignments: New research finds that much-ballyhooed AI agents are literally horrible at completing everyday assignments that are found on freelance brokerage sites like Fiverr and Upwork.

Observes writer Frank Landymore: “The top performer, they found, was an AI agent from the Chinese startup Manus with an automation rate of just 2.5 percent — meaning it was only able to complete 2.5 percent of the projects it was assigned at a level that would be acceptable as commissioned work in a real-world freelancing job, the researchers said.

“Second place was a tie, at 2.1 percent, between Elon Musk’s Grok 4 and Anthropic’s Claude Sonnet 4.5.”

*AI’s New Gig: Writing Official Quarterly and Annual Reports: Writer Mark Maurer reports that increasing numbers of official financial reports from public companies are being written in large part by AI.

Observes Maurer: “The efforts are the latest sign of finance executives’ growing ease with AI for public-facing work that was long handled solely by humans.”

*AI BIG PICTURE: Fed Chairman Confirms: AI is Eating Jobs: U.S. Federal Reserve Chairman Jerome Powell just made it official: AI is often sucking up jobs at businesses where the new technology has been embraced.

Observes writer Mike Kaput: “At a recent press conference, Powell noted that once you strip-out statistical over-counting, job creation is pretty close to zero.

“He then confirmed what many CEOs are now openly telling the Fed and investors: AI is allowing them to do more work with fewer people.”


–Joe Dysart is editor of RobotWritersAI.com and a tech journalist with 20+ years of experience. His work has appeared in 150+ publications, including The New York Times and the Financial Times of London.

The post China’s Out With a New Killer AI Creative Writer appeared first on Robot Writers AI.

IT as the new HR: Managing your AI workforce

Your organization is already hiring digital workers. Now, the question is whether IT is actually managing these “people-like” systems as part of the workforce, or as just another application in the tech stack.

Far from just another AI tool, AI agents are becoming digital coworkers that need the same lifecycle management as human employees: onboarding, supervision, performance reviews, and eventually, responsible decommissioning.

Many companies are already deploying agents to handle customer inquiries, process invoices, and make recommendations. The mistake is treating agents like software instead of managing them like team members.

IT is the natural leader to take on this “human resources for AI agents” role, managing agents’ lifecycle proactively rather than inheriting a mismanaged system later. That’s how organizations move beyond pilots and manage agent lifecycles responsibly, with IT leading in partnership with business and compliance teams.

This is Post 3 in our Agent Workforce series, exploring how IT is well-positioned to manage agents as workforce assets, not just technology deployments.

Why IT is becoming the new HR for AI agents

AI agents are already steering IT into an expanded role. Just as HR oversees the employee lifecycle, IT is beginning to take ownership of managing the complete journey of AI agents:

- Recruiting the right talent (selecting appropriate agents)

- Onboarding (integrating with enterprise systems)

- Supervising performance (monitoring accuracy and behavior)

- Training and development (retraining and updates)

- Offboarding (decommissioning and knowledge transfer)

HR doesn’t just hire people and walk away. It creates policies, sets cultural norms, and enforces accountability frameworks. IT must do the same thing for agents, balancing developer autonomy with governance requirements, much like HR balances employee freedom with company policy.

The stakes of getting it wrong are comparable, too. HR works to prevent unvetted hires that could damage the business and brand. IT must prevent deployment that introduces uncontrolled risk. When business units spin up their own agents without oversight or approval, it’s like bringing on a new hire without a background check.

When IT owns agent lifecycle management, organizations can curb shadow AI, embed governance from day one, and measure ROI more effectively. IT becomes the single source of truth (SSOT) for enterprise-wide consistency across digital workers.

But governance is only part of the job. IT’s larger mandate is to build trust between humans and digital coworkers, ensuring clarity, accountability, and confidence in every agent decision.

How IT manages the digital coworker lifecycle

IT isn’t just tech support anymore. With a growing digital workforce, managing AI agents requires the same structure and oversight HR applies to employees. When agents misbehave or underperform, the financial and reputational costs can be significant.

Recruiting the right agents

Think of agent deployment as hiring: Just like you’d interview candidates to determine their capabilities and readiness for the role, IT needs to evaluate accuracy, cost, latency, and role fit before any agent is deployed.
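The "interview" can be made concrete as a pre-deployment gate. The following is a minimal sketch, not a DataRobot API: the class names, fields, and thresholds are all hypothetical, and a real evaluation would draw these numbers from benchmark runs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentEvaluation:
    """Measured results for a candidate agent (all fields are illustrative)."""
    name: str
    accuracy: float            # fraction of evaluation tasks completed correctly
    cost_per_task_usd: float
    p95_latency_s: float
    approved_roles: frozenset


@dataclass(frozen=True)
class RoleRequirements:
    """What a given role demands of any agent placed in it."""
    role: str
    min_accuracy: float
    max_cost_per_task_usd: float
    max_p95_latency_s: float


def evaluate_candidate(candidate, req):
    """Return the list of failed criteria; an empty list means the agent passes."""
    failures = []
    if candidate.accuracy < req.min_accuracy:
        failures.append("accuracy")
    if candidate.cost_per_task_usd > req.max_cost_per_task_usd:
        failures.append("cost")
    if candidate.p95_latency_s > req.max_p95_latency_s:
        failures.append("latency")
    if req.role not in candidate.approved_roles:
        failures.append("role_fit")
    return failures


invoice_role = RoleRequirements("invoice_processing", 0.95, 0.05, 3.0)
candidate = AgentEvaluation("agent-a", 0.93, 0.04, 2.5, frozenset({"invoice_processing"}))
print(evaluate_candidate(candidate, invoice_role))
```

Returning the list of failed criteria, rather than a bare yes or no, gives the requesting team something actionable when a candidate is turned down.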

It’s a balance between technical flexibility and enterprise governance. Developers need room to experiment and iterate, but IT still owns consistency and control. Frameworks should enable innovation within governance standards.

When business teams build or deploy agents without IT alignment, visibility and governance start to slip, turning small experiments into enterprise-level risks. This “shadow AI” can quickly erode consistency and accountability.

Without a governed path to deployment, IT will inherit the risk. An agent catalog solves this with pre-approved, enterprise-ready agents that business units can deploy quickly and safely. It’s self-service that maintains control and prevents shadow AI from becoming a cleanup project later on.

Supervising and upskilling agents

Monitoring is the performance review portion of the agent lifecycle, tracking task adherence, accuracy, cost efficiency, and business alignment — the same metrics HR uses for people.

Retraining cycles mirror employee development programs. Agents need regular updates to maintain performance and adapt to changing requirements, just as people need ongoing training to stay current (and relevant).

Proactive feedback loops matter:

- Identify high-value interactions

- Document failure modes

- Track improvement over time

This historical knowledge becomes invaluable for managing your broader agent workforce.

Performance degradation is often gradual, like an employee becoming slowly disengaged over time. Regular check-ins with agents (reviewing their decision patterns, accuracy trends, and resource consumption) can help IT spot potential issues before they become bigger problems.
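A regular check-in of this kind can be as simple as comparing recent accuracy against the level measured at onboarding. The sketch below is one possible approach under assumed names and thresholds; the window size and tolerance are hypothetical and would need tuning per agent.

```python
from collections import deque


class AccuracyDriftMonitor:
    """Flags an agent for review when recent accuracy falls below its baseline."""

    def __init__(self, baseline_accuracy, window=50, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self._outcomes = deque(maxlen=window)  # keeps only the most recent results

    def record(self, correct):
        """Store whether the latest reviewed decision was correct."""
        self._outcomes.append(bool(correct))

    def recent_accuracy(self):
        """Accuracy over the current window, or None if nothing was recorded."""
        if not self._outcomes:
            return None
        return sum(self._outcomes) / len(self._outcomes)

    def needs_review(self):
        """True once a full window shows accuracy below baseline minus tolerance."""
        if len(self._outcomes) < self._outcomes.maxlen:
            return False  # not enough evidence yet
        return self.recent_accuracy() < self.baseline - self.tolerance


monitor = AccuracyDriftMonitor(baseline_accuracy=0.90, window=10, tolerance=0.05)
for outcome in [True] * 10:
    monitor.record(outcome)
```

Waiting for a full window before raising a flag trades a little detection speed for fewer false alarms, which matters when each flag triggers a human review.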

Offboarding and succession planning

When a long-tenured employee leaves without proper knowledge transfer, it’s hard to recoup those lost insights. The same risks apply to agents. Decision patterns, learned behaviors, and accumulated context should be preserved and transferred to successor systems to make them even better.

Like employee offboarding and replacement, agent retirement is the final step of agentic workforce planning and management. It involves archiving decision history, compliance records, and operational context.

Continuity depends on IT’s discipline in documentation, version control, and transition planning. Handled well, this leads to succession planning, ensuring each new generation of agents starts smarter than the last.

How IT establishes control: The agent governance framework

Proactive governance starts at onboarding, not after the first failure. Agents should immediately integrate into enterprise systems, workflows, and policies with controls already in place from day one. This is the “employee handbook” moment for digital coworkers. CIOs set the expectations and guardrails early, or risk months of remediation later.

Provisioning and access controls

Identity management for agents needs the same rigor as human accounts, with clear permissions, audit trails, and role-based access controls. For example, an agent handling financial data needs different permissions than one managing customer inquiries.

Access rights should align to each agent’s role. For example:

- Customer service agents can access CRMs and knowledge bases, but not financial systems.

- Procurement agents can read supplier data, but can’t modify contracts without human approval.

- Analytics agents can query specific databases, but not personally identifiable information.

The principle of least privilege applies equally to digital and human workers. Start off extra restrictive, then expand access based on proven need and performance.
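The access rules above can be sketched as a deny-by-default permission check. This is an illustrative example rather than any particular identity product: the role names mirror the examples in the text, while the resource names, the `authorize` function, and the audit record layout are assumptions.

```python
# Explicit grants per agent role; anything not listed is denied.
ROLE_PERMISSIONS = {
    "customer_service": {("crm", "read"), ("knowledge_base", "read")},
    "procurement": {("supplier_data", "read")},
    "analytics": {("sales_db", "query")},
}

# Actions a role may perform only after a human signs off.
APPROVAL_REQUIRED = {
    "procurement": {("contracts", "modify")},
}


def authorize(role, resource, action, human_approved=False, audit_log=None):
    """Return 'allow', 'needs_human_approval', or 'deny' and record the decision."""
    request = (resource, action)
    if request in ROLE_PERMISSIONS.get(role, set()):
        decision = "allow"
    elif request in APPROVAL_REQUIRED.get(role, set()):
        decision = "allow" if human_approved else "needs_human_approval"
    else:
        decision = "deny"  # least privilege: no explicit grant means no access
    if audit_log is not None:
        audit_log.append(
            {"role": role, "resource": resource, "action": action, "decision": decision}
        )
    return decision


audit_log = []
print(authorize("customer_service", "financial_system", "read", audit_log=audit_log))
print(authorize("procurement", "contracts", "modify", audit_log=audit_log))
```

Expanding access then means adding an entry to `ROLE_PERMISSIONS`, which leaves a reviewable change rather than an implicit default.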

Workflow integration

Map workflows and escalation paths that define when agents act independently and when they collaborate with humans. Establish clear triggers, document decision boundaries, and build feedback loops for continuous improvement.

For example, an AI resume screener might prioritize and escalate top candidates to human recruiters using defined handoff rules and audit trails. Ultimately, agents should enhance human capabilities, not blur the lines of accountability.
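A handoff rule for the resume-screening example might look like the sketch below. The thresholds, decision labels, and field names are hypothetical; the point is only that every routing decision is explicit and leaves a record. Note that this sketch never rejects a candidate on its own: low-confidence cases go to a person.

```python
from datetime import datetime, timezone

ESCALATE_SCORE = 0.80   # hypothetical: match score that triggers a recruiter handoff
MIN_CONFIDENCE = 0.60   # hypothetical: below this, the agent defers to a human


def route_candidate(candidate_id, match_score, confidence, audit_trail):
    """Apply the handoff rules and append the decision to the audit trail."""
    if confidence < MIN_CONFIDENCE:
        decision, reason = "human_review", "agent confidence below threshold"
    elif match_score >= ESCALATE_SCORE:
        decision, reason = "escalate_to_recruiter", "match score at or above threshold"
    else:
        decision, reason = "keep_in_queue", "no handoff rule triggered"
    audit_trail.append(
        {
            "candidate_id": candidate_id,
            "match_score": match_score,
            "confidence": confidence,
            "decision": decision,
            "reason": reason,
            "timestamp": datetime.now(timezone.utc).isoformat(),
        }
    )
    return decision


audit_trail = []
print(route_candidate("cand-001", match_score=0.91, confidence=0.85, audit_trail=audit_trail))
print(route_candidate("cand-002", match_score=0.91, confidence=0.40, audit_trail=audit_trail))
```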

Retraining schedules

Ongoing training plans for agents should mirror employee development programs. Monitor for drift, schedule regular updates, and document improvements.

Much like employees need different types of training (technical skill sets, soft skills, compliance), agents need different updates as well, like accuracy improvements, new capability additions, security patches, and behavioral adjustments.

Retirement or decommissioning

Criteria for offboarding agents should include obsolescence, performance decline, or strategic changes. Archive decision history to preserve institutional knowledge, maintain compliance, and inform future deployments.

Retirement planning isn’t just turning a system off. You need to preserve its value, maintain compliance, and capture what it’s learned. Each retiring agent should leave behind insights that shape smarter, more capable systems in the future.

Tackling AI lifecycle management challenges

Like HR navigating organizational change, IT faces both technical and cultural hurdles in managing the AI agent lifecycle. Technical complexity, skills gaps, and governance delays can easily stall deployment initiatives.

Standardization is the foundation of scale. Establish repeatable processes for agent evaluation, deployment, and monitoring, supported by shared templates for common use cases. From there, build internal expertise through training and cross-team collaboration.

The DataRobot Agent Workforce Platform enables enterprise-scale orchestration and governance across the agent lifecycle, automating deployment, oversight, and succession planning for a scalable digital workforce.

But ultimately, CIO leadership drives adoption. Just as HR transformations rely on executive sponsorship, agent workforce initiatives demand transparent, sustained commitment, including budget, skills development, and cultural change management.

The skills gap is real, but manageable. Partner with HR to identify and train champions who can lead agent operations, model good governance, and mentor peers. Building internal champions isn’t optional; it’s how culture scales alongside technology.

From monitoring systems to managing digital talent

IT owns the rhythm of agent performance (setting goals, monitoring outcomes, and coordinating retraining cycles). But what’s truly transformative is scale.

For the first time, IT can oversee hundreds of digital coworkers in real time, spotting trends and performance shifts as they happen. This continuous visibility turns performance management from a reactive task into a strategic discipline, one that drives measurable business value.

With clear insight into which agents deliver the most impact, IT can make sharper decisions about deployment, investment, and capability development, treating performance data as a competitive advantage, not just an operational metric.

Getting AI agents to operate ethically (and with compliance)

The reputational stakes for CIOs are enormous. Biased agents, privacy breaches, or compliance failures directly reflect on IT leadership. AI governance frameworks aren’t optional. They’re a required part of the enterprise infrastructure.

Just as HR teams define company values and behavioral standards, IT must establish ethical norms for digital coworkers. That means setting policies that ensure fairness, transparency, and accountability from the start.

Three pillars define digital workforce governance:

- Fairness: Prevent discrimination and systemic bias in agent behavior. HR upholds equitable hiring practices; IT must ensure agents don’t exhibit bias in their decision-making. Regular audits, diverse testing scenarios, and bias detection tools should be standard.

- Compliance: Mapping to GDPR, CCPA, and industry-specific regulations requires the same rigor as human employee compliance training. Agents handling personal data need privacy safeguards; financial and healthcare agents require sector-specific oversight.

- Explainability: Every agent decision should be documented and auditable. Clear reasoning builds trust, supports accountability, and enables continuous improvement. As HR manages employee performance and conduct issues, IT needs parallel processes for digital workers.

When people understand how agents operate — and how they’re governed — trust grows, resistance falls, and adoption accelerates.

Preparing today’s IT leaders to manage tomorrow’s AI teams

A strong ROI comes from treating agents as workforce investments, not technology projects. Performance metrics, compliance frameworks, and lifecycle management then become competitive differentiators, rather than overhead costs.

AI agents are the newest members of the enterprise workforce. Managed well, they help IT and business leaders:

- Scale without proportional headcount increases

- Enforce consistency across global operations

- Streamline routine tasks to focus on innovation

- Gain agility to respond to market changes

AI agents are the future of work. And it’s IT’s stewardship that will define how the future unfolds.

The post IT as the new HR: Managing your AI workforce appeared first on DataRobot.