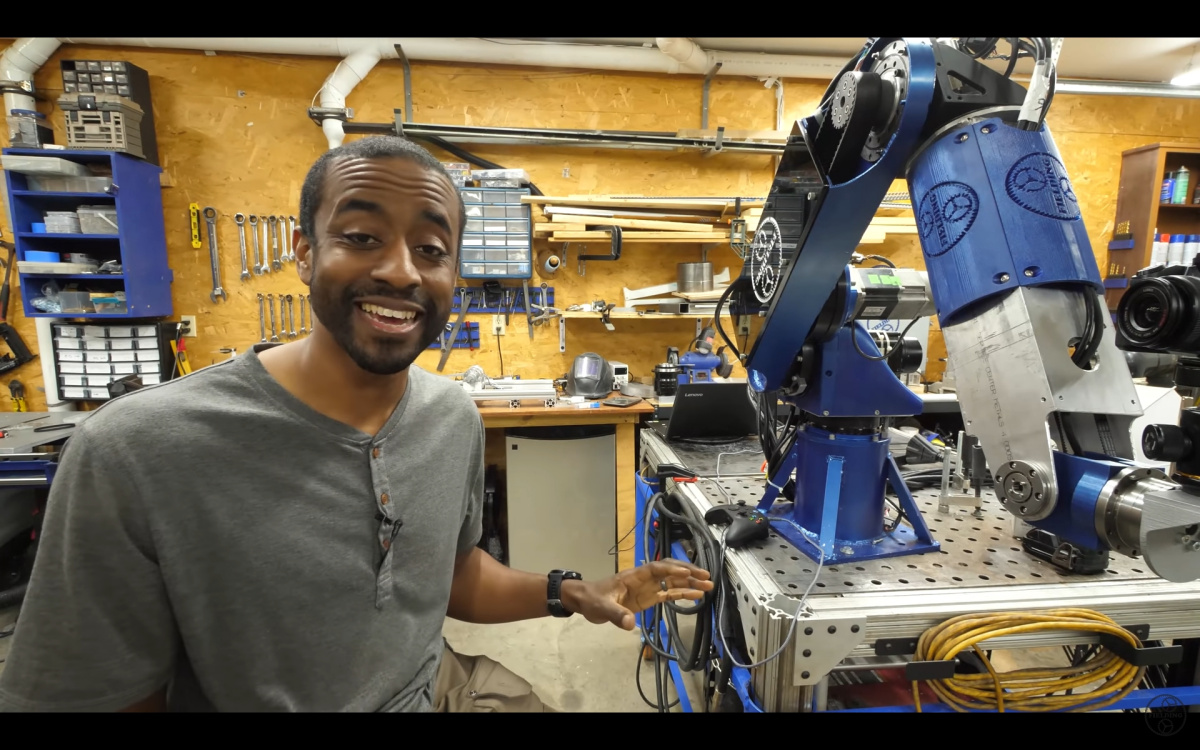

Building a 7-axis robot from scratch

Do you fancy making yourself an industrial robot to enjoy at home? Jeremy Fielding, a passionate fan of mechanical engineering, did. So he built one. The good news is that he’s preparing a series of videos to teach you the whole process from scratch. How much power do you need to run 7 motors at once? If you lose power, how do you prevent the arm from collapsing on you or dropping the load? How do you keep the cost down? He has recorded over 100 hours of video, and he plans to teach you how he used the servo motors, how you can use them in your own projects, and how he designed his 7-axis articulated robot.

Jeremy’s aim (website, YouTube, Twitter, Instagram) is simple: draw people to engineering with amazing projects, inspire them with ideas, then teach them how to do it. For this video series, he’s also looking for collaborators. So if you’ve got experience and knowledge of building this type of robot and you’d like to share it, you might end up being part of the series!

We’d like to thank Black in Robotics for making it possible for us to discover Jeremy. Here’s the video Jeremy has released to introduce his project:

We had to go find this guy on YouTube because he looked very cool! https://t.co/xomix1TZXo @jeremy_fielding #BlackInRobotics #NationalRoboticsWeek @RoboWeek

— Black in Robotics (@BlackInRobotics) April 9, 2021

Check out Jeremy’s YouTube channel to discover many more instructional videos. You can also support his work on Patreon.

Talking Robotics’ seminars of January – April 2021 (with videos and even a musical summary!)

Talking Robotics is a series of virtual seminars about Robotics and its interaction with other relevant fields, such as Artificial Intelligence, Machine Learning, Design Research and Human-Robot Interaction, among others. The seminars aim to encourage reflection and dialogue and to provide a place to network. In this compilation of seminars, we bring you 7 talks (and a half?) from current roboticists for your enjoyment.

Filipa Correia “Group Intelligence on Social Robots”

Filipa Correia received an M.Sc. in Computer Science from the University of Lisbon, Portugal, in 2015. She is currently a junior researcher at GAIPSLab and is pursuing a Ph.D. in Human-Robot Interaction at the University of Lisbon, Portugal.

Her PhD thesis focuses on the challenges of creating social robots that are capable of sustaining cohesive alliances with humans in team settings. It also contributes computational mechanisms that allow the robotic teammate to autonomously express group-based emotions or to direct its gaze at human teammates in multi-party settings.

For more information about the speaker and related papers, please see this website.

Ross Mead “Bringing Robots To Life”

Dr. Ross Mead is the Founder and CEO of Semio. Ross received his PhD and MS in Computer Science from the University of Southern California in 2015, and his BS in Computer Science from Southern Illinois University Edwardsville in 2007.

In this talk, Dr. Ross Mead discussed the technological advances paving the way for the personal robotics revolution, and the robotics hardware companies leading the charge along that path. He also introduced the software innovations in development at Semio for bringing robots to life.

For more information about the speaker and related papers, please see this website.

Kim Baraka “Humans and Robots Teaching and Learning through Social Interaction”

Kim Baraka is currently a postdoctoral fellow at the Socially Intelligent Machines Lab at the University of Texas at Austin. He holds a dual Ph.D. in Robotics from Carnegie Mellon University and Instituto Superior Técnico (Portugal), an M.S. in Robotics from Carnegie Mellon, and a Bachelor’s degree in Electrical and Computer Engineering from the American University of Beirut.

In the first part of the talk, focusing on robots teaching humans, Kim discussed algorithmic solutions that enable socially assistive robots to teach both children and therapists in a personalized way. In the second part of the talk, focusing on humans teaching robots, Kim discussed some preliminary efforts towards developing ways in which robots can learn tasks from human teachers in richer and more natural ways.

For more information about the speaker and related papers, please see this website.

Glenda Hannibal “Trust in HRI: Probing Vulnerability as an Active Precondition”

Glenda has previously worked in the Department of Sociology at the University of Vienna and as an expert for the HUMAINT project at the European Commission. Glenda holds a BA and MA in Philosophy from Aarhus University and is currently a PhD student in the Trust Robots Doctoral College and Human-Computer Interaction group at TU Wien.

In this talk, Glenda presented her research on vulnerability as a precondition of trust in HRI. In the first part, she argued that while the most commonly cited definitions of trust in HRI recognize vulnerability as an essential element of trust, vulnerability is also often considered somewhat problematic. In the second part, she presented the results of two empirical studies she has undertaken to explore trust in HRI in relation to vulnerability. Finally, she closed the talk by reflecting on a few ethical aspects of this theme.

For more information about the speaker and related papers, please see this website.

Carl Mueller “Robot Learning from Demonstration Driven Constrained Skill Learning & Motion Planning”

Carl Mueller is a Ph.D. student of computer science at the University of Colorado – Boulder, advised by Professor Bradley Hayes within the Collaborative Artificial Intelligence and Robotics Laboratory. He graduated from the University of California – Santa Barbara with a degree in Biopsychology and, after a circuitous route through the pharmaceutical industry, ended up in tech, founding his own company building intelligent chat agents for business analytics.

The major theme of his research is enabling human users to communicate additional information to the robot learning system through ‘concept constraints’. Concept constraints are abstract behavioral restrictions, grounded as geometric and kinodynamic planning predicates, that prohibit or limit the robot’s behavior, resulting in more robust, generalizable, and safe skill execution. In this talk, Carl discussed how concept constraints are integrated into existing learning from demonstration (LfD) methods, how unique interfaces can further enhance the communication of such constraints, and how grounding these constraints requires constrained motion planning techniques.
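To make the idea of a concept constraint a little more concrete, here is a minimal Python sketch, assuming a constraint can be reduced to a named boolean predicate over a simplified end-effector pose. The names `Pose`, `ConceptConstraint`, `upright`, `above_table` and `violations` are hypothetical illustrations, not Carl Mueller’s implementation. The sketch covers only geometric predicates (no kinodynamic ones), and it merely checks a finished path, whereas a real system would ground such predicates inside a constrained motion planner.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Pose:
    """Hypothetical simplified end-effector pose: position in metres plus tilt in radians."""
    x: float
    y: float
    z: float
    tilt: float  # angle between the gripper's up axis and world vertical


@dataclass
class ConceptConstraint:
    """A named geometric predicate that every pose along a path must satisfy."""
    name: str
    predicate: Callable[[Pose], bool]


def upright(max_tilt: float) -> ConceptConstraint:
    # Hypothetical example concept, e.g. "keep the cup upright": bound the gripper tilt.
    return ConceptConstraint("upright", lambda p: abs(p.tilt) <= max_tilt)


def above_table(min_height: float) -> ConceptConstraint:
    # Hypothetical example concept, e.g. "don't drag it across the table": bound the height.
    return ConceptConstraint("above_table", lambda p: p.z >= min_height)


def violations(path: List[Pose], constraints: List[ConceptConstraint]) -> List[Tuple[int, str]]:
    """Return (waypoint index, constraint name) for every violated constraint."""
    return [
        (i, c.name)
        for i, pose in enumerate(path)
        for c in constraints
        if not c.predicate(pose)
    ]


if __name__ == "__main__":
    path = [Pose(0.0, 0.0, 0.30, 0.0), Pose(0.1, 0.0, 0.30, 0.5), Pose(0.2, 0.0, 0.05, 0.0)]
    constraints = [upright(max_tilt=0.17), above_table(min_height=0.10)]
    print(violations(path, constraints))  # [(1, 'upright'), (2, 'above_table')]
```

Keeping each constraint as a small named predicate means the same objects could, in principle, be handed to a planner as validity checks and also reported back to the human teacher by name.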

For more information about the speaker and related papers, please see this website.

Daniel Rakita “Methods and Applications for Generating Accurate and Feasible Robot-arm Motions in Real-time”

Daniel Rakita is a Ph.D. student of computer science at the University of Wisconsin-Madison advised by Michael Gleicher and Bilge Mutlu. He received a Bachelor’s degree in Music Performance from the Indiana University Jacobs School of Music in 2012.

In this talk, he gave an overview of technical methods his group has developed that attempt to achieve feasible, accurate, and time-sensitive robot-arm motions. In particular, he detailed their inverse kinematics solver, RelaxedIK, which uses both non-linear optimization and machine learning to achieve a smooth, feasible, and accurate mapping from end-effector space to joint space on the fly. He highlighted numerous ways they have applied these methods to real-world-inspired problems, such as mapping human-arm motion to robot-arm motion in real time to afford effective shared-control interfaces, and automatically moving a camera-in-hand robot in a remote setting to optimize the viewpoint for a teleoperator.
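The sketch below illustrates the general idea of optimization-based inverse kinematics that the talk builds on. It is not RelaxedIK: it assumes a toy planar two-link arm with unit link lengths, a hand-picked cost (squared end-effector position error plus a hypothetical `w_smooth` penalty that discourages large jumps from the previous joint configuration), and plain gradient descent with finite-difference gradients. RelaxedIK’s actual objectives, weights and solver differ, and its machine-learning component is omitted entirely.

```python
import math
from typing import List, Sequence, Tuple

LINK_LENGTHS = (1.0, 1.0)  # assumed link lengths of a toy planar 2-link arm


def forward_kinematics(q: Sequence[float]) -> Tuple[float, float]:
    """End-effector (x, y) for joint angles q = (q1, q2) in radians."""
    l1, l2 = LINK_LENGTHS
    x = l1 * math.cos(q[0]) + l2 * math.cos(q[0] + q[1])
    y = l1 * math.sin(q[0]) + l2 * math.sin(q[0] + q[1])
    return x, y


def cost(q, target, q_prev, w_smooth=0.01):
    """Squared position error plus a weighted penalty on distance from q_prev."""
    x, y = forward_kinematics(q)
    accuracy = (x - target[0]) ** 2 + (y - target[1]) ** 2
    smoothness = sum((a - b) ** 2 for a, b in zip(q, q_prev))
    return accuracy + w_smooth * smoothness


def solve_ik(target, q_prev, steps=2000, lr=0.1, eps=1e-6) -> List[float]:
    """Minimize cost() by gradient descent, warm-started at the previous solution."""
    q = list(q_prev)
    for _ in range(steps):
        grad = []
        for i in range(len(q)):
            # Central finite difference along joint i.
            q_plus, q_minus = q.copy(), q.copy()
            q_plus[i] += eps
            q_minus[i] -= eps
            diff = cost(q_plus, target, q_prev) - cost(q_minus, target, q_prev)
            grad.append(diff / (2 * eps))
        q = [qi - lr * gi for qi, gi in zip(q, grad)]
    return q


if __name__ == "__main__":
    q_prev = [0.3, 0.8]
    q = solve_ik((1.0, 1.0), q_prev)
    print("joints:", q, "end-effector:", forward_kinematics(q))
```

Warm-starting from the previous configuration and penalizing distance from it is one simple way to keep successive solutions close together when IK is called repeatedly in real time; the trade-off is that a larger `w_smooth` leaves a larger end-effector error.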

For more information about the speaker and related papers, please see this website.

Barbara Bruno “Culture-Aware Robotics”

Barbara Bruno is a post-doc researcher at the École Polytechnique Fédérale de Lausanne (EPFL), in Lausanne, Switzerland, in the CHILI lab. Barbara received the M.Sc. and the Ph.D. in Robotics from the University of Genoa in 2011 and 2015, respectively. She is part of the NCCR Robotics organisation and currently involved in the EU ITN ANIMATAS.

In this talk, she explored how existing quantitative and qualitative methods for the assessment of culture, and cultural differences, can be combined with knowledge representation and reasoning tools such as ontologies and fuzzy controllers to endow robots with the capability of taking cultural factors into account in a range of tasks going from low-level motion planning to high-level dialogue management and user adaptation.

For more information about the speaker and related papers, please see this website.

Extra: When you forget to hit the record button but you value the speaker so much that you compose a musical summary of his talk

Because as they say, we are human after all!

Nils Hagberg “A call for a Human Approach to Technology”

Nils Hagberg is the Product Owner at Furhat Robotics. He is a computational linguist with almost ten years of industry experience in human-machine interaction and conversational technology.

In this talk, he gave a few examples of human-centric business case designs that he has come across earlier in his career and at Furhat Robotics, the social robotics company that has set out to make technology more human. His hope is that the talk will put your own work in a larger context and nudge you toward a path that ensures humans will be allowed to remain human.

For more information about the speaker and related papers, please see this website.

iRobot Education expands its free coding platform with social-emotional learning, multi-language support

iRobot Corp. unveiled new coding resources through iRobot Education that promote more inclusive, equitable access to STEM education and support social-emotional development. iRobot also updated its iRobot Coding App with the introduction of Python coding support and a new 3D Root coding robot simulator environment that is ideal for hybrid and remote learning landscapes.

The updates coincide with the annual National Robotics Week, a time when kids, parents and teachers across the nation tap into the excitement of robotics for STEM learning.

Supporting Social and Emotional Learning

The events of the past year changed the traditional learning environment, with students, families and educators adapting to hybrid and remote classrooms. Conversations about diversity, equity and inclusion have also taken on increased importance in the classroom. To address this, iRobot Education has introduced social and emotional learning (SEL) lessons to its Learning Library that tie SEL competencies, like peer interaction and responsible decision-making, into coding and STEM curriculum. These SEL lessons, such as The Kind Playground, Seeing the Whole Picture and Navigating Conversations, give educators new resources that help students build emotional intelligence and become responsible global citizens through a STEM lens.

Language translations for iRobot Coding App

More students can now enjoy the free iRobot Coding App with the introduction of Spanish, French, German, Czech and Japanese language support. iRobot’s mobile and web coding app offers three progressively challenging levels of coding language that advance users from graphical coding to hybrid coding, followed by full-text coding. Users around the world can now translate the graphical and hybrid block coding levels into their preferred language, helping beginners and experts alike hone their language and computational thinking skills.

Introducing Python coding language support

One of the most popular coding languages, Python is now available to iRobot Coding App users in level 3, full-text coding. This new functionality provides an avenue to gain more complex coding experience in a coding language that is currently used in both academic and professional capacities worldwide, preparing the next generation of students for STEM curriculums and careers.

New Root Coding Robot 3D Simulator

Ready to code in 3D? The iRobot Coding App is uniquely designed to help kids learn coding at home and in school, with a virtual Root coding robot available for free within the app. iRobot updated the virtual Root SimBot with a fun and interactive 3D experience, allowing students to control their programmable Root® coding robot right on the screen.

“The COVID-19 pandemic has had, and continues to have, a tangible impact on students who’ve been learning in remote environments, which is why we identified solutions to nurture and grow SEL skills in students,” said Colin Angle, chairman and CEO of iRobot. “The expansion of these new iRobot Education resources, which are free to anyone, will hopefully facilitate greater inclusivity and accessibility for those who want to grow their coding experience and pursue STEM careers.”

In celebration of National Robotics Week, iRobot Education will also release weekly coding challenges focused on learning how to use code to communicate. Each challenge can be completed online in the iRobot Coding App with the Root SimBot or in-person with a Root coding robot. The weekly challenges build upon each other and include guided questions to facilitate discussions about the coding process, invite reflections, and celebrate new learning.

For more information on iRobot Education, Root coding robots and the iRobot Coding App, visit: https://edu.irobot.com/.

Information about National Robotics Week can be found at www.nationalroboticsweek.org.