How to build a robotics startup: getting the team right

This episode is about understanding why you can’t build your startup alone, and some criteria to properly select your co-founders.

In this podcast series, we are going to explain step by step how to create a robotics startup.

We are going to learn how to select your co-founders, your team, how to look for investors, how to test your ideas, how to get customers, how to reach your market, how to build your product… Starting from zero, how to build a successful robotics startup.

I’m Ricardo Tellez, CEO and co-founder of The Construct, a robotics startup that delivers the best learning experience to become a ROS Developer, that is, to learn how to program robots with ROS.

Our company is already 5 years old, and we are a team of 10 people working around the world. We have more than 100,000 students, and tens of universities around the world use our online academy to provide a teaching environment for their students.

We have bootstrapped our startup, but we also tried (unsuccessfully) to get investors. We have done a few pivots and finally ended up where we are right now.

With all this experience, I’m going to teach you how to build your own startup. We are going to go through the process by creating another startup ourselves, so you can see along the way how to create your own. You are going to witness the creation of a robotics startup.

Subscribe to the podcast using any of the following methods:

- ROS Developers Podcast on iTunes

- ROS Developers Podcast on Stitcher

- ROS Developers Podcast on Spotify

Or watch the video

The post 91. How to build a robotics startup: getting the team right appeared first on The Construct.

Best Resources to Learn Deep Learning Theory

Robot artist sells art for $688,888, now eyeing music career

A robot that senses hidden objects

By Daniel Ackerman | MIT News Office

In recent years, robots have gained artificial vision, touch, and even smell. “Researchers have been giving robots human-like perception,” says MIT Associate Professor Fadel Adib. In a new paper, Adib’s team is pushing the technology a step further. “We’re trying to give robots superhuman perception,” he says.

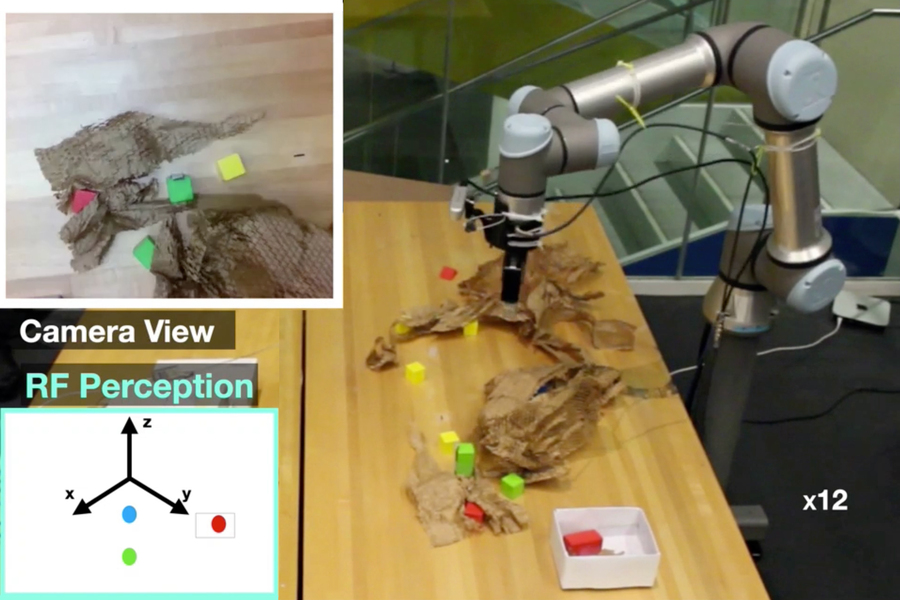

The researchers have developed a robot that uses radio waves, which can pass through walls, to sense occluded objects. The robot, called RF-Grasp, combines this powerful sensing with more traditional computer vision to locate and grasp items that might otherwise be blocked from view. The advance could one day streamline e-commerce fulfillment in warehouses or help a machine pluck a screwdriver from a jumbled toolkit.

The research will be presented in May at the IEEE International Conference on Robotics and Automation. The paper’s lead author is Tara Boroushaki, a research assistant in the Signal Kinetics Group at the MIT Media Lab. Her MIT co-authors include Adib, who is the director of the Signal Kinetics Group; and Alberto Rodriguez, the Class of 1957 Associate Professor in the Department of Mechanical Engineering. Other co-authors include Junshan Leng, a research engineer at Harvard University, and Ian Clester, a PhD student at Georgia Tech.

As e-commerce continues to grow, warehouse work is still usually the domain of humans, not robots, despite sometimes-dangerous working conditions. That’s in part because robots struggle to locate and grasp objects in such a crowded environment. “Perception and picking are two roadblocks in the industry today,” says Rodriguez. Using optical vision alone, robots can’t perceive the presence of an item packed away in a box or hidden behind another object on the shelf — visible light waves, of course, don’t pass through walls.

But radio waves can.

For decades, radio frequency (RF) identification has been used to track everything from library books to pets. RF identification systems have two main components: a reader and a tag. The tag is a tiny computer chip that gets attached to — or, in the case of pets, implanted in — the item to be tracked. The reader then emits an RF signal, which gets modulated by the tag and reflected back to the reader.

The reflected signal provides information about the location and identity of the tagged item. The technology has gained popularity in retail supply chains — Japan aims to use RF tracking for nearly all retail purchases in a matter of years. The researchers realized this profusion of RF could be a boon for robots, giving them another mode of perception.

“RF is such a different sensing modality than vision,” says Rodriguez. “It would be a mistake not to explore what RF can do.”

RF-Grasp uses both a camera and an RF reader to find and grab tagged objects, even when they’re fully blocked from the camera’s view. It consists of a robotic arm attached to a grasping hand. The camera sits on the robot’s wrist. The RF reader stands independent of the robot and relays tracking information to the robot’s control algorithm. So, the robot is constantly collecting both RF tracking data and a visual picture of its surroundings. Integrating these two data streams into the robot’s decision making was one of the biggest challenges the researchers faced.

“The robot has to decide, at each point in time, which of these streams is more important to think about,” says Boroushaki. “It’s not just eye-hand coordination, it’s RF-eye-hand coordination. So, the problem gets very complicated.”

The robot initiates the seek-and-pluck process by pinging the target object’s RF tag for a sense of its whereabouts. “It starts by using RF to focus the attention of vision,” says Adib. “Then you use vision to navigate fine maneuvers.” The sequence is akin to hearing a siren from behind, then turning to look and get a clearer picture of the siren’s source.

With its two complementary senses, RF-Grasp zeroes in on the target object. As it gets closer and even starts manipulating the item, vision, which provides much finer detail than RF, dominates the robot’s decision making.
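The handoff described above, with RF guiding the coarse approach and vision taking over near the target, can be illustrated with a simple distance-based blend. This is only a minimal sketch and not the researchers' algorithm: the function names, the sigmoid weighting, and the `handoff_m` and `sharpness` parameters are all hypothetical choices made for illustration.

```python
import math

def vision_weight(distance_m, handoff_m=0.3, sharpness=10.0):
    """Hypothetical weight in (0, 1) given to the camera estimate.

    Close to the target the weight approaches 1 (vision dominates);
    far away it approaches 0 (RF dominates). handoff_m is the assumed
    distance at which both senses are trusted equally.
    """
    return 1.0 / (1.0 + math.exp(sharpness * (distance_m - handoff_m)))

def fuse_estimates(rf_xyz, vision_xyz, distance_m):
    """Blend a coarse RF position with a fine visual position.

    If the target is fully occluded, the camera has no estimate
    (vision_xyz is None) and the RF estimate is used on its own.
    """
    if vision_xyz is None:
        return tuple(rf_xyz)
    w = vision_weight(distance_m)
    return tuple(w * v + (1.0 - w) * r for r, v in zip(rf_xyz, vision_xyz))
```

For example, `fuse_estimates((1.0, 0.2, 0.5), None, 1.5)` falls back to the RF position because the camera sees nothing, while the same call with a visual estimate and a small distance returns a point close to what the camera reports.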

RF-Grasp proved its efficiency in a battery of tests. Compared to a similar robot equipped with only a camera, RF-Grasp was able to pinpoint and grab its target object with about half as much total movement. Plus, RF-Grasp displayed the unique ability to “declutter” its environment, removing packing materials and other obstacles in its way in order to access the target. Rodriguez says this demonstrates RF-Grasp’s “unfair advantage” over robots without penetrative RF sensing. “It has this guidance that other systems simply don’t have.”

RF-Grasp could one day perform fulfillment in packed e-commerce warehouses. Its RF sensing could even instantly verify an item’s identity without the need to manipulate the item, expose its barcode, then scan it. “RF has the potential to improve some of those limitations in industry, especially in perception and localization,” says Rodriguez.

Adib also envisions potential home applications for the robot, like locating the right Allen wrench to assemble your Ikea chair. “Or you could imagine the robot finding lost items. It’s like a super-Roomba that goes and retrieves my keys, wherever the heck I put them.”

The research is sponsored by the National Science Foundation, NTT DATA, Toppan, Toppan Forms, and the Abdul Latif Jameel Water and Food Systems Lab (J-WAFS).

Co-Learning of Task and Sensor Placement for Soft Robotics

Speed Reducers & Proper Shaft Alignment for Motion Control Applications

Towards mapping unknown environments with a robot swarm

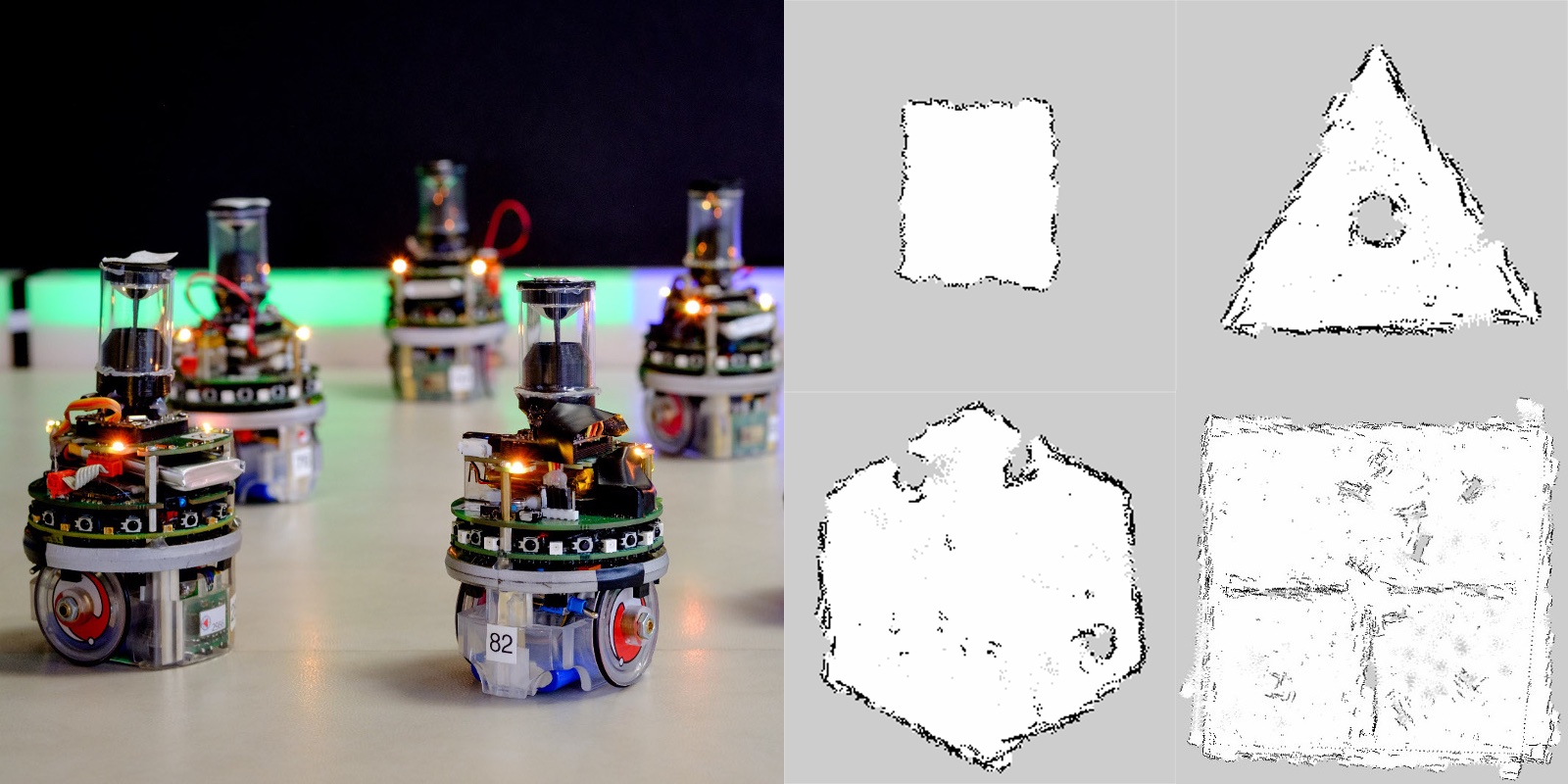

Mapping is an essential task in many robotics applications. A map is a representation of the environment generated from the robots’ positions and sensor data. A map can either be used to navigate the robot that built it or be shared with other agents: humans, software, or robots. To build a map, it is frequently assumed that the positions of the robots are a priori unknown and need to be estimated during operation. Accordingly, the problem that robots must solve is known as simultaneous localization and mapping (SLAM). This problem has been extensively studied in the past decades. As a result, a large number of methods have been developed to generate various types of maps, in different environments, and using data gathered by a broad range of sensors. However, most of these methods were conceived for single-robot systems. Multi-robot SLAM is a more recent research direction that addresses the collective exploration and mapping of unknown environments by multi-robot systems. Yet, most results so far have been achieved for small groups of robots. Multi-robot SLAM is still a growing field, and a number of research directions are yet to be explored. Among them, swarm SLAM is an alternative, promising approach that takes advantage of the characteristics of robot swarms.

A robot swarm is a decentralized multi-robot system that can collectively accomplish missions that a single robot could not accomplish alone. Compared with centralized multi-robot systems, robot swarms present unique characteristics. First, a swarm needs neither global knowledge nor external infrastructure to operate, and robots in a swarm only interact with close peers and the neighboring environment. This allows robot swarms to comprise an arbitrarily high number of robots without decreasing their performance (scalability). Second, as swarms are decentralized and self-organized, individual robots can dynamically allocate themselves to different tasks and hence meet the requirements of specific environments and operating conditions, even if these conditions evolve at operation time (flexibility). Finally, a robot swarm is characterized by high redundancy resulting from the large number of robots composing it. Redundancy, together with the absence of centralized control, allows robot swarms to cope with the loss or failure of some robots, and also with noise thanks to redundancy of measurements (fault tolerance). Hence, locality of sensing and communication, self-organization, and redundancy enable desirable properties such as scalability, flexibility, and fault tolerance that make a robot swarm the ideal candidate to perform missions in large unknown environments in which the risk that individual robots fail or are lost is high.

It is our contention that robot swarms could perform SLAM in environments and under operating conditions that are not appropriate for individual robots and for centralized multi-robot systems. Indeed, the robots in a swarm can work in parallel and thus quickly cover large areas. This is especially useful in dynamic environments in which changes can occur unexpectedly. By exploring in parallel, robots could track changes in the environment, identify areas that evolve more rapidly, and autonomously allocate more resources (i.e., more robots) to these areas. Thanks to its fault tolerance, a robot swarm can also operate in dangerous environments, like sea depths or outer space, as losing a few robots will have little impact on the mission. This is also true cost-wise, as robots in a swarm are often relatively simple and cheap in comparison with other robotics systems. However, a limitation is that simple robots usually rely on low-quality sensors and therefore, at the moment, swarm systems cannot produce metric maps as precise as those produced by single robots and centralized multi-robot systems. Yet, one of the main interests of robot swarms lies in their capacity to quickly cover large areas. Hence, they are best suited for building abstract maps in time-constrained scenarios. Indeed, applications requiring a very precise map are typically not constrained by time, while time-constrained applications can cope with rough but informative maps. For example, a patrolling robot has sufficient time to build a complete map of the building it is supposed to protect before beginning its protection task. On the other hand, robots sent to explore a disaster area and to locate survivors can quickly give rescuers an approximate path to the victims’ location.
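The idea that robots could autonomously send more of the swarm to areas that evolve more rapidly can be sketched with a simple local rule. The example below is an illustrative assumption, not a method from our research: each robot independently picks an area with probability proportional to the change rate it has observed there, so no central coordinator is involved. The name `choose_area` and the change-rate values are hypothetical.

```python
import random

def choose_area(change_rates, rng=random):
    """Pick the area one robot explores next.

    change_rates maps an area name to a non-negative, locally observed
    rate of change (hypothetical units). Areas that change faster are
    chosen more often; every robot runs this rule on its own.
    """
    areas = list(change_rates)
    weights = [change_rates[a] for a in areas]
    if sum(weights) <= 0:
        # Nothing is known to be changing: explore uniformly at random.
        return rng.choice(areas)
    return rng.choices(areas, weights=weights, k=1)[0]

# Usage: 100 simulated robots each decide independently.
rng = random.Random(0)
rates = {"corridor": 1.0, "collapsed_wing": 9.0}
picks = [choose_area(rates, rng) for _ in range(100)]
```

Because each decision only uses information the robot can gather itself, the rule keeps working when robots are added or lost, which matches the scalability and fault tolerance properties discussed earlier.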

At the moment, swarm robotics research has achieved many significant results but lacks proper applications. As map building underlies many robotic behaviors, swarm SLAM is a step toward deploying robot swarms in real-world scenarios. We believe that it could play an important role in time- or cost-constrained scenarios or for monitoring dynamic environments.

Our research in swarm SLAM is supported by the European Research Council (grant agreement No 681872) and the Belgian Fonds de la Recherche Scientifique–FNRS.