Nikolas Martelaro’s talk – Remote user research for human-robot interaction – with video

On Friday the 11th of December, Nikolas Martelaro (Assistant Professor at Carnegie Mellon’s Human-Computer Interaction Institute) gave an online seminar on ways robot design teams can do remote user research now (in these COVID-19 times) and in the future. If you missed it, you can now watch the recorded livestream.

About the speaker

Nikolas Martelaro is an Assistant Professor at Carnegie Mellon’s Human-Computer Interaction Institute. Martelaro’s lab focuses on augmenting designers’ capabilities through new technology and design methods. His interest in developing new ways to support designers stems from his interest in creating interactive and intelligent products. Martelaro blends a background in product design methods, interaction design, human-robot interaction, and mechatronic engineering to build tools and methods that allow designers to understand people better and to create more human-centered products. Before moving to the HCII, Martelaro was a researcher in the Digital Experiences group at the Accenture Technology Labs. He graduated with a Ph.D. in Mechanical Engineering from Stanford’s Center for Design Research, where he was co-advised by Larry Leifer and Wendy Ju.

Abstract

COVID-19 has led to a decrease in in-person user research. While designers who work on software can shift to all-digital remote research methods, people who work on hardware systems, including robots, are left with limited options. In this talk, I will discuss some ways robot design teams can do remote user research now and in the future. I will draw on past work in human-computer interaction as well as my own work on systems that allow design teams to conduct remote observation and interaction prototyping. While things can be challenging for user research teams today, I believe that with some creative new methods, we can expand our ability to do user research for robotics from anywhere in the world.

#CYBATHLON2020GlobalEdition winners of the powered wheelchair race (with interview + story of pilot)

Continuing this series on the CYBATHLON 2020 winners, today we feature the victory of the HSR Enhanced team from the Eastern Switzerland University of Applied Sciences (OST). We also interviewed this year's team leader, Christian Bermes.

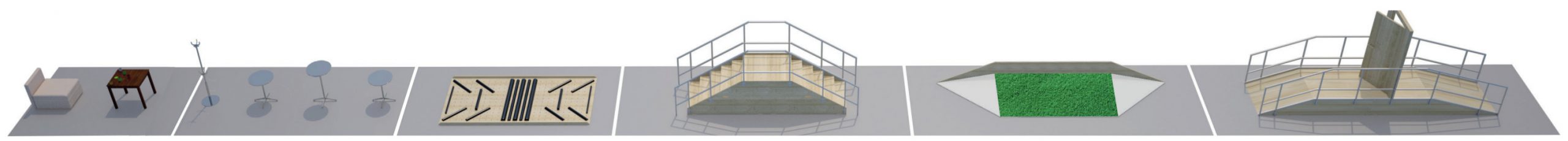

In this race, pilots with a severe walking disability from seven teams competed against each other in motorized wheelchairs. As the CYBATHLON organizers describe, “motorized wheelchairs can make everyday life much easier for people with a walking disability. The important thing is that they can overcome obstacles such as ramps and yet are not too large to drive under a normal table. Motorized wheelchairs that are controlled by joystick, tongue control, touchpad or other technologies are eligible for this race and are characterized by innovative approaches to overcome obstacles such as stairs”. The challenge for the pilots was to complete the following tasks:

(1) Driving up to a table until half of the thighs were covered, without displacing the table, to test the size and seat height of the wheelchair.

(2) Driving through furniture without displacing it to test the size of the wheelchair and precise maneuverability.

(3) Crossing uneven terrain to test the grip of the wheels, ground clearance and power.

(4) Ascending and descending stairs, and bringing the wheelchair to a standstill while descending, to test the ability to climb and descend stairs in a controlled manner, and power.

(5) Driving across a tilted path with different surfaces to test drift and tipping stability, and power.

(6) Driving up and down a ramp and opening and closing the door in the middle using externally powered technical support (e.g., a robotic arm), to test precise maneuverability and control of the technical support in a confined space.

This year, team HSR Enhanced with pilot Florian Hauser was unbeatable again, just as in the 2016 edition. The silver medal went to team Caterwil from Russia with pilot Iurii Larin, and third place went to team Fortississimo from Japan with pilot Hiroshi Nojima. Here’s a summary of the races of the top four finalists (from 1:01:49 in the recording):

You can see the results from the rest of the teams in this discipline here, or watch the recorded livestreams of both days on their website.

Interview with Christian Bermes – (ex-)team leader of the HSR Enhanced team

We had the pleasure of interviewing Christian Bermes, team leader of the HSR Enhanced team in both the 2016 and 2020 editions. After CYBATHLON 2020, he handed over the team leadership, as he moved from OST to become Professor for Mobile Robotics at the University of Applied Sciences of the Grisons.

D. C. Z.: What does it mean for your team to have won in your CYBATHLON category?

C.B.: It is a huge confirmation that our first win in 2016 was not just a coincidence, but again the result of human-centered innovation together with our pilot Florian Hauser, meticulous engineering, proper prior planning, hard training and, of course, a next-level pilot performance. Needless to say, there was a certain amount of luck involved, too – and hard work puts you where luck finds you.

D. C. Z.: And what does it mean for people with disabilities?

C.B.: I find it hard to answer this in general terms. Our wheelchair as it is right now will not enter a mass market; however, some of its modules could if we found partners for industrialization. I just think it’s great that people with disabilities are the figureheads and heroes of CYBATHLON. They have prepared themselves in the most professional way, have unmatched control over their machines and are simply impressive.

D. C. Z.: What are still your challenges?

C.B.: Right now we are enjoying the weightlessness of the win. Soon, CYBATHLON will publish the new race obstacles for 2024, and I am 100% sure that there will be many technical challenges right away. Moreover, budget has to be secured, sponsors must be found, the team must be sworn in, and much more. The goal for 2024 is clear: win another title. I have full confidence in the new lead crew and their team; they will outperform everything we have seen from HSR Enhanced until this day. And with Florian Hauser as pilot, we will see lightning speed on race day.

The story of pilot Florian Hauser

The organizers released a series of videos telling the personal stories of some of the competing pilots. The pilot of the HSR Enhanced team, Florian Hauser, “is a tetraplegic since he had a motorcycle accident in 2014. However, this does not prevent him from riding fast. Not on a bike anymore, but in his wheelchair,” as the organizers describe. Apart from winning his discipline in the 2020 edition, Florian also won CYBATHLON 2016 and the CYBATHLON Wheelchair Series in Japan.

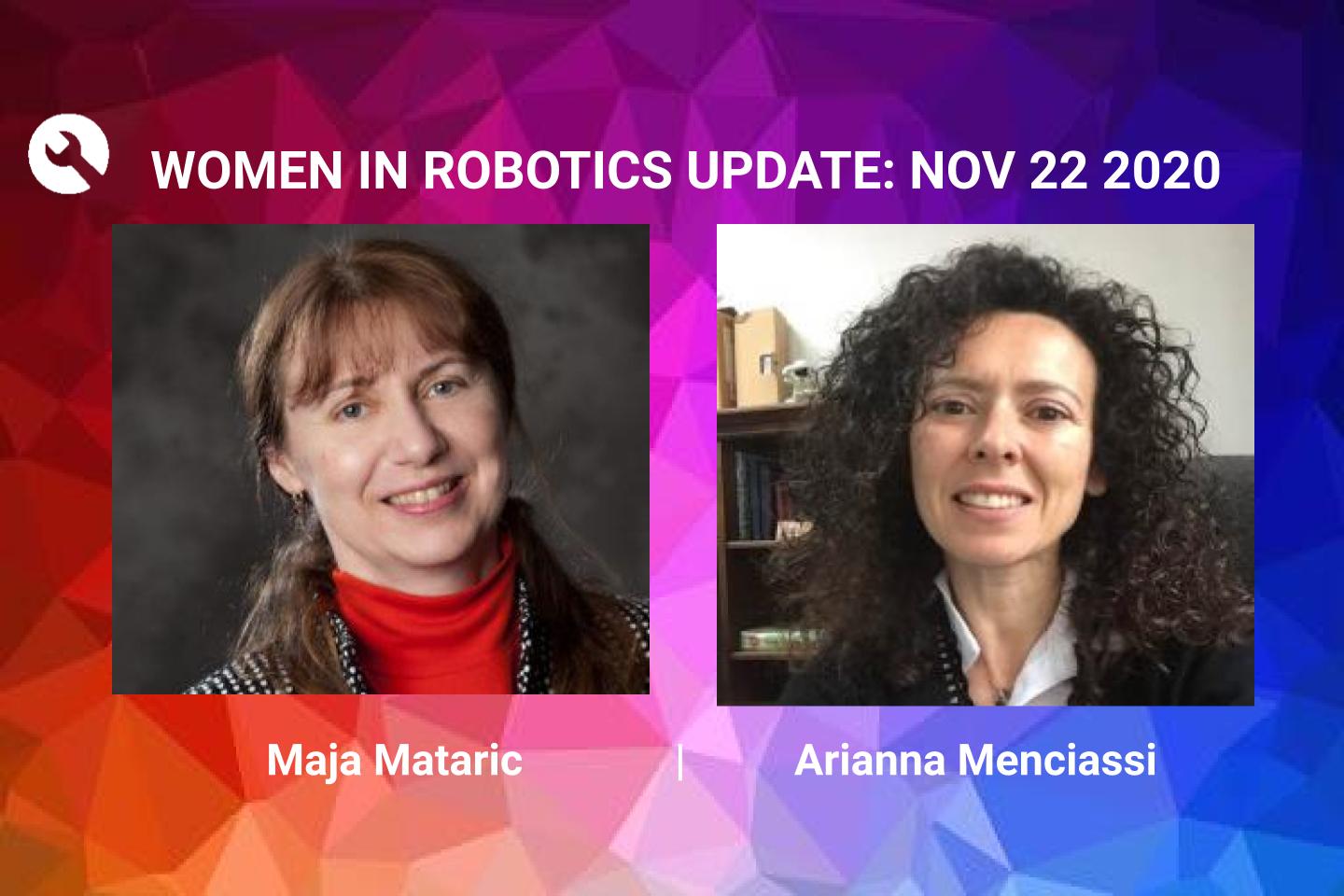

Women in Robotics Update: Maja Mataric, Arianna Menciassi

Introducing the second in our new series of Women in Robotics Updates, featuring Maja Mataric and Arianna Menciassi from our first “25 women in robotics you need to know about” list in 2013. Since its beginning, Women in Robotics has focused on positive role models in robotics, highlighting women’s career work, but we’d like to point out just how much energy these amazing women devote to outreach, to inspiring and supporting their junior colleagues, and to science management, supporting and advancing the increasingly complex machinery of research.

For example, Arianna Menciassi has held many editorial and technical committee roles and manages both European and extra-European research projects, which involve extensive collaboration. And Maja Mataric started the US Women in Robotics Research Database, which inspired similar initiatives in Canada, with the goal that it should always be possible to find a female robotics researcher for interviews, positions, panels and conferences.

Maja Mataric

Interim Vice President of Research at the University of Southern California | Founder of Embodied Inc

Maja Matarić (featured 2013) is now Interim Vice President of Research at the University of Southern California (USC) and the founding director of the USC Center for Robotics and Embedded Systems. She is a pioneer of socially assistive robotics (SAR), which focuses on developing robots that provide therapy and care through social interaction, especially for special-needs populations; her Interaction Lab has worked with children with autism, stroke patients, elderly users with Alzheimer’s, and many others. Matarić received the Distinguished Professor award at USC in 2019. She became a Fellow of the Association for the Advancement of Artificial Intelligence (AAAI) in 2017, and in 2015 she was named one of the Top 100 Inspiring Women in STEM by Insight into Diversity. Matarić also received the Anita Borg Institute Women of Vision Award in Innovation in 2013 and the Presidential Award for Excellence in Science, Mathematics, and Engineering Mentoring (PAESMEM) in 2011. She has more than 650 publications and 39,000 citations, and she is passionate about mentoring and empowering students and about communicating the excitement of interdisciplinary research and careers in STEM to a diverse audience, including K-12 students and teachers, women, and other underrepresented groups in engineering. In 2016, Matarić founded Embodied Inc, which in 2020 launched Moxie, a socially assistive robot for child development that provides “play-based learning that is paced to weekly themes and missions with content designed to promote social, emotional, and cognitive learning”. As Liz Ohanion at KCET said, “Maja Mataric is a robotics powerhouse and, when she’s not inspiring the next generation of engineers, she’s working on a series of robots that could very well change the lives of the people who use them.”

Arianna Menciassi

Full professor at Scuola Superiore Sant’Anna

Arianna Menciassi (featured in 2013) is now a full professor of Biomedical Engineering at Scuola Superiore Sant’Anna (SSSA). She is also team leader of the “Surgical Robotics & Allied Technologies” Area at The BioRobotics Institute at SSSA, where she has been advancing intelligent devices that allow medical and surgical procedures to be performed in a minimally invasive way that is increasingly reliable, reproducible and safe. As Menciassi says in her interview at Autonomous Robotic Surgery: “I am looking for solutions for giving the best care to the patients not only using scissor and knife but also using energy for example ultra sounds, focused ultra sounds, when you take a pill this is sort of autonomous treatment, this is not an autonomous robot but it is an autonomous treatment.” Menciassi received the Women Innovation Award for female scientists in biomedical robotics from WomenTech in 2017. In 2016, her SupCam project, a cost-effective and minimally invasive endoscopic device, was recognized as a Special Electronic Design at the Compasso d’Oro (Golden Compass) awards of ADI, the Associazione per il Disegno Industriale (Association for Industrial Design). In 2015, the FUTURA project, a novel robotic platform for Focused Ultrasound Surgery (FUS) in clinics coordinated by Menciassi, received the Technology Award of the Society for Medical Innovation and Technology (SMIT). In an already prolific career, Menciassi has more than 19,000 citations, 650 publications, 7 book chapters and almost 50 patents to her name, and she continues to advance the fields of surgical and biomedical robotics. Her vision for the future is ambitious: “Maybe in 30 years all drugs will be more robotic and let’s say autonomous because they will be able to reach some specific areas of the human body to treat cells or to treat a disease.”

Want to keep reading? There are 180 more stories on our 2013 to 2020 lists. Why not nominate someone for inclusion next year?

And we encourage #womeninrobotics and women who’d like to work in robotics to join our professional network at http://womeninrobotics.org

#CYBATHLON2020GlobalEdition winners of the powered exoskeleton race (with interview)

The latest edition of CYBATHLON took place on 13-14 November 2020. This competition, created by ETH Zurich and run as a non-profit project, aims to advance the research and development of assistive technology by involving developers, people with disabilities, and the general public. We had the chance to interview the winning team of the powered exoskeleton race, Angel Robotics from South Korea.

In this race, pilots with complete thoracic or lumbar spinal cord injury from nine teams competed using exoskeletons. These wearable, powered supports enable them to walk and master other everyday tasks. Indeed, the motivation behind this race is that “the use of exoskeletons is still rare, they are currently mainly used for physiotherapy in hospitals and rehabilitation centers. Exoskeletons dramatically increase the mobility of people with paraplegia, which consequently improves their overall physical and psychological health and therefore might represent a welcome addition to a wheelchair”, as the organizers of CYBATHLON state. This race involved:

(1) Sitting down & standing up from a sofa, and stacking cups while standing next to a table to test the range of motion and strength in the knee and hip joints, and stability.

(2) Slaloming around furniture without displacing it to test precision of steps and agility.

(3) Crossing uneven terrain to test precision of steps and adaptation of step lengths and widths.

(4) Climbing and descending stairs to test range of motion and strength in the knee and hip joints, and step precision.

(5) Walking across a tilted path to test the lateral range of motion in hip and foot joints, and stability.

(6) Climbing a ramp, opening and closing the door in the middle of the ramp, and descending the ramp to test the range of motion in foot, knee and hip joints, stability and maneuvering in confined spaces.

The top three teams were the South Korean company Angel Robotics (1) with pilot Byeong-Uk Kim, TWIICE from the EPFL research group REHAssist with pilot Silke Pan, and Angel Robotics (2) with pilot Lee Joo-Hyun. Remarkably, all three achieved the highest score of 100 points, so the podium was decided by finishing time. To see just how tight the races were, watch the recorded livestream (from 3:10:30).

You can see the results from the rest of the teams in this discipline here, or watch the recorded livestreams of both days on their website.

Interview with Kyoungchul Kong – team leader of the Angel Robotics team

We had the pleasure of interviewing Kyoungchul Kong, team leader and CEO of Angel Robotics (1 & 2). He is also an Associate Professor at KAIST (Korea Advanced Institute of Science and Technology).

D. C. Z.: What does it mean for your team to have won in your CYBATHLON category?

K.K.: In WalkON Suit, the powered exoskeleton of Angel Robotics, there have been various dramatic technical advances. Since the first Cybathlon in 2016, its walking speed has become as fast as that of people without disabilities. The most important feature of WalkON Suit is its balance: as the center of mass is placed over the feet while standing straight, the wearer can stand without much effort for a long time. These superior functionalities of WalkON Suit were demonstrated by winning the Gold and Bronze medals at Cybathlon 2020.

D. C. Z.: And what does it mean for people with disabilities?

K.K.: While winning the Gold medal is glorious, winning two medals is especially meaningful. The physical conditions of the two pilots of Team Angel Robotics (i.e., the Gold medalist and the Bronze medalist) were extremely different. One was a male with a very strong upper body, while the other was a female with much less muscle mass. That such different people could both be successfully assisted by WalkON Suit means that the powered exoskeleton is not a technology optimized for a single user, but one that can be used by many people with different body conditions.

D. C. Z.: What are still your challenges?

K.K.: To bring the WalkON Suit into the daily lives of people who need this technology, it has to be much improved in terms of wearability, price, and weight. The user should be able to put on the robot without anyone else’s help, and it should be light enough to handle while sitting in a wheelchair. Price is another critical issue in practice. Within these constraints, the robot’s functionality and performance must not deteriorate. These are the challenges we are working hard to overcome.