By Taylor Kubota, Stanford News Service

Imagine rescuers searching for people in the rubble of a collapsed building. Instead of digging through the debris by hand or having dogs sniff for signs of life, they bring out a small, airtight cylinder. They place the device at the entrance of the debris and flip a switch. From one end of the cylinder, a tendril extends into the mass of stones and dirt, like a fast-climbing vine. A camera at the tip of the tendril gives rescuers a view of the otherwise unreachable places beneath the rubble.

This is just one possible application of a new type of robot created by mechanical engineers at Stanford University, detailed in a June 19 Science Robotics paper. Inspired by natural organisms that cover distance by growing — such as vines, fungi and nerve cells — the researchers have made a proof of concept of their soft, growing robot and have run it through some challenging tests.

“Essentially, we’re trying to understand the fundamentals of this new approach to getting mobility or movement out of a mechanism,” explained Allison Okamura, professor of mechanical engineering and senior author of the paper. “It’s very, very different from the way that animals or people get around the world.”

To investigate what their robot can do, the group created prototypes that move through various obstacles, travel toward a designated goal, and grow into a free-standing structure. This robot could serve a wide range of purposes, particularly in the realms of search and rescue and medical devices, the researchers said.

A growing robot

The basic idea behind this robot is straightforward. It’s a tube of soft material folded inside itself, like an inside-out sock. The tube grows in one direction as the material at its front everts, turning right-side-out. In the prototypes, the material was a thin, cheap plastic and the robot body everted when the scientists pumped pressurized air into the stationary end. In other versions, fluid could replace the pressurized air.

What makes this robot design extremely useful is that it moves the tip without moving the body.

“The body lengthens as the material extends from the end but the rest of the body doesn’t move,” explained Elliot Hawkes, a visiting assistant professor from the University of California, Santa Barbara and lead author of the paper. “The body can be stuck to the environment or jammed between rocks, but that doesn’t stop the robot because the tip can continue to progress as new material is added to the end.”
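The tip-only movement Hawkes describes can be illustrated with a toy model. The sketch below is not the researchers' code: the `GrowingRobot` class, its `grow` method and the 2D point representation are all hypothetical, chosen only to show that growth appends new material at the tip while every earlier part of the body stays where it was laid down.

```python
import math


class GrowingRobot:
    """Toy 2D model of a tip-growing robot (hypothetical, for illustration only)."""

    def __init__(self, base=(0.0, 0.0)):
        # The body is the list of points laid down so far; the last one is the tip.
        self.body = [base]
        self.heading = 0.0  # radians, measured from the x-axis

    def grow(self, length, turn=0.0):
        """Extend the tip by `length` after turning by `turn` radians."""
        self.heading += turn
        x, y = self.body[-1]
        tip = (x + length * math.cos(self.heading),
               y + length * math.sin(self.heading))
        # Only a new point is appended; existing body points are never modified.
        self.body.append(tip)
        return tip


robot = GrowingRobot()
robot.grow(1.0)
laid_down = list(robot.body)           # snapshot of the body so far
robot.grow(1.0, turn=math.pi / 2)      # turn left and keep growing
# The earlier body segments have not moved, even though the tip advanced.
print(robot.body[:len(laid_down)] == laid_down)
```

Because the points already laid down never change, a segment that is stuck or pinned in this model has no effect on later growth, which mirrors the behavior described in the quote.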

The group tested the benefits of this method for getting the robot from one place to another in several ways. It grew through an obstacle course, where it traveled over flypaper, sticky glue and nails and up an ice wall to deliver a sensor, which could potentially sense carbon dioxide produced by trapped survivors. It successfully completed this course even though it was punctured by the nails because the area that was punctured didn’t continue to move and, as a result, self-sealed by staying on top of the nail.

In other demonstrations, the robot lifted a 100-kilogram crate, grew under a door gap that was 10 percent of its diameter and spiraled on itself to form a free-standing structure that then sent out a radio signal. The robot also maneuvered through the space above a dropped ceiling, which showed how it was able to navigate unknown obstacles as a robot like this might have to do in walls, under roads or inside pipes. Further, it pulled a cable through its body while growing above the dropped ceiling, offering a new method for routing wires in tight spaces.

Difficult environments

“The applications we’re focusing on are those where the robot moves through a difficult environment, where the features are unpredictable and there are unknown spaces,” said Laura Blumenschein, a graduate student in the Okamura lab and co-author of the paper. “If you can put a robot in these environments and it’s unaffected by the obstacles while it’s moving, you don’t need to worry about it getting damaged or stuck as it explores.”

Some iterations of these robots included a control system that differentially inflated the body, which made the robot turn right or left. The researchers developed a software system that based direction decisions on images coming in from a camera at the tip of the robot.
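As a rough illustration of how a camera image could drive differential inflation, the sketch below maps a target's horizontal position in the image to left and right chamber pressures using simple proportional control. It is a minimal sketch under stated assumptions, not the researchers' software: the function name, the two-chamber layout, the pressure values and units, the gain, and the convention that extra pressure on one side bends the tip toward the other are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ChamberPressures:
    left: float   # hypothetical units, e.g. kPa
    right: float


def steer_from_image(target_x_px, image_width_px,
                     base_pressure=10.0, gain=5.0, max_delta=4.0):
    """Proportional steering: turn the tip toward a target seen by the tip camera.

    All parameters and defaults are illustrative assumptions.
    """
    center = image_width_px / 2.0
    # Normalized horizontal error in [-1, 1]; positive means target is to the right.
    error = (target_x_px - center) / center
    # Clamp the pressure difference so neither chamber is over- or under-inflated.
    delta = max(-max_delta, min(max_delta, gain * error))
    # Assumed convention: more pressure on the left lengthens that side,
    # bending the tip to the right (and vice versa).
    return ChamberPressures(left=base_pressure + delta,
                            right=base_pressure - delta)


# Target three-quarters of the way across a 640-pixel-wide image.
print(steer_from_image(480, 640))
```

A real controller would also need to locate the target in each frame and account for how the soft body actually responds to pressure, which is the modeling difficulty the researchers describe.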

A primary advantage of soft robots is that they can be safer than hard, rigid robots not only because they are soft but also because they are often lightweight. This is especially useful in situations where a robot could be moving in close quarters with a person. Another benefit, in the case of this robot, is that it is flexible and can follow complicated paths. This, however, also poses some challenges.

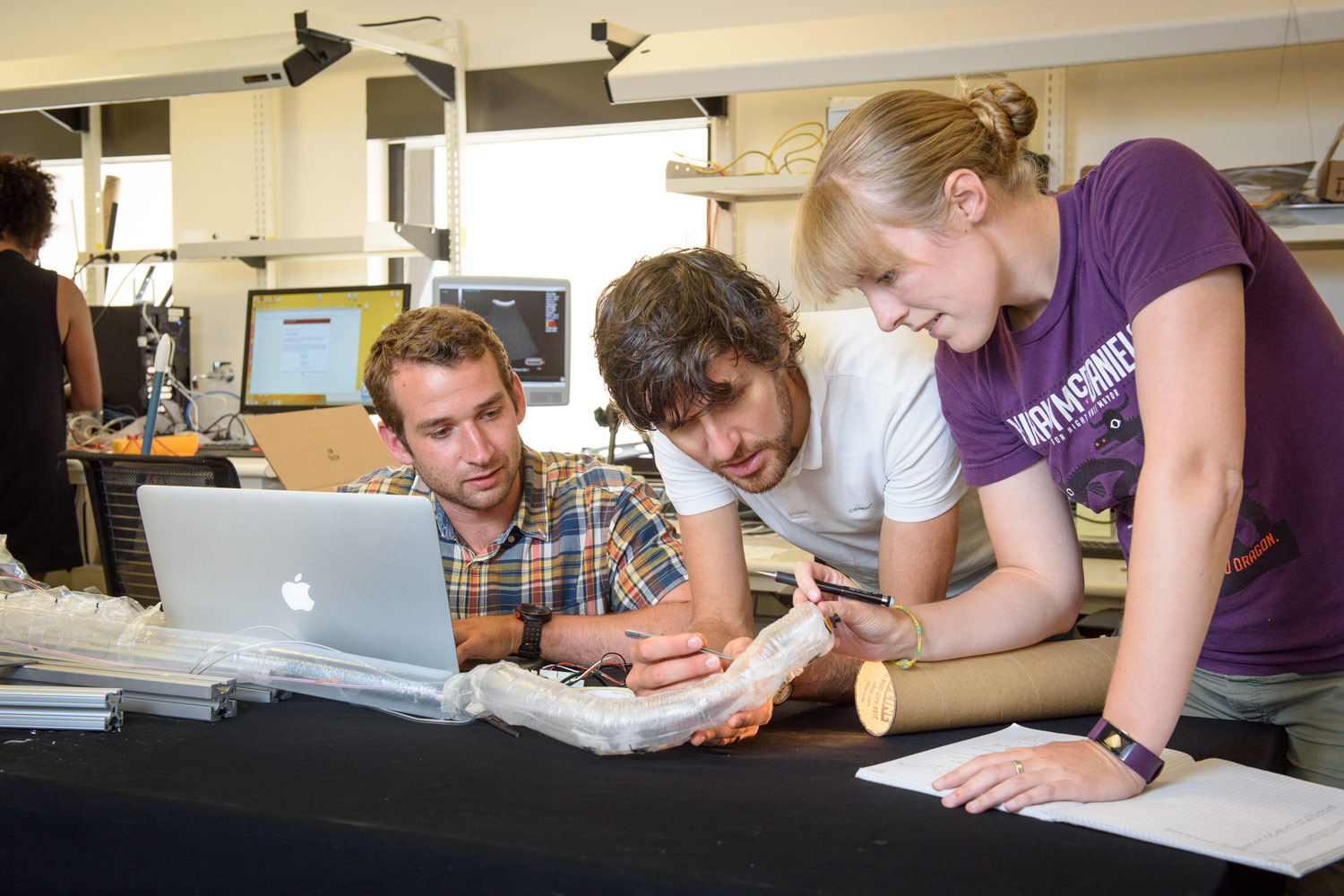

Joey Greer, a graduate student in the Okamura lab and co-author of the paper, said that controlling a robot requires a precise model of its motion, which is difficult to establish for a soft robot. Rigid robots, by comparison, are much easier to model and control, but are unusable in many situations where flexibility or safety is necessary. “Also, using a camera to guide the robot to a target is a difficult problem because the camera imagery needs to be processed at the rate it is produced. A lot of work went into designing algorithms that both ran fast and produced results that were accurate enough for controlling the soft robot,” Greer said.

Going big—and small

The current prototype was built by hand and is powered by pneumatic pressure. In the future, the researchers would like to create a version that would be manufactured automatically. Future versions may also grow using liquid, which could help deliver water to people trapped in tight spaces or put out fires in closed rooms. They are also exploring new, tougher materials, like rip-stop nylon and Kevlar.

The researchers also hope to scale the robot much larger and much smaller to see how it performs. They’ve already created a 1.8 mm version and believe small growing robots could advance medical procedures. In place of a tube that is pushed through the body, this type of soft robot would grow without dragging along delicate structures.

Okamura is a member of Stanford Bio-X and the Stanford Neurosciences Institute.

This research was funded by the National Science Foundation.

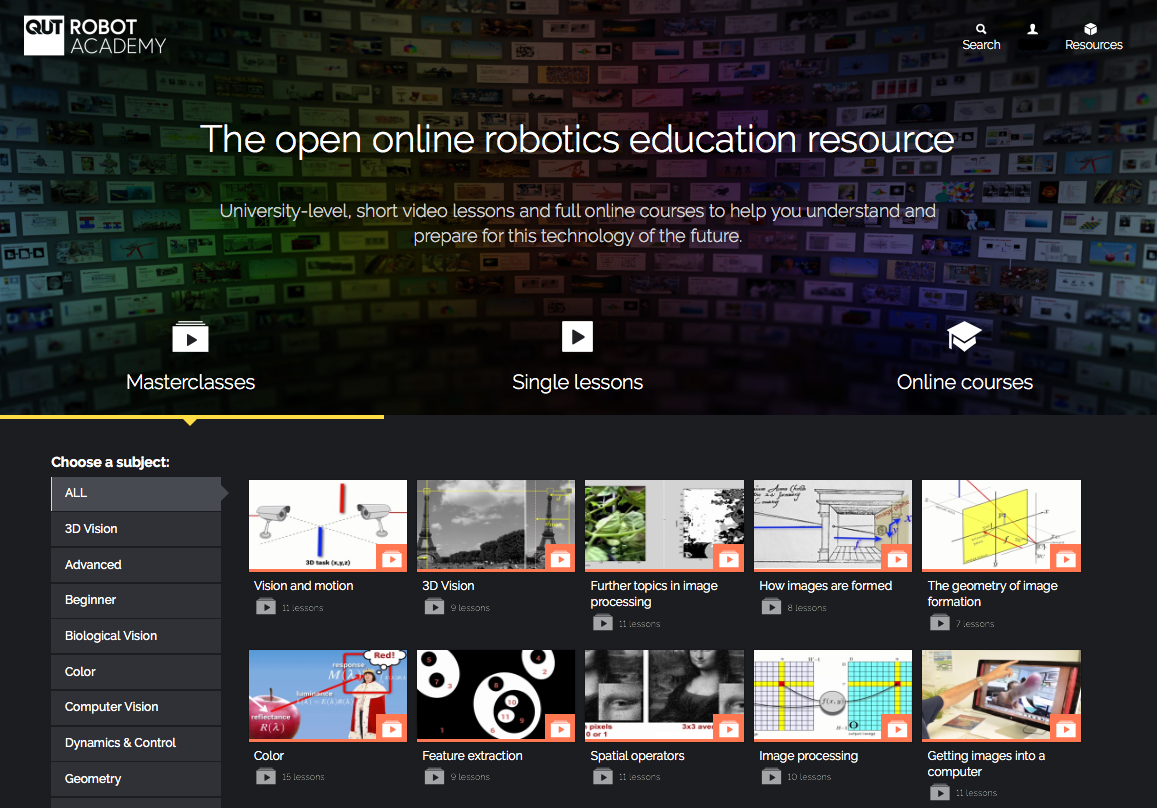

Peter Corke is Professor of Robotics and Control at the Queensland University of Technology leading the ARC Centre of Excellence for Robotic Vision in Australia. Previously he was a Senior Principal Research Scientist at the CSIRO ICT Centre where he founded and led the Autonomous Systems laboratory, the Sensors and Sensor Networks research theme and the Sensors and Sensor Networks Transformational Capability Platform. He is a Fellow of the IEEE. He was the Editor-in-Chief of the IEEE Robotics and Automation magazine; founding editor of the Journal of Field Robotics; member of the editorial board of the International Journal of Robotics Research, and the Springer STAR series. He has over 300 publications in the field and has held visiting positions at the University of Pennsylvania, University of Illinois at Urbana-Champaign, Carnegie-Mellon University Robotics Institute, and Oxford University.
