Team CERBERUS wins the DARPA Subterranean Challenge

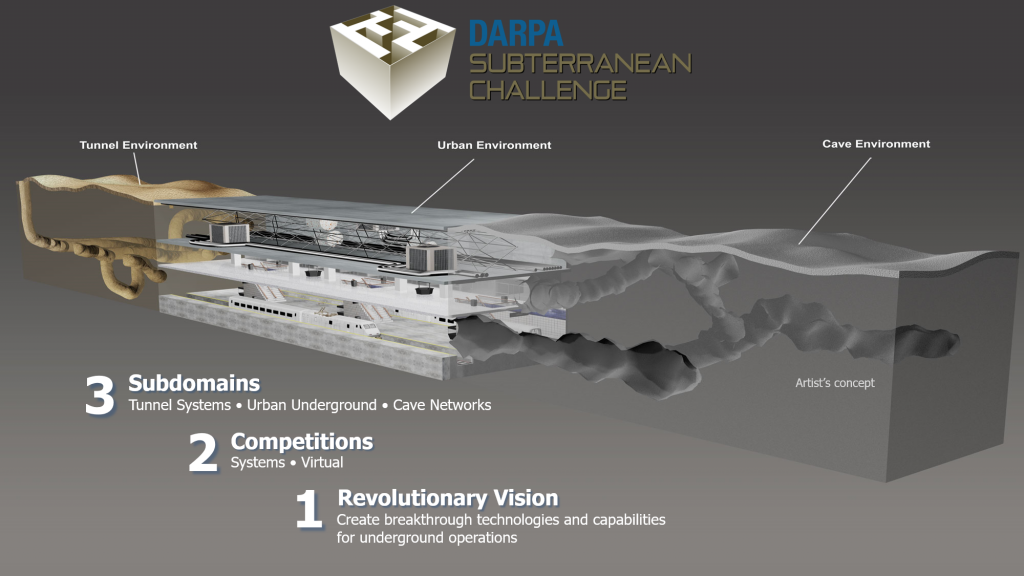

The DARPA Subterranean Challenge aimed to develop novel approaches to rapidly map, explore, and search underground environments in time-sensitive operations critical for the civilian and military domains alike. In the Final Event, DARPA designed an environment with branches representing all three circuits: the “Tunnel Circuit”, the “Urban Circuit”, and the “Cave Circuit”. Robots had to explore, search for objects of interest (“artifacts”), and report their accurate locations within underground tunnels, subway-like infrastructure, and natural caves and paths with extremely confined geometries, tough terrain, and severe visual degradation (including dense smoke).

Team CERBERUS deployed a diverse set of robots, with four ANYmal C legged systems as its primary platforms. In the Prize Round of the Final Event, the team won the competition with 23 points, correctly detecting and localizing 23 of the 40 artifacts DARPA had placed inside the environment. The second-place team, “CSIRO Data61”, also scored 23 points but reported its last artifact to DARPA slightly later, so the tiebreaker went in favor of Team CERBERUS. The third-place team, “MARBLE”, scored 18 points.

The DARPA Subterranean Challenge was one of the rare global robotics competitions that push the frontiers of resilient autonomy, calling on teams to develop novel solutions with the capacity to help critical sectors such as search and rescue, as well as industries such as mining. The level of achievement of Team CERBERUS is best understood by looking at all the competitors in the “Systems Competition” of the Final Event. The participating teams included members from top international institutions, namely:

- CERBERUS (Score = 23): University of Nevada, Reno, ETH Zurich, NTNU, University of California Berkeley, Oxford Robotics Institute, Flyability, Sierra Nevada Corporation

- CSIRO Data61 (Score = 23): CSIRO, Emesent, Georgia Institute of Technology

- MARBLE (Score = 18): University of Colorado Boulder, University of Colorado Denver, Scientific Systems Company, University of California Santa Cruz

- Explorer (Score = 17): Carnegie Mellon University, Oregon State University

- CoSTAR (Score = 13): NASA Jet Propulsion Laboratory, California Institute of Technology, MIT, KAIST, Luleå University of Technology

- CTU-CRAS-NORLAB (Score = 7): Czech Technical University, Université Laval

- Coordinated Robotics (Score = 2): Coordinated Robotics, California State University Channel Islands, Oke Onwuka, Sequoia Middle School

- Robotika (Score = 2): Robotika International, Robotika.cz, Czech University of Life Sciences, Centre for Field Robotics, Cogito Team

We congratulate all members of the team and we are proud of this incredible and historic achievement! Most importantly, we are excited to be part of this amazing community pushing the frontier of resilient robotic autonomy in extreme environments.

Real Roboticist focus series #6: Dennis Hong (Making People Happy)

In this final video of our focus series on Real Roboticist, the original series from IEEE/RSJ IROS 2020 (International Conference on Intelligent Robots and Systems), you’ll meet Dennis Hong speaking about the robots he and his team have created (locomotion and new ways of moving, an autonomous car for the visually impaired, and disaster relief robots), as well as Star Wars and cooking. All in all, these are ingredients from different worlds that Dennis is using to benefit society.

Dennis Hong is a Professor and the Founding Director of RoMeLa (Robotics & Mechanisms Laboratory) of the Mechanical & Aerospace Engineering Department at UCLA. If you’d like to find out more about how Star Wars influenced his professional career in robotics, how his experience taking a cooking assistant robot to MasterChef USA inspired a multi-million research project, and all the robots he is creating, check out his video below!

What can I do here? Learning new skills by imagining visual affordances

How do humans become so skillful? Initially we are not, but from infancy we discover and practice increasingly complex skills through self-supervised play. This play is not random: the child development literature suggests that infants use their prior experience to conduct directed exploration of affordances like movability, suckability, graspability, and digestibility through interaction and sensory feedback. This type of affordance-directed exploration allows infants to learn both what can be done in a given environment and how to do it. Can we instantiate an analogous strategy in a robotic learning system?

On the left we see videos from a prior dataset collected with a robot accomplishing various tasks such as drawer opening and closing, as well as grasping and relocating objects. On the right we have a lid that the robot has never seen before. The robot has been granted a short period of time to practice with the new object, after which it will be given a goal image and tasked with making the scene match this image. How can the robot rapidly learn to manipulate the environment and grasp this lid without any external supervision?