Casinos and robots? It must be CES time! A few years ago, the only robots at CES were toys. And as the robot toy makers at Ologic can attest, having your robot featured as the leading image for CES was still no guarantee that your robot would make it into production (AMP is pictured above). Luckily Ologic have transferred their consumer electronics experience into building robots of every other kind. And CES now showcases robots of every shape and size, from autonomous cars, trucks and construction robotics, to food production, health and rehabilitation robots. The 2022 CES Innovation Awards recognize a range of robotics technologies as Honorees, and feature three in the “Best of Innovation” category as well.

See & Spray

By John Deere

Best of Innovation

Robotics

Honoree

Vehicle Intelligence & Transportation

See & Spray is a large, technologically advanced robot for the agriculture industry that uses computer vision and machine learning to distinguish crops from weeds and spray herbicide precisely on the weeds alone. This groundbreaking plant-level management technology gives the machine the ability to see and reduces herbicide use by up to 80 percent, benefiting the farmer, the surrounding community, and the environment. The approach is unprecedented in both the technology and agriculture industries.

Leica BLK ARC

By Leica Geosystems, part of Hexagon

Best of Innovation

Robotics

The BLK ARC is an autonomous laser scanning module for robotics applications. It provides a safe and autonomous way to capture 3D images and data of areas that are difficult or dangerous for humans to reach. Professionals in architecture, engineering and construction (AEC), manufacturing, plant, public safety, and media and entertainment attach the BLK ARC to a robotic carrier to capture data used to build 3D models and recreate scenes such as man-made or natural disasters, automotive plants, bridges, and movie location sets. The product’s speed, accuracy, flexibility, and modular design provide fully autonomous mobile LiDAR scanning and navigation.

WHILL Model F – Foldable Personal EV

By WHILL, Inc.

Best of Innovation

Accessibility

WHILL Model F is a foldable personal electric vehicle for everyone, including seniors and people who have difficulty walking. Unlike wheelchairs, which are typically built for patients in a medical environment, Model F is designed to fit an active lifestyle for everyday use. It is lightweight and foldable, which makes it easy to load in cars and convenient when traveling by air. The WHILL smartphone app allows you to drive and lock the Model F remotely, as well as check key device information such as total mileage and battery level.

DROWay – Intelligent UATM Ground Control Platform

By CLROBUR Co., Ltd. / HANSEO UNIVERSITY

Honoree

Drones & Unmanned Systems

DROWay is an integrated, expandable multi-mobility platform that goes beyond previous mobility control services. As part of the DROW4D service, it offers:

- Integrated hardware control and real-time monitoring of multiple heterogeneous mobility platforms
- Automatic generation of multiple flight paths and flight simulation in 4D airspace (corridors)
- Indoor/outdoor swarm flight and simulation (DROW World/DROW ART Manager)
- AI-based ground control data management and analysis

DJ25 – Fuel cell powered VTOL (with JOUAV)

By Doosan Mobility Innovation

Honoree

Drones & Unmanned Systems

DJ25 is the world’s first hydrogen fuel cell VTOL (vertical take-off and landing) commercial drone solution. Doosan and JOUAV integrated advanced PEMFC (proton-exchange membrane fuel cell) technology into a VTOL airframe, and the collaboration extends flight time to up to 5.5 hours. DJ25 can cover up to 500 km in a single flight, making it better suited than ordinary battery-powered drones to long-distance inspections and large-scale site surveying and mapping. The hydrogen-powered version also generates power with low noise and no vibration, so it remains stable and efficient when carrying various mission equipment.

Mini GUSS

By GUSS Automation

Honoree

Drones & Unmanned Systems

Mini GUSS is the world’s first and only autonomous vineyard, hops, berry, and high density orchard sprayer.

Drone Charging Station “ON STATION”

By ISON

Honoree

Drones & Unmanned Systems

The high-mast drone charging station consists of a pole, a drone hangar, a control box, and any other accessories users require. The control box raises and lowers the mast automatically, and the drone hangar can be positioned up to 50 feet high. Other accessories, such as an anemometer, CCTV camera, rain sensor, solar panel module, LED lights, or even a weapon (M60), can be mounted on the pole to suit the end user’s circumstances.

Delivery AMR: Mighty-D3

By Piezo Sonic Corporation

Honoree

Drones & Unmanned Systems

The Mighty-D3 transportation AMR is based on the technology of Japanese lunar exploration robots and uses a Piezo Sonic Motor in its steering mechanism. It follows Piezo Sonic’s 3C concept (Cool, Cute, Compact), combining appealing design with functionality. Mighty-D3 can climb over bumps of up to 15 cm, turn on the spot, and move horizontally, allowing it to avoid obstacles and travel autonomously to its destination both outdoors and indoors. It can serve as a transportation support robot in urban areas, hospitals, commercial facilities, and large apartment complexes.

WasteShark

By RanMarine Technology BV

Honoree

Drones & Unmanned Systems

To contribute to the huge challenge of cleaning and monitoring our world’s waters, RanMarine Technology developed the patented WasteShark. It is the world’s first autonomous aquadrone designed for clearing marine plastic waste. At a running cost of 20% of other marine waste removal solutions and with zero emission operation, the intelligent WasteShark is easy to operate and maintain. The WasteShark is our first model and our roadmap includes products based on the WasteShark such as the OilShark and MegaShark. It is accessible to public and private entities that aim to contribute to restoring the marine environment to its natural state.

SPIDER-GO: Warehouse Inventory Automation System

By TACTRACER CO., LTD.

Honoree

Drones & Unmanned Systems

SPIDER-GO is an automated warehouse inventory system that periodically scans the warehouse and updates inventory status in real time. Key features include automated modelling of the warehouse layout, display of inventory on a 3D map, and remote-controlled inspection of inventory status. It also supports CCTV monitoring, fire detection, and remote updates. Conventional warehouse management systems suffer from frequent loading and picking errors, as well as discrepancies between the system and actual inventory caused by human input errors. SPIDER-GO improves visibility and efficiency for proper warehouse inventory management.

Whiz Gambit – 2-in-1 AI-powered cleaning & disinfection solution

By Avalon SteriTech Limited

Honoree

Robotics

Whiz Gambit is a 2-in-1 AI-powered cleaning and disinfection robotic solution jointly developed by Avalon SteriTech and SoftBank Robotics Group. It is the first disinfection robot to earn the Performance Mark from SGS, the world’s leading verification and testing company, with proven efficacy in eliminating >99% of microbial bioburden. Whiz Gambit greatly reduces potential health risks in communal areas through effective, consistent cleaning and disinfection. It has been a trusted partner for hospitality groups, shopping malls, schools, and offices around the world.

The Essentials: The Ultimate Building Blocks for Mobile Robots

By Avular

Honoree

Robotics

Avular proudly presents the world’s first end-to-end solution for mobile robots. The Essentials are modular hardware and software building blocks, specifically designed to (1) rapidly build new mobile robot applications, (2) turn existing machines into autonomous ones, and (3) develop robust and scalable end-products. The Essentials cover all the core functionalities a mobile robot needs, allowing you to focus on your specific application. Our products already enable entrepreneurs and engineers to build robots that make the world a better place in the fields of food, safety, energy, and more.

CygLiDAR_h3 (2D/3D Dual Solid State LiDAR)

By CYGBOT CO., LTD.

Honoree

Robotics

CygLiDAR_h3 is a solid-state LiDAR with no mechanically moving parts that measures in 2D and 3D at the same time. Most robots use a 2D LiDAR to identify their position and a 3D camera to detect obstacles while driving. With CygLiDAR, you get 2D and 3D data together from a single product, allowing robot makers to reduce the number of sensors and build robots that are competitive in design and cost.

Doosan NINA (New Inspiration New Angle)

By Doosan Robotics

Honoree

Robotics

As an outcome of Project NINA (New Inspiration. New Angle.), Doosan created an unprecedented camera robot system that lets anyone film like a professional, unlocking new levels of creativity. To democratize filming robots, Doosan developed software with an intuitive UI that helps content creators film effortlessly, including sharing camera-movement presets on Doosan’s platform. Another key part of the system is that the robot shoots in 360 degrees and tracks subjects, simplifying complex camera moves to allow filming from any angle. It can be controlled by hand and/or joypad, so people with no experience operating robots can handle it without difficulty.

DOTSTAND V1

By DOTHEAL Co.,Ltd.

Honoree

Computer Peripherals & Accessories, Fitness & Sports, Robotics

Using computers for many hours with poor posture results in forward head posture syndrome (FHP), a very common problem that causes neck pain and fatigue. Doctor visits and therapy are both time-consuming and expensive. DOT STAND is a patent-pending smart monitor stand that uses an AI sensor to analyze the user and automatically adjusts any monitor’s position to induce better posture, without the user even noticing. DOT STAND relieves pain, improves concentration and work efficiency, and improves the average cervical curve ARA by up to 8%.

Hey-Bot (AI-based Smart Disinfection & Guide Robot)

By Hills Engineering co.,ltd. / HANSEO UNIVERSITY

Honoree

Robotics

‘Hey-Bot’ is an AI-based self-driving disinfection and guide robot for convention centers, hospitals, negative-pressure wards, and other important but vulnerable places. The robot protects people during the coronavirus pandemic by minimizing the chance of infection. Using its self-driving, guide, and disinfection functions, it effectively kills the virus while sanitizing a given area and limits unnecessary social contact by guiding people to their destinations. ‘Hey-Bot’ is a highly advanced intelligent disinfection and guide robot that can help open a safe and convenient “with coronavirus” era in which people can live and work with reassurance.

RGB-D AI Robot

By Industrial Technology Research Institute (ITRI)

Honoree

Robotics

The RGB-D AI Robot is the world’s first collaborative robot that includes smart 3D vision as a built-in standard for high-precision object recognition. The integration of a 3D camera and MEMS laser scanning projector, along with the auto labeling technology, allows the robot to quickly learn through visually acquired data and perform hand-eye coordination skills. The miniature size of the optical sensor makes it easy to install in robotic arms and can lower installation costs. This innovation can be applied to human-robot collaboration in both manufacturing and service sectors.

Lumotive Meta-Lidar Platform

By Lumotive

Honoree

Robotics, Vehicle Intelligence & Transportation

The Lumotive Meta-Lidar Platform is the mobility industry’s smallest and most cost-effective 3D sensing solution comprising a Lumotive tiny lidar sensor with patented beam steering technology, real-time control software, and a reference system design. Unlike previous generation lidar systems that use mechanical spinning assemblies and are known for being big and expensive, Lumotive’s solution is tiny and scalable for a range of size, performance and power requirements. The Meta-Lidar Platform removes barriers to greater proliferation of 3D sensing in automotive, industrial and consumer applications.

Monarch Tractor, MK-5

By Monarch Tractor

Honoree

Robotics

Monarch Tractor, the world’s first fully electric, driver-optional smart tractor, enhances farmers’ existing operations, alleviating labor shortages and maximizing yields. The award-winning Monarch Tractor combines electrification, automation, machine learning, and data analysis to set a new standard in tractor technology and capabilities.

Ted

By Naio technologies

Honoree

Robotics

Ted offers a sustainable, serviceable and smart winegrowing solution combining cutting-edge robotics and AI technology. Ted is the first robot dedicated to vineyards, offering an alternative to herbicides that respects your soil and improves your working conditions.

Autonomous Box Truck

By Gatik

Honoree

Vehicle Intelligence & Transportation

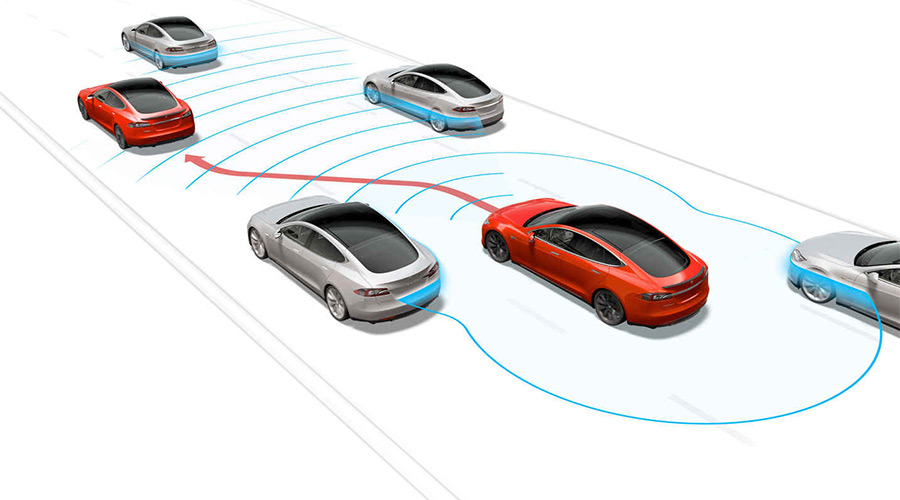

Gatik, the leader in autonomous Middle Mile logistics, delivers goods safely and efficiently using its fleet of light and medium duty trucks. The company focuses on short-haul, B2B logistics for Fortune 500 retailers such as Walmart and Loblaw. Gatik’s Class 3-6 autonomous box trucks are live for customers in multiple markets including Texas, Arkansas, Louisiana and Ontario. In April 2021, Gatik launched its strategic collaboration with Isuzu North America, marking the first time a global OEM is working in the medium duty category with a Middle Mile trucking company. Gatik’s autonomous driving.

XenoLidar-X

By XenomatiX – True-Solid-State-LiDAR

Honoree

Vehicle Intelligence & Transportation

XenoLidar-X is a true-solid-state LiDAR designed for high resolution and accurate analysis of the vehicle’s surroundings. It is a stand-alone, off-the-shelf solution with no moving parts, intended for autonomous and industrial applications. XenoLidar-X comes in two versions: Xact for mid-range, and Xpert for long-range measurements. Both versions include XenoWare, the 3D point cloud software enabling perception solutions in ADAS and up to fully autonomous driving.

4Sight M

By AEye, Inc.

Honoree

Vehicle Intelligence & Transportation

4Sight M is a high-performance, software-configurable adaptive LiDAR sensor designed to meet the diverse performance and functional requirements of autonomous and partially automated applications. Its solid-state reliability, combined with industry-leading LiDAR performance (extended range from 1 cm to 1,000 meters), integrated intelligence, advanced vision capabilities (10-20x more accurate than camera-only systems), and unmatched reliability make it an ideal fit for mobility, trucking, transit, construction, rail, intelligent traffic systems, and aerospace and defense markets.

Eos Embedded Perception Software

By Algolux

Honoree

Vehicle Intelligence & Transportation

Advanced Driver Assistance Systems (ADAS) and Autonomous Vehicle (AV) systems today are not able to support many driving environments, weather conditions, and challenging scenarios. Driving in low light, snow, rain, and even tunnels are just some of the situations where perception systems often fail due to a lack of robustness. Eos is an award-winning embedded perception solution that delivers up to 3x improved accuracy across all conditions, especially in low light and harsh weather. Its efficient end-to-end deep learning architecture can be quickly personalized to any camera lens/sensor configuration or for multi-sensor fusion.

Nova Lidar

By Cepton Technologies

Honoree

Vehicle Intelligence & Transportation

Nova is a miniature, wide-angle, near-range lidar built for modern vehicles at a target volume price below $100, to help increase vehicle safety and enable autonomous driving capabilities. Powered by Cepton’s patented MMT®, Nova can be elegantly embedded all around a vehicle to provide a complete 360° view of its immediate surroundings without disrupting its design aesthetics. Nova is designed to help minimize perception blind spots and reduce accidents and vehicle damage. Nova is a transformational sensor that fundamentally changes the game for near-range sensing applications.

Automated Steering Actuator

By Nexteer Automotive

Honoree

Vehicle Intelligence & Transportation

Nexteer’s Automated Steering Actuator: High Availability, Output & Durability offers high safety coverage through a combination of software, electrical hardware, mechanical, and sealing redundancies. It also delivers the increased durability and reliability required for higher loads, driven by increasing vehicle weights (battery mass), and for the more demanding use cases of Shared Autonomous Vehicles without a manual driver backup. Our Automated Steering Actuator facilitates broader adoption of Shared Autonomous Vehicles (SAVs), such as people movers, by enabling higher loads and higher speeds than current last-mile, geofenced, and neighborhood vehicles on the market.

1-box Brake System for Highly Autonomous Driving

By Mando Corporation

Honoree

Vehicle Intelligence & Transportation

Mando’s Integrated Dynamic Brake (IDB) is a 1-box electro-hydraulic brake system that replaces multiple components of a traditional brake system and integrates them into one unit. This reduces vehicle mass and simplifies assembly by requiring fewer electrical and hydraulic components. ‘IDB for Highly Autonomous Driving’ is a world-first product that secures braking functional redundancy within the same 1-box system, eliminating the need for additional components. In the unlikely event of a failure, it provides fully redundant brake functionality and performance.

Hammerhead

By NODAR

Honoree

Vehicle Intelligence & Transportation

NODAR’s Hammerhead camera-based 3D vision software technology is a crucial component in the development of ADAS and autonomous vehicles, bringing safety, advanced performance, and cost-effectiveness to the automotive market. Hammerhead delivers reliable, ultra-precise, real-time 3D sensing at long range (up to 1000 meters), providing better-than-LiDAR quality at the price point of camera technology. Between 2025 and 2030, a projected 250 million vehicles will require L2-and-above autonomy to understand their environment and provide the high level of safety required as autonomous systems control more of the driving function.

TriEye SEDAR

By TriEye

Honoree

Vehicle Intelligence & Transportation

TriEye’s SEDAR (Spectrum Enhanced Detection And Ranging) is the ultimate imaging and ranging solution for automated driving applications. Operating uniquely in the Short-Wave Infrared (SWIR) spectrum, SEDAR enables imaging and 3D mapping of the road in all visibility conditions using a single sensing modality. SEDAR is based on two world-first innovations: TriEye’s Raven (an HD CMOS-based SWIR sensor) and TriEye’s UltraBlaze (an eye-safe SWIR pulsed laser illumination source). TriEye’s first-of-its-kind technology is suited for mass production, offering a 10X cost reduction compared to current LiDAR solutions on the market.

Phoenix Perception Radar

By Arbe

Honoree

Vehicle Intelligence & Transportation

Arbe’s Perception Radar, Phoenix, revolutionizes autonomous vehicle sensing, providing unmatched safety to the market. Its ultra-high resolution turns radar into a sensor that supports advanced perception capabilities at a mass-market price, with top performance in all environments, weather, and lighting conditions. It is the first radar to detect stationary objects (a notorious stumbling block for autonomous radars), meeting NHTSA and NCAP requirements and addressing the factors behind ADAS- and Autopilot-related accidents. With advanced free-space mapping and object tracking in all corner cases, Phoenix closes the sensor gap to achieve truly safe autonomous driving and Vision Zero.

‘Real-to-real’ – Unique ADAS Technology

By Cognata LTD – Ella Shlosberg

Honoree

Vehicle Intelligence & Transportation

Cognata is proud to launch its ‘real-to-real’ technology, which accelerates ADAS validation programs worldwide to meet regulatory requirements. The proprietary AI technology developed with Cognata transforms super-high-resolution data sets into sensor inputs “as seen” by new sensors in new vehicle applications. This means previously collected video data can be reused to test a camera in a new position or with a new optical path, by recording multiscopic images and applying AI SLAM-based image transformation.

Robot Express for Smart Transportation and Logistics

By Mindtronic AI

Honoree

Smart Cities

The Robot Express is a smart logistics service that leverages the public transportation network to deliver goods. Senders and receivers can use bus stops as express stations, and the density and frequency of shuttle buses improve both service coverage and delivery time. The service also saves energy, because the shuttle transports passengers and goods at the same time.

Maicat

By Macroact Inc.

Honoree

Smart Home

The social robot Maicat brings AI to robotics, combining the appealing traits of a cute, engaging companion pet with the intelligence of AI to create empathetic and personalized experiences for the user. Autonomous control and AI technology enable the robot to understand and adapt to its environment, while its mobility and its passive and active sensors let it navigate and operate in the home. Third-party applications allow easy integration with other smart home technology, making Maicat the center of the smart home. Being with Maicat adds another dimension to your life!

Mind-linked Bathbot

By Amorepacific

Honoree

Health & Wellness

Mind-linked Bathbot is the first beauty solution that produces bath bombs with customized fragrances and colors instantly, based on an individual’s electroencephalogram (EEG) data. The EEG signals are measured in real-time using a wearable headset and combined with a technology that has been developed to enable the quantitative evaluation of emotional indicators. These emotional indicators enable the identification of the fragrance and color that will provide a unique experience to the user. This user-optimized information is then transmitted to the Bathbot (bath bomb-making robot). This is the first technological invention to produce a customized bath bomb within a minute.

AVATAR 3D system (AVT-2020-700)

By AVATAR

Honoree

Health & Wellness

A next-generation system for quantifying animal behavior experiments that can replace existing preclinical scoring based on human judgment. Combining multi-vision hardware with AI software, the system quantifies all behaviors of mice, the representative animal in preclinical testing, in real time without markers, and simulates them in a 3D virtual space.

rebless

By H ROBOTICS INC.

Honoree

Health & Wellness

rebless is an FDA-registered robotic exercise therapy device for both upper and lower limbs, providing motion to the elbow, wrist, ankle, and knee joints. With multiple operating modes, rebless allows for passive, active, active-assisted, and active-resisted exercise and range-of-motion measurement, so therapy can be customized to each individual patient’s condition and progress. Continuous Passive Motion (CPM) and Assist-as-Needed technology allow patients to change exercise modes depending on their rehabilitation abilities.

OMO Smart Trash Can

By GD International Inc.

Honoree

Home Appliances

The OmO Smart Can is a first-of-its-kind self-sealing, touchless trash can. A built-in motion sensor and compatibility with voice assistants such as Alexa and Google Assistant give you a hands-free approach to tidying up. When it’s time to take out the trash, an internal thermal sealing mechanism seals the trash in and opens the inner bin so the bag is ready to throw away. A replacement bag deploys automatically once the bin closes, ready for the next use.

Narwal World’s First Self-Cleaning Robot Mop and Vacuum

By Narwal Robotics Corporation

Honoree

Home Appliances

Narwal J2 is a robotic cleaner with an automatic mop-cleaning base station. Users can choose between vacuum and mop modules to meet their cleaning needs. The spinning mops press against the floor with 10 N of pressure, which greatly enhances cleaning. It uses LiDAR and SLAM technologies to navigate and map the environment intelligently. When its algorithm detects that the mopping pads are dirty, it automatically returns to the base station to wash them and then resumes work where it stopped. Users never need to handle dirty mops, so their hands avoid contact with allergens and dirt.

Nailbot

By Preemadonna Inc.

Honoree

Home Appliances

Nailbot is a connected, at-home manicure device that instantly prints any photo, emoji, image, or self-created design onto nails. The product also comes with a built-in community of Gen Z creators via a mobile app art marketplace. Preemadonna is the maker of Nailbot.

The full list of CES Innovation Award Honorees is here.
