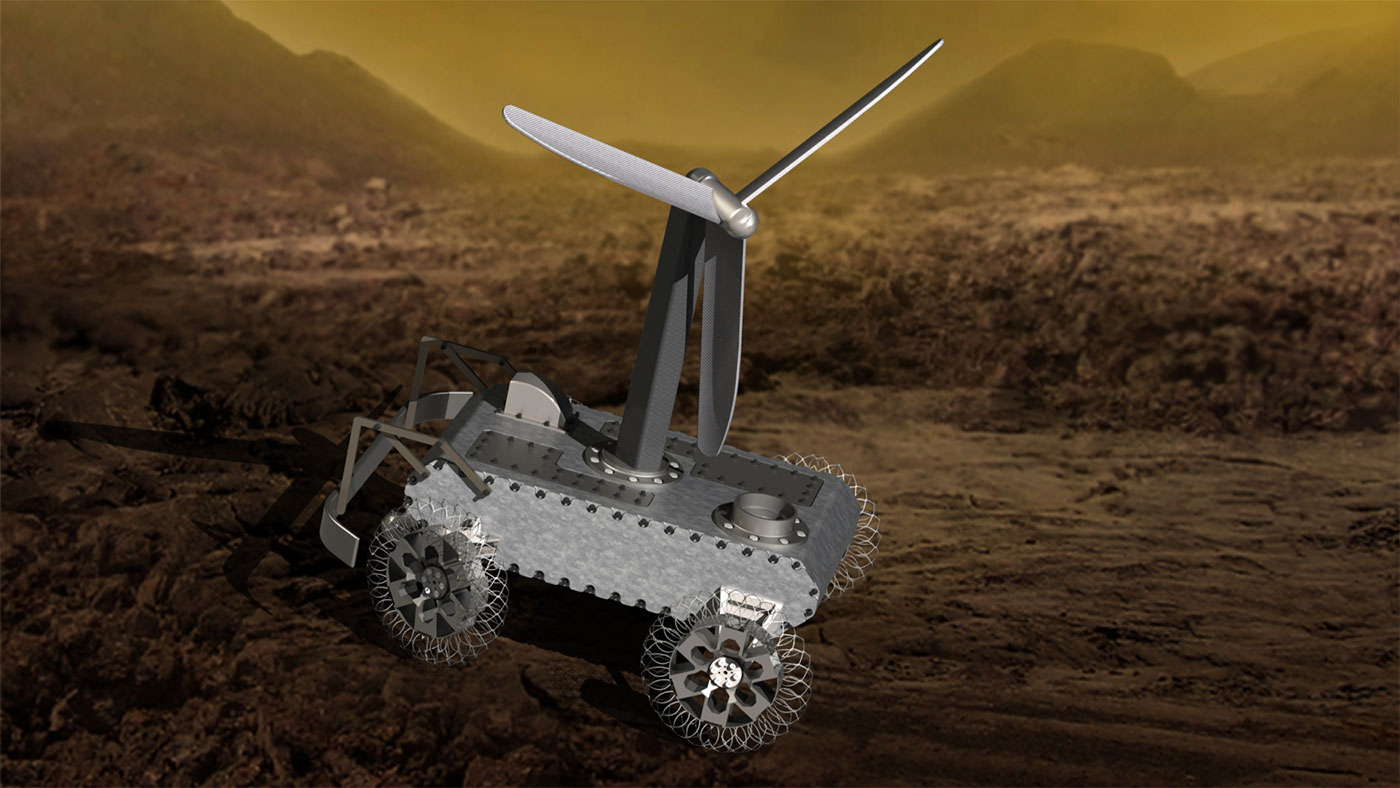

#322: Exploring Venus with a Clockwork Rover, with Jonathan Sauder

Credits: NASA/JPL-Caltech

In this episode, Lilly interviews Jonathan Sauder, the Principal Investigator of a NASA Innovative Advanced Concepts project to design a rover for the surface of Venus. Sauder explains why exploring Venus is important and why previous surface missions have only lasted a few hours. They discuss his innovative wheeled-robot concept, a hybrid automaton rover which would be mostly mechanical and powered by wind.

Jonathan Sauder

Jonathan Sauder is a NASA Innovative Advanced Concepts (NIAC) Fellow and Senior Mechatronics Engineer in the Technology Infusion Group at NASA’s Jet Propulsion Laboratory, focusing on innovative concepts. He also lectures on “Design Theory and Methodology” and “Advanced Mechanical Design” at the University of Southern California, where he received his PhD in Mechanical Engineering.

A raptor-inspired drone with morphing wing and tail

By Nicola Nosengo

NCCR Robotics researchers at EPFL have developed a drone with a feathered wing and tail that give it unprecedented flight agility.

The northern goshawk is a fast, powerful raptor that flies effortlessly through forests. This bird inspired the design of a next-generation drone developed by scientists in EPFL’s Laboratory of Intelligent Systems, led by Dario Floreano. They carefully studied the shape of the bird’s wings and tail and its flight behavior, and used that information to develop a drone with similar characteristics.

“Goshawks move their wings and tails in tandem to carry out the desired motion, whether it is rapid changes of direction when hunting in forests, fast flight when chasing prey in the open terrain, or when efficiently gliding to save energy,” says Enrico Ajanic, the study’s first author and a PhD student in Floreano’s lab. Floreano adds: “Our design extracts principles of avian agile flight to create a drone that can approximate the flight performance of raptors, but also tests the biological hypothesis that a morphing tail plays an important role in achieving faster turns, decelerations, and even slow flight.”

A drone that moves its wings and tail

The engineers had already designed a bird-inspired drone with a morphing wing back in 2016. Their new model goes a step further: thanks to its artificial feathers, it can adjust the shape of both its wing and its tail. “It was fairly complicated to design and build these mechanisms, but we were able to improve the wing so that it behaves more like that of a goshawk,” says Ajanic. “Now that the drone includes a feathered tail that morphs in synergy with the wing, it delivers unparalleled agility.” The drone changes the shape of its wing and tail to change direction faster, fly slower without falling to the ground, and reduce air resistance when flying fast. It uses a propeller for forward thrust instead of flapping wings, because a propeller is more efficient and makes the new wing and tail system applicable to other winged drones and airplanes.

The advantage of winged drones over quadrotors is that they can fly longer for the same weight. However, quadrotors tend to be more dexterous, as they can hover in place and make sharp turns. “The drone we just developed is somewhere in the middle. It can fly for a long time yet is almost as agile as quadrotors,” says Floreano. This combination of features is especially useful for flying through forests or between buildings in cities, as may be necessary during rescue operations. The project is part of the Rescue Robotics Grand Challenge of NCCR Robotics.

Opportunities for artificial intelligence

Flying this new type of drone isn’t easy because of the large number of possible wing and tail configurations. To take full advantage of the drone’s flight capabilities, Floreano’s team plans to incorporate artificial intelligence into its flight system so that it can fly semi-automatically. The team’s research has been published in Science Robotics.