RoboticsTomorrow – Fall Product Special – Part 2

The Future of Manufacturing: What to Expect

Don’t be data-driven in AI

The term “data-driven” is usually used and understood with positive connotations, but when I hear it I get a little anxious about the “data-driven” decisions that might be about to happen. Let me explain why.

According to Wikipedia, “the adjective data-driven means that progress in an activity is compelled by data, rather than by intuition or by personal experience.” In other words: look at the data as the primary source of information to act on. When the data gives you a reason to act, you act. At a glance this might seem like a very sound way to work, especially in the AI domain, which in so many ways relies on data. But in fact being data-driven can be very problematic when working with AI. I actually think people who say they are data-driven are, in general, on the wrong track. This does not mean that I’m against putting a lot of effort into understanding your data. I’m actually a big believer that collecting, understanding and preparing data should be the activities with the most resources allocated to them in AI projects. So I’m pro good data science but against being driven by data, and I see those as two very different things.

But then why is it so problematic to be data-driven?

My primary argument is that the driver behind decisions and activities should not be the data you have, but rather curiosity about the problem and the world around it. In a sense, that means being driven by the data you don't have. The final goal of an AI project is often to solve a problem or improve a process, and the solution does not always exist in the data you have, or in the data generated by the solutions that exist today. So instead you should be curiosity-driven, or at least problem-driven. This means that you should not approach a problem by looking at your data and drawing a conclusion. You should look at your data, look for the blind spots, and be curious from there. What is it that you don’t know? I’ll get back to curiosity later. First, I have some more arguments against being data-driven.

You will very rarely have all the data relevant to a problem, even after exhausting every potential data source. So when you draw conclusions from the data you have, they will always be at least a bit off. This doesn’t mean that the data or the conclusions are not useful, but you will always be at least a bit wrong. As the statistician George Box put it: “All models are wrong, but some are useful.”

Another problem with being data-driven is the narrative that decisions based on data are better than decisions based on gut feeling. While that might be true sometimes, data is not one-size-fits-all: it can be very helpful at times and very misleading at others.

An example is Ronald Fisher, the father of modern statistics, who in hindsight was also a little too data-driven. He stubbornly held to his conclusion that the data showed lung cancer was not caused by smoking. The correlation, he said, must run the other way around: people with lung cancer, or at higher risk of lung cancer, were simply more likely to be smokers. He argued that the link was either genetic or that cancer patients smoked to soothe pain in their lungs. So even the best statisticians can be told stories by data that are far from the truth.

The last problem with data is its ability to tell you the story you want it to tell, whether consciously or unconsciously. As the economist Ronald Coase put it, “If you torture the data long enough, it will confess to anything,” so there is no certainty that the conclusion you draw from data is correct. The interpretation can be very biased, and sometimes we torture the data without even being aware of it ourselves.
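One common way data gets tortured without anyone noticing is testing many hypotheses and reporting only the ones that look significant. The sketch below is our own illustration, not from the original post: it generates pure noise, correlates 2,000 random features with a random outcome, and counts how many pass a rough 5% significance cutoff. The cutoff |r| > 1.96/√n is a large-sample approximation we assume for simplicity. Every "finding" is spurious by construction, yet we would expect roughly 5% of the features to pass.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def torture_the_data(num_features=2000, n=100, seed=0):
    """Correlate many pure-noise features with a pure-noise outcome.

    Returns the (feature index, r) pairs whose |r| exceeds an approximate
    two-sided 5% cutoff. Nothing is related to anything, so every hit is
    a false positive.
    """
    rng = random.Random(seed)
    outcome = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # Large-sample approximation: with no true correlation, r is roughly N(0, 1/n).
    cutoff = 1.96 / math.sqrt(n)
    hits = []
    for j in range(num_features):
        feature = [rng.gauss(0.0, 1.0) for _ in range(n)]
        r = pearson_r(feature, outcome)
        if abs(r) > cutoff:
            hits.append((j, r))
    return hits


if __name__ == "__main__":
    hits = torture_the_data()
    print(f"{len(hits)} of 2000 noise features look 'significant'")
```

Reporting only the hits, without mentioning the 2,000 attempts, is exactly the kind of confession Coase warned about.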

About curiosity

As promised, I’m getting back to curiosity. If I had to choose one keyword for succeeding with AI, it would be curiosity. AI projects usually start with a process to optimize or a problem to solve, and before training a model on data you have to be curious about the problem. That way the data comes after the problem, and as a result it will be more relevant and more specific to the problem.

Curiosity to me means exploring with as few preconceptions as possible. The best example I know is children lifting up rocks just to see what is underneath. If you have ever watched a child do that, you will have seen that there are no expectations, only excitement, both before and after the rock has been lifted. And that is exactly what curiosity does to the practitioner. It leads to excitement, which in turn leads to passion. Passion makes everything much easier, and even the tedious parts of a project will feel effortless.

AI is also explorative by nature, and that’s why curiosity suits it so well. If there are specific expectations in an explorative process, disappointment is almost a given.

As a result, you must let curiosity be the primary driver behind your decisions and activities. Being data-driven is reactive by nature, and if you want to be innovative in solving problems you must be proactive. Being proactive requires you to be curious about your blind spots and to be driven by the unknown.

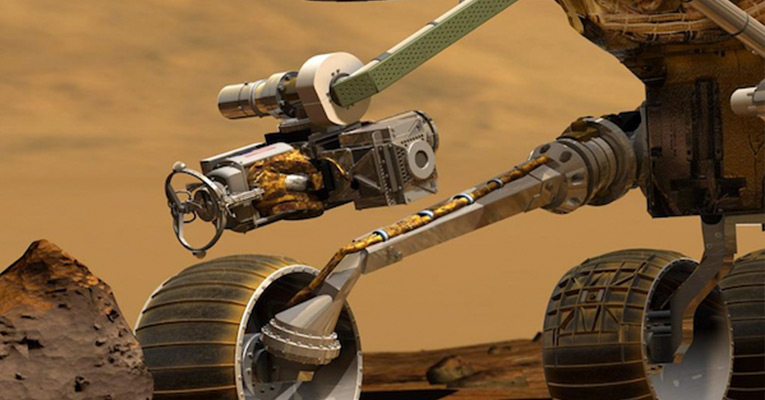

#320: Planetary Drilling, with Kris Zacny

In this episode, Lilly Clark interviews Kris Zacny, the Vice President of Exploration Technologies at Honeybee Robotics. They discuss two areas of development at Honeybee: technology for finding extinct extraterrestrial life and technology for in-situ resource utilization. Zacny speaks about the design and operation of current flight projects, and why drilling technology is key to these missions.

Kris Zacny

Dr. Kris Zacny is VP and Senior Research Scientist at Honeybee Robotics. His interests include space mining, sample handling, soil and rock mechanics, extraterrestrial drilling, and In Situ Resource Utilization (ISRU). He co-founded the Exploration Technology Division (EX), based in Altadena, CA. EX, with over 100 employees, focuses on developing space mining robots.

In his previous capacity as an engineer in South African underground gold, diamond, and coal mines, Dr. Zacny managed numerous mining projects and production divisions. This hands-on experience with drilling and mining proved invaluable in developing such technologies for space. Dr. Zacny received his PhD (UC Berkeley, 2005) in Geotechnical Engineering with an emphasis on Extraterrestrial Drilling and Mining, his ME (UC Berkeley, 2001) in Petroleum Engineering with an emphasis on Drilling and Materials Science, and his BSc cum laude (U. Cape Town, 1997) in Mechanical Engineering. He has participated in several Antarctic, Arctic, Atacama, and Greenland expeditions. Dr. Zacny has approximately 200 publications, has been a PI/Co-I on approximately 50 projects, and has over 40 NASA New Technology Records and four NASA Group Achievement Awards.

Links

This ‘squidbot’ jets around and takes pics of coral and fish

Underwater robots to autonomously dock mid-mission to recharge and transfer data

IP65, IP67, IP68…What Do Protection Ratings Mean?

Plan2Explore: Active model-building for self-supervised visual reinforcement learning

By Oleh Rybkin, Danijar Hafner and Deepak Pathak

To operate successfully in unstructured open-world environments, autonomous intelligent agents need to solve many different tasks and learn new tasks quickly. Reinforcement learning has enabled artificial agents to solve complex tasks both in simulation and in the real world. However, it requires collecting large amounts of experience in the environment for each individual task.

Self-supervised reinforcement learning has emerged as an alternative, where the agent only follows an intrinsic objective that is independent of any individual task, analogously to unsupervised representation learning. After acquiring general and reusable knowledge about the environment through self-supervision, the agent can adapt to specific downstream tasks more efficiently.

In this post, we explain our recent publication that develops Plan2Explore. While many recent papers on self-supervised reinforcement learning have focused on model-free agents, our agent learns an internal world model that predicts the future outcomes of potential actions. The world model captures general knowledge, allowing Plan2Explore to quickly solve new tasks through planning in its own imagination. The world model further enables the agent to explore what it expects to be novel, rather than repeating what it found novel in the past. Plan2Explore obtains state-of-the-art zero-shot and few-shot performance on continuous control benchmarks with high-dimensional input images. To make it easy to experiment with our agent, we are open-sourcing the complete source code.

How does Plan2Explore work?

At a high level, Plan2Explore works by training a world model, exploring to maximize the information gain for the world model, and using the world model at test time to solve new tasks (see figure above). Thanks to effective exploration, the learned world model is general and captures information that can be used to solve multiple new tasks with no or few additional environment interactions. We discuss each part of the Plan2Explore algorithm individually below. We assume a basic understanding of reinforcement learning in this post and otherwise recommend these materials as an introduction.

Learning the world model

Plan2Explore learns a world model that predicts future outcomes given past observations $o_{1:t}$ and actions $a_{1:t}$ (see figure below). To handle high-dimensional image observations, we encode them into lower-dimensional features $h$ and use an RSSM model that predicts forward in a compact latent state-space $s$, from which the observations can be decoded. The latent state aggregates information from past observations that is helpful for future prediction, and is learned end-to-end using a variational objective.
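For readers who want the variational objective spelled out, here is a sketch of the standard evidence lower bound for this kind of latent state-space model, written in the post's notation. We assume an encoder $h_t = e_\theta(o_t)$, a posterior $q_\theta(s_t \mid s_{t-1}, a_{t-1}, h_t)$ that sees the current features, a prior $p_\theta(s_t \mid s_{t-1}, a_{t-1})$ that does not, and a decoder $p_\theta(o_t \mid s_t)$. The symbols $e_\theta$, $q_\theta$, $p_\theta$ and $\theta$ are our own labels, and the paper's exact terms and weighting may differ (for example, a reward-prediction term can be added once task rewards are available).

$$
\ln p_\theta(o_{1:T} \mid a_{1:T}) \;\ge\; \sum_{t=1}^{T} \mathbb{E}_{q_\theta}\Big[ \ln p_\theta(o_t \mid s_t) \;-\; \mathrm{KL}\big( q_\theta(s_t \mid s_{t-1}, a_{t-1}, h_t) \,\big\|\, p_\theta(s_t \mid s_{t-1}, a_{t-1}) \big) \Big]
$$

The first term rewards latent states that can reconstruct the observations. The KL term keeps the prior, which is all the model has when it imagines the future without observations, close to the posterior.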

A novelty metric for active model-building

To learn an accurate and general world model we need an exploration strategy that collects new and informative data. To achieve this, Plan2Explore uses a novelty metric derived from the model itself. The novelty metric measures the expected information gained about the environment upon observing the new data. As the figure below shows, this is approximated by the disagreement of an ensemble of $K$ latent models. Intuitively, large latent disagreement reflects high model uncertainty, and obtaining the data point would reduce this uncertainty. By maximizing latent disagreement, Plan2Explore selects actions that lead to the largest information gain, therefore improving the model as quickly as possible.
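The disagreement signal itself is simple to compute. The following is a minimal pure-Python sketch under our own assumptions, not the released Plan2Explore code: each of the $K$ ensemble members is a hypothetical random linear head that maps a concatenated (latent state, action) vector to a predicted next feature vector, and novelty is the variance of the $K$ predictions, averaged over feature dimensions. We use the population variance; dividing by $K-1$ instead would only rescale the reward.

```python
import random
from statistics import fmean, pvariance


def make_linear_head(in_dim, out_dim, rng):
    """Hypothetical ensemble member: a random linear map (out_dim x in_dim)."""
    return [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]


def predict(head, x):
    """Apply one linear head to an input vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in head]


def latent_disagreement(ensemble, state, action):
    """Variance of the ensemble's predictions, averaged over output dimensions.

    High values mean the members disagree, i.e. the model is uncertain about
    what happens after taking `action` in `state`.
    """
    x = list(state) + list(action)
    preds = [predict(head, x) for head in ensemble]  # K vectors of length out_dim
    per_dim_variance = [pvariance([p[d] for p in preds]) for d in range(len(preds[0]))]
    return fmean(per_dim_variance)


if __name__ == "__main__":
    rng = random.Random(0)
    # K = 5 members; input = 2-D latent state + 2-D action, output = 3-D features.
    ensemble = [make_linear_head(4, 3, rng) for _ in range(5)]
    print(latent_disagreement(ensemble, state=[0.1, -0.3], action=[0.5, 0.2]))
```

In this sketch the heads are random and never trained. In a real agent, each member is fit to the collected transitions, so where data exists the members converge and the reward fades, leaving high disagreement only where the model is still uncertain.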

Planning for future novelty

To effectively maximize novelty, we need to know which parts of the environment are still unexplored. Most prior work on self-supervised exploration used model-free methods that reinforce past behavior that resulted in novel experience. This makes these methods slow to explore: since they can only repeat exploration behavior that was successful in the past, they are unlikely to stumble onto something novel. In contrast, Plan2Explore plans for expected novelty by measuring model uncertainty of imagined future outcomes. By seeking trajectories that have the highest uncertainty, Plan2Explore explores exactly the parts of the environments that were previously unknown.
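To make planning for expected novelty concrete, here is a minimal sketch under simplifying assumptions of our own. The hypothetical world model is an ensemble of 1-D linear dynamics models $s' = \alpha_k s + \beta_k a$; the ensemble mean rolls the state forward in imagination, and each imagined step is scored by the ensemble's disagreement. We use random shooting (sample candidate action sequences, keep the best) only because it is the simplest planner. Plan2Explore itself optimizes a Dreamer policy rather than searching over action sequences. Note that the environment is never queried while planning.

```python
import random
from statistics import fmean, pvariance


def imagined_novelty(ensemble, state, actions):
    """Sum of ensemble disagreement along an imagined rollout.

    `ensemble` is a list of (alpha, beta) pairs, each a hypothetical 1-D
    dynamics model s' = alpha * s + beta * a. The rollout follows the
    ensemble mean; the environment is never queried.
    """
    total = 0.0
    s = state
    for a in actions:
        preds = [alpha * s + beta * a for alpha, beta in ensemble]
        total += pvariance(preds)  # disagreement at this imagined step
        s = fmean(preds)  # continue from the mean prediction
    return total


def plan_for_novelty(ensemble, state, horizon=5, num_candidates=200, seed=0):
    """Random-shooting planner: return the action sequence with highest imagined novelty."""
    rng = random.Random(seed)
    best_actions, best_score = None, float("-inf")
    for _ in range(num_candidates):
        actions = [rng.uniform(-1.0, 1.0) for _ in range(horizon)]
        score = imagined_novelty(ensemble, state, actions)
        if score > best_score:
            best_actions, best_score = actions, score
    return best_actions, best_score


if __name__ == "__main__":
    # Members agree on how the state evolves (alpha) but disagree on the
    # effect of actions (beta), so large actions lead to unfamiliar outcomes.
    ensemble = [(0.9, 0.5), (0.9, 1.0), (0.9, 1.5)]
    actions, score = plan_for_novelty(ensemble, state=0.0)
    print([round(a, 2) for a in actions], round(score, 3))
```

With this toy ensemble the per-step disagreement grows with the squared action, so the planner favors large actions: it seeks out the part of the dynamics the model has not pinned down yet, rather than replaying behavior that happened to be novel in the past.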

To choose actions $a$ that optimize the exploration objective, Plan2Explore leverages the learned world model as shown in the figure below. The actions are selected to maximize the expected novelty of the entire future sequence $s_{t:T}$, using imaginary rollouts of the world model to estimate the novelty. To solve this optimization problem, we use the Dreamer agent, which learns a policy $\pi_\phi$ using a value function and analytic gradients through the model. The policy is learned completely inside the imagination of the world model. During exploration, this imagination training ensures that our exploration policy is always up-to-date with the current world model and collects data that are still novel.
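One detail not spelled out in this post: in the Dreamer paper, the value function is trained on λ-returns computed along imagined trajectories, which blend short- and long-horizon bootstrapped returns. Here is a minimal sketch of that backward recursion, $R_t = r_t + \gamma\big((1-\lambda)\,v(s_{t+1}) + \lambda R_{t+1}\big)$ with $R_H = v(s_H)$. The function name and the defaults $\gamma = 0.99$, $\lambda = 0.95$ are illustrative assumptions, and any predicted termination (continuation) probabilities are omitted.

```python
def lambda_returns(rewards, values, bootstrap, discount=0.99, lam=0.95):
    """λ-return targets for an imagined trajectory of length H.

    rewards[t]: predicted reward for step t (t = 0..H-1)
    values[t]:  value estimate v(s_t) for t = 0..H-1; only values[1:] enter
                the targets (values[0] is the prediction being trained)
    bootstrap:  value estimate v(s_H) of the state after the last step

    Recursion: R_t = r_t + discount * ((1 - lam) * v(s_{t+1}) + lam * R_{t+1}),
    starting from R_H = bootstrap.
    """
    if len(rewards) != len(values):
        raise ValueError("rewards and values must have the same length")
    next_values = list(values[1:]) + [bootstrap]
    returns = [0.0] * len(rewards)
    running = bootstrap
    for t in reversed(range(len(rewards))):
        running = rewards[t] + discount * ((1 - lam) * next_values[t] + lam * running)
        returns[t] = running
    return returns


if __name__ == "__main__":
    # With lam = 1 and discount = 1 the targets are plain sums of future rewards.
    print(lambda_returns([1.0, 1.0, 1.0], [0.0, 0.0, 0.0], bootstrap=0.0, discount=1.0, lam=1.0))
    # prints [3.0, 2.0, 1.0]
```

Setting `lam=0` recovers one-step temporal-difference targets, while `lam=1` gives discounted sums of imagined rewards plus the discounted bootstrap value; intermediate values trade off bias from the value estimate against variance from long imagined rollouts.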

Curiosity-driven exploration behavior

We evaluate Plan2Explore on 20 continuous control tasks from the DeepMind Control Suite. The agent only has access to image observations and no proprioceptive information. Instead of random exploration, which fails to take the agent far from the initial position, Plan2Explore leads to diverse movement strategies like jumping, running, and flipping. Later, we will see that these are effective practice episodes that enable the agent to quickly learn to solve various continuous control tasks.

Solving tasks with the world model

Once an accurate and general world model is learned, we test Plan2Explore on previously unseen tasks. Given a task specified with a reward function, we use the model to optimize a policy for that task. Similar to our exploration procedure, we optimize a new value function and a new policy head for the downstream task. This optimization uses only predictions imagined by the model, enabling Plan2Explore to solve new downstream tasks in a zero-shot manner without any additional interaction with the world.

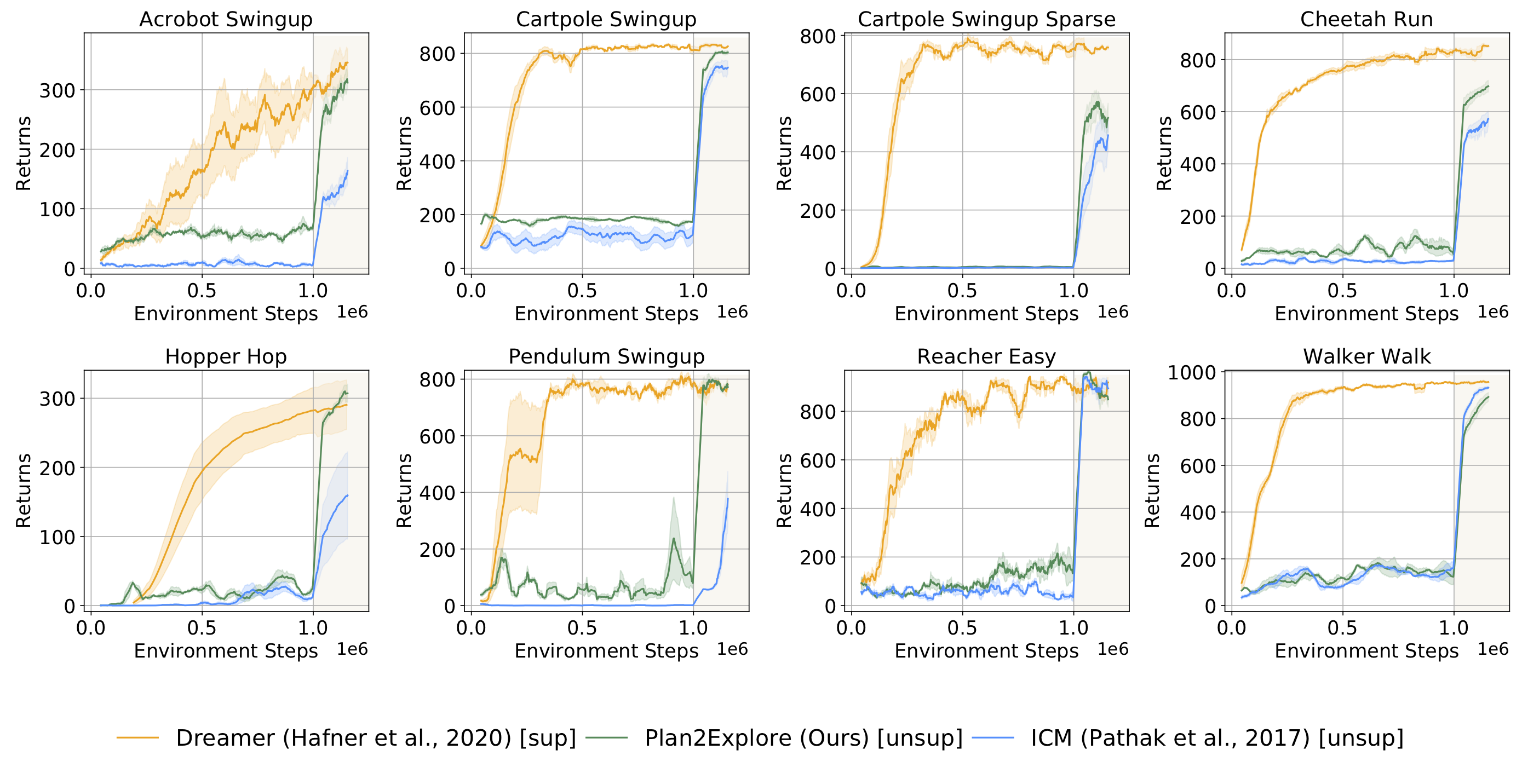

The following plot shows the performance of Plan2Explore on tasks from DM Control Suite. Before 1 million environment steps, the agent doesn’t know the task and simply explores. The agent solves the task as soon as it is provided at 1 million steps, and keeps improving fast in a few-shot regime after that.

Plan2Explore is able to solve most of the tasks we benchmarked. Since prior work on self-supervised reinforcement learning either used model-free agents that are not able to adapt in a zero-shot manner (such as ICM) or did not use image observations, we compare by adapting this prior work to our model-based Plan2Explore setup. Our latent disagreement objective outperforms the other previously proposed objectives. More interestingly, the final performance of Plan2Explore is comparable to the state-of-the-art oracle agent that requires task rewards throughout training. In our paper, we further report the performance of Plan2Explore in the zero-shot setting, where the agent needs to solve the task before any task-oriented practice.

Future directions

Plan2Explore demonstrates that effective behavior can be learned through self-supervised exploration only. This opens multiple avenues for future research:

- First, to apply self-supervised RL to a variety of settings, future work will investigate different ways of specifying the task and deriving behavior from the world model. For example, the task could be specified with a demonstration, description of the desired goal state, or communicated to the agent in natural language.

- Second, while Plan2Explore is completely self-supervised, in many cases a weak supervision signal is available, such as in hard exploration games, human-in-the-loop learning, or real life. In such a semi-supervised setting, it is interesting to investigate how weak supervision can be used to steer exploration towards the relevant parts of the environment.

- Finally, Plan2Explore has the potential to improve the data efficiency of real-world robotic systems, where exploration is costly and time-consuming, and the final task is often unknown in advance.

By designing a scalable way of planning to explore in unstructured environments with visual observations, Plan2Explore provides an important step toward self-supervised intelligent machines.

We would like to thank Georgios Georgakis for the useful feedback.

This post is based on the following paper:

- Planning to Explore via Self-Supervised World Models

Ramanan Sekar*, Oleh Rybkin*, Kostas Daniilidis, Pieter Abbeel, Danijar Hafner, Deepak Pathak

Thirty-seventh International Conference on Machine Learning (ICML), 2020.

arXiv, Project Website

This article was initially published on the BAIR blog, and appears here with the authors’ permission.

AutoML solutions overview

Introduction

I have been looking for a list of AutoML solutions and a way to compare them, but I haven’t been able to find one. So I thought I might as well compile that list for others to use. If you are not familiar with AutoML, read this post for a quick introduction and its pros and cons.

I haven’t been able to test them all and write proper reviews, so this is just a comparison based on features. I tried to pick the features that felt most important to me, but they might not be the most important ones for you. If you think some features are missing, or if you know an AutoML solution that should be on the list, just let me know.

Before we get to the list, I’ll quickly go through the features and how I interpret them.

Features

Deployment

Some solutions can be deployed directly to the cloud with one click. Some just export to TensorFlow, and some even have dedicated export to edge devices.

Types

The types of data supported: text, images, video or tabular. I guess some of the open source solutions can be stretched to do anything if you put in the work, so this might not be the complete truth.

Explainable

Explainability in AI is a hot topic and a very important feature for some projects. Some solutions give you no insights, some give you a lot, and for some providers it might even be a strategic differentiator. I have simply divided this feature into Little, Some and Very Explainable.
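As an illustration of the kind of insight an explainable tool can give, here is a minimal pure-Python sketch of permutation feature importance, one common model-agnostic technique: shuffle one input column at a time and measure how much the model's error grows. This is our own example; we are not claiming that any of the tools below implement it this way, and the toy model and data are hypothetical.

```python
import random


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Average increase in MSE when each feature column is shuffled.

    predict: function mapping one row (list of floats) to a prediction.
    X: list of rows (lists); y: list of targets.
    """
    rng = random.Random(seed)
    baseline = mean_squared_error(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            permuted_error = mean_squared_error(y, [predict(row) for row in X_perm])
            increases.append(permuted_error - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical 'trained' model that only uses feature 0."""
    return 3.0 * row[0]


if __name__ == "__main__":
    data_rng = random.Random(42)
    X = [[data_rng.random(), data_rng.random()] for _ in range(50)]
    y = [3.0 * row[0] for row in X]  # the target depends only on feature 0
    print(permutation_importance(toy_model, X, y))
```

Feature 1 gets an importance of zero because the model ignores it, while shuffling feature 0 destroys the predictions. Real tools add refinements such as held-out data and confidence intervals.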

Monitor

Monitoring models after deployment to catch model drift can be a very useful feature. I divided this into Yes and No.
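Drift monitoring usually comes down to comparing the distribution of live inputs (or predictions) with the distribution seen at training time. Below is a minimal pure-Python sketch of one widely used statistic, the Population Stability Index (PSI), computed for a single feature over equal-width bins taken from the reference data. The bin count, the `eps` floor for empty bins and the example data are our own assumptions. PSI values above roughly 0.2 to 0.25 are often treated as significant drift, but that threshold is a rule of thumb, not a standard.

```python
import bisect
import math


def population_stability_index(reference, live, bins=10, eps=1e-6):
    """PSI between a reference sample (e.g. training data) and a live sample.

    Bins are equal-width over the reference range; live values outside that
    range fall into the first or last bin. Empty bins are floored at `eps`
    so the logarithm stays finite.
    """
    lo, hi = min(reference), max(reference)
    interior_edges = [lo + (hi - lo) * i / bins for i in range(1, bins)]

    def proportions(values):
        counts = [0] * bins
        for v in values:
            counts[bisect.bisect_right(interior_edges, v)] += 1
        return [max(c / len(values), eps) for c in counts]

    ref_p = proportions(reference)
    live_p = proportions(live)
    # Each term is >= 0, so PSI is 0 only when the floored bin proportions match.
    return sum((cur - ref) * math.log(cur / ref) for ref, cur in zip(ref_p, live_p))


if __name__ == "__main__":
    training = [i / 100 for i in range(100)]  # reference feature values
    shifted = [0.5 + i / 200 for i in range(100)]  # live values drifted upward
    print(population_stability_index(training, list(training)))  # 0.0, no drift
    print(population_stability_index(training, shifted))  # large value, drift
```

A monitoring feature would typically run a check like this per feature on a schedule and raise an alert when the value crosses a chosen threshold.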

Accessible

Some of the providers are very easy to use, and some require coding and at least a basic understanding of data science. I included this feature so you can pick the tool that matches the skills you have access to.

Labeling tool

Some have a built-in labeling tool, so you can label data directly before training the model. That can be very useful in some cases.

General / Specialized

Most AutoML solutions are general-purpose, but a few are specialized for specific industries. I suspect specialization will become more popular, so I included this feature.

Open Source

Self-explanatory: is it open source or not?

Includes transfer Learning

Transfer learning is one of the big advantages of AutoML. You get to piggyback on big models so you can get great results with very little data.

AutoML solutions list

Google AutoML

Google AutoML is the one I’m most familiar with. I found it pretty easy to use, even without coding. The biggest issue I’ve had is that the API requires a bunch of setup rather than a simple token or OAuth-based authentication.

Deployment: To cloud, export, edge

Types: Text, Images, Video, Tabular

Explainable: Little

Monitor: No

Accessible: Very

Labeling tool: Used to have one, but it has been discontinued

General / Specialized: Generalized

Open Source: No

Includes transfer Learning: Yes

Link: https://cloud.google.com/automl

Azure AutoML

Microsoft's cloud AutoML seems to be more explainable than Google’s, but it supports only tabular data models.

Deployment: To cloud, some local

Types: Only Tabular

Explainable: Some

Monitor: No

Accessible: Very

Labeling tool: No

General / Specialized: Generalized

Open Source: No

Includes transfer Learning: Yes

Link: https://azure.microsoft.com/en-us/services/machine-learning/automatedml/

Lobe.AI

This solution is still in beta, but it works very well in my experience. I’ll write a review as soon as it goes public. Lobe is so easy to use that you could let a 10-year-old use it to train deep learning models. I’d really recommend it for educational purposes.

Deployment: Local and export to TensorFlow

Types: Images

Explainable: Little

Monitor: -

Accessible: Very - A third grader can use this

Labeling tool: Yes

General / Specialized: Generalized

Open Source: No

Includes transfer Learning: Yes

Link: https://lobe.ai/

Kortical

Kortical seems to be one of the AutoML solutions that differentiate themselves by being as explainable as possible. This can be a huge advantage when you are not just trying to get good results but also trying to understand the business problem better. For that reason, I’m a bit of a fan.

Deployment: To cloud

Types: Tabular

Explainable: Very

Monitor: No

Accessible: Very

Labeling tool: No

General / Specialized: Generalized

Open Source: No

Includes transfer Learning: Not sure

Link: https://kortical.com/

DataRobot

A big player that might even be the first pure AutoML company to go public.

Deployment: To cloud

Types: Text, Images and Tabular

Explainable: Very

Monitor: Yes

Accessible: Very

Labeling tool: No

General / Specialized: Generalized

Open Source: No

Includes transfer Learning: Yes

Link: https://www.datarobot.com/platform/automated-machine-learning/

AWS SageMaker Autopilot

Amazon's AutoML. It requires more technical skills than the other big cloud suppliers' offerings and is quite limited: it supports only two algorithms, XGBoost and logistic regression.

Deployment: To cloud and export

Types: Tabular

Explainable: Some

Monitor: Yes

Accessible: Requires coding

Labeling tool: Yes

General / Specialized: Generalized

Open Source: No

Includes transfer Learning: Yes

Link: https://aws.amazon.com/sagemaker/autopilot/

MLJar

Deployment: Export and Cloud

Types: Tabular

Explainable: Yes

Monitor: -

Accessible: Very

Labeling tool: No

General / Specialized: Generalized

Open Source: Both. MLJar has an open source solution (https://github.com/mljar/mljar-supervised) and a closed source solution.

Includes transfer Learning: Yes

Link: https://mljar.com/

AutoGluon

Deployment: Export

Types: Text, Images, Tabular

Explainable: -

Monitor: -

Accessible: Requires coding

Labeling tool: No

General / Specialized: Generalized

Open Source: Yes

Includes transfer Learning: Yes

Link: https://autogluon.mxnet.io/

JadBio

Deployment: Cloud and Export

Types: Tabular

Explainable: Some

Monitor: No

Accessible: Very

Labeling tool: No

General / Specialized: Specialized (life science)

Open Source: No

Includes transfer Learning: -

Link: https://www.jadbio.com/

Auto-WEKA

This solution supports Bayesian models, which is pretty cool.

Deployment: Export

Types: -

Explainable: -

Monitor: -

Accessible: Requires Code

Labeling tool: No

General / Specialized: Generalized

Open Source: Yes

Includes transfer Learning: No

Link: https://www.cs.ubc.ca/labs/beta/Projects/autoweka/

H2O Driverless AI

Also supports Bayesian models.

Deployment: Export

Types: -

Explainable: -

Monitor: -

Accessible: Semi

Labeling tool: No

General / Specialized: Generalized

Open Source: Both options

Includes transfer Learning: -

Link: https://www.h2o.ai/

Autokeras

Autokeras is one of the most popular open source solutions and is definitely worth trying out.

Deployment: Export

Types: Text, Images, Tabular

Explainable: Possible

Monitor: -

Accessible: Requires Code

Labeling tool: No

General / Specialized: Generalized

Open Source: Yes

Includes transfer Learning: -

Link: https://autokeras.com/

TPOT

Deployment: Export

Types: Images and Tabular

Explainable: Possible

Monitor: -

Accessible: Requires Code

Labeling tool: No

General / Specialized: Generalized

Open Source: Yes

Includes transfer Learning: -

Link: http://epistasislab.github.io/tpot/

PyCaret

Deployment: Export

Types: Text, Tabular

Explainable: Possible

Monitor: -

Accessible: Requires Code

Labeling tool: No

General / Specialized: Generalized

Open Source: Yes

Includes transfer Learning: -

Link: https://github.com/pycaret/pycaret

Auto-sklearn

Deployment: Export

Types: Tabular

Explainable: Possible

Monitor: -

Accessible: Requires Code

Labeling tool: No

General / Specialized: Generalized

Open Source: Yes

Includes transfer Learning: -

Link: https://automl.github.io/auto-sklearn/master/

TransmogrifAI

Made by Salesforce.

Deployment: Export

Types: Text and Tabular

Explainable: Possible

Monitor: -

Accessible: Requires Code

Labeling tool: No

General / Specialized: Generalized

Open Source: Yes

Includes transfer Learning: -

Link: https://transmogrif.ai/

Wearable technologies to make rehab more precise

By Tim Sullivan / Spaulding Rehabilitation Hospital Communications

A group based at the Spaulding Motion Analysis Lab at Spaulding Rehabilitation Hospital published “Enabling Precision Rehabilitation Interventions Using Wearable Sensors and Machine Learning to Track Motor Recovery” in the newest issue of npj Digital Medicine. The aim of the study is to lay the groundwork for the design of “precision rehabilitation” interventions by using wearable technologies to track the motor recovery of individuals with brain injury.

The study found that the technology can accurately track motor recovery and could thus allow clinicians to choose more effective interventions and improve outcomes. The study was a collaborative effort among students and former students connected to the Motion Analysis Lab, under faculty mentorship.

Paolo Bonato, Ph.D., Director of the Spaulding Motion Analysis Lab and senior author on the study said, “By providing clinicians precise data will enable them to design more effective interventions to improve the care we deliver. To have so many of our talented young scientists and researchers from our lab collaborate to create this meaningful paper is especially gratifying for all of our faculty who support our ongoing research enterprise.” Bonato is also an Associate Faculty member at Harvard’s Wyss Institute for Biologically Inspired Engineering.

Catherine Adans-Dester, P.T., Ph.D., a member of Dr. Bonato’s team, served as lead author on the manuscript. “The need to develop patient-specific interventions is apparent when one considers that clinical studies often report satisfactory motor gains only in a portion of participants, which suggests that clinical outcomes could be improved if we had better tools to develop patient-specific interventions. Data collected using wearable sensors provides clinicians with the opportunity to do so with little burden on clinicians and patients,” said Dr. Adans-Dester. The approach proposed in the paper relied on machine learning-based algorithms to derive clinical score estimates from wearable sensor data collected during functional motor tasks. Sensor-based score estimates showed strong agreement with those generated by clinicians.

The results of the study demonstrated that wearable sensor data can be used to derive accurate estimates of the clinical scores used in the clinic to capture the severity of motor impairments and the quality of upper-limb movement patterns. In the study, the upper-limb Fugl-Meyer Assessment (FMA) scale was used to generate clinical scores of the severity of motor impairments, and the Functional Ability Scale (FAS) was used to generate clinical scores of the quality of movement. Wearable sensor data (i.e., accelerometer data) was collected during the performance of eight functional motor tasks taken from the Wolf Motor Function Test, providing a sample of both gross arm movements and fine motor control tasks. Machine learning-based algorithms were developed to derive accurate estimates of the FMA and FAS clinical scores from the sensor data. A total of 37 participants (16 stroke survivors and 21 traumatic brain injury survivors) took part in the study.

Involved in the study in addition to Dr. Bonato and Dr. Adans-Dester were Nicolas Hankov, Anne O’Brien, Gloria Vergara-Diaz, Randie Black-Schaffer, MD, and Ross Zafonte, DO, from the Harvard Medical School Department of Physical Medicine & Rehabilitation at Spaulding Rehabilitation Hospital, Boston, MA, USA; Jennifer Dy of the Department of Electrical and Computer Engineering, Northeastern University, Boston, MA; and Sunghoon I. Lee of the College of Information and Computer Sciences, University of Massachusetts Amherst, Amherst, MA.