
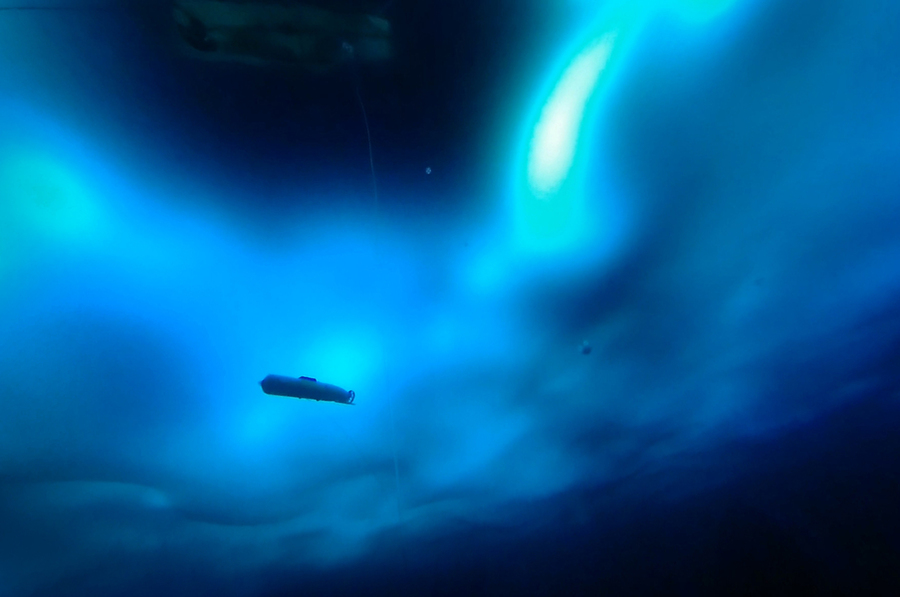

Navigating beneath the Arctic ice

By Mary Beth Gallagher | Department of Mechanical Engineering

There is a lot of activity beneath the vast, lonely expanses of ice and snow in the Arctic. Climate change has dramatically altered the layer of ice that covers much of the Arctic Ocean. Areas of water that used to be covered by a solid ice pack are now covered by thin layers only 3 feet thick. Beneath the ice, a warm layer of water, part of the Beaufort Lens, has changed the makeup of the aquatic environment.

For scientists to understand the role this changing environment in the Arctic Ocean plays in global climate change, they need to map the ocean below the ice cover.

A team of MIT engineers and naval officers led by Henrik Schmidt, professor of mechanical and ocean engineering, is trying to understand environmental changes, their impact on acoustic transmission beneath the surface, and how these changes affect navigation and communication for vehicles traveling below the ice.

“Basically, what we want to understand is how does this new Arctic environment brought about by global climate change affect the use of underwater sound for communication, navigation, and sensing?” explains Schmidt.

To answer this question, Schmidt traveled to the Arctic with members of the Laboratory for Autonomous Marine Sensing Systems (LAMSS) including Daniel Goodwin and Bradli Howard, graduate students in the MIT-Woods Hole Oceanographic Institution Joint Program in oceanographic engineering.

With funding from the Office of Naval Research, the team participated in ICEX 2020 (Ice Exercise 2020), a three-week program hosted by the U.S. Navy in which military personnel, scientists, and engineers work side by side on a variety of research projects and missions.

A strategic waterway

The rapidly changing environment in the Arctic has wide-ranging impacts. In addition to giving researchers more information about the impact of global warming and its effects on marine mammals, the thinning ice could open up new shipping lanes and trade routes in areas that were previously impassable.

Perhaps most crucially for the U.S. Navy, understanding the altered environment also has geopolitical importance.

“If the Arctic environment is changing and we don’t understand it, that could have implications in terms of national security,” says Goodwin.

Several years ago, Schmidt and his colleague Arthur Baggeroer, professor of mechanical and ocean engineering, were among the first to recognize that the warmer waters, part of the Beaufort Lens, coupled with the changing ice composition, impacted how sound traveled in the water.

To successfully navigate throughout the Arctic, the U.S. Navy and other entities in the region need to understand how these changes in sound propagation affect a vehicle’s ability to communicate and navigate through the water.

Using an unpiloted, autonomous underwater vehicle (AUV) built by General Dynamics-Mission Systems (GD-MS), and a system of sensors rigged on buoys developed by the Woods Hole Oceanographic Institution, Schmidt and his team, joined by Dan McDonald and Josiah DeLange of GD-MS, set out to demonstrate a new integrated acoustic communication and navigation concept.

The framework, which was also supported and developed by LAMSS members Supun Randeni, EeShan Bhatt, Rui Chen, and Oscar Viquez, as well as LAMSS alumnus Toby Schneider of GobySoft LLC, would allow vehicles to travel through the water with GPS-level accuracy while employing oceanographic sensors for data collection.

“In order to prove that you can use this navigational concept in the Arctic, we have to first ensure we fully understand the environment that we’re operating in,” adds Goodwin.

Understanding the environment below

After arriving at the Arctic Submarine Lab’s ice camp last spring, the research team deployed a number of conductivity-temperature-depth probes to gather data about the aquatic environment in the Arctic.

“By using temperature and salinity as a function of depth, we calculate the sound speed profile. This helps us understand if the AUV’s location is good for communication or bad,” says Howard, who was responsible for monitoring environmental changes to the water column throughout ICEX.
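To make the step from temperature and salinity to a sound speed profile concrete, here is a minimal sketch using the Mackenzie (1981) nine-term empirical equation for seawater. The article does not say which sound speed equation the team used, so this choice, and the function names `mackenzie_sound_speed` and `sound_speed_profile`, are assumptions for illustration only.

```python
from typing import List, Tuple


def mackenzie_sound_speed(T: float, S: float, D: float) -> float:
    """Sound speed in seawater (m/s) from the Mackenzie (1981) equation.

    T: temperature in degrees C, S: salinity in PSU, D: depth in meters.
    The equation is empirical and intended for roughly -2 to 30 C,
    salinity 25 to 40, and depth 0 to 8000 m.
    """
    return (
        1448.96
        + 4.591 * T
        - 5.304e-2 * T**2
        + 2.374e-4 * T**3
        + 1.340 * (S - 35.0)
        + 1.630e-2 * D
        + 1.675e-7 * D**2
        - 1.025e-2 * T * (S - 35.0)
        - 7.139e-13 * T * D**3
    )


def sound_speed_profile(
    casts: List[Tuple[float, float, float]]
) -> List[Tuple[float, float]]:
    """Turn (depth_m, temp_C, salinity_PSU) samples, e.g. from a
    conductivity-temperature-depth probe, into (depth_m, speed_m_s) pairs."""
    return [(d, mackenzie_sound_speed(t, s, d)) for d, t, s in casts]
```

Because the temperature terms dominate near the surface, a warm intrusion such as the Beaufort Lens shows up as a local bump in the computed profile, which is what changes how sound bends in the layers above and below it.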

Because sound bends (refracts) as it travels through layers of water with different sound speeds, a behavior described by Snell's law, acoustic pressure waves concentrate in some parts of the water column and spread out in others. Understanding these propagation paths is key to predicting good and bad locations for the AUV to operate.
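The bending described above can be sketched with the textbook form of Snell's law for horizontally layered water: along a ray, cos(θ)/c stays constant, where θ is the grazing angle above the horizontal and c is the local sound speed. This is a generic illustration, not the propagation model the team used, and the function name `grazing_angles` is hypothetical.

```python
import math
from typing import List, Optional


def grazing_angles(speeds: List[float], launch_deg: float) -> List[Optional[float]]:
    """Grazing angle (degrees) of a ray in each horizontal layer.

    speeds[0] is the sound speed where the ray is launched at launch_deg.
    Snell's law for stratified media keeps cos(theta) / c constant, so
    entering faster water flattens the ray. If cos(theta) would exceed 1,
    the ray turns back before reaching that layer; such layers get None.
    """
    ray_parameter = math.cos(math.radians(launch_deg)) / speeds[0]
    angles: List[Optional[float]] = []
    turned = False
    for c in speeds:
        cos_theta = ray_parameter * c
        if turned or cos_theta > 1.0 + 1e-12:
            turned = True  # ray refracted back; it never enters deeper layers
            angles.append(None)
        else:
            angles.append(math.degrees(math.acos(min(cos_theta, 1.0))))
    return angles
```

For example, a ray launched at 10 degrees in 1440 m/s water flattens to about 3 degrees in a 1460 m/s layer and turns back before a 1470 m/s layer. Shallow rays like this stay trapped near the slower water, which is one way acoustic energy collects in some parts of the water column.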

To map the areas of the water with optimal acoustic properties, Howard modified the traditional signal-to-noise ratio (SNR) with a metric known as the multi-path penalty (MPP), which penalizes areas where the AUV receives echoes of its messages. As a result, the vehicle prioritizes operating in areas with less reverberation.

These data allowed the team to identify exactly where the vehicle should be positioned in the water column for optimal communications, which in turn enables accurate navigation.
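The idea of penalizing echoes and then choosing a depth can be illustrated with a toy score. The article does not define the MPP, so the formula below is a hypothetical stand-in: energy arriving shortly after the first arrival counts as signal, later echoes are treated like extra noise, and the depth with the best penalized score wins. `Arrival`, `penalized_snr_db`, `best_depth`, and the 1 ms window are all assumptions.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Arrival:
    delay_ms: float  # arrival time of this path (ms)
    power: float     # received power of this path (linear units)


def penalized_snr_db(arrivals: List[Arrival], noise_power: float,
                     window_ms: float = 1.0) -> float:
    """Hypothetical multipath-penalized SNR in dB (not Howard's MPP).

    Paths within window_ms of the first arrival count as signal; later
    echoes are added to the noise, so reverberant spots score lower.
    """
    first = min(a.delay_ms for a in arrivals)
    signal = sum(a.power for a in arrivals if a.delay_ms - first <= window_ms)
    echoes = sum(a.power for a in arrivals if a.delay_ms - first > window_ms)
    return 10.0 * math.log10(signal / (noise_power + echoes))


def best_depth(candidates: Dict[float, List[Arrival]],
               noise_power: float) -> Tuple[float, float]:
    """Return (depth_m, score_db) for the candidate depth with the best score."""
    scores = {d: penalized_snr_db(arr, noise_power) for d, arr in candidates.items()}
    depth = max(scores, key=lambda d: scores[d])
    return depth, scores[depth]
```

With a noise power of 0.1, a depth that hears one clean path of power 1.0 scores 10 dB, while a depth with a stronger direct path (2.0) plus a strong echo (1.5) scores about 1 dB. Plain SNR would favor the second depth; the penalty steers the vehicle to the first.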

While Howard gathered data on how the characteristics of the water impact acoustics, Goodwin focused on how sound is projected and reflected off the ever-changing ice on the surface.

To get these data, the AUV was outfitted with a device that measured the motion of the vehicle relative to the ice above. Sound from the vehicle was picked up by several receivers attached to moorings hanging from the ice.

The researchers then used the data from the vehicle and the receivers to compute exactly where the vehicle was at a given time. This location information, together with the data Howard gathered on the acoustic environment in the water, offers a new navigational concept for vehicles traveling in the Arctic Ocean.
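The article does not describe how the position was computed, but a common textbook approach to locating a source from several fixed receivers is range-based multilateration. The sketch below assumes one-way travel times, a single constant sound speed, and ranges already projected into the horizontal plane; real Arctic processing would have to account for the ray bending shown by the sound speed profile. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def travel_times_to_ranges(times_s: Sequence[float], sound_speed: float) -> List[float]:
    """Convert one-way travel times (s) to ranges (m) with a constant sound speed."""
    return [t * sound_speed for t in times_s]


def multilaterate_2d(receivers: Sequence[Tuple[float, float]],
                     ranges: Sequence[float]) -> Tuple[float, float]:
    """Least-squares 2D position from ranges to three or more receivers.

    Subtracting the first circle equation (x-x0)^2 + (y-y0)^2 = r0^2 from
    each of the others gives linear equations in (x, y), solved here through
    the 2x2 normal equations.
    """
    if len(receivers) < 3 or len(receivers) != len(ranges):
        raise ValueError("need at least three receivers, one range each")
    x0, y0 = receivers[0]
    r0 = ranges[0]
    a11 = a12 = a22 = b1 = b2 = 0.0  # entries of A^T A and A^T b
    for (xi, yi), ri in zip(receivers[1:], ranges[1:]):
        ax = 2.0 * (xi - x0)
        ay = 2.0 * (yi - y0)
        rhs = r0**2 - ri**2 + xi**2 - x0**2 + yi**2 - y0**2
        a11 += ax * ax
        a12 += ax * ay
        a22 += ay * ay
        b1 += ax * rhs
        b2 += ay * rhs
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("degenerate receiver geometry (e.g. collinear)")
    return (a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det
```

With receivers at (0, 0), (1000, 0), and (0, 1000) m and exact ranges from a vehicle at (300, 400) m, the solver recovers (300, 400). With noisy ranges and more receivers, the least-squares form averages out some of the error.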

Protecting the Arctic

After a series of setbacks and challenges due to the unforgiving conditions in the Arctic, the team successfully demonstrated that their navigational concept worked. Thanks to the team's efforts, naval operations and future trade vessels may be able to take advantage of the changing conditions in the Arctic to maximize navigational accuracy and improve underwater communications.

“Our work could improve the ability for the U.S. Navy to safely and effectively operate submarines under the ice for extended periods,” Howard says.

Howard acknowledges that in addition to the changes in physical climate, the geopolitical climate continues to change. This only strengthens the need for improved navigation in the Arctic.

“The U.S. Navy’s goal is to preserve peace and protect global trade by ensuring freedom of navigation throughout the world’s oceans,” she adds. “The navigational concept we proved during ICEX will serve to help the Navy in that mission.”


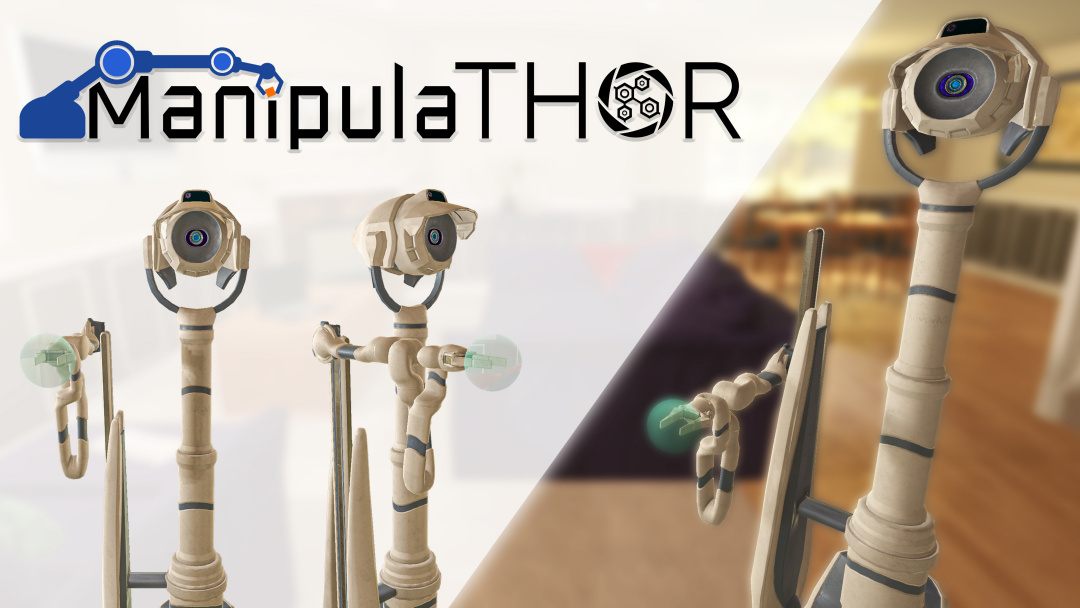

ManipulaTHOR: a framework for visual object manipulation

The Allen Institute for AI (AI2) announced the 3.0 release of its embodied artificial intelligence framework, AI2-THOR, which adds active object manipulation to the testing framework. ManipulaTHOR is a first-of-its-kind virtual agent with a highly articulated robot arm: three joints with limbs of equal length, composed entirely of swivel joints, intended to give it a more human-like approach to object manipulation.
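To show how an arm built from equal-length limbs and rotating joints places its gripper, here is a minimal forward-kinematics sketch for a planar three-link arm. It is a deliberate simplification: ManipulaTHOR's arm moves in 3D, and this is neither AI2-THOR's API nor its kinematic model. The function name `planar_fk` and the unit link length are assumptions.

```python
import math
from typing import List, Sequence, Tuple


def planar_fk(joint_angles: Sequence[float],
              link_length: float = 1.0) -> List[Tuple[float, float]]:
    """Positions of the base, each joint, and the end effector of a planar
    arm whose links all share the same length.

    Each joint angle (radians) is measured relative to the previous link,
    so the absolute heading of a link is the running sum of the angles.
    """
    x = y = 0.0
    heading = 0.0
    points = [(x, y)]
    for theta in joint_angles:
        heading += theta
        x += link_length * math.cos(heading)
        y += link_length * math.sin(heading)
        points.append((x, y))
    return points
```

For instance, `planar_fk([0.0, 0.0, 0.0])` stretches the arm straight out to (3, 0), while `planar_fk([0.0, math.pi / 2, -math.pi / 2])` bends it up and back level, ending at (2, 1). Planning a grasp is the inverse problem: finding joint angles that put the last point on the target object.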

AI2-THOR is the first testing framework for studying object manipulation in more than 100 visually rich, physics-enabled rooms. By enabling the training and evaluation of generalized capabilities in manipulation models, ManipulaTHOR allows much faster training in more complex environments than current real-world training methods, while also being far safer and more cost-effective.

“Imagine a robot being able to navigate a kitchen, open a refrigerator and pull out a can of soda. This is one of the biggest and yet often overlooked challenges in robotics and AI2-THOR is the first to design a benchmark for the task of moving objects to various locations in virtual rooms, enabling reproducibility and measuring progress,” said Dr. Oren Etzioni, CEO at AI2. “After five years of hard work, we can now begin to train robots to perceive and navigate the world more like we do, making real-world usage models more attainable than ever before.”

Although object manipulation is an established research area in robotics, its visual reasoning aspect has consistently been one of the biggest hurdles researchers face. Robots have long struggled to correctly perceive, navigate, act, and communicate with others in the world. AI2-THOR addresses this problem with complex simulated testing environments that researchers can use to train robots for eventual activities in the real world.

AI2-THOR, which helped pioneer embodied AI research, lets researchers efficiently devise solutions not only to the object manipulation problem but also to other traditional problems associated with robotics testing.

“In comparison to running an experiment on an actual robot, AI2-THOR is incredibly fast and safe,” said Roozbeh Mottaghi, Research Manager at AI2. “Over the years, AI2-THOR has enabled research on many different tasks such as navigation, instruction following, multi-agent collaboration, performing household tasks, reasoning if an object can be opened or not. This evolution of AI2-THOR allows researchers and scientists to scale the current limits of embodied AI.”

In addition to the 3.0 release, the team is hosting the RoboTHOR Challenge 2021 in conjunction with the Embodied AI Workshop at this year's Conference on Computer Vision and Pattern Recognition (CVPR). AI2's challenges cover RoboTHOR object navigation, ALFRED (instruction-following robots), and Room Rearrangement.

The ManipulaTHOR paper is available at ai2thor.allenai.org/publications.