Conversation on racism and robotics

Talking about racism and its impact on robotics and roboticists was the first conversation in our new biweekly online discussion series “Society, Robots and Us”, held on alternate Tuesdays at 6pm PDT. It was a generous, honest and painful discussion that I hope has left a lasting impact on everyone who listened. There is systemic racism in America, and it affects robotics and roboticists in many, many ways.

US Senator Elizabeth Warren, in conversation today with Alicia Garza from Black Futures Lab, said, “America was founded on principles of liberty and freedom, but it was built on the backs of enslaved people. This is a truth we must not ignore. Racism and white supremacy have shaped every crucial aspect of our economy, and our political system for generations now.”

The speakers in ‘Society, Robots and Us’, Chad Jenkins, Monroe Kennedy III, Jasmine Lawrence, Tom Williams, Ken Goldberg and Maynard Holliday, explored the impact of racism on their experiences in robotics and shared explicit information about changes that we all can make. We also discussed lessons for allies and supporters, and what a difference support could make. Please listen to the full discussion, but Chad Jenkins’s notes capture some of the critical insights.

“I have been in computing for nearly 30 years and a roboticist for over 20 years. Thus, I have been able to experience firsthand many of the systemic problems that face our field. Let me build on some of the recommendations from the blackincomputing.org open letter and call to action.”

In particular, I believe we can bring equal opportunity to STEM quickly by upholding Title VI of the Civil Rights Act of 1964 and Title IX of the Educational Amendments of 1972 for institutions receiving federal funding, and public funding more generally. We now incentivize systemic disparate impacts in STEM.

Like law enforcement, university faculty are asked to do too much. Given our bandwidth limits, we have to make hard choices about what gets our attention and effort.

This creates a dilemma in every faculty member about whether to bolster their own personal advancement (by gaining social acceptance in the establishments of the field that control access to funding, hiring, and publishing through peer review) or further create and extend opportunity to others (taking a professional sacrifice to provide mentorship and empathy to future generations towards broadening participation in the STEM workforce).

It is clear STEM incentivizes the former given systemic exclusion of underrepresented minorities, with disastrous results thus far.

I believe we are a vastly better society with the upholding of Title VII of the Civil Rights Act of 1964 yesterday by the Supreme Court to prohibit employment discrimination against LGBTQ+ citizens. Discrimination is wrong. My hope is that we can apply this same standard and attention for Title VI of this statute to outcomes in STEM. This is not an issue of altruism; it reflects our true values as a nation and affects the quality of our work and its impact on the world.

There are placeholder measures that can be enacted to incentivize equal opportunity. For example, universities could decline sabbatical and leave requests from faculty seeking to collaborate with companies that have failed to provide equal opportunity, such as OpenAI and Google DeepMind.

To achieve systemic fairness in robotics, however, we must go beyond token gestures to address the causal factors of inequity rooted in the core economic incentives of our universities. It is universities that are the central ladder to opportunity through the development of future leaders, innovators, and contributors to our society.

We have the tools at hand today to create equal opportunity in STEM. The question is whether we have the will.

Equal opportunity cannot be true for anyone unless equal opportunity is true for everyone.

Odeste Chadwicke Jenkins, Associate Professor, University of Michigan Robotics Institute

Our next episode of “Society, Robots and Us” on June 30 is going to discuss the role and the rollout of killer robots, but we’ll be coming back to talk more about racism, diversity and inclusion in robotics because we’ve only just scratched the surface.

Next-generation cockroach-inspired robot is small but mighty

By Leah Burrows

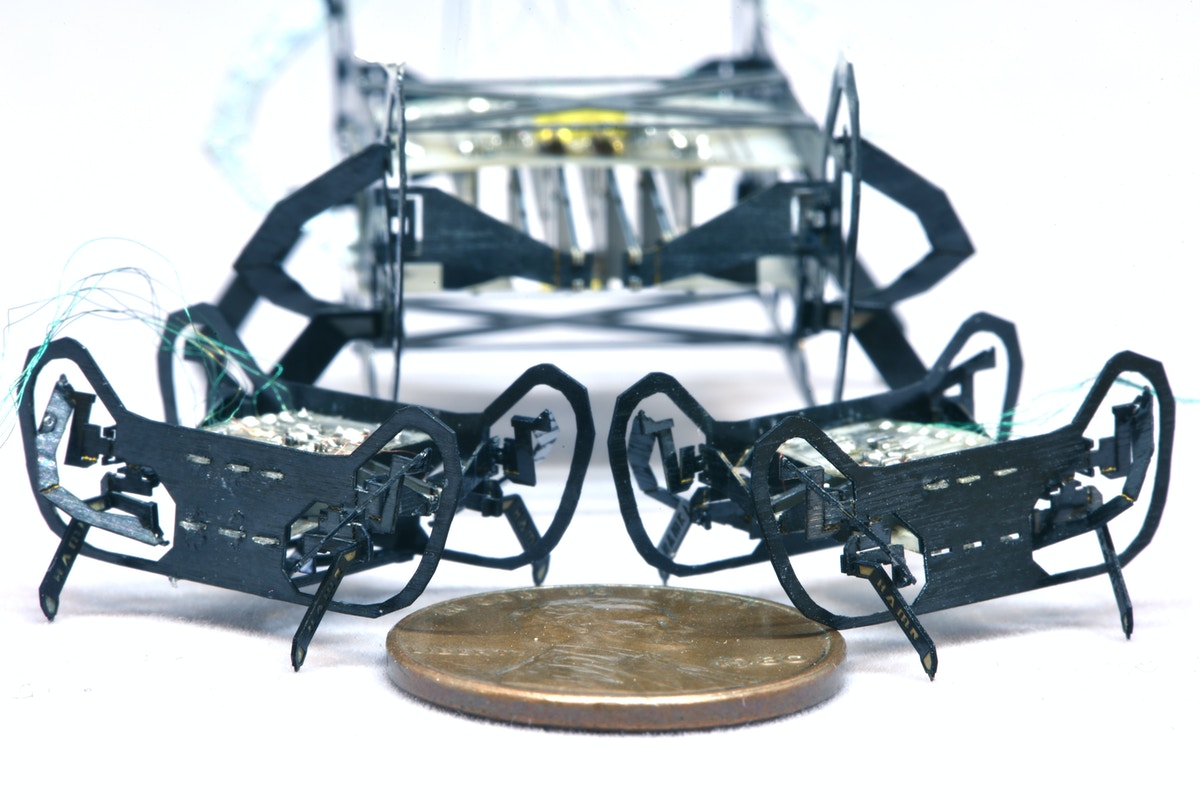

This itsy-bitsy robot can’t climb up the waterspout yet, but it can run, jump, carry heavy payloads and turn on a dime. Dubbed HAMR-JR, this microrobot, developed by researchers at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) and Harvard’s Wyss Institute for Biologically Inspired Engineering, is a half-scale version of the cockroach-inspired Harvard Ambulatory Microrobot or HAMR.

About the size of a penny, HAMR-JR can perform almost all of the feats of its larger-scale predecessor, making it one of the most dexterous microrobots to date.

“Most robots at this scale are pretty simple and only demonstrate basic mobility,” said Kaushik Jayaram, Ph.D., a former postdoctoral fellow at SEAS and the Wyss Institute, and first author of the paper. “We have shown that you don’t have to compromise dexterity or control for size.”

Jayaram is currently an Assistant Professor at the University of Colorado, Boulder.

The research was presented virtually at the International Conference on Robotics and Automation (ICRA 2020) this week.

One of the big questions going into this research was whether or not the pop-up manufacturing process used to build previous versions of HAMR and other microbots, including the RoboBee, could be used to build robots at multiple scales — from tiny surgical bots to large-scale industrial robots.

PC-MEMS (short for printed circuit microelectromechanical systems) is a fabrication process in which the robot’s components are etched into a 2D sheet and then popped out into a 3D structure. To build HAMR-JR, the researchers simply shrank the 2D sheet design of the robot, along with the actuators and onboard circuitry, to recreate a smaller robot with all the same functionalities.

“The wonderful part about this exercise is that we did not have to change anything about the previous design,” said Jayaram. “We proved that this process can be applied to basically any device at a variety of sizes.”

HAMR-JR comes in at 2.25 centimeters in body length and weighs about 0.3 grams — a fraction of the weight of an actual penny. It can run about 14 body lengths per second, making it not only one of the smallest but also one of the fastest microrobots.
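As a rough back-of-the-envelope check, the two figures above can be combined into an absolute running speed. The short Python sketch below uses only the body length and relative speed quoted in this article; the function name is purely illustrative.

```python
def absolute_speed_cm_per_s(body_length_cm: float, body_lengths_per_s: float) -> float:
    """Convert a relative speed (body lengths per second) into cm/s."""
    return body_length_cm * body_lengths_per_s


# Figures quoted in the article for HAMR-JR.
hamr_jr_speed = absolute_speed_cm_per_s(body_length_cm=2.25, body_lengths_per_s=14)
print(f"{hamr_jr_speed:.1f} cm/s")  # 31.5 cm/s
```

In other words, a robot roughly the size of a penny covers a little over 30 centimeters each second.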

Scaling down does change some of the principles governing things like stride length and joint stiffness, so the researchers also developed a model that can predict locomotion metrics like running speeds, foot forces, and payload based on a target size. The model can then be used to design a system with the required specifications.

“This new robot demonstrates that we have a good grasp on the theoretical and practical aspects of scaling down complex robots using our folding-based assembly approach,” said co-author Robert Wood, Ph.D., Charles River Professor of Engineering and Applied Sciences in SEAS and Core Faculty Member of the Wyss Institute.

This research was co-authored by Jennifer Shum, Samantha Castellanos and E. Farrell Helbling, Ph.D. This research was supported by the Defense Advanced Research Projects Agency (DARPA) and the Wyss Institute.

Drones learn acrobatics by themselves

Researchers from NCCR Robotics at the University of Zurich and Intel developed an algorithm that pushes autonomous drones to their physical limit.

Since the dawn of flight, acrobatics has been a way for pilots to prove their bravery and worth. It is also a way to push the envelope of what can be done with an aircraft, learning lessons that are useful to all pilots and engineers. The same is true for unmanned flight. Professional drone pilots perform acrobatic maneuvers in dedicated competitions, pushing drones to their physical limits and perfecting their control and efficiency.

Now a collaboration between researchers from the University of Zurich (part of the NCCR Robotics consortium) and Intel has developed a quadcopter that can learn to fly acrobatics autonomously, paving the way to drones that can fully exploit their agility and speed, and cover more distance within their battery life. Though probably no drone mission will ever require a power loop or a Matty flip (typical acrobatic maneuvers), a drone that can perform them autonomously is likely to be more efficient at all times.

A step forward towards integrating drones in our everyday life

Researchers of the University of Zurich and Intel developed a novel algorithm that pushes autonomous drones with only on-board sensing and computation close to their physical limits. To prove the efficiency of the developed algorithm, the researchers made an autonomous quadrotor fly acrobatic maneuvers such as the Power Loop, the Barrel Roll, and the Matty Flip, during which the drone incurs accelerations of up to 3g. “Several applications of drones, such as search-and-rescue or delivery, will strongly benefit from faster drones, which can cover large distances in limited time. With this algorithm we have taken a step forward towards integrating autonomously navigating drones into our everyday life”, says Davide Scaramuzza, Professor and Director of the Robotics and Perception Group at the University of Zurich, and head of the Rescue Robotics Grand Challenge for NCCR Robotics.

Simulation for training, real-world for testing

The navigation algorithm that allows drones to fly acrobatic maneuvers is represented by an artificial neural network that directly converts observations from the on-board camera and inertial sensors to control commands. This neural network is trained exclusively in simulation. Learning agile maneuvers entirely in simulation has several advantages: (i) maneuvers can be simply specified by reference trajectories in simulation and do not require expensive demonstrations by a human pilot, (ii) training is safe and does not pose any physical risk to the quadrotor, and (iii) the approach can scale to a large number of diverse maneuvers, including ones that can only be performed by the very best human pilots.

The algorithm transfers its knowledge to reality by using appropriate abstractions of the visual and inertial inputs (i.e., feature tracks and integrated inertial measurements), which decreases the gap between the simulated and physical world. Indeed, without physically-accurate modeling of the world or any fine-tuning on real-world data, the trained neural network can be deployed on a real quadrotor to perform acrobatic maneuvers.
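To give a feel for what "integrated inertial measurements" can mean, here is a deliberately simplified Python sketch. It is not the researchers' pipeline: the function name, the sample format and the plain Euler summation are all assumptions made for illustration, and it ignores gravity, sensor bias and rotation between samples. It only shows the idea of condensing a window of raw gyroscope and accelerometer readings into a change in angle and a change in velocity.

```python
import math
from typing import List, Sequence, Tuple


def integrate_imu(
    samples: Sequence[Tuple[Sequence[float], Sequence[float]]], dt: float
) -> Tuple[List[float], List[float]]:
    """Sum (gyro, accel) samples over a window using simple Euler integration.

    Hypothetical helper: returns (delta_angle_rad, delta_velocity_m_s).
    Gravity, bias and inter-sample rotation are ignored on purpose.
    """
    delta_angle = [0.0, 0.0, 0.0]
    delta_velocity = [0.0, 0.0, 0.0]
    for gyro, accel in samples:
        for axis in range(3):
            delta_angle[axis] += gyro[axis] * dt
            delta_velocity[axis] += accel[axis] * dt
    return delta_angle, delta_velocity


G = 9.81  # standard gravity in m/s^2
# One second of made-up data at 100 Hz: 1 rad/s yaw rate and a constant 3g
# acceleration along x, matching the peak figure quoted earlier in the article.
window = [((0.0, 0.0, 1.0), (3 * G, 0.0, 0.0))] * 100
d_angle, d_vel = integrate_imu(window, dt=0.01)
print(f"yaw change: {d_angle[2]:.2f} rad, velocity change: {d_vel[0]:.2f} m/s")
```

A compact summary of this kind looks much the same whether the raw readings come from a simulator or from real hardware, which is one intuition for why such abstractions can narrow the gap between the two.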

Towards fully autonomous drones

Within a few hours of training in simulation, the algorithm learns to fly acrobatic maneuvers with an accuracy comparable to professional human pilots. Nevertheless, the research team warns that there is still a significant gap between what human pilots and autonomous drones can do. “The best human pilots still have an edge over autonomous drones given their ability to quickly interpret and adapt to unexpected situations and changes in the environment,” says Prof. Scaramuzza.

Paper: E. Kaufmann*, A. Loquercio*, R. Ranftl, M. Müller, V. Koltun, D. Scaramuzza “Deep Drone Acrobatics”, Robotics: Science and Systems (RSS), 2020

Why robotics startups fail!

What are the main reasons that robotics companies and startups fail? Is it the technology or is it the business? Fresh Consulting analyzed significant industry case studies, from Rethink Robotics to iRobot, for its whitepaper “Why Robotics Companies Fail,” and launched it on June 11 at a panel discussion moderated by James Dietrich from Fresh Consulting, with guest speakers Aaron Prather, Senior Advisor for the Technology Research and Planning Team at FedEx Express; Andra Keay, Managing Director of Silicon Valley Robotics and startup accelerator advisor; and Eric Klein, Partner and Founder at Lemnos Labs.

In a lively discussion, the speakers weighed in on what key factors for success or failure were most likely in their experience. Andra Keay believes that lack of business fundamentals is the most critical error a young company faces. “There are a lot of resources out there to help startups pitch, value their company, draw up cap tables and divide equity. We’ve never had such a well educated set of founders from that perspective. But still simple business fundamentals bring down startups, whereas serial entrepreneurs know how to avoid a lot of those pitfalls.”

As Eric Klein continued, “We’re entering a golden era for applied robotics. It’s never been easier or cheaper from a technological standpoint to build robotics companies. But from a venture capital perspective there are still business barriers, particularly in understanding the customer’s economics.”

Drawing on a wealth of experience at FedEx working with robotics companies, Aaron Prather agreed that a lack of customer knowledge and understanding is the most significant point of failure for startups. “One of the things that we’re really big on at FedEx is seeing if you are matching technology to the right use case. How well do you know your customer’s problems and needs?”

According to the whitepaper, the five central themes that are consistent among failed robotics companies are:

- lacking in business fundamentals,

- poor market fit and timing,

- bad user experience and integration,

- misaligned investors and partners,

- and focusing on the wrong problem.

Is it the investor or the startup who should take the blame for failure? The panelists all agreed that misalignment is the key issue. Keay said “it’s honestly scary that startups still need to be told to ask their investors questions! It’s not just about getting money, it’s about finding the right partners for your company’s growth. Some investors are hands-on, some are not, which is fine. Startups need to ask questions about what value they’re getting out of the deal.”

According to Klein, “if you don’t have strong business fundamentals or you’re doing all your learning real time, then that’s like driving into the fog of war and hoping to find your way out. Your selection of a venture capitalist is a two way relationship. A serial entrepreneur may say, Hey, I know a lot of what I need to do and what I need is access to silent capital. But for other folks it may be their first time, even if they’re an amazing crew of mechatronics folks coming out of the best schools on a global basis, Waterloo or Tel Aviv or Shenzhen or Sydney. Wherever you come from, your mechatronics degree means you can build the product. And the first risk any venture capitalist is trying to disassemble is engineering risk. But the second equally important risk is product market fit.”

The discussion continued with the pros and cons of the Robot-As-A-Service business model, which is becoming very popular in the venture capital community but doesn’t make as much sense for some larger customers, such as FedEx, who are happy to put robots on the capex budget rather than the opex budget. If the customer is always right, then a startup needs to adapt its business model, in spite of a push from investors eager for ARR (annual recurring revenue) or similar business models borrowed from the software industry.

Another discussion point was that finding the right metrics for success for each company is still a black art. Is it volume? Is it revenue? Is it number of customers? And the more innovative you are, the harder it might be to arrive at the right metrics. Keay suggested Series C funding as one proxy metric for the number of deployments, and Klein pointed out that, in his opinion, most robotics startups are seriously undervalued at the Series B and C stages at the moment, because investors don’t yet place sufficient value on the ability to produce multiple physical robots.

Final comments from the panel included a request for startups to get in touch. FedEx, Lemnos Labs and Silicon Valley Robotics are all there to share knowledge that can help startups succeed, and as Klein said, “Between the three of us as panelists we have this collective scar tissue from having done this for a while. And I want you to make spectacular mistakes. Don’t make the silly mistakes I made as an entrepreneur for 20 years, make a new spectacular one. So network in the robotics community.”

We hope you enjoyed this in-depth discussion on the topic of “Why Robotics Companies Succeed or Fail” as much as we did. In case you missed it, we’ve made the webinar recording available for you to watch online! Click here to view the recording.

If you haven’t already, please use this link to download our newest white paper, “Why Robotics Companies Fail,” which provides a deeper exploration into the topics and themes we discussed with our panelists.