Guest post by José Hernández-Orallo, Professor at Technical University of Valencia

Two decades ago I started working on metrics of machine intelligence. At that time, during the glacial days of the second AI winter, few were really interested in measuring something that AI lacked completely. And very few, such as David L. Dowe and me, were interested in metrics of intelligence linked to algorithmic information theory, where the models of interaction between an agent and the world were sequences of bits, and intelligence was formulated using Solomonoff’s and Wallace’s theories of inductive inference.

In the meantime, seemingly dozens of variants of the Turing test were proposed every year, the CAPTCHAs were introduced and David showed how easy it is to solve some IQ tests using a very simple program based on a big-switch approach. And, today, a new AI spring has arrived, triggered by a blossoming machine learning field, bringing a more experimental approach to AI with an increasing number of AI benchmarks and competitions (see a previous entry in this blog for a survey).

Considering this 20-year perspective, last year was special in many ways. The first in a series of workshops on evaluating general-purpose AI took off, echoing the increasing interest in the assessment of artificial general intelligence (AGI) systems, capable of finding diverse solutions for a range of tasks. Evaluating these systems is different from, and more challenging than, the traditional task-oriented evaluation of specific systems, such as a robotic cleaner, a credit scoring model, a machine translator or a self-driving car. The idea of evaluating general-purpose AI systems using videogames caught on. The Arcade Learning Environment (the Atari 2600 games) and the more flexible Video Game Definition Language, with its associated competition, became increasingly popular for the evaluation of AGI and its recent breakthroughs.

Last year also witnessed the introduction of a different kind of AI evaluation platform, such as Microsoft’s Malmö, GoodAI’s School, OpenAI’s Gym and Universe, DeepMind’s Lab, Facebook’s TorchCraft and CommAI-env. Based on a reinforcement learning (RL) setting, these platforms make it possible to create many different tasks and connect RL agents through a standard interface. Many of these platforms are well suited for the new paradigms in AI, such as deep reinforcement learning, and for some open-source machine learning libraries. After thousands of episodes or millions of steps against a new task, these systems are able to excel, usually with better-than-human performance.

Despite the myriad applications and breakthroughs that have been derived from this paradigm, there seems to be a consensus in the field that the main open problem lies in how an AI agent can reuse the representations and skills from one task in new ones, making it possible to learn a new task much faster, from a few examples, as humans do. This can be seen as a mapping problem (usually under the term transfer learning) or as a sequential problem (usually under the terms gradual, cumulative, incremental, continual or curriculum learning).

One of the key notions associated with a system’s capability to build new concepts and skills on top of previous ones is usually referred to as “compositionality”, which is well documented in humans from early childhood. Systems are able to combine the representations, concepts or skills that have been learned previously in order to solve a new problem. For instance, an agent can combine the ability to climb a ladder with its use as a possible way out of a room, or an agent can learn multiplication after learning addition.

In my opinion, two of the previous platforms are better suited for compositionality: Malmö and CommAI-env. Malmö has all the ingredients of a 3D game, and AI researchers can experiment and evaluate agents with vision and 3D navigation, which is what many research papers using Malmö have done so far, as this is a hot topic in AI at the moment. However, to me, the most interesting feature of Malmö is building and crafting, where agents must necessarily combine previous concepts and skills in order to create more complex things.

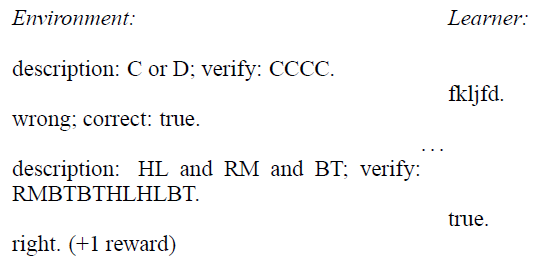

CommAI-env is clearly an outlier in this set of platforms. It is not a video game in 2D or 3D. Video or audio don’t have any role there. Interaction is just produced through a stream of input/output bits and rewards, which are just +1, 0 or -1. Basically, actions and observations are binary. The rationale behind CommAI-env is to give prominence to communication skills, but it still allows for rich interaction, patterns and tasks, while “keeping all further complexities to a minimum”.
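To make the bit-level setting concrete, here is a minimal sketch of an interaction loop in that spirit. It is not the CommAI-env API: the `EchoBitTask` class, its methods and the `run_episode` helper are hypothetical names invented for illustration, and the toy task (repeat the last observed bit) only hands out +1 and -1, leaving 0 unused.

```python
import random
from typing import Callable, Tuple


class EchoBitTask:
    """Hypothetical toy task: the learner is rewarded for repeating the last bit it saw."""

    def __init__(self, seed: int = 0) -> None:
        self._rng = random.Random(seed)
        self._last_bit = self._rng.randint(0, 1)

    def first_observation(self) -> int:
        """Return the first bit shown to the learner."""
        return self._last_bit

    def step(self, action_bit: int) -> Tuple[int, int]:
        """Consume the learner's bit and return (next observation bit, reward)."""
        # Reward is +1 for a correct echo and -1 otherwise (0 is not used here).
        reward = 1 if action_bit == self._last_bit else -1
        self._last_bit = self._rng.randint(0, 1)
        return self._last_bit, reward


def run_episode(policy: Callable[[int], int], steps: int = 100, seed: int = 0) -> int:
    """Run one episode and return the sum of rewards collected by the policy."""
    env = EchoBitTask(seed)
    obs = env.first_observation()
    total = 0
    for _ in range(steps):
        action = policy(obs)
        obs, reward = env.step(action)
        total += reward
    return total


def echo_policy(obs: int) -> int:
    """Policy that solves the task: output the bit that was just observed."""
    return obs


def flip_policy(obs: int) -> int:
    """Policy that always answers with the opposite bit, so it is always wrong."""
    return 1 - obs
```

With this sketch, `run_episode(echo_policy)` collects the maximum total reward and `run_episode(flip_policy)` the minimum, while nothing beyond single bits and scalar rewards crosses the interface.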

When I learned that the General AI Challenge was using CommAI-env for its warm-up round I was ecstatic. Participants could focus on RL agents without the complexities of vision and navigation. Of course, vision and navigation are very important for AI applications, but they create many extra complications if we want to understand (and evaluate) gradual learning. For instance, two identical tasks for which the texture of the walls changes can be seen as requiring higher transfer effort than two slightly different tasks with the same texture. In other words, these would be extra confounding factors that would make the analysis of task transfer and task dependencies much harder. It is then a wise choice to exclude them from the warm-up round. There will be occasions during other rounds of the challenge for including vision, navigation and other sorts of complex embodiment. Starting with a minimal interface to evaluate whether the agents are able to learn incrementally is not only a challenging but also an important open problem for general AI.

Also, the warm-up round has modified CommAI-env in such a way that bits are packed into 8-bit (1 byte) characters. This makes the definition of tasks more intuitive and makes the ASCII coding transparent to the agents. Basically, the set of actions and the set of observations are each extended to 256 symbols. But interestingly, the two sets are the same, which allows many possibilities that are unusual in reinforcement learning, where these sets are usually different. For instance, an agent with primitives such as “copy input to output” and other sequence transformation operators can compose them in order to solve the task. Variables, and other kinds of abstractions, play a key role.
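The two ideas in this paragraph, packing bits into characters and composing string primitives, can be sketched in a few lines. This is an illustration under stated assumptions, not the challenge's code: the most-significant-bit-first ordering, the helper names (`bits_to_text`, `text_to_bits`, `compose`) and the three primitives are all hypothetical choices made for this example.

```python
from functools import reduce
from typing import Callable, List

Transform = Callable[[str], str]


def text_to_bits(text: str) -> List[int]:
    """Unpack each 8-bit character into bits, most significant bit first (assumed order)."""
    bits: List[int] = []
    for ch in text:
        code = ord(ch)
        if code > 255:
            raise ValueError("only 8-bit characters are supported")
        bits.extend((code >> shift) & 1 for shift in range(7, -1, -1))
    return bits


def bits_to_text(bits: List[int]) -> str:
    """Pack a flat bit stream back into 8-bit characters."""
    if len(bits) % 8 != 0:
        raise ValueError("bit stream length must be a multiple of 8")
    chars = []
    for i in range(0, len(bits), 8):
        value = 0
        for bit in bits[i:i + 8]:
            value = (value << 1) | bit
        chars.append(chr(value))
    return "".join(chars)


# Hypothetical primitives an agent might already have learned.
def copy(s: str) -> str:
    return s


def reverse(s: str) -> str:
    return s[::-1]


def double(s: str) -> str:
    return s + s


def compose(*steps: Transform) -> Transform:
    """Chain primitives left to right into a new skill."""
    return lambda s: reduce(lambda acc, step: step(acc), steps, s)


# A new skill built only from existing primitives.
reverse_then_double = compose(reverse, double)
```

Because observations and actions share the same alphabet, the output of one primitive is always a valid input for the next, which is what makes this kind of chaining possible without any translation layer.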

This might give the impression that we are back to Turing machines and symbolic AI. In a way, this is the case, and very much in line with Turing’s vision in his 1950 paper: “it is possible to teach a machine by punishments and rewards to obey orders given in some language, e.g., a symbolic language”. But in 2017 we have a range of techniques that weren’t available just a few years ago. For instance, Neural Turing Machines and other neural networks with symbolic memory can be very well suited for this problem.

By no means does this indicate that the legion of deep reinforcement learning enthusiasts cannot bring their apparatus to this warm-up round. Indeed, they won’t be disappointed by this challenge if they really work hard to adapt deep learning to this problem. They probably won’t need a convolutional network tuned for visual pattern recognition, but there are many possibilities and challenges in how to make deep learning work in a setting like this, especially because the fewer examples, the better, and deep learning usually requires many examples.

As a plus, the simple, symbolic sequential interface opens the challenge to many other areas in AI: not only recurrent neural networks but also techniques from natural language processing, evolutionary computation, compression-inspired algorithms or even areas such as inductive programming, with its powerful string-handling primitives and its suitability for problems with very few examples.

I think that all of the above makes this warm-up round a unique competition. Of course, since we haven’t had anything similar in the past, we might have some surprises. It might happen that an unexpected (or even naïve) technique could behave much better than others (and humans) or perhaps we find that no technique is able to do something meaningful at this time.

I’m eager to see how this round develops and what the participants are able to integrate and invent in order to solve the sequence of micro and mini-tasks. I’m sure that we will learn a lot from this. I hope that machines will, too. And all of us will move forward to the next round!

José Hernández-Orallo is a professor at Technical University of Valencia and author of “The Measure of All Minds, Evaluating Natural and Artificial Intelligence”, Cambridge University Press, 2017.

Back to the core of intelligence … to really move to the future was originally published in AI Roadmap Institute Blog on Medium.