*All images credit: ROBBO

Thirty teachers arrived, excited to learn. They rolled up their sleeves and placed laptops and Robot kits on the floor. The room filled with excitement (and laughter!) as everyone tried out different ways of building their programs. The results were hilarious: a robot inspired by Darth Vader, a robot that asked everyone to turn the lights off when it was too bright in the room, and a robot that tricked the teacher into leaving the classroom during an exam.

Not bad for a day of “work!”

Training like this is what we’re all about at ROBBO. ROBBO is a fun and simple way for absolutely anyone to get introduced to the world of robotics and coding. As part of one of our many projects, we organized a training weekend with the single purpose of introducing teachers to programming and robotics. The teachers started with simple exercises in RobboScratch, a visual programming environment: moving the character, creating sequences of multiple commands, and learning the advantages of the infinite loop when making programs.

So, what do we mean by classroom robotics? Our educational robotics range consists of two different robots: the Robot kit and the Lab. Both are ideal for learning programming and robotics, as well as skills in problem-solving, mathematics and physics, while working in interactive teams. The Lab includes a microphone, LED lights, a light sensor and a slider, and is great for experimenting with different elements such as sound, light and numeric values. Our other robot is the Robot kit, equipped with a motor, which is a fun way to explore everyday technology using a touch sensor, a proximity sensor, a light sensor, line sensors and an LED light. Our robots are programmed in the visual programming environment RobboScratch, an adapted version of Scratch, which was developed at MIT.
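To make the idea of a loop that keeps checking a sensor more concrete, here is a minimal sketch of the "too bright" robot from the training day. It is written in Python rather than RobboScratch blocks, and it is only an illustration: the function name `react_to_light`, the threshold value and the 0 to 100 reading scale are assumptions, not part of ROBBO's software. A finite list of simulated readings stands in for the light sensor so the example terminates; on a real robot the same check would sit inside a loop that never ends.

```python
from typing import Iterable, List

# Hypothetical threshold on an assumed 0-100 scale; a real light sensor may differ.
BRIGHT_THRESHOLD = 70


def react_to_light(readings: Iterable[int], threshold: int = BRIGHT_THRESHOLD) -> List[str]:
    """Check each light reading in turn and collect the robot's reactions.

    On a real robot this would be an infinite loop that reads the sensor again
    and again. Here, simulated readings are passed in so the sketch can finish.
    """
    messages = []
    for level in readings:
        if level > threshold:
            # The room is brighter than the threshold: the robot complains.
            messages.append("Too bright! Please turn the lights off.")
        else:
            messages.append("Light level is fine.")
    return messages


if __name__ == "__main__":
    simulated_readings = [20, 85, 40]  # made-up values for illustration
    for message in react_to_light(simulated_readings):
        print(message)
```

The point of the loop is that the robot never decides only once: every new reading gets the same check, so it reacts as soon as the room changes.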

In the training described above, teachers were divided into separate workshops, working in pairs or teams of three. We believe it is important to communicate and discuss with others to better understand different programs and to come up with alternative solutions if a program doesn’t work as intended. The workshops are all based on the exercises from our pedagogical guide, and teachers were given a copy of the guide for their own use. The guide provides instructions and multiple exercise cards (with solutions!) and is free to download here (http://robbo.world/support/).

Our teaching guide is for anyone who wants to learn the basics of programming with the help of ROBBO™ robotics and RobboScratch. It is a comprehensive educational tool with instructions, exercise cards and ideas for creating the ultimate learning experience, and it has been developed together with the Innokas Network at the University of Helsinki and with Finnish teachers and students. Most of the teachers who participated in the training had only limited knowledge of Scratch or Scratch Junior, so we started from the beginning.

The pedagogical guide includes an introduction to RobboScratch, the Lab and the Robot kit, as well as up to 28 exercise cards to help you along the way. The exercises are designed to develop the necessary programming skills step by step, teaching children to think logically, as a software developer would, which may also be useful in many everyday situations. These skills are, in particular, the ability to understand the whole, to split a problem into smaller parts, and to develop a simple program to perform an operation. In the initial exercises, students make a program using a predefined model, but as the practice progresses, they have more and more room for their own ideas.

By developing new skills, users are encouraged to plan and develop innovations in robotics. The goal of the training is to learn to understand and use technology to invent something new. As the final assignment of the teachers’ training, we asked teachers to form teams of four and come up with a small prank using the different capabilities and sensors of the Robot kit, the Lab, or both robots at once.

If you’d like to learn more about ROBBO or download our free guide, visit our website: http://robbo.world/support/

Comments from teachers:

“The teaching guide is a great support when learning coding. And I can just hand out these ready-made exercise cards to my students as well!”

“The exercises in the guide are good for understanding the different possibilities you have with the robots, because when you start doing an exercise you come up with more ideas on how to develop a more complicated program.”

“The robots emphasized practicality in the learning process. In addition to programming, ROBBO teaches environmental studies and all-around useful skills, in particular when the exercises of the pedagogical guide are being utilized.”

Dr. Bradley Knox is the founder of bots_alive. He researched human-robot interaction, interactive machine learning, and artificial intelligence at the MIT Media Lab and at UT Austin. At MIT, he designed and taught Interactive Machine Learning. He has won two best paper awards at major robotics and AI conferences, was awarded best dissertation from UT Austin’s Computer Science Department, and was named to IEEE’s AI’s 10 to Watch in 2013.

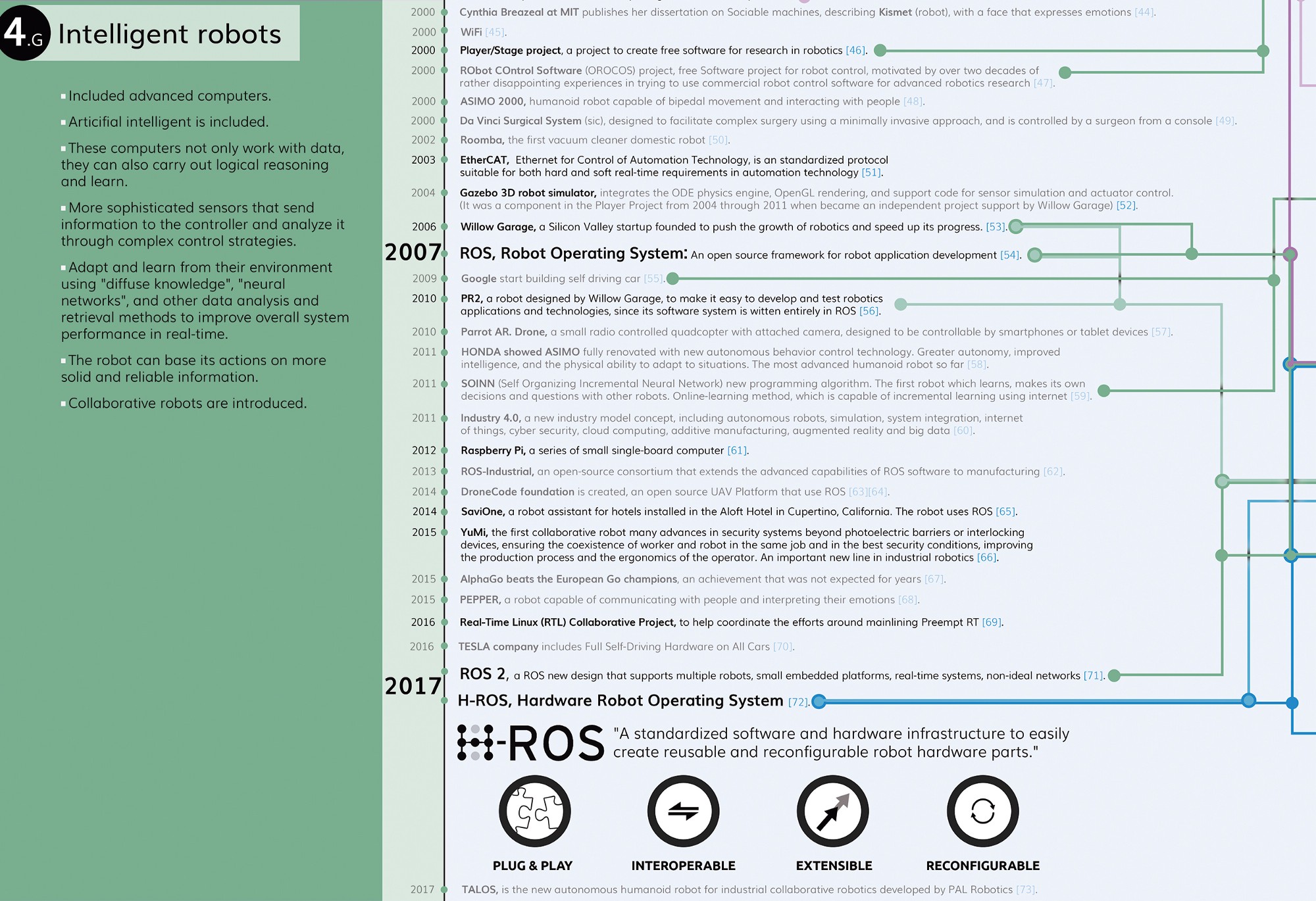

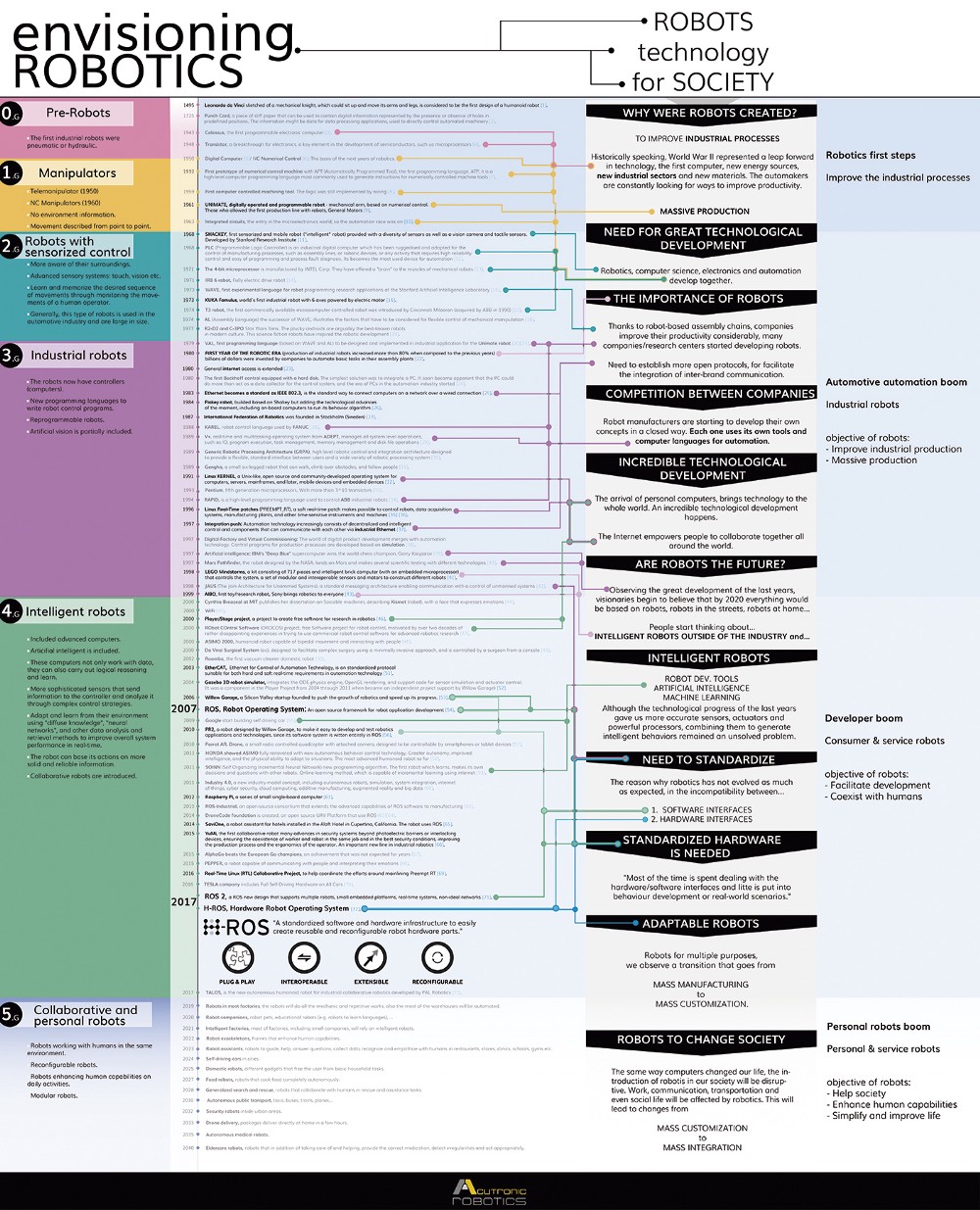

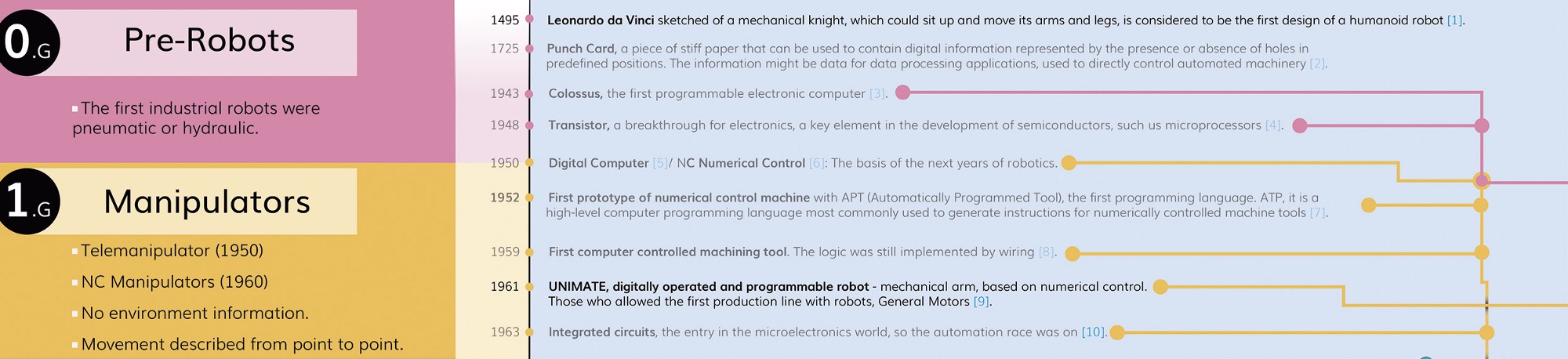

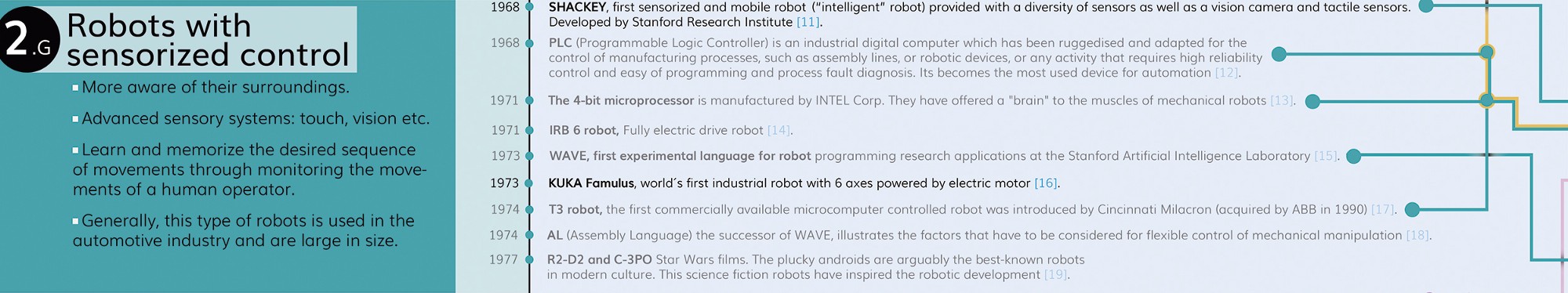

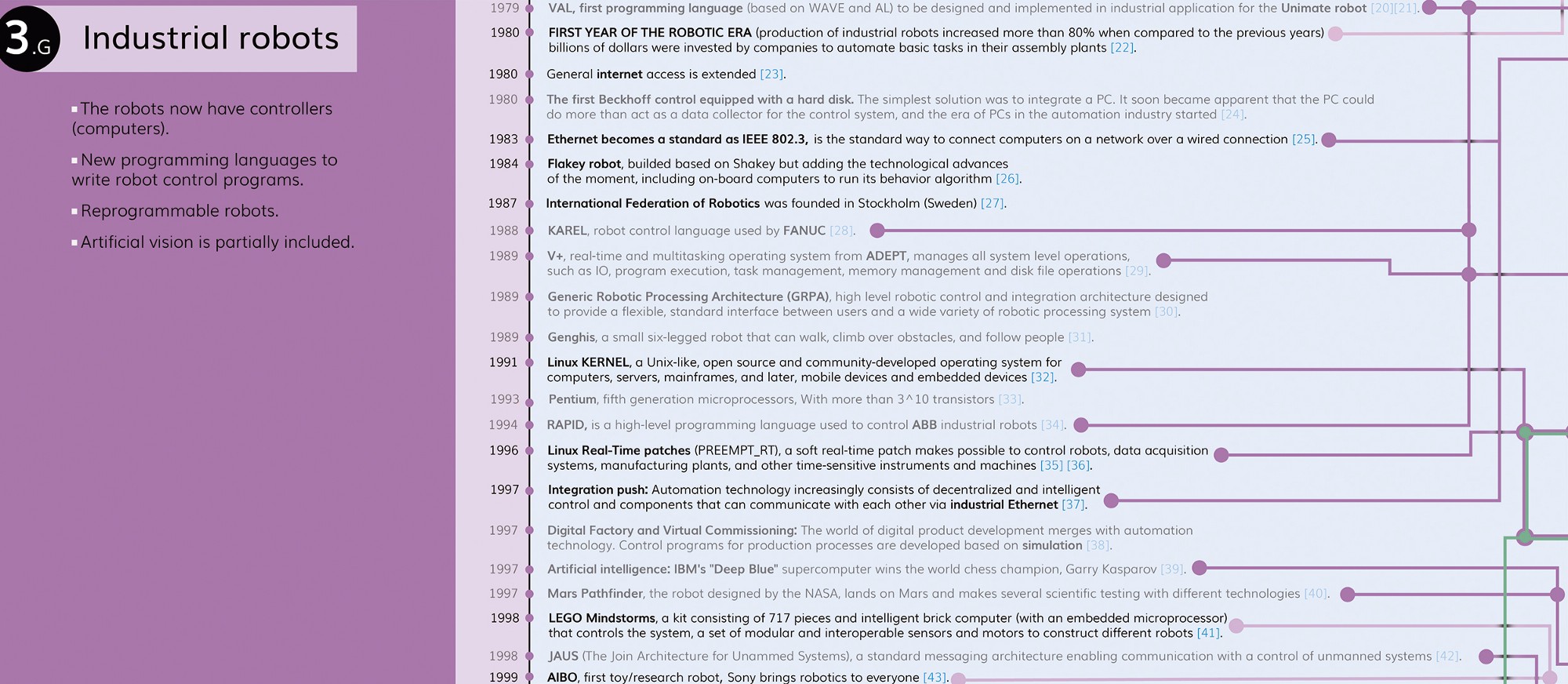

Integration effort was identified as one of the main issues within robotics, particularly in relation to industrial robots. A common infrastructure typically reduces the integration effort by providing an environment in which components can be connected and made to interoperate. Each of the infrastructure-supported components is optimized for such integration from its conception, and the infrastructure handles the integration effort. At that point, components can come from different manufacturers and, when supported by a common infrastructure, will still interoperate.
