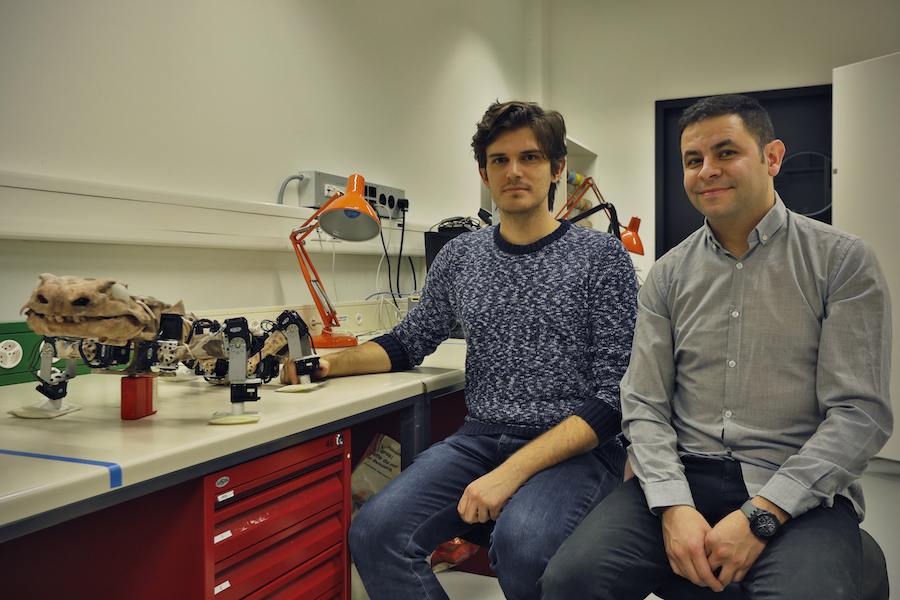

A robot recreates the walk of a fossilized animal

Using the fossil and fossilized footprints of a 300-million-year-old animal, scientists from EPFL and Humboldt-Universität zu Berlin have identified the most likely gaits of extinct animals and designed a robot that can recreate an extinct animal’s walk. This study can help researchers better understand how vertebrate locomotion evolved over time.

How did vertebrates walk 300 million years ago? Could they already stand upright on their legs? Did they move in a balanced, energy-efficient way? Scientists at EPFL’s Biorobotics Laboratory – supported by the National Center of Competence in Research (NCCR) Robotics – and the Interdisciplinary Laboratory Image Knowledge Gestaltung at Humboldt-Universität zu Berlin set out to answer these questions. Using the fossilized skeleton and footprints of Orobates pabsti – a vertebrate that, on the evolutionary tree, comes between amphibians on one hand and reptiles and mammals on the other – the scientists created computer simulations and a robot. Drawing on experimental studies of four living amphibian and reptile species, they used these tools to gauge how plausible different ways of walking were for the fossilized animal.

“Orobates is an ideal candidate for understanding how land vertebrates evolved because it is in the lineage leading to modern amniotes. These animals formed in eggs laid on land and became largely independent of water,” says John Nyakatura, a professor at Humboldt-Universität. What’s more, Orobates is the oldest-known vertebrate for which scientists have been able to link a fossil with its fossilized footprints. “This combination is what enabled us to carry out our unique quantitative study, which paves the way to replicating the walk of other fossilized animals,” says NCCR Robotics Professor Auke Ijspeert. The researchers’ findings appear in Nature.

A motion-based model and then a robotic one

To better understand how Orobates walked and pinpoint just how advanced its locomotion was, the scientists at Humboldt-Universität developed a digital model of its skeleton based on the animal’s fossil and the biomechanics of modern animals with sprawling postures. They used this model to carry out the first kinematic computer simulation of Orobates’ gait as it walks on its digitalized footprints. This simulation focuses on movements (rather than forces) and identifies gaits where the animal’s bones do not collide or come out of their joints.

In parallel, two scientists at EPFL’s Biorobotics Laboratory – post-doctoral researcher Kamilo Melo and PhD student Tomislav Horvat, both members of NCCR Robotics at the time of the study – used the fossilized animal anatomy to build a robot called OroBOT. Designed and scaled to match the shape and movements of the extinct animal, OroBOT was used to calculate the physics of how Orobates walked. “We tested our hypotheses about the animal’s locomotion dynamics with our robotic model, which factors in the real-world physics of the animal’s gait,” says Melo.

Testing hundreds of different gaits, based on contemporary animals

The interdisciplinary team of scientists tested hundreds of different gaits with their robot in order to determine which ones Orobates could have used – and which ones it clearly did not. The gaits they tested were based on biomechanical principles extracted from similar modern-day animals such as caimans, salamanders, iguanas and skinks, which they analyzed through X-ray videos and force measurements. “We studied the biomechanics of their movements and determined which mechanical principles they all followed,” says Nyakatura. The research team looked at three features in particular: how erect the animal stood on its legs; how its backbone bent; and how much its elbow or shoulder joints bent as it walked. These three features determine what the researchers call the animal’s “sprawling gait space”. They created a powerful interactive website where fellow scientists – and the wider public – can explore the universe of movements that Orobates could have used.

With these results, they came up with the most likely ways that Orobates may have walked. They scored the gaits based on how much energy was required, how stable the movements were, how the leg forces compared with those of other sprawling animals, and how closely the movements aligned with the fossilized footprints. The gaits with good scores appear quite athletic and most closely resemble the movements of caimans. This suggests that Orobates probably already held itself a little upright on its legs – unlike salamanders and skinks. Its locomotion was thus more advanced — more upright, balanced and mechanically power-saving — than had been previously thought.
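The search described above can be pictured as a grid over three gait parameters, with each candidate gait given a combined score from the four criteria. The sketch below is only an illustration of that idea: the parameter scales, the equal weights and the names `Gait`, `enumerate_gaits` and `combined_score` are all assumptions made here, and the article does not say how the researchers actually combined their four scores.

```python
from dataclasses import dataclass
from itertools import product

@dataclass(frozen=True)
class Gait:
    # Hypothetical 0..1 scales for the three features named in the article.
    body_height: float         # 0 = fully sprawled, 1 = fully erect
    spine_bending: float       # how much the backbone bends
    limb_joint_bending: float  # how much elbow/shoulder joints bend

CRITERIA = ("energy", "stability", "force_match", "footprint_match")

def enumerate_gaits(steps=3):
    """Sample the three-dimensional gait space on a regular grid."""
    levels = [i / (steps - 1) for i in range(steps)]
    return [Gait(h, s, j) for h, s, j in product(levels, repeat=3)]

def combined_score(metrics, weights=None):
    """Weighted mean of the four criteria, each assumed to be in [0, 1]
    with higher meaning better. Equal weights are an assumption."""
    weights = weights or {name: 1.0 for name in CRITERIA}
    total = sum(weights[name] for name in CRITERIA)
    return sum(weights[name] * metrics[name] for name in CRITERIA) / total

def rank_gaits(scored_gaits):
    """Sort (gait, score) pairs from best to worst."""
    return sorted(scored_gaits, key=lambda pair: pair[1], reverse=True)
```

In a real study the four metric values would come from the robot experiments and simulations; here they are simply inputs to `combined_score`.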

The study concludes that advanced locomotion, as in Orobates, may have evolved before the common ancestor of reptiles and mammals lived. The novel approach developed for this study can be applied by other scientists in their work, and it could be modified to study other evolutionary transitions, such as the origins of flight or galloping gaits in mammals. Last but not least, being able to select the most efficient gait for any given morphology is of fundamental importance for the walking robots that NCCR Robotics researchers are developing, in particular for search-and-rescue applications.

Orobates fossil

Literature

John A. Nyakatura, Kamilo Melo, Tomislav Horvat, Kostas Karakasiliotis, Vivian R. Allen, Amir Andikfar, Emanuel Andrada, Patrick Arnold, Jonas Lauströer, John R. Hutchinson, Martin S. Fischer and Auke J. Ijspeert. “Reverse-engineering the locomotion of a stem amniote”, Nature, 17 January 2019.

From robotic companions to third thumbs, machines can change the human brain

People’s interactions with machines, from robots that throw tantrums when they lose a colour-matching game against a human opponent to the bionic limbs that could give us extra abilities, are not just revealing more about how our brains are wired – they are also altering them.

Emily Cross is a professor of social robotics at the University of Glasgow in Scotland who is examining the nature of human-robot relationships and what they can tell us about human cognition.

She defines social robots as machines designed to engage with humans on a social level – from online chatbots to machines with a physical presence, for example, those that check people into hotel rooms.

According to Prof. Cross, as robots can be programmed to perform and replicate specific behaviours, they make excellent tools for shedding light on how our brains work, unlike humans, whose behaviour varies.

‘The central tenets to my questions are, can we use human-robot interaction to better understand the flexibility and fundamental mechanisms of social cognition and the human brain,’ she said.

Brain imaging shows that a sad, happy or neutral robotic expression will engage the same parts of the brain as a human face with similar expressions.

Through their project called Social Robots, Prof. Cross and her team are using neural decoding techniques to probe the extent to which human feelings towards a robot change depending on how it behaves.

Tantrums

When the robots used in the project lose a game, they alternate between throwing tantrums and appearing dejected. ‘So far, people actually find it really funny when the robot gets angry,’ she said. ‘But people do respond to them quite strongly and that’s really interesting to see.’

Having robots as colleagues has been shown to affect humans in complex ways. Researchers at the University of Washington found that when soldiers used robots in bomb disposal, they developed emotional attachments towards them and felt frustration, anger or sadness if their robot was destroyed.

Prof. Cross says that from an evolutionary perspective, this doesn’t make sense. ‘We care about people and perhaps animals that might help us or hurt us,’ she said. ‘But with machines it’s a bit more of a mystery and understanding how far we can push that (to develop social relationships with machines) is a really, really fascinating question.’

It’s important to understand these dynamics since, as she points out, robots are already working as companions in nursing homes or even as tutors in early childhood education. Home care and education are prime areas of social robotics research, with R&D efforts focusing on adults suffering from dementia and young children.

Ten-hour rule

Typically, studies on such groups observe interactions over a relatively short time-span. They rarely exceed what Prof. Cross describes as a ten-hour rule, beyond which study participants tend to get bored of their robotic toys. But her team is looking at how feelings towards robots evolve over time.

As part of the project, the researchers send a palm-sized Cozmo robot home with study participants and instruct them to interact with it every day for a week by playing games or introducing it to their friends and pets. The participants’ brains are imaged at the start and end of that period to track changes.

‘If we’re going to have robots in our home environment, if they’re going to be in our schools teaching our kids across weeks, if not years, if they’re going to be people’s social companions, we want to know a lot more than just what happens after ten hours (of exposure),’ she said.

‘We want to know how people’s social bonds and relationships to robots change across many, many more hours.’

With such technologies set to become a bigger part of our future, other studies are investigating how the brain reacts to a different kind of robot – wearable robotic limbs that augment the body, providing extra abilities.

Wearables could have social and healthcare benefits. For instance, a third arm could assist surgeons to carry out procedures more safely rather than relying on human assistants, enable people to complete their household chores much faster or help construction workers.

But even as the technology capabilities develop apace, Dr Tamar Makin, a neuroscientist at University College London, UK, is exploring what it would take for the brain to accept and operate a robotic appendage as part of the body, through a five-year project called Embodied Tech.

Additional thumb

In order to understand how the brain deals with an extra body part, Dr Makin’s team asks participants to wear an additional opposable thumb for a week. Created by a designer named Dani Clode, the thumb is controlled by pressure sensors worn on the big toes.

Product designer Dani Clode created a prosthetic opposable thumb for people to wear as an extra digit. Video credit: Dani Clode

With the additional thumb, the augmented hand almost has the capabilities of two hands, giving people extra capacity to carry out actions. The question is what effect that has on the brain.

The study is still underway but preliminary results indicate that the presence of an extra thumb alters the brain’s internal map of what the biological hand looks like. Scans show that the brain represents the fingers as collapsing onto each other, away from the thumb and index finger.

This mirrors what happens in diseases like dystonia, when the representation of fingers begins to merge – for instance, when musicians use their fingers excessively – and causes cramp-like pain. The same effect could theoretically cause pain in the wearer of an extra thumb.

‘One important interim message we have is that there are potential costs, not just benefits, to using augmentation technology,’ said Dr Makin.

She believes that the newness of human augmentation means there are lots of unanswered questions but it’s vital to explore the challenges of wearable robotics in order to fully realise the promises, such as multitasking or safer working conditions.

‘I feel like we have a responsibility to gain a much better understanding of how having good control of an additional body part is going to change the representation of the body parts you already have.’

The research in this article was funded by the European Research Council.

The news from CES is there wasn’t much news from CES

My feet are aching, as usual, after 3 days on the CES show floor, and the question people always ask others there is “what have you seen that was interesting?”

I won’t say I didn’t see anything interesting, and I had a large number of rewarding conversations with all sorts of companies, making the trip very worthwhile, but I will say I saw less that was new and exciting than ever before. This may be a result of the show’s constant growth, which meant that in 3 days I still did not manage to get to 3 1/2 major rooms of the show, having put my focus on cars as I usually do.

A larger fraction of CES is not even remotely consumer electronics. In fact, the reason I go is largely the robocar related companies, none of whom are selling to consumers at this time, and most of which never plan to. And yes, the amazing new TVs from Samsung, LG and others are always a draw — LG always creates an astonishing video wall, and all companies were now displaying 8K TVs as a real product (even if a very expensive one for which no video sources are available, but which will be fantastic for still photos.)

My favourite part of the show, Eureka Park, disappointed even though I gave it most of a day. The proportion of products which are poorly thought through is always high, but you search for gems that might someday change the world. I saw few Eureka moments.

Several fields, such as IoT/smart home, were growing and maturing, with lots more companies, but at the same time, very little in smart home moves beyond the “Oh, that sounds nice” into truly appealing and useful. I’ve had computer controlled lights for decades. They are handy, and I would rather have them than not have them, but they aren’t essentials the way my phone and a dozen apps within it are, things I can’t do without any more.

There were, again, a ton of different LIDAR companies. What’s interesting about the LIDAR crowd is that they are almost all quite different, in many cases taking entirely different approaches to the technology — the output, the steering, the sensing, the band and even the core technology. I’ll have more coverage of that later. Of course these are not for consumers.

Other press were equally unexcited — I saw far less coverage of the “hot new thing” in the media this year as the show opened and progressed. This was the year of mostly incremental improvements.

Flying car style vehicles did generate excitement, but they were barely there. The most buzz was about Bell Helicopter’s “Nexus” tilt-rotor hybrid vehicle on display. It’s a high-end vehicle, with 6 giant ducted fan tilt-rotors, and very much a prototype. Bell knows a lot about tilt-rotor from their work on the V-22 Osprey, a vehicle which has a rather checkered history, so it’s not clear how this will go. The few other companies doing flying vehicles (for people) had tiny booths showing nothing.

The Nexus is a hybrid-hybrid — both multirotor and fixed-wing flight, and both electric batteries and gasoline powered generator for power.

There was some interesting battery technology, including a small startup named GBatteries which claimed that by pulsing the charge voltage they could charge at vastly faster speeds — 5C, or 10 minutes from empty to 80%, and 5 minutes to 50%, without any more heat or battery damage than today’s fast charging (which takes about an hour.) Their demo was just on a drill, so it is yet to be proven in a car, but it would change some of the usability of battery devices and electric cars.
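As a rough check on those figures, a C-rate can be turned into charge time with simple arithmetic. The sketch below assumes an idealized constant-current charge at a fixed C-rate, which real chargers do not deliver (they taper the current as the cell fills), and the function name `minutes_to_charge` is made up for this illustration.

```python
def minutes_to_charge(fraction, c_rate):
    """Minutes to add `fraction` of capacity (0..1) at a constant C-rate.

    1C means one full capacity per hour, so the time in hours is
    fraction / c_rate. This ignores current tapering near full charge.
    """
    if c_rate <= 0:
        raise ValueError("c_rate must be positive")
    return fraction / c_rate * 60.0

# At a steady 5C, 80% would take about 9.6 minutes and 50% about 6 minutes.
to_80_percent = minutes_to_charge(0.8, 5.0)
to_50_percent = minutes_to_charge(0.5, 5.0)
```

Under this simplification the quoted "10 minutes to 80%" lines up well with 5C, while "5 minutes to 50%" would imply an average rate closer to 6C over the first half of the charge.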

I saw other interesting battery tech but there is some irony – because of all the investment made by Tesla and others in standard lithium ion cells, these cells are now better than other, more innovative technologies, because of their mass production (driving down the cost) and the heavy R&D (improving charge times and reducing battery degradation.) The lithium ion cell was created to be lightweight for laptops where weight is absolutely crucial, but now it’s everywhere, from cars to scooters to even grid storage.

There was an uptick of mobility products (or at least I am paying the most attention to them) beyond cars, including scooters, delivery, bike riding and more.

Identifying artificial intelligence “blind spots”

By Rob Matheson

A novel model developed by MIT and Microsoft researchers identifies instances in which autonomous systems have “learned” from training examples that don’t match what’s actually happening in the real world. Engineers could use this model to improve the safety of artificial intelligence systems, such as driverless vehicles and autonomous robots.

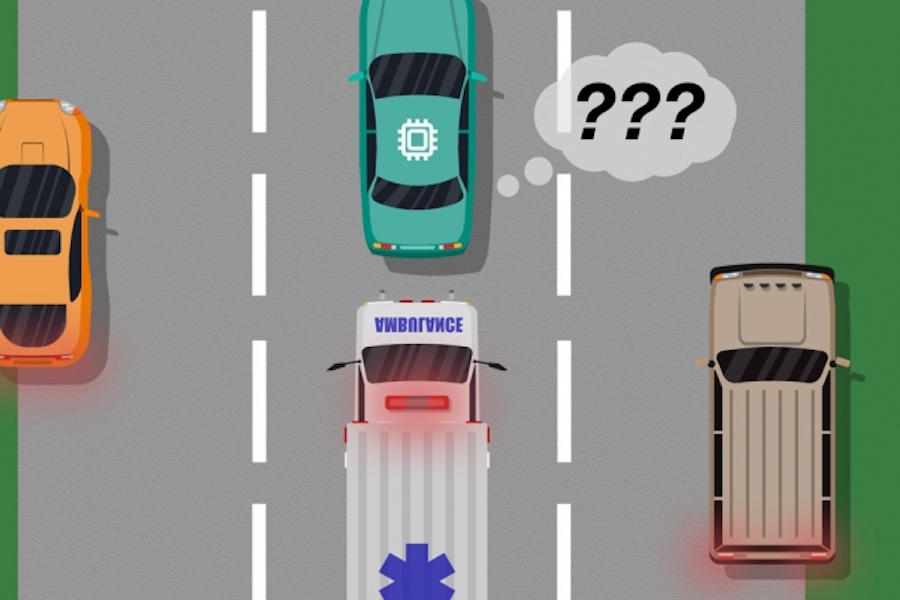

The AI systems powering driverless cars, for example, are trained extensively in virtual simulations to prepare the vehicle for nearly every event on the road. But sometimes the car makes an unexpected error in the real world because an event occurs that should, but doesn’t, alter the car’s behavior.

Consider a driverless car that wasn’t trained, and more importantly doesn’t have the sensors necessary, to differentiate between distinctly different scenarios, such as large, white cars and ambulances with red, flashing lights on the road. If the car is cruising down the highway and an ambulance flicks on its sirens, the car may not know to slow down and pull over, because it does not perceive the ambulance as different from a big white car.

In a pair of papers — presented at last year’s Autonomous Agents and Multiagent Systems conference and the upcoming Association for the Advancement of Artificial Intelligence conference — the researchers describe a model that uses human input to uncover these training “blind spots.”

As with traditional approaches, the researchers put an AI system through simulation training. But then, a human closely monitors the system’s actions as it acts in the real world, providing feedback when the system makes, or is about to make, any mistakes. The researchers then combine the training data with the human feedback data, and use machine-learning techniques to produce a model that pinpoints situations where the system most likely needs more information about how to act correctly.

The researchers validated their method using video games, with a simulated human correcting the learned path of an on-screen character. But the next step is to incorporate the model with traditional training and testing approaches for autonomous cars and robots with human feedback.

“The model helps autonomous systems better know what they don’t know,” says first author Ramya Ramakrishnan, a graduate student in the Computer Science and Artificial Intelligence Laboratory. “Many times, when these systems are deployed, their trained simulations don’t match the real-world setting [and] they could make mistakes, such as getting into accidents. The idea is to use humans to bridge that gap between simulation and the real world, in a safe way, so we can reduce some of those errors.”

Co-authors on both papers are: Julie Shah, an associate professor in the Department of Aeronautics and Astronautics and head of the CSAIL’s Interactive Robotics Group; and Ece Kamar, Debadeepta Dey, and Eric Horvitz, all from Microsoft Research. Besmira Nushi is an additional co-author on the upcoming paper.

Taking feedback

Some traditional training methods do provide human feedback during real-world test runs, but only to update the system’s actions. These approaches don’t identify blind spots, which could be useful for safer execution in the real world.

The researchers’ approach first puts an AI system through simulation training, where it will produce a “policy” that essentially maps every situation to the best action it can take in the simulations. Then, the system will be deployed in the real world, where humans provide error signals in regions where the system’s actions are unacceptable.

Humans can provide data in multiple ways, such as through “demonstrations” and “corrections.” In demonstrations, the human acts in the real world, while the system observes and compares the human’s actions to what it would have done in that situation. For driverless cars, for instance, a human would manually control the car while the system produces a signal if its planned behavior deviates from the human’s behavior. Matches and mismatches with the human’s actions provide noisy indications of where the system might be acting acceptably or unacceptably.
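The demonstration mode can be sketched in a few lines: the system looks up what its policy would have done in each situation and compares that with what the human actually did. This is a minimal illustration rather than the researchers' code; the dictionary-based policy, the state and action names, and the function `demonstration_labels` are all assumptions.

```python
def demonstration_labels(policy, demonstration):
    """Compare the policy's planned action with the human's action.

    policy: dict mapping a perceived situation to the planned action.
    demonstration: list of (perceived_situation, human_action) pairs.
    Returns a list of (perceived_situation, label) pairs, where the label
    is a noisy "acceptable" / "unacceptable" signal.
    """
    labels = []
    for situation, human_action in demonstration:
        planned = policy[situation]
        label = "acceptable" if planned == human_action else "unacceptable"
        labels.append((situation, label))
    return labels

# Hypothetical example: the system cannot tell an ambulance from a large
# white car, so both appear as the same perceived situation.
policy = {"large_white_vehicle_nearby": "keep_speed"}
demonstration = [
    ("large_white_vehicle_nearby", "keep_speed"),  # really a large car
    ("large_white_vehicle_nearby", "pull_over"),   # really an ambulance
]
labels = demonstration_labels(policy, demonstration)
```

The same perceived situation ends up with one "acceptable" and one "unacceptable" label, which is the kind of mixed signal the rest of the method has to deal with.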

Alternatively, the human can provide corrections, with the human monitoring the system as it acts in the real world. A human could sit in the driver’s seat while the autonomous car drives itself along its planned route. If the car’s actions are correct, the human does nothing. If the car’s actions are incorrect, however, the human may take the wheel, which sends a signal that the system was acting unacceptably in that specific situation.

Once the feedback data from the human is compiled, the system essentially has a list of situations and, for each situation, multiple labels saying its actions were acceptable or unacceptable. A single situation can receive many different signals, because the system perceives many situations as identical. For example, an autonomous car may have cruised alongside a large car many times without slowing down and pulling over. But, in only one instance, an ambulance, which appears exactly the same to the system, cruises by. The autonomous car doesn’t pull over and receives a feedback signal that the system took an unacceptable action.
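One way to picture the compiled feedback is as a table of label counts keyed by what the system perceives, not by what is really there. The sketch below assumes a hypothetical `perceive` function that drops the features the system cannot sense; the feature names and the helper `group_feedback` are illustrative only.

```python
from collections import defaultdict

def perceive(real_situation):
    """Hypothetical perception step: the system only sees size and colour,
    so flashing lights (and anything else) are lost."""
    return (real_situation["size"], real_situation["colour"])

def group_feedback(feedback):
    """Count acceptable/unacceptable labels per perceived situation.

    feedback: list of (real_situation_dict, label) pairs.
    Returns a dict: perceived situation -> {"acceptable": n, "unacceptable": m}.
    """
    grouped = defaultdict(lambda: {"acceptable": 0, "unacceptable": 0})
    for real_situation, label in feedback:
        grouped[perceive(real_situation)][label] += 1
    return dict(grouped)

# Made-up feedback: nine passes alongside a large white car, one ambulance.
large_car = {"size": "large", "colour": "white", "flashing_lights": False}
ambulance = {"size": "large", "colour": "white", "flashing_lights": True}
feedback = [(large_car, "acceptable")] * 9 + [(ambulance, "unacceptable")]
grouped = group_feedback(feedback)
```

Because both vehicles collapse to the same perceived situation, the single entry in `grouped` carries nine "acceptable" labels and one "unacceptable" label.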

“At that point, the system has been given multiple contradictory signals from a human: some with a large car beside it, and it was doing fine, and one where there was an ambulance in the same exact location, but that wasn’t fine. The system makes a little note that it did something wrong, but it doesn’t know why,” Ramakrishnan says. “Because the agent is getting all these contradictory signals, the next step is compiling the information to ask, ‘How likely am I to make a mistake in this situation where I received these mixed signals?’”

Intelligent aggregation

The end goal is to have these ambiguous situations labeled as blind spots. But that goes beyond simply tallying the acceptable and unacceptable actions for each situation. If the system performed correct actions nine times out of 10 in the ambulance situation, for instance, a simple majority vote would label that situation as safe.

“But because unacceptable actions are far rarer than acceptable actions, the system will eventually learn to predict all situations as safe, which can be extremely dangerous,” Ramakrishnan says.
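The weakness of a simple tally is easy to see in code. The function below is a deliberately naive baseline written for illustration (the name `majority_vote` and the tie-breaking rule are assumptions), not part of the researchers' method.

```python
def majority_vote(acceptable, unacceptable):
    """Label a situation by whichever kind of feedback is more common.
    Ties are counted as safe, which is an arbitrary choice for this sketch."""
    return "safe" if acceptable >= unacceptable else "blind spot"

# Nine acceptable passes and one missed ambulance still come out as "safe".
ambulance_case = majority_vote(acceptable=9, unacceptable=1)
```

A single dangerous miss is outvoted by the routine cases that look identical to the system, which is why the tally alone is not enough.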

To that end, the researchers used the Dawid-Skene algorithm, a machine-learning method used commonly for crowdsourcing to handle label noise. The algorithm takes as input a list of situations, each having a set of noisy “acceptable” and “unacceptable” labels. Then it aggregates all the data and uses some probability calculations to identify patterns in the labels of predicted blind spots and patterns for predicted safe situations. Using that information, it outputs a single aggregated “safe” or “blind spot” label for each situation along with its confidence level in that label. Notably, the algorithm can learn that a situation in which the system performed acceptably, for instance, 90 percent of the time is still ambiguous enough to merit a “blind spot” label.
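To give a feel for this kind of aggregation, here is a heavily simplified sketch in the spirit of Dawid-Skene: a two-class expectation-maximization loop with a single source of labels. It is not the researchers' implementation and omits most of the full algorithm (multiple labelers, per-labeler confusion matrices). The function names and the label counts are made up for illustration.

```python
import math

def _clip(x, eps=1e-6):
    """Keep probabilities away from 0 and 1 so the logarithms stay finite."""
    return min(max(x, eps), 1.0 - eps)

def estimate_blind_spots(counts, iterations=50):
    """counts: dict situation -> (n_acceptable, n_unacceptable).
    Returns dict situation -> estimated probability of being a blind spot."""
    names = list(counts)
    # Start from the smoothed share of "unacceptable" labels per situation.
    post = {s: (counts[s][1] + 0.5) / (sum(counts[s]) + 1.0) for s in names}
    for _ in range(iterations):
        # M-step: class prior and per-class rate of "unacceptable" labels.
        prior = _clip(sum(post.values()) / len(names))
        blind_n = max(sum(post[s] * sum(counts[s]) for s in names), 1e-12)
        safe_n = max(sum((1 - post[s]) * sum(counts[s]) for s in names), 1e-12)
        theta_blind = _clip(sum(post[s] * counts[s][1] for s in names) / blind_n)
        theta_safe = _clip(sum((1 - post[s]) * counts[s][1] for s in names) / safe_n)
        # E-step: posterior probability that each situation is a blind spot.
        for s in names:
            acceptable, unacceptable = counts[s]
            log_odds = (math.log(prior / (1 - prior))
                        + unacceptable * math.log(theta_blind / theta_safe)
                        + acceptable * math.log((1 - theta_blind) / (1 - theta_safe)))
            log_odds = max(-50.0, min(50.0, log_odds))  # avoid overflow in exp
            post[s] = 1.0 / (1.0 + math.exp(-log_odds))
    return post

# Made-up counts of (acceptable, unacceptable) labels per perceived situation.
counts = {
    "open_highway": (50, 0),
    "city_street": (40, 0),
    "parking_lot": (30, 0),
    "large_white_vehicle_nearby": (9, 1),
    "unmarked_junction": (7, 3),
}
blind_prob = estimate_blind_spots(counts)
```

For these made-up counts, the situation that was handled acceptably nine times out of ten still ends up with a blind-spot probability above 0.5, because any unacceptable label is very unlikely under the pattern learned for the safe situations.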

In the end, the algorithm produces a type of “heat map,” where each situation from the system’s original training is assigned low-to-high probability of being a blind spot for the system.

“When the system is deployed into the real world, it can use this learned model to act more cautiously and intelligently. If the learned model predicts a state to be a blind spot with high probability, the system can query a human for the acceptable action, allowing for safer execution,” Ramakrishnan says.
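In code, that cautious behaviour amounts to a lookup and a threshold. The sketch below is an assumption-laden illustration: the 0.5 threshold, the `ask_human` callback and the function name `choose_action` are not from the papers.

```python
def choose_action(situation, policy, blind_spot_prob, ask_human, threshold=0.5):
    """Follow the learned policy unless the situation is a likely blind spot,
    in which case defer to a human for the acceptable action."""
    if blind_spot_prob.get(situation, 0.0) >= threshold:
        return ask_human(situation)
    return policy[situation]

# Hypothetical policy, blind-spot estimates and human response.
policy = {"open_highway": "keep_speed", "large_white_vehicle_nearby": "keep_speed"}
blind_spot_prob = {"open_highway": 0.01, "large_white_vehicle_nearby": 0.9}
ask_human = lambda situation: "pull_over"

routine = choose_action("open_highway", policy, blind_spot_prob, ask_human)
risky = choose_action("large_white_vehicle_nearby", policy, blind_spot_prob, ask_human)
```

The threshold sets the trade-off between interrupting the human too often and acting alone in situations the system may be misreading.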

Technology and robots will shake labour policies in Asia and the world

By Asit K. Biswas, University of Glasgow and Kris Hartley, The Education University of Hong Kong

In the 21st century, governments cannot ignore how changes in technology will affect employment and political stability.

The automation of work – principally through robotics, artificial intelligence (AI) and the Internet of things (IoT), collectively known as the Fourth Industrial Revolution – will provide an unprecedented boost to productivity and profit. It will also threaten the stability of low- and mid-skilled jobs in many developing and middle-income countries.

From labour to automation

Developing countries must begin seriously considering how technological changes will impact labour trends. Technology now looms as large a disruptive force as, if not larger than, the whims of global capital.

China has for decades increased its global contribution to manufacturing value-added goods, now enjoying a competitive position in Apple products, household appliances, and technology. In the process, the country has made historic progress lifting its citizens out of poverty.

China has accomplished this by raising worker productivity through technology and up-skilling (improving or acquiring new skills), and higher wages have predictably followed.

However, this trend is also compelling manufacturers to relocate some low-skill production to Southeast Asia. US-China trade disputes could exacerbate this trend.

Relocation of manufacturing activity has been an economic boon for workers in countries like Vietnam and Indonesia. However, the race among global manufacturers to procure the cheapest labour brings no assurances of long-term growth and prosperity to any one country.

Governments in developing countries must parlay the proceeds of ephemeral labour cost advantages into infrastructure investment, industrial upgrading and worker upskilling. China has done this to better effect than many.

The growth in sophistication and commercial feasibility of robotics, IoT, and other automation technologies will impact jobs at nearly every skill level. More broadly, the fallout from technological advancement may replicate the disruptive geographic shifts in production once resulting from labour cost arbitrage.

Political blowback

After many decades of globalisation, a borderless economy has emerged in which capital and production move freely to locations with the greatest investment returns and lowest cost structures. This has prompted a pattern of global economic restructuring, generating unprecedented growth opportunities for developing countries.

Workers have been rewarded for their personal efforts in education and skill development, while millions have been lifted from poverty.

Given advancements in technology and the associated impact on livelihoods, it is time to consider how the next chapter of global development will play out politically. Automation will be a highly disruptive force by most economic, social, and political measures. Few countries – developed or otherwise – will escape this challenge.

Some Western countries, including the United States, are already experiencing a populist political wave fuelled in part by the economic grievances of workers displaced from once stable, middle-class manufacturing jobs. Similar push-back may erupt in countries already embroiled in nationalist politics, including India.

Growing populations and the automation of work will soon mix to create unemployment crises, with serious implications for domestic political stability.

As education systems flood the employment market with scores of ambitious graduates, one of the greatest challenges governments face is how to generate well-paying jobs.

Further, vulnerable workers will include not only new entrants but also experienced workers, some of whom are continuously and aggressively up-skilling in anticipation of more lucrative employment.

In India, over 1 million people enter the working-age population every month. More than 8 million new jobs are needed each year to maintain current employment levels.

India’s young population is becoming increasingly pessimistic about their employment prospects. Although official statistics are unreliable, as a large percentage of work occurs in the informal sector in positions such as domestic workers, coolies, street vendors, and transient positions lacking contracts, indications are that India may be facing the prospect of jobless growth.

Insufficient skill levels in much of the workforce are impeding India’s effort to accelerate growth in high-productivity jobs. Thus, the country’s large-scale manufacturers, both domestically and internationally owned, are turning to robots to ensure consistent, reliable, and efficient production.

Urbanisation also adds to India’s employment challenge. The promise of higher-paying jobs has lured many rural workers into urban areas, but these workers are often illiterate and lack sufficient skills. This was not always a concern, as these workers could find menial factory jobs. Robots are now doing much of the low-skilled work that migrant workers were once hired to do.

Towards a future of stable livelihoods

The lingering socio-economic imperative for many governments is to replace eliminated jobs. According to The World Economic Forum, “inequality represents the greatest societal concern associated with the Fourth Industrial Revolution.”

However, the WEF and others have given little useful guidance on how to address this challenge. How should the economy absorb multitudes of variously skilled workers displaced by technology?

People aspire to economic and social mobility more than ever before, particularly as they observe wealth rising ostentatiously all around them – on the streets, in the news, and among seemingly lucky friends and acquaintances. Sadly, the aspirations of most will go unfulfilled.

One way forward is said to be through up-skilling by retraining workers to operate and maintain technology systems. However, this seems to be a paradox, as workers would be training robots to eventually take jobs held by humans. If a major driver of automation is reduction or elimination of labour costs, one cannot expect all displaced workers to enjoy stable and continuing employment opportunities.

Despite political promises about employment growth from high-tech industries and the technological transformation of primary sectors, the tension between the drive for technology-based efficiency and the loss of jobs is undeniable and may have no clear resolution.

Societies have reacted to global economic restructuring in discouraging ways, indulging in nationalism, racism, militarism, and arbitrary economic protectionism. Populist opportunists and foul-tempered troglodytes have ridden reactionary rhetoric into positions of political power, raging against what former White House chief strategist Steve Bannon calls the “liberal postwar international order.” At the same time, left-leaning solutions such as universal basic income face significant fiscal and political headwinds.

The 21st century will see increased disruptions to once-stable work life, due to technological progress and the continuing liberalisation of global capital and production. Early indications about how countries will respond – haphazardly and with no clear long-term strategy – are not encouraging.

Asit K. Biswas, Visiting professor, University of Glasgow and Kris Hartley, Assistant professor, The Education University of Hong Kong

This article is republished from The Conversation under a Creative Commons license. Read the original article.