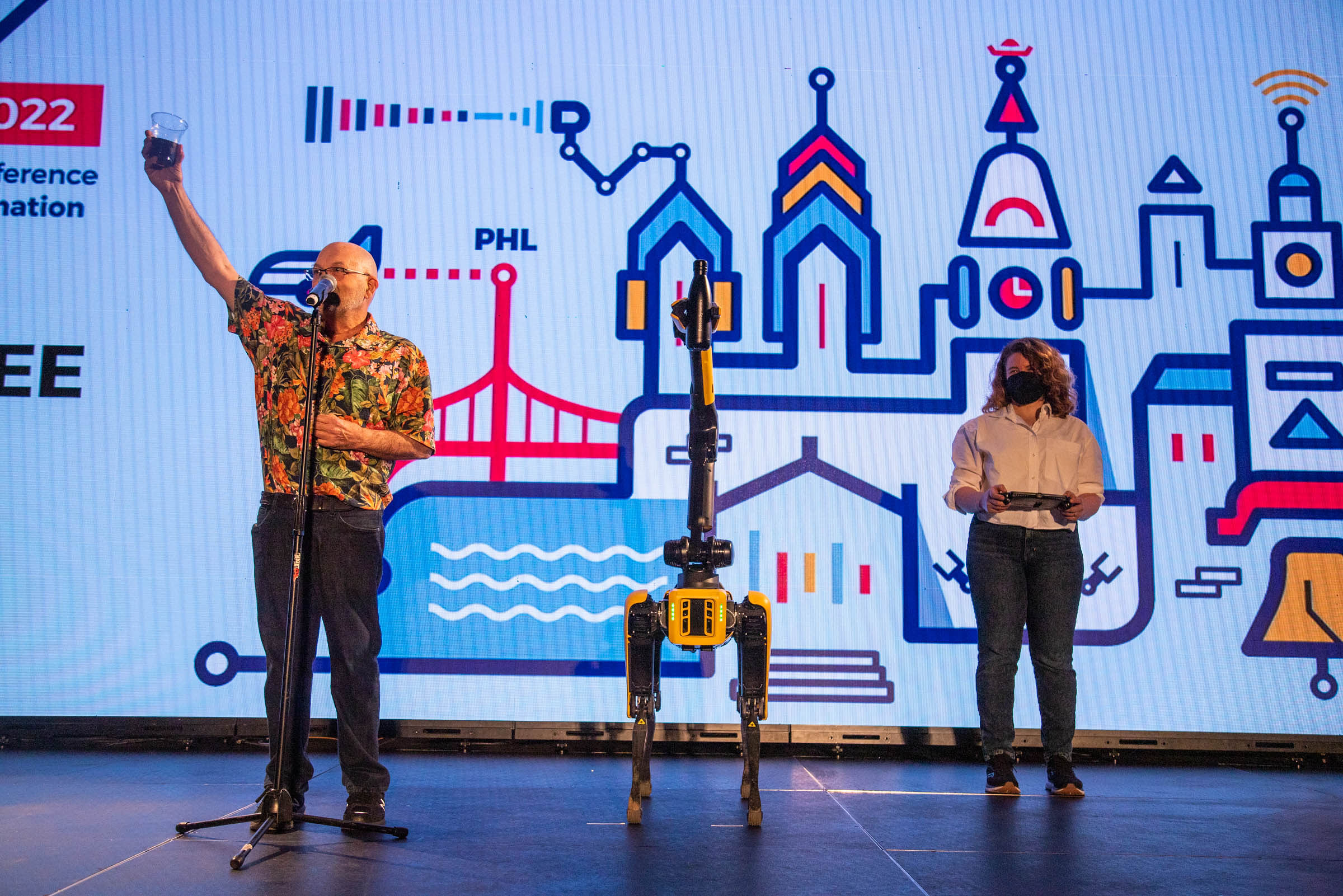

Credits: Wise Owl Multimedia

In this post we bring you all the paper award finalists and winners announced during the 2022 edition of the IEEE International Conference on Robotics and Automation (ICRA).

ICRA 2022 Outstanding Paper

- ‘Translating Images into Maps‘, by Saha, Avishkar; Mendez Maldonado, Oscar Alejandro; Russell, Chris; Bowden, Richard. (WINNER)

- ‘CT-ICP: Real-time Elastic LiDAR Odometry with Loop Closure‘, by Dellenbach, Pierre; Deschaud, Jean-Emmanuel; Jacquet, Bastien; Goulette, François.

- ‘D2A-BSP: Distilled Data Association Belief Space Planning with Performance Guarantees Under Budget Constraints‘, by Shienman, Moshe; Indelman, Vadim.

ICRA 2022 Outstanding Student Paper

- ‘Offline Learning of Counterfactual Perception as Prediction for Real-World Robotic Reinforcement Learning‘, by Jin, Jun; Graves, Daniel; Haigh, Cameron; Luo, Jun; Jagersand, Martin.

- ‘Dynamic Underactuated Manipulator Using a Flexible Body with a Structural Anisotropy‘, by Maruo, Akihiro; Shibata, Akihide; Higashimori, Mitsuru.

- ‘Interactive Robotic Grasping with Attribute-Guided Disambiguation‘, by Yang, Yang; Lou, Xibai; Choi, Changhyun. (WINNER)

ICRA 2022 Outstanding Automation Paper

- ‘SPIN Road Mapper: Extracting Roads from Aerial Images via Spatial and Interaction Space Graph Reasoning for Autonomous Driving‘, by Bandara, Wele Gedara Chaminda; Valanarasu, Jeya Maria Jose; Patel, Vishal.

- ‘ARChemist: Autonomous Robotic Chemistry System Architecture‘, by Fakhruldeen, Hatem; Pizzuto, Gabriella; Glowacki, Jakub; Cooper, Andrew Ian.

- ‘Precise 3D Reconstruction of Plants from UAV Imagery Combining Bundle Adjustment and Template Matching‘, by Marks, Elias Ariel; Magistri, Federico; Stachniss, Cyrill. (WINNER)

ICRA 2022 Outstanding Coordination Paper

- ‘Learning Scalable Policies over Graphs for Multi-Robot Task Allocation using Capsule Attention Networks‘, by Paul, Steve; Ghassemi, Payam; Chowdhury, Souma.

- ‘Decentralized Model Predictive Control for Equilibrium-based Collaborative UAV Bar Transportation‘, by Castro Sundin, Roberto; Roque, Pedro; Dimarogonas, Dimos V. (WINNER)

- ‘A Deep Reinforcement Learning Environment for Particle Robot Navigation and Object Manipulation‘, by Shen, Jeremy; Xiao, Erdong; Liu, Yuchen; Feng, Chen.

ICRA 2022 Outstanding Deployed Systems Paper

- ‘Autonomous Teamed Exploration of Subterranean Environments using Legged and Aerial Robots‘, by Kulkarni, Mihir; Dharmadhikari, Mihir Rahul; Tranzatto, Marco; Zimmermann, Samuel; Reijgwart, Victor; De Petris, Paolo; Nguyen, Huan; Khedekar, Nikhil Vijay; Papachristos, Christos; Ott, Lionel; Siegwart, Roland; Hutter, Marco; Alexis, Kostas. (WINNER)

- ‘Learning Model Predictive Control for Quadrotors‘, by Li, Guanrui; Tunchez, Alex; Loianno, Giuseppe.

- ‘Optimizing Terrain Mapping and Landing Site Detection for Autonomous UAVs‘, by Proença, Pedro F.; Delaune, Jeff; Brockers, Roland.

ICRA 2022 Outstanding Dynamics and Control Paper

- ‘Cooperative Modular Single Actuator Monocopters Capable of Controlled Passive Separation‘, by Cai, Xinyu; Win, Shane Kyi Hla; Win, Luke Soe Thura; Sufiyan, Danial; Foong, Shaohui. (WINNER)

- ‘Real-time Optimal Landing Control of the MIT Mini Cheetah‘, by Jeon, Se Hwan; Kim, Sangbae; Kim, Donghyun.

- ‘Real-Time Multi-Contact Model Predictive Control via ADMM‘, by Aydinoglu, Alp; Posa, Michael.

ICRA 2022 Outstanding Interaction Paper

- ‘Effects of Interfaces on Human-Robot Trust: Specifying and Visualizing Physical Zones‘, by Hudspeth, Marisa; Balali, Sogol; Grimm, Cindy; Sowell, Ross T.

- ‘Synergistic Scheduling of Learning and Allocation of Tasks in Human-Robot Team‘, by Vats, Shivam; Kroemer, Oliver; Likhachev, Maxim.

- ‘Human-Robot Shared Control for Surgical Robot Based on Context-Aware Sim-to-Real Adaptation‘, by Zhang, Dandan; Wu, Zicong; Chen, Junhong; Zhu, Ruiqi; Munawar, Adnan; Xiao, Bo; Guan, Yuan; Su, Hang; Guo, Yao; Fischer, Gregory Scott; Lo, Benny Ping Lai; Yang, Guang-Zhong. (WINNER)

ICRA 2022 Outstanding Learning Paper

- ‘Symphony: Learning Realistic and Diverse Agents for Autonomous Driving Simulation‘, by Igl, Maximilian; Kim, Daewoo; Kuefler, Alex; Mougin, Paul; Shah, Punit; Shiarlis, Kyriacos; Anguelov, Dragomir; Palatucci, Mark; White, Brandyn; Whiteson, Shimon.

- ‘TartanDrive: A Large-Scale Dataset for Learning Off-Road Dynamics Models‘, by Triest, Samuel; Sivaprakasam, Matthew; Wang, Sean J.; Wang, Wenshan; Johnson, Aaron; Scherer, Sebastian.

- ‘Augmenting Reinforcement Learning with Behavior Primitives for Diverse Manipulation Tasks‘, by Nasiriany, Soroush; Liu, Huihan; Zhu, Yuke. (WINNER)

ICRA 2022 Outstanding Locomotion Paper

- ‘Scalable Minimally Actuated Leg Extension Bipedal Walker Based on 3D Passive Dynamics‘, by Islam, Sharfin; Carter, Kamal; Yim, Justin K.; Kyle, James; Bergbreiter, Sarah; Johnson, Aaron.

- ‘Omni-Roach: A legged robot capable of traversing multiple types of large obstacles and self-righting‘, by Mi, Jonathan; Wang, Yaqing; Li, Chen.

- ‘Trajectory Optimization Formulation with Smooth Analytical Derivatives for Track-leg and Wheel-leg Ground Robots‘, by Mane, Adwait; Swart, Dylan; White, Jason; Hubicki, Christian. (WINNER)

ICRA 2022 Outstanding Manipulation Paper

- ‘Online Object Model Reconstruction and Reuse for Lifelong Improvement of Robot Manipulation‘, by Lu, Shiyang; Wang, Rui; Miao, Yinglong; Mitash, Chaitanya; Bekris, Kostas E.

- ‘Multi-view object pose distribution tracking for pre-grasp planning on mobile robots‘, by Naik, Lakshadeep; Iversen, Thorbjørn Mosekjær; Kramberger, Aljaz; Wilm, Jakob; Krüger, Norbert.

- ‘Manipulation of unknown objects via contact configuration regulation‘, by Doshi, Neel; Taylor, Orion; Rodriguez, Alberto. (WINNER)

ICRA 2022 Outstanding Mechanisms and Design Paper

- ‘TaTa: A Universal Jamming Gripper with High-Quality Tactile Perception and Its Application to Underwater Manipulation‘, by Li, Shoujie; Yin, Xianghui; Xia, Chongkun; Ye, Linqi; Wang, Xueqian; Liang, Bin.

- ‘Design of a Biomimetic Tactile Sensor for Material Classification‘, by Dai, Kevin; Wang, Xinyu; Rojas, Allison M.; Harber, Evan; Tian, Yu; Paiva, Nicholas; Gnehm, Joseph; Schindewolf, Evan; Choset, Howie; Webster-Wood, Victoria; Li, Lu. (WINNER)

- ‘A Wearable Fingertip Cutaneous Haptic Device with Continuous Omnidirectional Motion Feedback‘, by Zhang, Peizhi; Kamezaki, Mitsuhiro; Hattori, Yutaro; Sugano, Shigeki.

ICRA 2022 Outstanding Navigation Paper

- ‘Event-Triggered Tracking Control Scheme for Quadrotors with External Disturbances: Theory and Validations‘, by Gao, Pengcheng; Wang, Gang; Li, Qingdu; Zhang, Jianwei; Shen, Yantao.

- ‘EDPLVO: Efficient Direct Point-Line Visual Odometry‘, by Zhou, Lipu; Huang, Guoquan (Paul); Mao, Yinian; Wang, Shengze; Kaess, Michael. (WINNER)

- ‘Confidence-based Robot Navigation under Sensor Occlusion with Deep Reinforcement Learning‘, by Ryu, Hyeongyeol; Yoon, Minsung; Park, Daehyung; Yoon, Sung-eui.

ICRA 2022 Outstanding Planning Paper

- ‘Optimizing Trajectories with Closed-Loop Dynamic SQP‘, by Singh, Sumeet; Slotine, Jean-Jacques E.; Sindhwani, Vikas.

- ‘Trajectory Distribution Control for Model Predictive Path Integral Control using Covariance Steering‘, by Yin, Ji; Zhang, Zhiyuan; Theodorou, Evangelos; Tsiotras, Panagiotis.

- ‘Non-Gaussian Risk Bounded Trajectory Optimization for Stochastic Nonlinear Systems in Uncertain Environments‘, by Han, Weiqiao; Jasour, Ashkan M.; Williams, Brian. (WINNER)