I’ve written a few times that perhaps the biggest unsolved problem in robocars is how to know we have made them safe enough. While most people think of that in terms of government certification, the truth is that the teams building the cars are very focused on this, and know more about it than any regulator, but they still don’t know enough. The challenge is going to be convincing your board of directors that the car is safe enough to release, for if it is not, it could ruin the company that releases it, at least if it’s a big company with a reputation.

We don’t even have a good definition of what “safe enough” is though most people are roughly taking that as “a safety record superior to the average human.” Some think it should be much more, few think it should be less. Tesla, now with the backing of the NTSB, has noted that their autopilot system — combined with a mix of mostly attentive but some inattentive humans, may have a record superior to the average human, for example, even though with the inattentive humans it is worse.

Last week I attended a conference in Stuttgart devoted to robocar safety testing, part of a larger auto show including an auto testing show. It was interesting to see the main auto testing show — scores of expensive and specialized machines and tools that subject cars to wear and tear, slamming doors thousands of times, baking the surfaces, rattling and vibrating everything. And testing the electronics, too.

In Europe, the focus of testing is very strongly on making sure you are compliant with standards and regulations. That’s true in the USA but not quite as much. It was in Europe some time ago that I learned the word “homologation” which names this process.

There is a lot to be learned from the previous regimes of testing. They have built a lot of tools and learned techniques. But robocars are different beasts, and will fail in different ways. They will definitely not fail the way human drivers do, where usually small things are always going wrong, and an accident happens when 2 or 3 things go wrong at once. The conference included a lot of people working on simulation, which I have been promoting for many years. The one good thing in the NHTSA regulations — the open public database of all incidents — may vanish in the new rules, and it would have made for a great simulator. The companies making the simulators (and the academic world) would have put every incident into a shared simulator so every new car could test itself in every known problem situation.

Still, we will see lots of simulators full of scenarios, and also ways to parameterize them. That means that instead of just testing how a car behaves if somebody cuts it off, you test what it does if it gets cut off with a gap of 1cm, or 10cm, or 1m, or 2m, and by different types of vehicles, and by two at once etc. etc. etc. The nice thing about computers is you can test just about every variation you can think of, and test it in every road situation and every type of weather, at least if your simulator is good enough,

Yoav Hollander, who I met when he came as a student to the program at Singularity U, wrote a report on the approaches to testing he saw at the conference that contains useful insights, particularly on this question of new and old thinking, and what regulations drive vs. liability and fear of the public. He puts it well — traditional and certification oriented testing has a focus on assuring you don’t have “expected bugs” but is poor at finding unexpected ones. Other testing is about finding unexpected bugs. Expected bugs are of the “we’ve seen this sort of thing before, we want to be sure you don’t suffer from it” kind. Unexpected bugs are “something goes wrong that we didn’t know to look for.”

Avoiding old thinking

I believe that we are far from done on the robocar safety question. I think there are startups who have not yet been founded who, in the future, will come up with new techniques both for promoting safety and testing it that nobody has yet thought of. As such, I strongly advise against thinking that we know very much about how to do it yet.

A classic example of things going wrong is the movement towards “explainable AI.” Here, people are concerned that we don’t really know how “black box” neural network tools make the decisions they do. Car regulations in Europe are moving towards banning software that can’t be explained in cars. In the USA, the draft NHTSA regulations also suggest the same thing, though not as strongly.

We may find ourselves in a situation where we take to systems for robocars, one explainable and the other not. We put them through the best testing we can, both in simulator and most importantly in the real world. We find the explainable system has a “safety incident” every 100,000 miles, and the unexplainable system has an incident every 150,000 miles. To me it seems obvious that it would be insane to make a law that demands the former system which, when deployed, will hurt more people. We’ll know why it hurt them. We might be better at fixing the problems, but we also might not — with the unexplainable system we’ll be able to make sure that particular error does not happen again, but we won’t be sure that others very close it it are eliminated.

Testing in sim is a challenge here. In theory, every car should get no errors in sim, because any error found in sim will be fixed or judged as not really an error or so rare as to be unworthy of fixing. Even trained machine learning systems will be retrained until they get no errors in sim. The only way to do this sort of testing in sim will be to have teams generate brand new scenarios in sim that the cars have never seen, and see how they do. We will do this, but it’s hard. Particularly because as the sims get better, there will be fewer and fewer real world situations they don’t contain. At best, the test suite will offer some new highly unusual situations, which may not be the best way to really judge the quality of the cars. In addition, teams will be willing to pay simulator companies well for new and dangerous scenarios in sim for their testing — more than the government agencies will pay for such scenarios. And of course, once a new scenario displays a problem, every customer will fix it and it will become much less valuable. Eventually, as government regulations become more prevalent, homologation companies will charge to test your compliance rate on their test suites, but again, they will need to generate a new suite every time since everybody will want the data to fix any failure. This is not like emissions testing, where they tell you that you went over the emissions limit, and it’s worth testing the same thing again.

The testing was interesting, but my other main focus was on the connected car and security sessions. More on that to come.

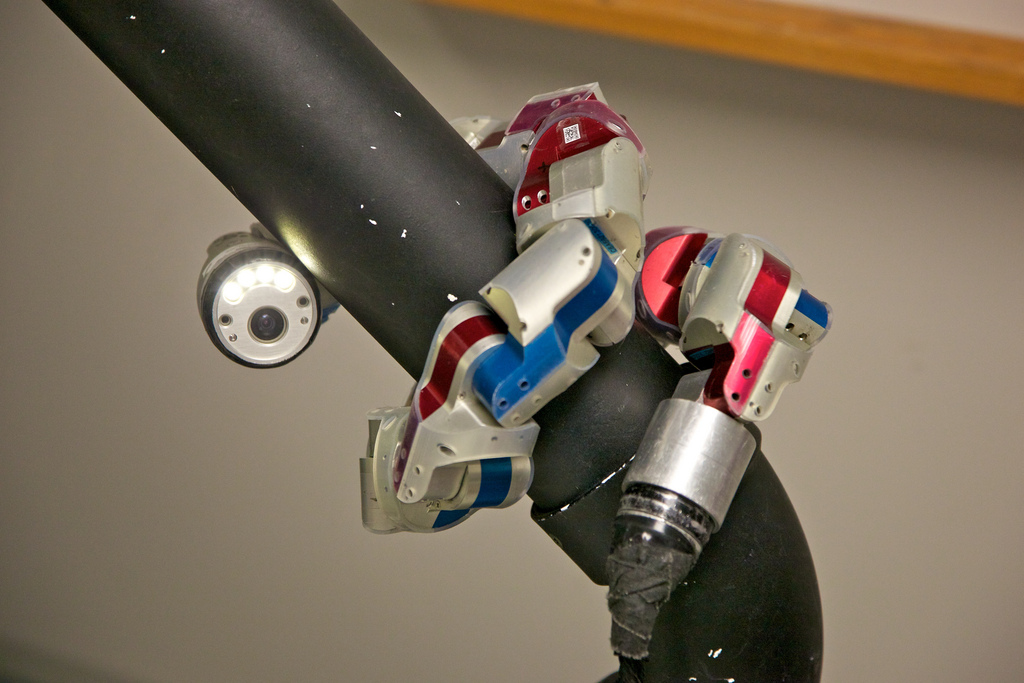

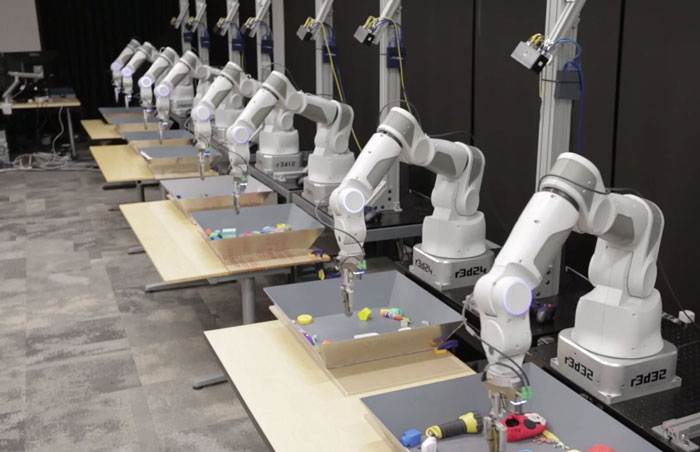

Sergey Levine is an assistant professor at UC Berkeley. His research focuses on robotics and machine learning. In his PhD thesis, he developed a novel guided policy search algorithm for learning complex neural network control policies, which was later applied to enable a range of robotic tasks, including end-to-end training of policies for perception and control. He has also developed algorithms for learning from demonstration, inverse reinforcement learning, efficient training of stochastic neural networks, computer vision, and data-driven character animation.

Sergey Levine is an assistant professor at UC Berkeley. His research focuses on robotics and machine learning. In his PhD thesis, he developed a novel guided policy search algorithm for learning complex neural network control policies, which was later applied to enable a range of robotic tasks, including end-to-end training of policies for perception and control. He has also developed algorithms for learning from demonstration, inverse reinforcement learning, efficient training of stochastic neural networks, computer vision, and data-driven character animation.