What Makes Us Superior To Robots When It Comes To Common Intelligence?

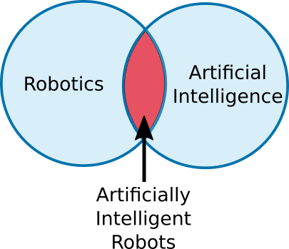

The debate over humans versus robots has become a perennial one. While robots are often viewed as harbingers of a dystopian future brought about by digital disruption, the question that has puzzled many is how smart they really are. When it comes to intelligence, no other living being, and no mechanical or AI "mind", can match us. Yet AI-powered robots can perform trivial, monotonous tasks far more accurately than we can. Despite these recent marvels of robotics, it is important to note that this does not mean robots have acquired the cognitive intelligence or common sense that are intrinsic to humans.

The main problem is that most of the algorithms written for robots rely on machine learning. These models are trained on a particular type of data and under specific conditions. Hence, when placed in a situation that their code or training did not anticipate, robots can fail badly or reach conclusions with catastrophic results. This danger was dramatized in Stanley Kubrick's landmark film 2001: A Space Odyssey. The film features a supercomputer, HAL 9000, whose creators have told it the purpose of the mission: to reach Jupiter and search for signs of extraterrestrial intelligence. When HAL makes an error, it refuses to admit it and blames human error instead. The astronauts therefore decide to shut HAL down, but the AI discovers their plan by reading their lips. Consequently, HAL arrives at a new conclusion that was never part of its original programming: it decides to save itself by systematically killing the people on board.

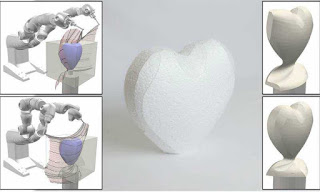

Another illustration experts often mention is that, while we can teach a robot to open a door by training it on data from 500 different types of doors, the robot can still fail when asked to open the 501st. This example neatly explains why robots do not share the thought process and intelligence of humans. Humans don't need to be "trained"; they observe and learn, or they experiment out of curiosity. Further, we don't open the door every time someone knocks; there is always that unfriendly neighbor we would rather avoid. Nor do we need to be reminded to lock the door, whereas robots need a clear set of instructions.

The same holds in other aspects of life. Robots and AI are trained on a particular set of data, so they function effectively only when the input resembles what they were trained or programmed for; beyond that, their behavior can break down. For instance, when someone in a car says "Hit the road," she is telling herself or the driver to get going. A robot that does not know the idiomatic meaning of the expression may take it literally and try to "hit" the road. Such a misunderstanding can lead to accidents. Although researchers are working hard, devising algorithms and running code, we have yet to see a robot that understands the way humans converse, with all our accents, dialects, colloquialisms and jargon.

Michio Kaku, a futurist and theoretical physicist, once said that "Our robots today have the collective intelligence and wisdom of a cockroach." While today's robots can make salads on command, and systems such as Deep Blue and AlphaGo Zero can beat humans at chess and Go respectively, this does not necessarily qualify as "common sense" or general smartness. And let us not forget that Deep Blue and AlphaGo Zero were the products of teams of "smart" human scientists, people clever enough to solve a seemingly impossible task. To sum up, while robots have become smart enough to fold laundry and impersonate people on online dating sites, they still lag behind when it comes to cognitive intelligence and common sense. We still have a long wait before we meet robots like the ones in sci-fi movies, such as C-3PO, R2-D2 or WALL-E.