Building and operating production-grade agentic AI applications requires more than just great foundation models (FMs). AI teams must manage complex workflows, infrastructure and the full AI lifecycle – from prototyping to production.

Yet, fragmented tooling and rigid infrastructure force teams to spend more time managing complexity than delivering innovation.

With the acquisition of Agnostiq and their open-source distributed computing platform, Covalent, DataRobot accelerates agentic AI development and deployment by unifying AI-driven decision-making, governance, lifecycle management, and compute orchestration – enabling AI developers to focus on application logic instead of infrastructure management.

In this blog, we’ll explore how these expanded capabilities help AI practitioners build and deploy agentic AI applications in production faster and more seamlessly.

How DataRobot empowers agentic AI

- Business-process-specific, AI-driven workflows. Mechanisms to translate business use cases into agentic AI workflows that are aware of business context, and to enable multi-agent frameworks to dynamically decide which functions, agents, or tools to call.

- The broadest suite of AI tools and models. Build, compare, and deploy the best agentic AI workflows.

- Best-in-class governance and monitoring. Governance (with AI registry) and monitoring for AI models, applications, and autonomous agents.

How Agnostiq enhances the stack

- Heterogeneous compute execution. Agents run where data and applications reside, ensuring compatibility across diverse environments instead of being confined to a single location.

- Optimized compute flexibility. Customers can leverage all available compute options (on-prem, accelerated clouds, and hyperscalers) to optimize for availability, latency, and cost.

- Orchestrator of orchestrators. Works seamlessly with popular frameworks like Run:ai, Kubernetes, and SLURM to unify workload execution across infrastructures.

The hidden complexity of building and managing production-grade agentic AI applications

Today, many AI teams can develop simple prototypes and demos, but getting agentic AI applications into production is a far greater challenge. Two hurdles stand in the way.

1. Building the application

Developing a production-grade agentic AI application requires more than just writing code. Teams must:

- Translate business needs into workflows.

- Experiment with different strategies using a combination of LLMs, embedding models, Retrieval Augmented Generation (RAG), fine-tuning techniques, guardrails, and prompting methods.

- Ensure solutions meet strict quality, latency, and cost objectives for specific business use cases.

- Navigate infrastructure constraints by custom-coding workflows to run across cloud, on-prem, and hybrid environments.

This demands not only a broad set of generative AI tools and models that work together seamlessly with enterprise systems but also infrastructure flexibility to avoid vendor lock-in and bottlenecks.

2. Deploying and operating at scale

Production AI applications require:

- Provisioning and managing GPUs and other infrastructure.

- Monitoring performance, ensuring reliability, and adjusting models dynamically.

- Enforcement of governance, access controls, and compliance reporting.

Even with existing solutions, it can take months to move an application from development to production.

Existing AI solutions fall short

Most teams rely on one of two strategies, each with trade-offs:

- Custom “build your own” (BYO) AI stacks: Offer more control but require significant manual effort to integrate tools, configure infrastructure, and manage systems – making it resource-intensive and unsustainable at scale.

- Hyperscaler AI platforms: Offer an ensemble of tools for different parts of the AI lifecycle, but these tools aren’t inherently designed to work together. AI teams must integrate, configure, and manage multiple services manually, adding complexity and reducing flexibility. In addition, they tend to lack governance, observability, and usability while locking teams into proprietary ecosystems with limited model and tool flexibility.

A faster, smarter way to build and deploy agentic AI applications

AI teams need a seamless way to build, deploy, and manage agentic AI applications without infrastructure complexity. With DataRobot’s expanded capabilities, they can streamline model experimentation and deployment, leveraging built-in tools to support real-world business needs.

Key benefits for AI teams

- Turnkey, use-case specific AI apps: Customizable AI apps enable fast deployment of agentic AI applications, allowing teams to tailor workflows to fit specific business needs.

- Iterate rapidly with the broadest suite of AI tools. Experiment with custom and open-source generative AI models. Use fully managed RAG, Nvidia NeMo guardrails, and built-in evaluation tools to refine agentic AI workflows.

- Optimize AI workflows with built-in evaluation. Select the best agentic AI approach for your use case with LLM-as-a-Judge, human-in-the-loop evaluation, and operational monitoring (latency, token usage, performance metrics).

- Deploy and scale with adaptive infrastructure. Set criteria like cost, latency, or availability and let the system allocate workloads across on-prem and cloud environments. Scale on-premises and expand to the cloud as demand grows without manual reconfiguration.

- Unified observability and compliance. Monitor all models – including third-party – from a single pane of glass, track AI assets in the AI registry, and automate compliance with audit-ready reporting.

With these capabilities, AI teams no longer have to choose between speed and flexibility. They can build, deploy, and scale agentic AI applications with less friction and greater control.

Let’s walk through an example of how these capabilities come together to enable faster, more efficient agentic AI development.

Orchestrating multi-agent AI workflows at scale

Sophisticated multi-agent workflows are pushing the boundaries of AI capability. While several open-source and proprietary frameworks exist for building multi-agent systems, one key challenge remains overlooked: orchestrating the heterogeneous compute, governance, and operational requirements of each agent.

Each member of a multi-agent workflow may require different backing LLMs — some fine-tuned on domain-specific data, others multi-modal, and some vastly different in size. For example:

- A report consolidation agent might only need Llama 3.1 8B, requiring a single Nvidia A100 GPU.

- A primary analyst agent might need Llama 3.3 70B or Llama 3.1 405B, demanding multiple A100 or even H100 GPUs.

Provisioning, configuring environments, monitoring, and managing communication across multiple agents with varying compute requirements is already complex. In addition, operational and governance constraints can determine where certain jobs must run, for instance when data is required to reside in certain data centers or countries.
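To make the placement problem concrete, here is a minimal sketch of choosing where an agent runs when both hardware and data-residency rules apply. Everything in it is an assumption for illustration: the `ComputePool` and `AgentRequirement` classes, the pool names, and the prices are hypothetical and are not part of Covalent or DataRobot.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ComputePool:
    """A hypothetical pool of compute in one location."""
    name: str
    region: str
    gpu_type: Optional[str]  # None means CPU-only
    free_gpus: int
    cost_per_gpu_hour: float  # made-up numbers, for illustration only


@dataclass(frozen=True)
class AgentRequirement:
    """What a single agent needs, including where its data may live."""
    agent: str
    gpu_type: Optional[str]
    num_gpus: int
    allowed_regions: frozenset


def place(req: AgentRequirement, pools: list) -> Optional[ComputePool]:
    """Return the cheapest pool that satisfies hardware and residency rules."""
    candidates = [
        p for p in pools
        if p.region in req.allowed_regions
        and p.gpu_type == req.gpu_type
        and p.free_gpus >= req.num_gpus
    ]
    # default=None signals that no pool can host this agent.
    return min(candidates, key=lambda p: p.cost_per_gpu_hour, default=None)


pools = [
    ComputePool("onprem-frankfurt", "eu", "a100", 4, 1.10),
    ComputePool("cloud-us-east", "us", "a100", 8, 0.90),
    ComputePool("cloud-eu-west", "eu", "h100", 8, 3.50),
]

# The consolidation agent's data must stay in the EU, so the cheaper
# US pool is excluded even though its hardware would be sufficient.
consolidator = AgentRequirement("report_consolidation", "a100", 1, frozenset({"eu"}))
analyst = AgentRequirement("primary_analyst", "h100", 8, frozenset({"eu", "us"}))

print(place(consolidator, pools).name)
print(place(analyst, pools).name)
```

Even this toy version shows why the constraint matters: filtering by region happens before optimizing for cost, so a governance rule can override the cheapest option.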

Here’s how it works in action.

Use case: A multi-agent stock investment strategy analyzer

Financial analysts need real-time insights to make informed investment decisions, but manually analyzing vast amounts of financial data, news, and market signals is slow and inefficient.

A multi-agent AI system can automate this process, providing faster, data-driven recommendations.

In this example, we build a Stock Investment Strategy Analyzer, a multi-agent workflow that:

- Generates a structured investment report with data-driven insights and a buy rating.

- Tracks market trends by gathering and analyzing real-time financial news.

- Evaluates financial performance, competitive landscape, and risk factors using dynamic agents.

How dynamic agent creation works

Unlike static multi-agent workflows, this system creates agents on demand based on real-time market data. The primary financial analyst agent dynamically generates a cohort of specialized agents, each with a unique role.

Workflow breakdown

- The primary financial analyst agent gathers and processes initial news reports on a stock of interest.

- It then generates specialized agents, assigning them roles based on real-time data insights.

- Specialized agents analyze different factors, including:

– Financial performance (balance sheets, earnings reports)

– Competitive landscape (industry positioning, market threats)

– External market signals (web searches, news sentiment analysis)

- A set of reporting agents compiles insights into a structured investment report with a buy/sell recommendation.

This dynamic agent creation allows the system to adapt in real time, scaling resources efficiently while ensuring specialized agents handle relevant tasks.
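The workflow above can be sketched in a few lines of Python. This is a simplified illustration, not the implementation from the blog: in the real system an LLM-backed primary agent decides which roles to create, whereas here a hypothetical keyword table (`TOPIC_TO_ROLE`) stands in for that decision and `Agent.analyze` returns a canned string instead of calling a model.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    role: str
    focus: str

    def analyze(self, ticker: str) -> str:
        # Placeholder for an LLM call; returns a canned finding.
        return f"[{self.role}] {ticker}: findings on {self.focus}"


# Hypothetical mapping from topics found in the news to specialist roles.
TOPIC_TO_ROLE = {
    "earnings": ("financial_performance", "balance sheets and earnings reports"),
    "competitor": ("competitive_landscape", "industry positioning and market threats"),
    "sentiment": ("market_signals", "web searches and news sentiment"),
}


def spawn_specialists(headlines: list) -> list:
    """Create one specialist per topic that actually appears in the news."""
    agents = []
    for topic, (role, focus) in TOPIC_TO_ROLE.items():
        if any(topic in h.lower() for h in headlines):
            agents.append(Agent(role=role, focus=focus))
    return agents


def build_report(ticker: str, headlines: list) -> str:
    """Primary-agent step: spawn specialists, then collect their findings."""
    specialists = spawn_specialists(headlines)
    findings = [agent.analyze(ticker) for agent in specialists]
    return "\n".join(findings)


headlines = [
    "ACME earnings beat expectations",
    "New competitor enters ACME's market",
]
print(build_report("ACME", headlines))
```

Because no headline mentions sentiment, only two specialists are created for this input. That is the point of dynamic creation: the set of agents, and therefore the compute they need, depends on the data seen at run time.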

Infrastructure orchestration with Covalent

The combined power of DataRobot and Agnostiq’s Covalent platform eliminates the need to manually build and deploy Docker images. Instead, AI practitioners can simply define their package dependencies, and Covalent handles the rest.

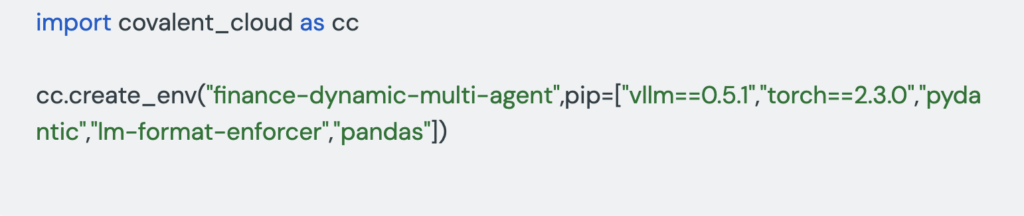

Step 1: Define the compute environment

- No manual setup required. Simply list dependencies and Covalent provisions the necessary environment.

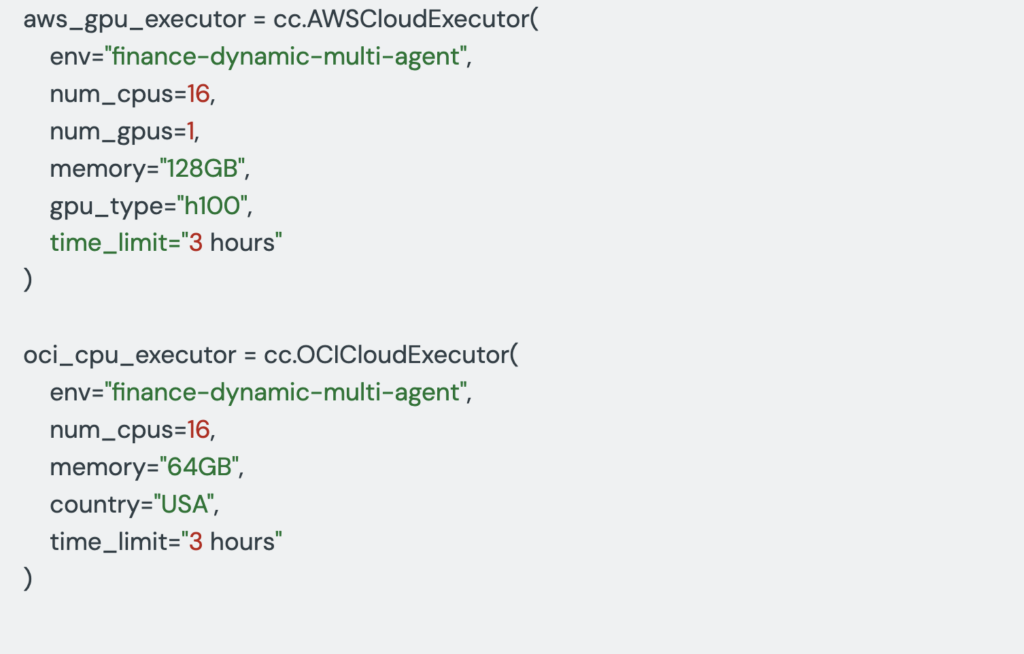

Step 2: Provision compute resources in a software-defined manner

Each agent requires different hardware, so we define compute resources accordingly:

Covalent automates compute provisioning, allowing AI developers to define compute needs in Python while handling resource allocation across multiple cloud and on-prem environments.

Acting as an “orchestrator of orchestrators,” it bridges the gap between agentic logic and scalable infrastructure, dynamically assigning workloads to the best available compute resources. This removes the burden of manual infrastructure management, making multi-agent applications easier to scale and deploy.

Combined with DataRobot’s governance, monitoring, and observability, it gives teams the flexibility to manage agentic AI more efficiently.

- Flexibility: Agents using large models (e.g., Llama 3.3 70B) can be assigned to multi-GPU A100/H100 instances, while lightweight agents run on CPU-based infrastructure.

- Automatic scaling: Covalent provisions resources across clouds and on-prem as needed, eliminating manual provisioning.
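As a rough guide to sizing these requests, the sketch below estimates the minimum number of GPUs needed just to hold a model's weights and records the result in a per-agent resource table. The `ResourceSpec` class and the agent names are hypothetical and are not Covalent's API. The arithmetic assumes 80 GB of memory per GPU and 2 bytes per parameter (16-bit weights) unless stated otherwise, and it ignores KV cache and activation memory, so treat the numbers as lower bounds.

```python
import math
from dataclasses import dataclass


def min_gpus_for_weights(params_billion: float,
                         bytes_per_param: float = 2.0,
                         gpu_memory_gb: float = 80.0) -> int:
    """Lower bound on GPUs needed just to hold the model weights.

    Ignores KV cache, activations, and framework overhead, so real
    deployments need headroom beyond this number.
    """
    weights_gb = params_billion * bytes_per_param  # billions of params * bytes each
    return max(1, math.ceil(weights_gb / gpu_memory_gb))


@dataclass(frozen=True)
class ResourceSpec:
    """Hypothetical per-agent resource request (not Covalent's API)."""
    num_cpus: int
    num_gpus: int = 0
    gpu_type: str = ""
    memory_gb: int = 8


specs = {
    # 8B model: 16 GB of weights fits on one 80 GB GPU.
    "report_consolidation": ResourceSpec(4, min_gpus_for_weights(8), "a100", 32),
    # 70B model: 140 GB of weights needs at least two 80 GB GPUs.
    "primary_analyst": ResourceSpec(16, min_gpus_for_weights(70), "a100", 256),
    # Lightweight agent with no model of its own: CPU only.
    "news_fetcher": ResourceSpec(2),
}

for agent, spec in specs.items():
    print(agent, spec)
```

With 8-bit weights (`bytes_per_param=1.0`), a 405B model needs at least six 80 GB GPUs for weights alone, which is why a full 8-GPU node is a common choice for models of that size.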

Once compute resources are provisioned, agents can seamlessly interact through a deployed inference endpoint for real-time decision-making.

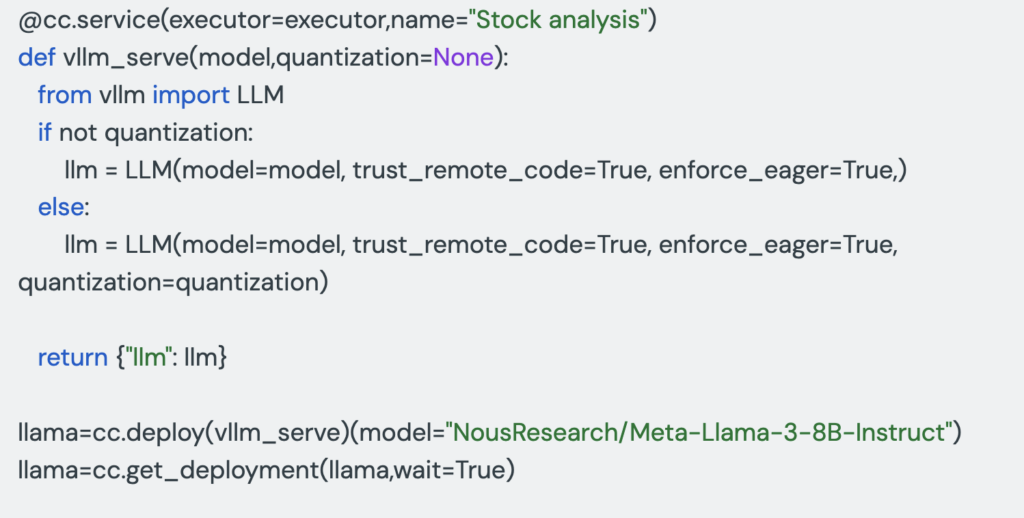

Step 3: Deploy an AI inference endpoint

For real-time agent interactions, Covalent makes deploying inference endpoints seamless. Here’s an inference service setup for our primary financial analyst agent using Llama 3.1 8B:

- Persistent inference service enables multi-agent interactions in real time.

- Supports lightweight and large-scale models. Simply adjust the execution environment as needed.

Want to run a 405B parameter model that requires 8x H100s? Just define another executor and deploy it in the same workflow.
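What makes a service "persistent" is that the expensive model load happens once and every later request reuses it. The sketch below shows that pattern in plain Python. It is an illustration under stated assumptions, not Covalent's service API: `InferenceService` and `fake_loader` are hypothetical names, and the "model" is a stand-in function rather than a real LLM.

```python
from typing import Callable, Optional


class InferenceService:
    """Hypothetical long-lived service: load once, answer many requests."""

    def __init__(self, model_name: str, loader: Callable[[str], Callable[[str], str]]):
        self.model_name = model_name
        self._loader = loader
        self._model: Optional[Callable[[str], str]] = None
        self.load_count = 0  # tracked only to demonstrate the load-once behavior

    def start(self) -> "InferenceService":
        # Idempotent: calling start() again does not reload the model.
        if self._model is None:
            self._model = self._loader(self.model_name)
            self.load_count += 1
        return self

    def generate(self, prompt: str) -> str:
        if self._model is None:
            raise RuntimeError("service not started")
        return self._model(prompt)


def fake_loader(name: str) -> Callable[[str], str]:
    # Stand-in for loading model weights onto a GPU.
    return lambda prompt: f"{name} says: {prompt.upper()}"


service = InferenceService("analyst-model", fake_loader).start()

# Several agents can call the same running service without reloading it.
print(service.generate("summarize q3 earnings"))
print(service.generate("list key risks"))
print("model loads:", service.load_count)
```

Swapping in a larger model would mean constructing a second service with a different loader and resource request, while the calling agents keep using the same `generate` interface.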

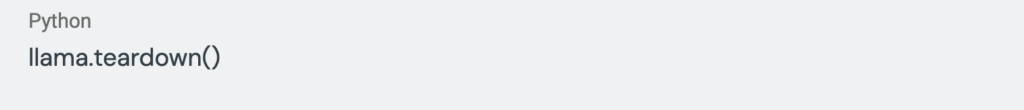

Step 4: Tearing down infrastructure

Once the workflow completes, shutting down resources is effortless.

- No wasted compute. Resources deallocate instantly after teardown.

- Simplified management. No manual cleanup required.
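One way to guarantee that teardown always happens, even when an agent fails partway through, is to tie the resource's lifetime to a context manager. The sketch below uses only the Python standard library. The `provisioned` helper and the `released` list are hypothetical stand-ins for real provisioning and deallocation calls.

```python
from contextlib import contextmanager

released = []  # records which resources were torn down


@contextmanager
def provisioned(name: str):
    """Hypothetical resource handle that is always released on exit."""
    handle = {"name": name, "active": True}
    try:
        yield handle
    finally:
        # Runs on normal exit and on exceptions alike.
        handle["active"] = False
        released.append(name)


try:
    with provisioned("analyst-endpoint") as endpoint:
        assert endpoint["active"]
        raise RuntimeError("agent failed mid-run")
except RuntimeError:
    pass

print(released)
```

The resource is released even though the workflow raised, which is the property that prevents idle GPUs from continuing to accrue cost after a failed run.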

Scaling AI without automation

Before jumping into the implementation, consider what it would take to build and deploy this application manually. Managing dynamic, semi-autonomous agents at scale requires constant oversight — teams must balance capabilities with guardrails, prevent unintended agent proliferation, and ensure a clear chain of responsibility.

Without automation, this is a massive infrastructure and operational burden. Covalent removes these challenges, enabling teams to orchestrate distributed applications across any environment — without vendor lock-in or specialized infra teams.

Give it a try.

Explore and customize the full working implementation in this detailed documentation.

A look inside Covalent’s orchestration engine

Compute infra abstraction

Covalent lets AI practitioners define compute requirements in Python — without manual containerization, provisioning, or scheduling. Instead of dealing with raw infrastructure, users specify abstracted compute concepts similar to serverless frameworks.

- Run AI pipelines anywhere, from an on-prem GPU cluster to AWS P5.24xl instances — with minimal code changes.

- Developers can access cloud, on-prem, and hybrid compute resources through a single Python interface.
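The idea of "minimal code changes" can be illustrated with a decorator that separates what a task does from where it runs. This is a sketch of the general pattern, not Covalent's interface: `run_on` and the backend names are hypothetical, and the wrapper runs the function in-process and only records the target, where a real backend would submit it to a scheduler or cloud API.

```python
from functools import wraps

# Records (task name, backend) pairs so we can see where each call was routed.
dispatch_log = []


def run_on(backend: str):
    """Decorator factory that routes a plain Python function to a named backend."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            dispatch_log.append((fn.__name__, backend))
            # A real backend would serialize fn and run it remotely.
            return fn(*args, **kwargs)
        return wrapper
    return decorator


def summarize(text: str) -> str:
    """Business logic, written once with no infrastructure details."""
    return text.split(".")[0].strip() + "."


# Moving the task is a one-argument change; the function body is untouched.
summarize_on_prem = run_on("onprem-gpu-cluster")(summarize)
summarize_cloud = run_on("cloud-gpu-instance")(summarize)

text = "Revenue grew 12%. Margins were flat."
print(summarize_on_prem(text))
print(summarize_cloud(text))
print(dispatch_log)
```

Both calls return the same result; only the routing metadata differs. Keeping infrastructure choices in the decorator argument is what lets the same pipeline move between environments without touching application logic.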

Cloud-agnostic orchestration: Scaling across distributed environments

Covalent operates as an orchestrator-of-orchestrators layer above traditional orchestrators like Kubernetes, Run:ai, and SLURM, enabling cross-cloud and multi-data center orchestration.

- Supports diverse environments. Covalent was built to be cloud-agnostic and to unify heterogeneous computing technologies and frameworks. It supports on-prem schedulers like SLURM and Kubernetes, as well as major cloud services (AWS EC2, AWS Batch, AWS ECS, GCP Batch, Azure Batch).

- Abstracts clusters, not just VMs. The first generation of orchestrators abstracted VMs into clusters. Covalent takes it further by abstracting clusters themselves.

- Eliminates DevOps overhead. AI teams get cloud flexibility without vendor lock-in, while Covalent automates provisioning and scaling.

Workflow orchestration for agentic AI pipelines

Covalent includes native workflow orchestration built for high-throughput, parallel AI workloads.

- Optimizes execution across hybrid compute environments. Ensures seamless coordination between different models, agents, and compute instances.

- Orchestrates complex AI workflows. Ideal for multi-step, multi-model agentic AI applications.
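A multi-step agentic workflow often has a fan-out/fan-in shape: independent analyses run in parallel, then a final step combines them. The sketch below shows that shape with Python's standard `concurrent.futures` module. It is a local, thread-based stand-in for illustration, not Covalent's workflow engine, and the `analyze` and `compile_report` functions are hypothetical placeholders for real agents.

```python
from concurrent.futures import ThreadPoolExecutor


def analyze(factor: str, ticker: str) -> dict:
    # Stand-in for a specialized agent; a real one would call a model.
    return {"factor": factor, "summary": f"{factor} analysis for {ticker}"}


def compile_report(ticker: str, findings: list) -> str:
    lines = [f"Report for {ticker}"]
    lines += [f"- {f['factor']}: {f['summary']}" for f in findings]
    return "\n".join(lines)


def run_workflow(ticker: str, factors: list) -> str:
    # Fan out: independent analyses run concurrently.
    with ThreadPoolExecutor(max_workers=max(1, len(factors))) as pool:
        findings = list(pool.map(lambda f: analyze(f, ticker), factors))
    # Fan in: map() preserves input order, so the report is deterministic.
    return compile_report(ticker, findings)


print(run_workflow("ACME", ["financials", "competition", "news"]))
```

In a distributed setting each `analyze` call could land on different hardware, but the dependency structure stays the same: the reporting step starts only after every analysis has returned.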

Designed for evolving AI workloads

Originally built for quantum and HPC applications, Covalent now unifies diverse computing paradigms with a modular architecture and plug-in ecosystem.

- Extensible to new HPC technologies & hardware. Ensures applications remain future-proof as new AI hardware enters the market.

By integrating Covalent’s pluggable compute orchestrator, DataRobot extends its capabilities as an infrastructure-agnostic AI platform, enabling the deployment of AI applications that require large-scale, distributed GPU workloads while remaining adaptable to emerging HPC technologies and hardware vendors.

Bringing agentic AI to production without the complexity

Agentic AI applications introduce new levels of complexity—from managing multi-agent workflows to orchestrating diverse compute environments. With Covalent now part of DataRobot, AI teams can focus on building, not infrastructure.

Whether deploying AI applications across cloud, on-prem, or hybrid environments, this integration provides the flexibility, scalability, and control needed to move from experimentation to production—seamlessly.

Big things are ahead for agentic AI. This is just the beginning of simplifying orchestration, governance, and scalability. Stay tuned for new capabilities coming soon and sign up for a free trial to explore more.

The post Agentic AI: Real-world business impact, enterprise-ready solutions appeared first on DataRobot.