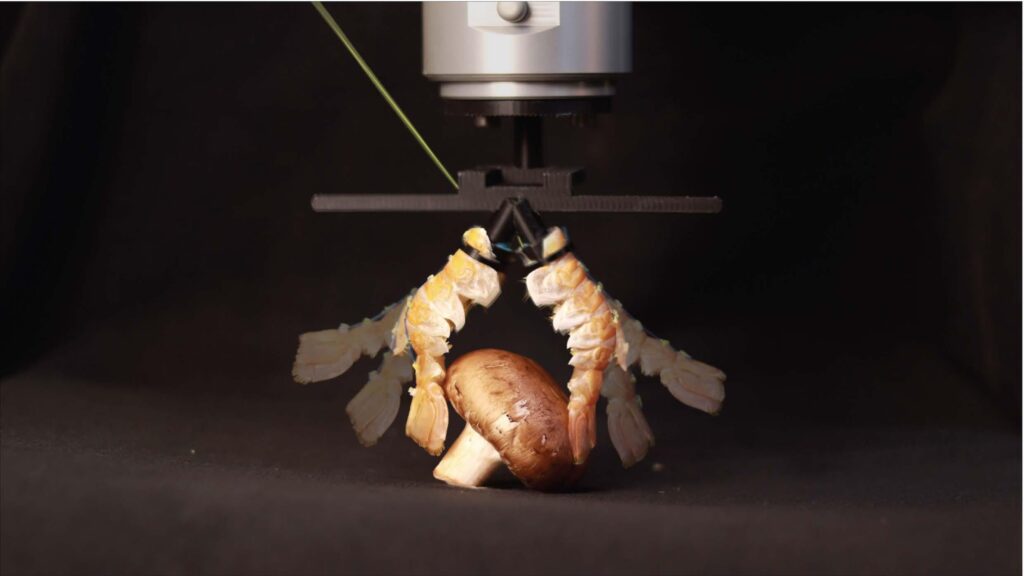

Demonstration of the robotic gripper made from langoustine tails. 2025 CREATE Lab EPFL CC BY SA.

By Celia Luterbacher

Although many roboticists today turn to nature to inspire their designs, even bioinspired robots are usually fabricated from non-biological materials like metal, plastic and composites. But a new experimental robotic manipulator from the Computational Robot Design and Fabrication Lab (CREATE Lab) in EPFL’s School of Engineering turns this trend on its head: its main feature is a pair of langoustine abdomen exoskeletons.

Although it may look unusual, CREATE Lab head Josie Hughes explains that combining biological elements with synthetic components holds significant potential not only to enhance robotics, but also to support sustainable technology systems.

“Exoskeletons combine mineralized shells with joint membranes, providing a balance of rigidity and flexibility that allows their segments to move independently. These features enable crustaceans’ rapid, high-torque movements in water, but they can also be very useful for robotics. And by repurposing food waste, we propose a sustainable cyclic design process in which materials can be recycled and adapted for new tasks.”

In a paper published in Advanced Science, Hughes and her team demonstrate three robotic applications by augmenting the exoskeletons of langoustines, which had previously been harvested and processed for the food industry, with the precise control and longevity of synthetic components: a manipulator that can handle objects weighing up to 500 g, grippers that can bend and grasp various objects, and a swimming robot.

Design, operate, recycle, repeat

For their study, the CREATE Lab decided to bring together the structural robustness and flexibility of the exoskeletons of langoustines with the precise control and longevity of synthetic components.

They achieved this by embedding an elastomer inside the exoskeleton to control each of its segments and then mounting it on a motorized base to modulate its stiffness response (extension and flexion). Finally, the team covered the exoskeleton in a silicone coating to reinforce it and extend its lifespan.

When mounted on the motorized base, the device can be used to move an object weighing up to 500 g into a target zone. When mounted as a gripping pair, two exoskeletons can successfully grasp a variety of objects ranging in size and shape from a highlighter pen to a tomato. The robotic system can even be used to propel a swimming robot with two flapping exoskeletal ‘fins’ at speeds of up to 11 centimeters per second.

After use, the exoskeleton and its robotic base can be separated and most of the synthetic components can be reused. “To our knowledge, we are the first to propose a proof of concept to integrate food waste into a robotic system that combines sustainable design with reuse and recycling,” says CREATE Lab researcher and first author Sareum Kim.

One limitation of the approach lies in the natural variation in biological structures; for example, the unique shape of each langoustine tail means that the two-‘fingered’ gripper bends slightly differently on each side. The researchers say this challenge will require the development of more advanced synthetic augmentation mechanisms like tunable controllers. With such improvements, the team sees potential for future systems integrating bioderived structural elements, for example in biomedical implants or bio-system monitoring platforms.

“Although nature does not necessarily provide the optimal form, it still outperforms many artificial systems and offers valuable insights for designing functional machines based on elegant principles,” Hughes summarizes.

Read the work in full

Dead Matter, Living Machines: Repurposing Crustaceans’ Abdomen Exoskeleton for Bio-Hybrid Robots, S. Kim, K. Gilday, and J. Hughes, Adv. Sci. (2025).