Elvis Nava is a fellow at ETH Zurich’s AI Center as well as a doctoral student at the Institute of Neuroinformatics and in the Soft Robotics Lab. (Photograph: Daniel Winkler / ETH Zurich)

By Christoph Elhardt

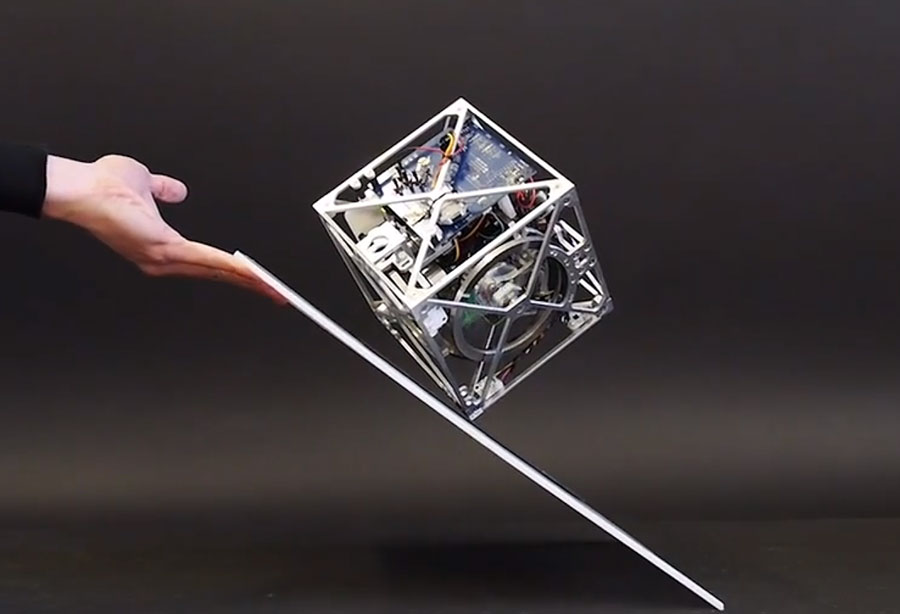

In ETH Zurich’s Soft Robotics Lab, a white robot hand reaches for a beer can, lifts it up and moves it to a glass at the other end of the table. There, the hand carefully tilts the can to the right and pours the sparkling, gold-coloured liquid into the glass without spilling it. Cheers!

Computer scientist Elvis Nava is the person controlling the robot hand developed by ETH start-up Faive Robotics. The 26-year-old doctoral student’s own hand hovers over a surface equipped with sensors and a camera. The robot hand follows Nava’s hand movement. When he spreads his fingers, the robot does the same. And when he points at something, the robot hand follows suit.

But for Nava, this is only the beginning: “We hope that in future, the robot will be able to do something without our having to explain exactly how,” he says. He wants to teach machines to carry out written and oral commands. His goal is to make them so intelligent that they can quickly acquire new abilities, understand people and help them with different tasks.

Functions that currently require specific instructions from programmers will then be controlled by simple commands such as “pour me a beer” or “hand me the apple”. To achieve this goal, Nava received a doctoral fellowship from ETH Zurich’s AI Center in 2021. The programme promotes talented researchers who bridge different research disciplines to develop new AI applications. In addition, the Italian – who grew up in Bergamo – is doing his doctorate at Benjamin Grewe’s professorship of neuroinformatics and in Robert Katzschmann’s lab for soft robotics.

Developed by the ETH start-up Faive Robotics, the robot hand imitates the movements of a human hand. (Video: Faive Robotics)

Combining sensory stimuli

But how do you get a machine to carry out commands? What does this combination of artificial intelligence and robotics look like? To answer these questions, it is crucial to understand the human brain.

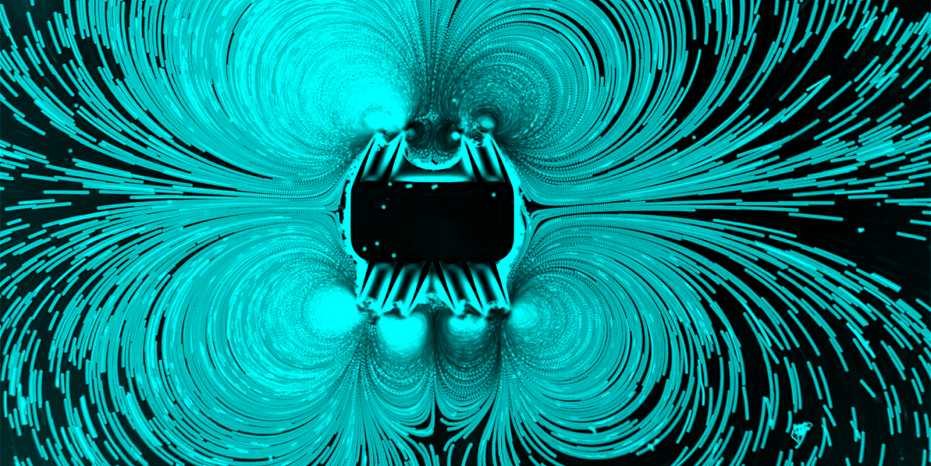

We perceive our environment by combining different sensory stimuli. Usually, our brain effortlessly integrates images, sounds, smells, tastes and haptic stimuli into a coherent overall impression. This ability enables us to quickly adapt to new situations. We intuitively know how to apply acquired knowledge to unfamiliar tasks.

“Computers and robots often lack this ability,” Nava says. Thanks to machine learning, computer programs today can write texts, hold conversations or paint pictures, and robots can move quickly and independently through difficult terrain, but the underlying learning algorithms are usually based on only one data source. They are – to use a computer science term – not multimodal.

For Nava, this is precisely what stands in the way of more intelligent robots: “Algorithms are often trained for just one set of functions, using large data sets that are available online. While this enables language processing models to use the word ‘cat’ in a grammatically correct way, they don’t know what a cat looks like. And robots can move effectively but usually lack the capacity for speech and image recognition.”

“Every couple of years, our discipline changes the way we think about what it means to be a researcher,” Elvis Nava says. (Video: ETH AI Center)

Robots have to go to preschool

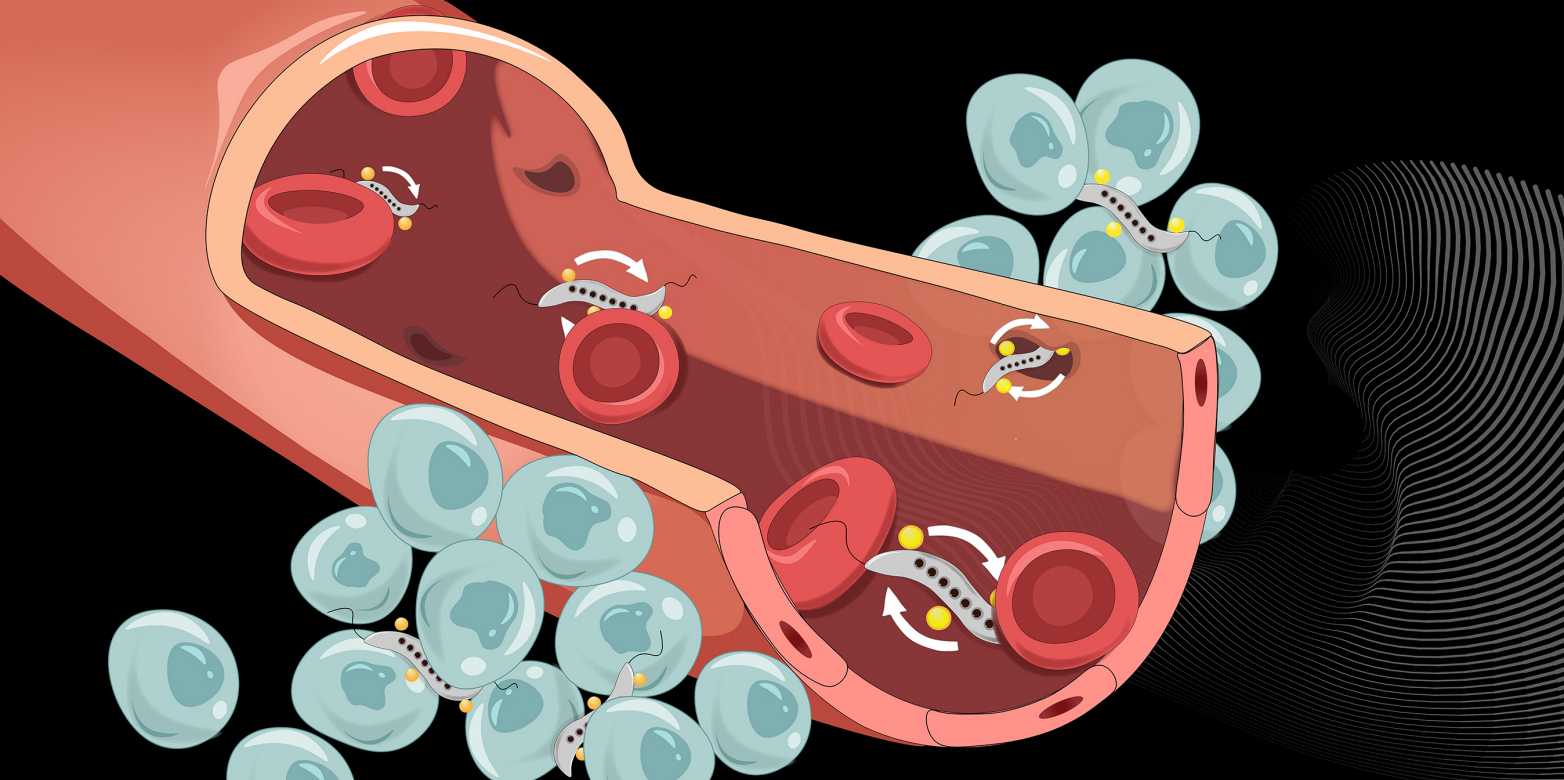

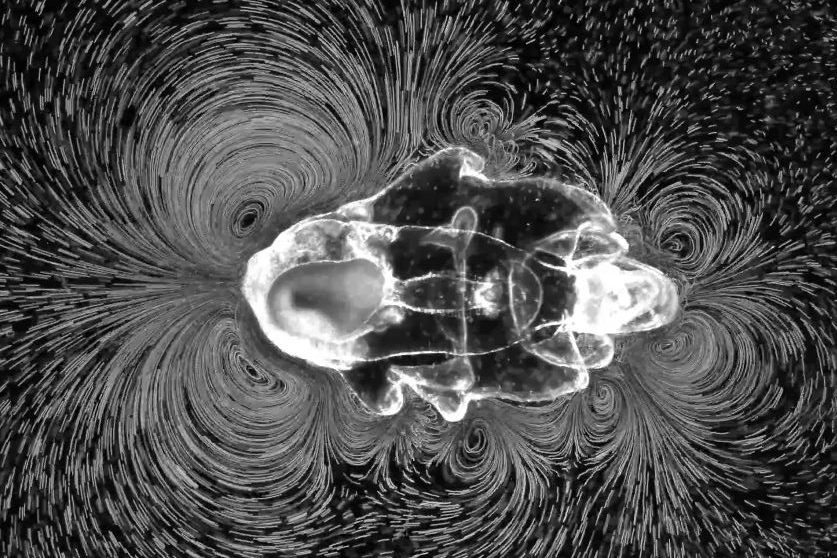

This is why Nava is developing learning algorithms for robots that teach them exactly that: to combine information from different sources. “When I tell a robot arm to ‘hand me the apple on the table,’ it has to connect the word ‘apple’ to the visual features of an apple. What’s more, it has to recognise the apple on the table and know how to grab it.”

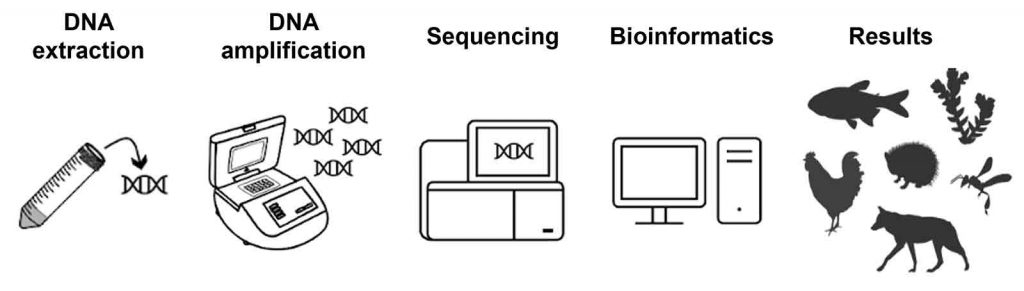

But how does Nava teach the robot arm to do all that? In simple terms, he sends it to a two-stage training camp. First, the robot acquires general abilities such as speech and image recognition as well as simple hand movements in a kind of preschool.

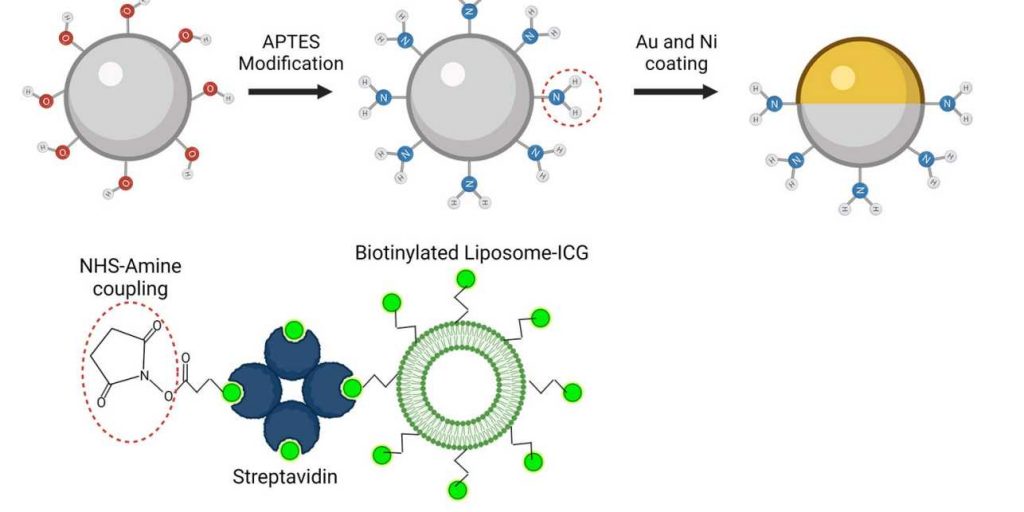

Open-source models that have been trained using giant text, image and video data sets are already available for these abilities. Researchers feed, say, an image recognition algorithm with thousands of images labelled ‘dog’ or ‘cat.’ Then, the algorithm learns independently what features – in this case pixel structures – constitute an image of a cat or a dog.
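The idea of learning from labelled examples can be illustrated with a toy sketch. It is not one of the open-source models mentioned above: real image recognition uses deep neural networks on raw pixels, whereas this example swaps in a much simpler nearest-centroid classifier. The two-number "features" per image and the function names are assumptions made up for illustration.

```python
from statistics import mean


def train_centroids(examples):
    """Average the feature vectors of each label (toy stand-in for training)."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: [mean(column) for column in zip(*vectors)]
        for label, vectors in by_label.items()
    }


def classify(centroids, features):
    """Return the label whose average feature vector is closest."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(centroids, key=lambda label: squared_distance(centroids[label], features))


# Hypothetical labelled "images", each reduced to two made-up features.
training_set = [
    ([0.9, 0.2], "cat"), ([0.8, 0.3], "cat"),
    ([0.2, 0.9], "dog"), ([0.3, 0.8], "dog"),
]

centroids = train_centroids(training_set)
prediction = classify(centroids, [0.85, 0.25])
print(prediction)
```

Nobody tells the classifier what distinguishes a cat from a dog; it derives that from the labelled examples alone, which is the point the paragraph makes.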

A new learning algorithm for robots

Nava’s job is to combine the best available models into a learning algorithm that translates different kinds of data, such as images, texts or spatial information, into a uniform command language for the robot arm. “In the model, the same vector represents both the word ‘beer’ and images labelled ‘beer’,” Nava says. That way, the robot knows what to reach for when it receives the command “pour me a beer”.
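A minimal sketch of such a shared vector space, assuming hand-written three-dimensional vectors rather than the output of any real model: the word from the command and the objects seen by the camera live in the same space, and the robot picks the object whose vector is most similar to the word. All names and numbers here are hypothetical and not taken from Nava's system.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two vectors: 1.0 means they point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical text embeddings for words that may appear in a command.
TEXT_EMBEDDINGS = {
    "beer": [0.9, 0.1, 0.0],
    "apple": [0.1, 0.9, 0.1],
}

# Hypothetical image embeddings for objects the camera currently sees.
SCENE_EMBEDDINGS = {
    "object_a": [0.85, 0.15, 0.05],
    "object_b": [0.05, 0.95, 0.10],
    "object_c": [0.10, 0.10, 0.90],
}


def find_target(word, scene):
    """Return the name of the seen object that best matches the word."""
    query = TEXT_EMBEDDINGS[word]
    return max(scene, key=lambda name: cosine_similarity(query, scene[name]))


target = find_target("beer", SCENE_EMBEDDINGS)
print(target)
```

In a real system both sets of vectors would come from trained text and image encoders; the lookup by similarity is the part this sketch is meant to show.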

Researchers who deal with artificial intelligence on a deeper level have known for a while that integrating different data sources and models holds a lot of promise. However, the corresponding models have only recently become available and publicly accessible. What’s more, there is now enough computing power to get them up and running in tandem as well.

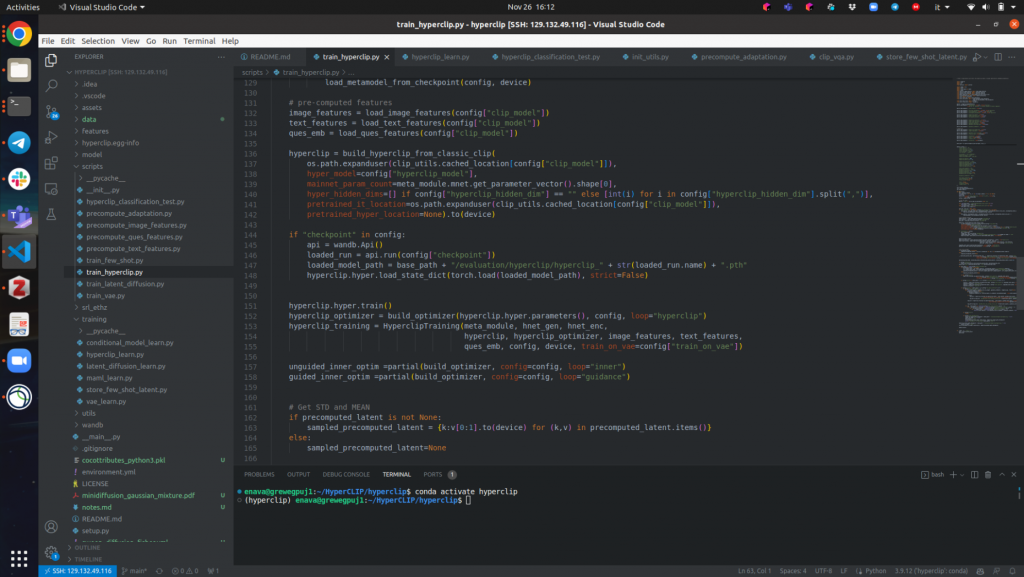

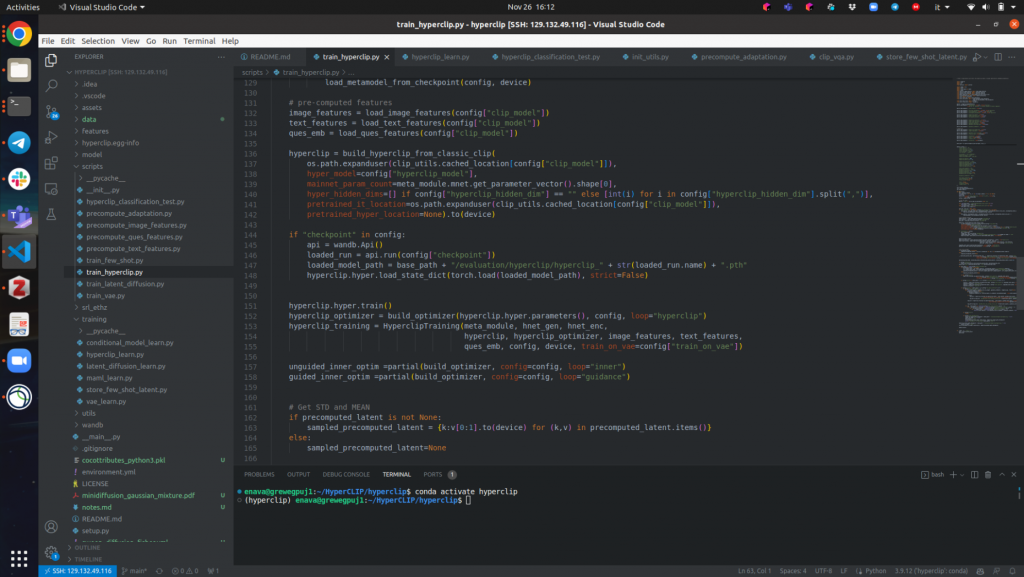

When Nava talks about these things, they sound simple and intuitive. But that’s deceptive: “You have to know the newest models really well, but that’s not enough; sometimes getting them up and running in tandem is an art rather than a science,” he says. It’s tricky problems like these that especially interest Nava. He can work on them for hours, continuously trying out new solutions.

Nava spends the majority of his time coding. (Photograph: Elvis Nava)

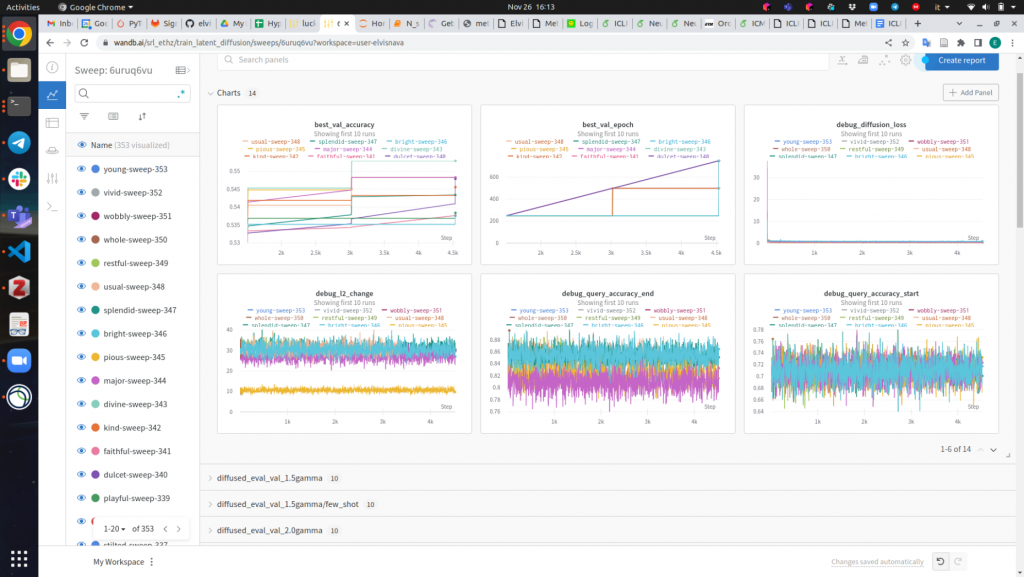

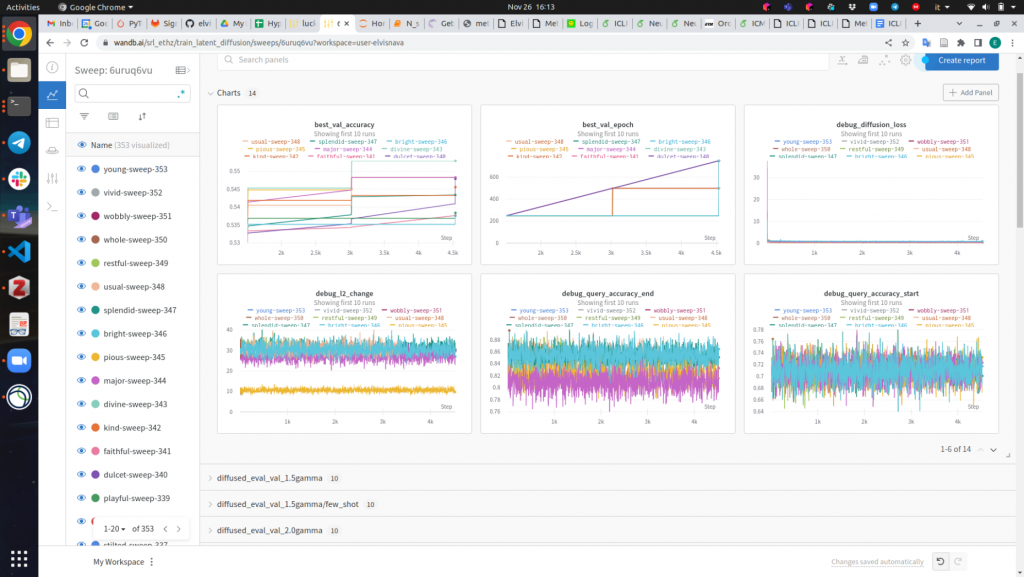

Nava evaluates his learning algorithm. The results of the experiment in a nutshell. (Photograph: Elvis Nava)

Special training: Imitating humans

Once the robot arm has completed preschool and has learnt to understand speech, recognise images and carry out simple movements, Nava sends it to special training. There, the machine learns to, say, imitate the movements of a human hand when pouring a glass of beer. “As this involves very specific sequences of movements, existing models no longer suffice,” Nava says.

Instead, he shows his learning algorithm a video of a hand pouring a glass of beer. Based on just a few examples, the robot then tries to imitate these movements, drawing on what it has learnt in preschool. Without prior knowledge, it simply wouldn’t be able to imitate such a complex sequence of movements.

“If the robot manages to pour the beer without spilling, we tell it ‘well done’ and it memorises the sequence of movements,” Nava says. This method is known as reinforcement learning in technical jargon.
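The reward principle can be sketched in a few lines. This is a deliberately simplified illustration, not Nava's training setup: it uses an epsilon-greedy bandit that chooses between three candidate tilt angles, and the `pour` function is a hypothetical stand-in for a real robot trial that assumes only tilts between 40 and 60 degrees avoid spilling.

```python
import random


def pour(tilt_degrees):
    """Hypothetical trial: reward 1.0 ("well done") if nothing is spilled."""
    return 1.0 if 40 <= tilt_degrees <= 60 else 0.0


def learn_tilt(candidates, episodes=50, epsilon=0.2, seed=0):
    """Try tilt angles and remember the average reward each one earned."""
    rng = random.Random(seed)
    value = {c: 0.0 for c in candidates}  # estimated reward per angle
    count = {c: 0 for c in candidates}    # how often each angle was tried

    for _ in range(episodes):
        untried = [c for c in candidates if count[c] == 0]
        if untried:
            action = untried[0]                       # try everything once
        elif rng.random() < epsilon:
            action = rng.choice(candidates)           # occasional exploration
        else:
            action = max(candidates, key=value.get)   # repeat what worked

        reward = pour(action)
        count[action] += 1
        # Incremental average of the rewards seen for this angle.
        value[action] += (reward - value[action]) / count[action]

    return max(candidates, key=value.get), value


best_tilt, values = learn_tilt([20, 50, 80])
print(best_tilt, values)
```

The robot is never told which angle is correct. It only receives a reward after a successful pour and gradually favours the movement that earned it.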

Elvis Nava teaches robots to carry out oral commands such as “pour me a beer”. (Photograph: Daniel Winkler / ETH Zurich)

Foundations for robotic helpers

With this two-stage learning strategy, Nava hopes to get a little closer to realising the dream of creating an intelligent machine. How far it will take him, he does not yet know. “It’s unclear whether this approach will enable robots to carry out tasks we haven’t shown them before.”

It is much more probable that we will see robotic helpers that carry out oral commands and fulfil tasks they are already familiar with or that closely resemble them. Nava avoids making predictions as to how long it will take before these applications can be used in areas such as the care sector or construction.

Developments in the field of artificial intelligence are too fast and unpredictable for that. In fact, Nava would be quite happy if the robot simply handed him the beer he will politely request after his dissertation defence.