Free-ranging Risso’s dolphin (Grampus griseus) swimming with plastic litter © Massimiliano Rosso for Maelstrom H2020 project

By Sandrine Ceurstemont

If you happened to be around the coast of Dubrovnik, Croatia, in September 2021, you might have spotted two robots scouring the seafloor for debris. The robots were on their inaugural mission, being tested in a real-world environment for the first time to gauge their ability to perform tasks such as recognising garbage and manoeuvring underwater. ‘We think that our project is the first one that will collect underwater litter in an automatic way with robots,’ said Dr Bart De Schutter, a professor at Delft University of Technology in the Netherlands and coordinator of the SeaClear project.

The robots are an example of new technologies being developed to clean up underwater litter. Oceans are thought to contain between 22 and 66 million tonnes of waste, about 94% of which lies on the seafloor. The type of waste differs from area to area: discarded fishing gear, such as nets, is prevalent in some coastal areas, for example, while plastic and glass bottles dominate in others. ‘We also sometimes see construction material (in the water) like blocks of concrete or tyres and car batteries,’ said Dr De Schutter.

When litter enters oceans and seas, it can be carried by currents to different parts of the world and even pollute remote areas. Marine animals can be harmed if they swallow garbage or become trapped in it, while human health is also at risk if tiny pieces end up in our food. ‘It’s a very serious problem that we need to tackle,’ said Dr Fantina Madricardo, a researcher at the Institute of Marine Sciences – National Research Council (ISMAR-CNR) in Venice, Italy, and coordinator of the Maelstrom project.

Human divers are currently deployed to pick up waste in some marine areas, but this is not an ideal solution. The work requires experienced divers, who can be hard to find, and the time they can spend underwater is limited by their air supply. Some areas may also be unsafe for humans, due to contamination, for example. ‘These are aspects that the automated system we are developing can overcome,’ said Dr De Schutter. ‘(It) will be much more efficient, cost effective and safer than the current solution which is based on human divers.’

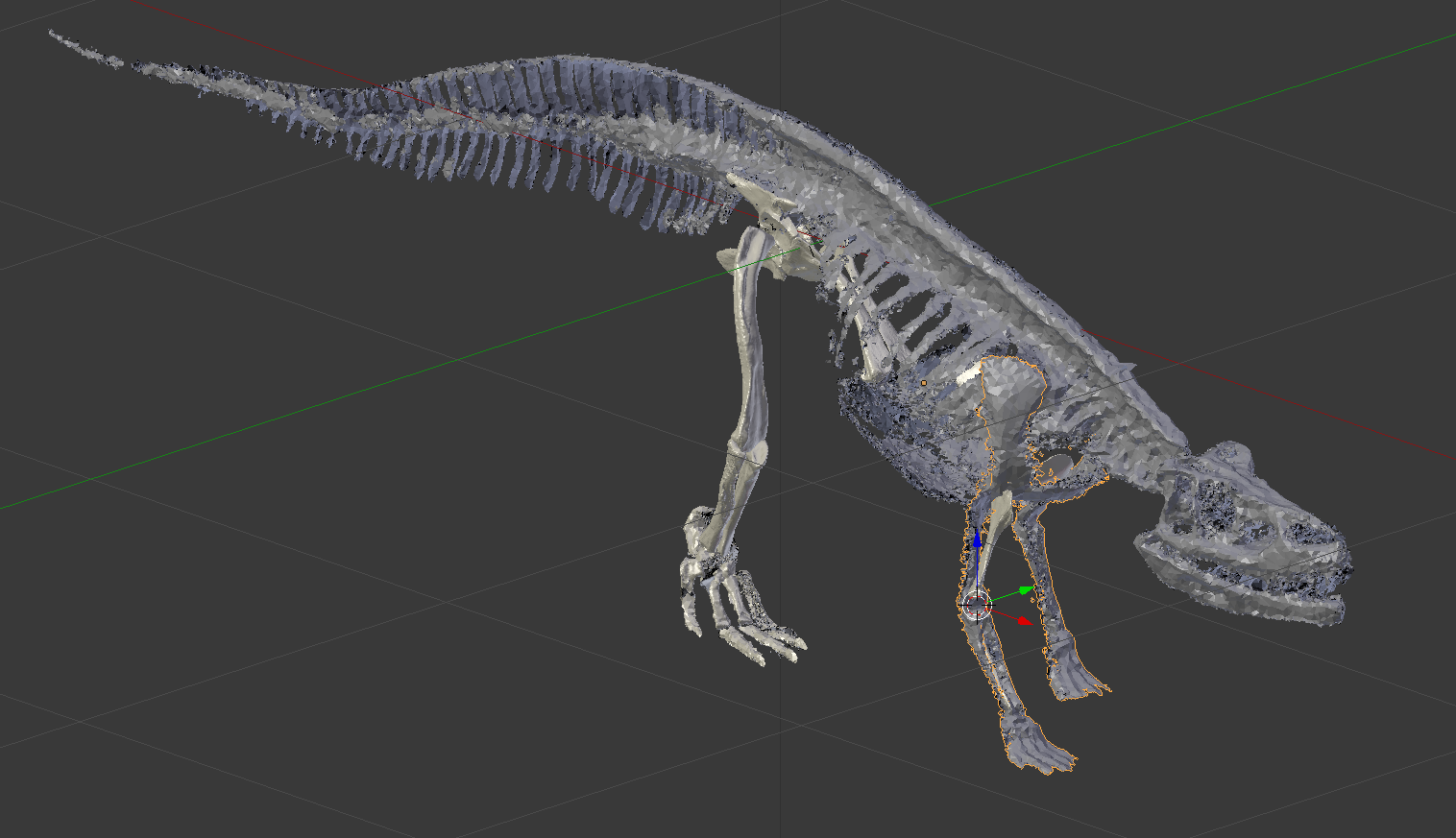

SeaClear’s ROV TORTUGA is known as ‘the cleaner’ robot. It collects the litter from the seafloor. © SeaClear, 2021

A team of litter-seeking robots

For the SeaClear project, Dr De Schutter and his team are building a prototype system made up of four robots that will work together. A robotic vessel on the water’s surface will act as a hub, supplying electrical power to the other robots and housing the computer that serves as the main brain of the system. The other three robots, two that operate underwater and an aerial drone, will be tethered to the vessel.

One underwater robot will be responsible for finding litter by venturing close to the seafloor to take close-up scans using cameras and sonar. The drone will also help search for garbage when the water is clear by flying over an area of interest, while in murky areas it will look out for obstacles to avoid, such as ships. The system will be able to distinguish between litter and other items on the seafloor, such as animals and seaweed, by using artificial intelligence. An algorithm will be trained on images of the various items it might encounter, from plastic bottles to fish, so that it learns to tell them apart and identify trash.

Litter collection will be taken care of by the second underwater robot, which will pick up items mapped out by its companions. Equipped with a gripper and a suction device, it will collect pieces of waste and deposit them into a tethered basket placed on the seafloor that will later be brought to the surface. ‘We did some initial tests near Dubrovnik where one plastic bottle was deposited on purpose and we collected it with a gripper robot,’ said Dr De Schutter. ‘We will have more experiments where we will try to recognise more pieces of trash in more difficult circumstances and then collect them with the robot.’

Impact on underwater clean-up

Dr De Schutter and his colleagues think that their system will eventually be able to detect up to 90% of litter on the seafloor and collect about 80% of what it identifies. Taken together, those rates would mean recovering roughly 72% of the seafloor litter in a surveyed area. This is in line with some of the objectives of the EU Mission Restore Our Oceans and Waters by 2030, which aims to eliminate pollution and restore marine ecosystems by reducing litter at sea.

Once the project ends in late 2023, the team expects to sell about ten of their automated systems within five to seven years. They think the system will interest local governments in coastal regions, especially in tourist areas, while companies may also want to buy it to provide a clean-up service or to rent out the robots. ‘These are the two main directions that we are looking at,’ said Dr De Schutter.

Homing in on litter hotspots

Another team is also developing a robotic system to tackle garbage on the seafloor as part of the Maelstrom project. Their first step, however, is to identify underwater hotspots where litter accumulates, so that they know where to deploy the system. Different factors, such as water currents, the speed at which a particular discarded item sinks, and underwater features such as canyons, all affect where litter will pool. ‘We are developing a mathematical model that can predict where the litter will end up,’ said Dr Madricardo.

Their robotic system, which is being tested near Venice, consists of a floating platform with eight cables connected to a mobile robot. The robot moves around on the seafloor beneath the platform and collects waste items in a box, using a gripper, hook or suction device depending on the size of the litter. Its position and orientation are controlled by adjusting the length and tension of the cables, and it will initially be operated by a human on the platform. Using artificial intelligence, however, the robot will learn to recognise different objects and will eventually be able to function independently.

Repurposing underwater litter

Dr Madricardo and her colleagues are also aiming to recycle all the litter that is picked up. A second robot will be tasked with sorting through the retrieved waste and classifying it by material, such as organic material, plastic or textiles. The project is then teaming up with industrial partners involved in different types of recycling, from plastic and chemical recycling to fibreglass, to transform what has been recovered.

Dirty and mixed waste plastics are difficult to recycle, so the team used a portable pyrolysis plant developed under the earlier marGnet project to turn waste plastic into fuel to power their removal technology. This fits with the EU’s goal to move towards a circular economy, where existing products and materials are repurposed for as long as possible, as part of the European Green Deal and Plastics Strategy. ‘We want to demonstrate that you can really try to recycle everything, which is not easy,’ said Dr Madricardo.

Harnessing bubbles to clean up rivers

Dr Madricardo and her colleagues are also developing a second technology focussed on removing litter floating in rivers so that it can be intercepted before it reaches the sea. A curtain of bubbles, called a Bubble Barrier, will be created by pumping air through a perforated tube placed on the bottom of a river, which produces an upwards current to direct litter towards the surface and eventually to the banks where it is collected.

The system has been tested in canals in the Netherlands and is currently being trialled in a river north of Porto in Portugal, where it is expected to be implemented in June. ‘It’s a simple idea that does not have an impact on (boat) navigation,’ said Dr Madricardo. ‘We believe it will not have a negative impact on fauna either, but we will check that.’

Although new technologies will help tackle underwater litter, Dr Madricardo and her team are also aiming to reduce the amount of waste that ends up in water bodies in the first place. The Maelstrom project therefore involves outreach efforts, such as organised coastal clean-up campaigns, to inform and engage citizens about what they can do to limit marine litter. ‘We really believe that a change (in society) is needed,’ said Dr Madricardo. ‘There are technologies (available) but we also need to make a collective effort to solve this problem.’

The research in this article was funded by the EU.