Autonomous AI agents – once a sci-fi concept – are rapidly becoming a mainstream reality. These agents don’t just chat; they plan, reason, and act across digital environments to achieve user goals independently. In 2025, the race to build these agents is in full swing, with tech giants and nimble startups alike unveiling platforms that promise a new paradigm for how we interact with software.

From Chatbots to Agents

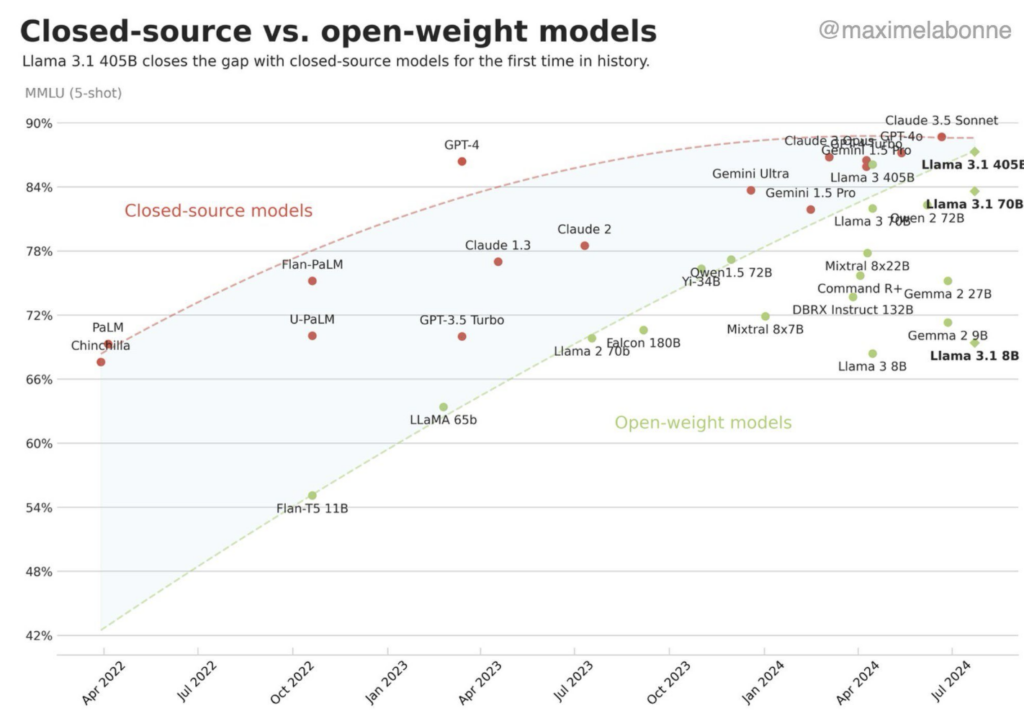

The rise of large language models (LLMs) like GPT-4 and Claude set the stage for a shift in how AI systems interact with users. But the current wave of innovation isn’t about smarter chat – it’s about action. AI agents can navigate websites, manipulate documents, send emails, write code, or coordinate workflows, all with minimal user oversight. The concept isn’t new, but the execution is becoming increasingly sophisticated. Many tech leaders now see agents as a key step toward artificial general intelligence (AGI), and OpenAI’s Sam Altman has predicted that in 2025 the first AI agents may “join the workforce.”

OpenAI: The Builder’s Toolkit

In early 2025, OpenAI launched a set of agent-building tools. Its Agents SDK and Responses API let developers create GPT-powered agents that use tools, call functions, and handle multi-step tasks autonomously. ChatGPT’s new Deep Research mode turns the assistant into a self-directed analyst that can search, analyze, and synthesize hundreds of online sources into a detailed, cited report.

Perhaps most impressive is Operator, a browser agent that can interact with live websites on the user’s behalf. It fills out forms, clicks through interfaces, and completes transactions, effectively automating routine browser workflows.

Google: Agents at Enterprise Scale

Google’s Agentspace is a hub for building and deploying AI agents in enterprise environments. Powered by Gemini models, it includes Google-built agents and tools such as Deep Research, Idea Generation, and NotebookLM Plus, which automate reporting, strategy work, and data synthesis while respecting enterprise access controls. Users can also create custom agents without writing code through a conversational interface, which makes workflow automation accessible even to non-technical staff.

Google is also pushing for agent interoperability with its Agent2Agent (A2A) protocol, which lets agents built on different platforms communicate and collaborate securely. More than 50 partners have signed on to the standard, including technology companies such as Atlassian, Cohere, Intuit, LangChain, MongoDB, PayPal, Salesforce, and SAP, and service providers such as Accenture, BCG, Capgemini, Deloitte, Infosys, KPMG, McKinsey, and PwC. A2A lets developers build agents that can interact with any other agent that implements the protocol, while giving users the flexibility to mix and match agents from different providers.
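To make cross-platform delegation a little more concrete, here is a minimal sketch of agents advertising their skills and a registry routing a task to whichever agent claims the needed skill. It is not the A2A wire format: the class name `AgentCard` borrows A2A’s term for an agent’s capability description, but the `AgentCard`, `Agent`, and `Registry` classes, their fields, and the message dict keys are hypothetical simplifications, and the “agents” are local Python functions standing in for remote services.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentCard:
    """Hypothetical capability description an agent publishes to others."""
    name: str
    skills: set[str]


@dataclass
class Agent:
    card: AgentCard
    handler: Callable[[str], str]  # stand-in for a remote agent's work

    def handle(self, message: dict) -> dict:
        # Reply in the same envelope, echoing the task id so the caller can match it.
        return {
            "task_id": message["task_id"],
            "sender": self.card.name,
            "content": self.handler(message["content"]),
        }


@dataclass
class Registry:
    agents: list[Agent] = field(default_factory=list)

    def register(self, agent: Agent) -> None:
        self.agents.append(agent)

    def delegate(self, skill: str, task_id: str, content: str) -> dict:
        # Route the task to the first agent whose card advertises the skill.
        for agent in self.agents:
            if skill in agent.card.skills:
                return agent.handle({"task_id": task_id, "content": content})
        raise LookupError(f"no agent advertises skill {skill!r}")


registry = Registry()
registry.register(Agent(AgentCard("summarizer", {"summarize"}),
                        lambda text: text.split(".")[0] + "."))
registry.register(Agent(AgentCard("shouter", {"uppercase"}), str.upper))

print(registry.delegate("summarize", "task-1", "Agents act. They also plan."))
# {'task_id': 'task-1', 'sender': 'summarizer', 'content': 'Agents act.'}
```

The design point is that the caller asks for a skill rather than a specific agent, which is what allows agents from different providers to be swapped in and out.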

Microsoft: Agents Inside Office

Microsoft is embedding agents directly into its Microsoft 365 Copilot suite. While Copilot is an AI-powered assistant designed to support tasks, deliver insights, and enhance productivity, agents are purpose-built AI tools tailored to manage specific processes or address particular business challenges.

Microsoft’s two new Copilot agents, Researcher and Analyst, work inside Microsoft 365 Copilot and can autonomously generate reports, analyze datasets, and summarize insights, with secure, compliant access to your work data (e.g., emails, meetings, files, and chats) and the web.

Researcher helps users tackle complex, multi-step research tasks at work by combining OpenAI’s deep research model with Microsoft 365 Copilot’s orchestration and deep search capabilities. Through connectors, it can also pull in third-party data from sources such as Salesforce, ServiceNow, and Confluence, bringing that information into the Microsoft 365 environment for more comprehensive insights.

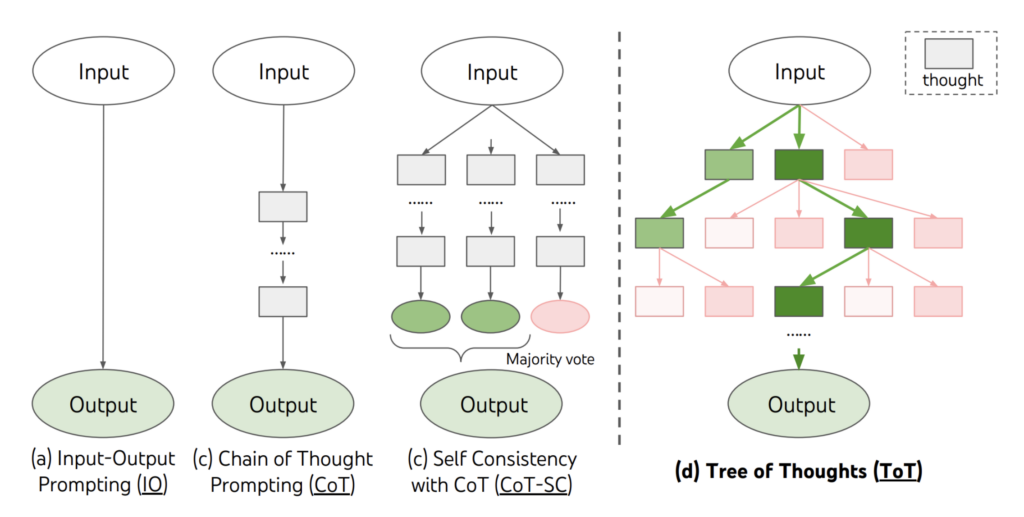

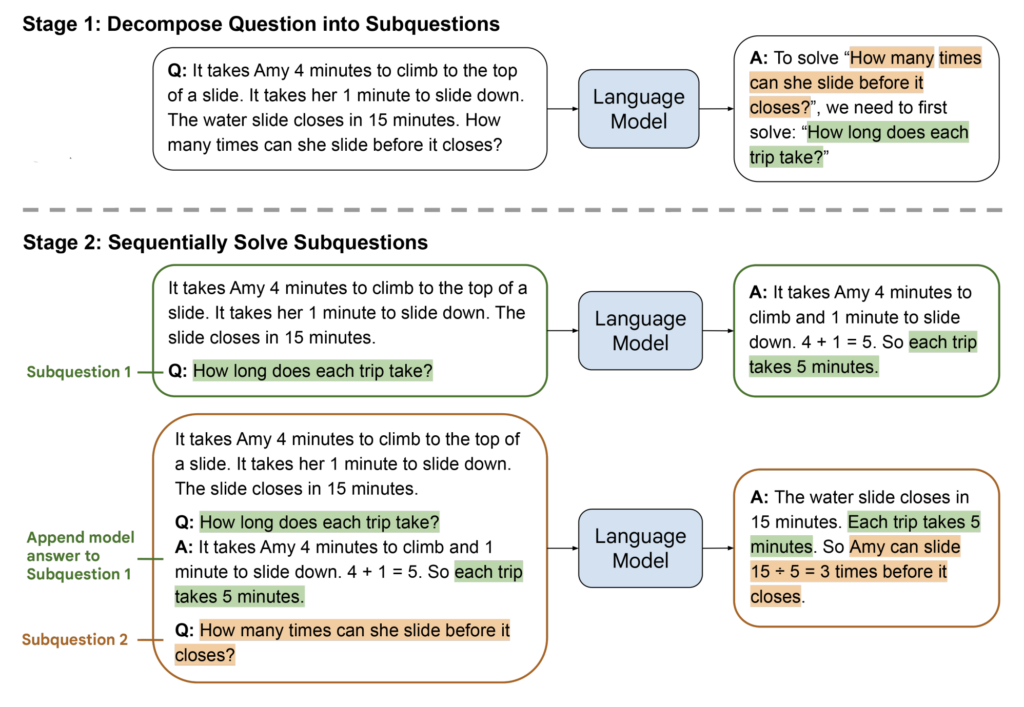

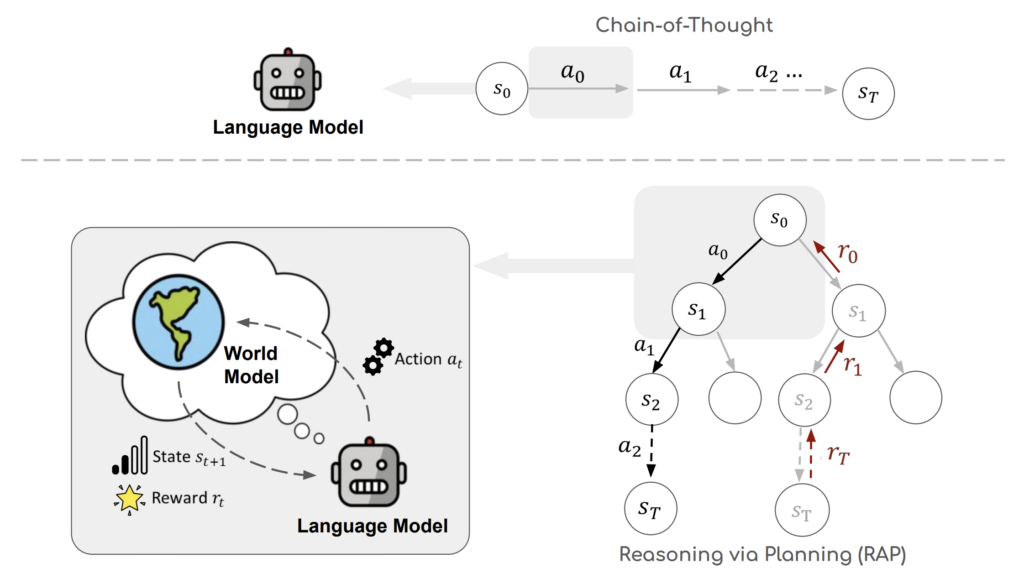

Analyst is designed to work like a skilled data scientist, turning raw data into actionable insights within minutes. Powered by OpenAI’s o3-mini reasoning model and optimized for advanced workplace data analysis, it applies chain-of-thought reasoning to break problems down step by step, refining its approach as needed before delivering its analysis.

Anthropic: Reliable and Aligned Agents

Anthropic’s Claude models are known for strong reasoning and a focus on safety and alignment, making them a popular choice for developers building safe and effective AI agents.

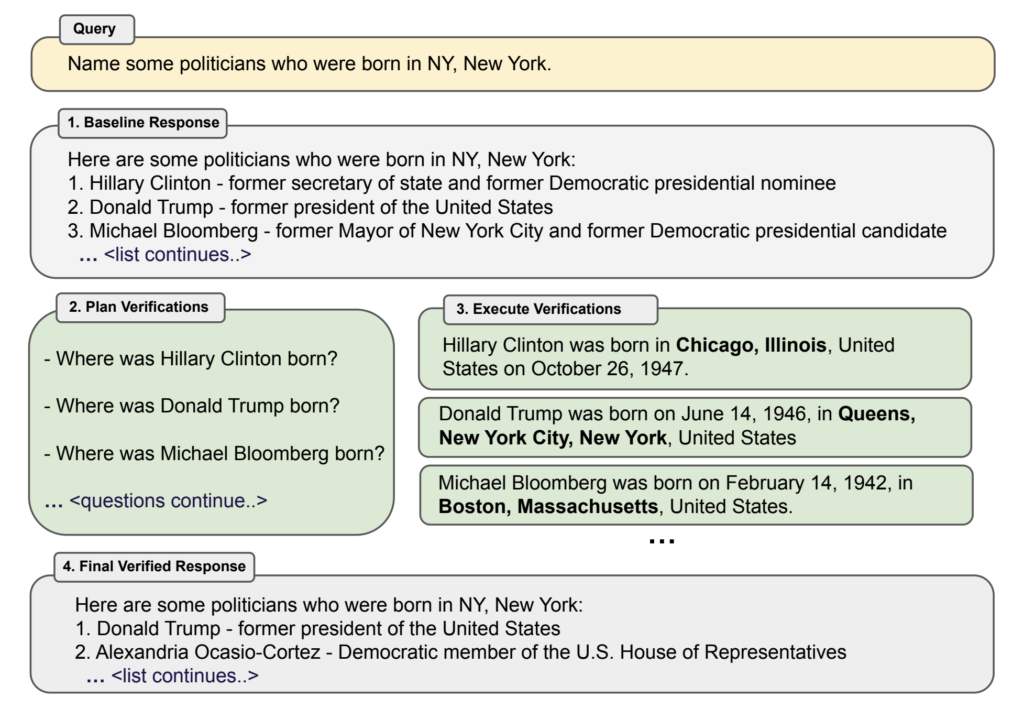

One of the standout capabilities of Claude is its tool-use functionality. Anthropic supports structured function calling, allowing Claude to interact with APIs, retrieve external data, and manipulate content through calls to external tools. Developers can define functions and expose them to Claude, which then chooses when and how to call them based on user intent. This makes it possible to build Claude-powered agents that can, for instance, fetch live data, trigger workflows in third-party apps, or even write and execute code snippets.
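The tool-use pattern described above boils down to a loop: the developer declares tools, the model either answers or asks for a tool, and the application runs the tool and feeds the result back. Below is a minimal sketch of that loop. The tool definition follows the `name` / `description` / `input_schema` shape from Anthropic’s tool-use documentation, but everything else is an assumption for illustration: `get_weather` is a hypothetical tool returning fixed data, `fake_model` is a hard-coded stub in place of a real Claude call, and the simplified message dicts (including the `"tool"` role) are not the API’s actual message format.

```python
import json
from typing import Any, Callable

# Tool definitions in the name / description / input_schema shape that
# Anthropic's tool-use API documents. The tool itself is hypothetical.
TOOLS: list[dict[str, Any]] = [
    {
        "name": "get_weather",
        "description": "Return the current weather for a city.",
        "input_schema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }
]


def get_weather(city: str) -> str:
    # Hypothetical stand-in for a live data API; returns fixed data.
    return json.dumps({"city": city, "temp_c": 21})


IMPLEMENTATIONS: dict[str, Callable[..., str]] = {"get_weather": get_weather}


def fake_model(messages: list[dict[str, Any]], tools: list[dict[str, Any]]) -> dict[str, Any]:
    """Stub for the LLM: requests a tool on the first turn, then answers."""
    last = messages[-1]
    if last["role"] == "user":
        return {"type": "tool_use", "name": tools[0]["name"], "input": {"city": "Paris"}}
    data = json.loads(last["content"])
    return {"type": "text", "text": f"It is {data['temp_c']} C in {data['city']}."}


def run_agent(prompt: str, max_steps: int = 5) -> str:
    messages: list[dict[str, Any]] = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        reply = fake_model(messages, TOOLS)
        if reply["type"] == "text":
            return reply["text"]
        # The model chose a tool: record its request, run the tool, feed the result back.
        messages.append({"role": "assistant", "content": reply})
        tool = IMPLEMENTATIONS.get(reply["name"])
        if tool is None:
            raise ValueError(f"model requested unknown tool {reply['name']!r}")
        messages.append({"role": "tool", "content": tool(**reply["input"])})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("What's the weather in Paris?"))  # It is 21 C in Paris.
```

In a real integration, `fake_model` would be replaced by an API call that receives `TOOLS`, and tool results would be returned in the provider’s own message format. The `max_steps` cap guards against a model that keeps requesting tools indefinitely.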

Another implementation of Claude’s agentic capabilities is Claude Code, a coding-focused agent that works alongside developers from the terminal. Claude Code can autonomously generate, debug, and modify code: it reads and edits files across a codebase and runs commands to carry out tasks like writing functions, fixing bugs, or refactoring logic. By combining tool use, context retention, and code execution, it functions like a highly capable AI pair programmer.

Claude continues to power agents across multiple enterprise partners, including integrations with Slack and Databricks, further solidifying its role as a dependable core for agentic applications.

Amazon: Nova Act and the Legacy of Adept

Amazon joined the agent arms race with Nova Act, a model and SDK (released as a research preview) that let developers build agents capable of performing tasks within a web browser. These agents can execute complex, multi-step workflows, such as submitting out-of-office requests, scheduling calendar events, and managing emails.

The SDK lets developers combine detailed instructions, API calls, and direct browser manipulation through Playwright, which improves the reliability and flexibility of the agents. This focus on dependable, composable actions aims to reduce the need for constant human supervision.

Nova Act is likely a continuation of Adept’s work on ACT-1, as several members of that team (including David Luan, Adept’s former CEO) now lead the project.

Manus: Going Full Autonomy

Among startups, Chinese company Monica made headlines with its Manus agent. Launched in March 2025, Manus claims to complete full tasks – like planning a trip, building a website, or comparing insurance options – end-to-end without user intervention.

Unlike simpler automation tools, Manus dynamically plans and executes multi-step tasks by combining web browsing, tool use, and real-time reasoning. Monica reports that Manus scored over 86% on Level 1 tasks of the GAIA benchmark.

Users report that while Manus shows promise, it isn’t without flaws: it sometimes misunderstands instructions, makes incorrect assumptions, or takes shortcuts to finish tasks more quickly. However, it stands out for its clear explanations and adaptability, and it improves significantly when given detailed guidance or feedback.

Other Players and Frameworks Driving Agent Innovation

Salesforce’s Agentforce platform is another notable entrant in the space. It automates CRM workflows by embedding AI agents within the Salesforce ecosystem.

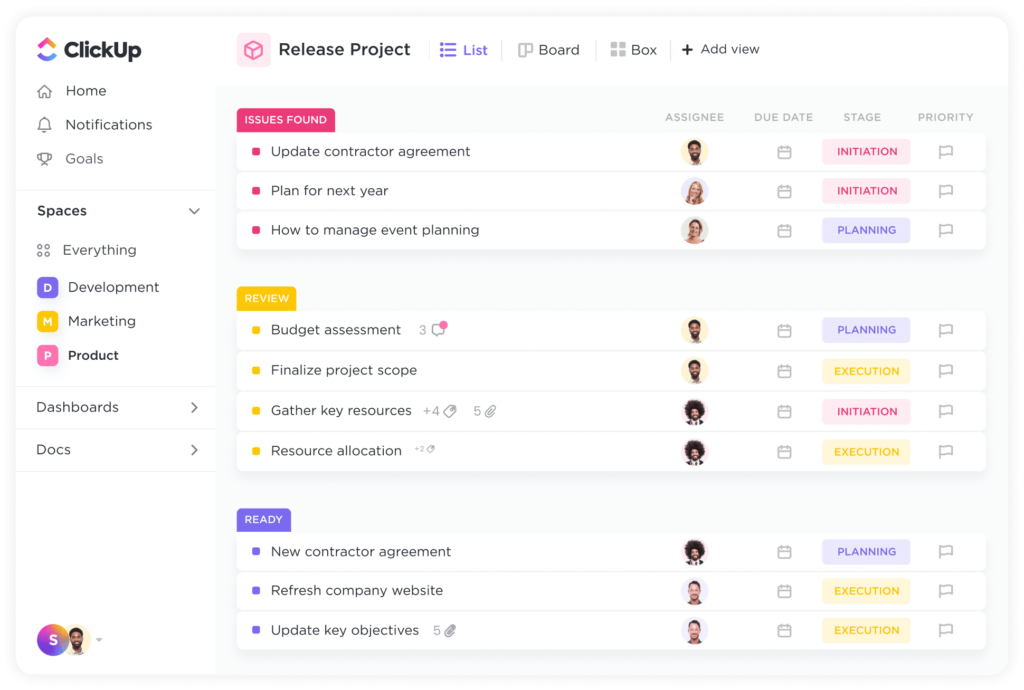

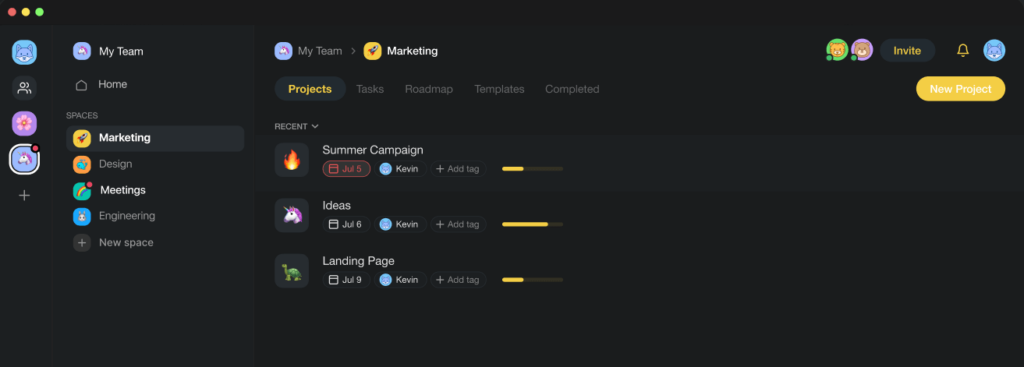

Open-source frameworks such as AutoGPT and SuperAGI continue to lower the barrier for developers seeking to build agents. These and similar frameworks turn language models into autonomous agents by giving them the ability to break complex tasks into steps, use external tools, and maintain memory between operations. Structured loops of planning, execution, and reflection let the AI work toward multi-stage goals with minimal human supervision.
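The planning, execution, and reflection loop these frameworks build around a language model can be sketched in a few functions. This is a generic illustration under stated assumptions, not the code of AutoGPT, SuperAGI, or any other framework: `plan`, `execute`, and `reflect` are hypothetical rule-based stubs where a real agent would call an LLM or external tools, and `execute` deliberately fails its first drafting attempt so the retry path is visible.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    """Scratchpad that persists across steps of the loop."""
    notes: list[str] = field(default_factory=list)


def plan(goal: str) -> list[str]:
    # Hypothetical planner; a real agent would ask an LLM to decompose the goal.
    return [f"research {goal}", f"draft {goal}", f"review {goal}"]


def execute(step: str, attempt: int, memory: Memory) -> str:
    # Hypothetical executor; it fails the first drafting attempt on purpose
    # so the retry path below is exercised.
    if step.startswith("draft") and attempt == 0:
        result = "error: timeout"
    else:
        result = f"done: {step}"
    memory.notes.append(result)
    return result


def reflect(result: str) -> bool:
    # Hypothetical self-check; a real agent might ask the LLM to critique the result.
    return result.startswith("done")


def run(goal: str, max_retries: int = 2) -> Memory:
    memory = Memory()
    for step in plan(goal):
        for attempt in range(max_retries + 1):
            if reflect(execute(step, attempt, memory)):
                break
        else:  # no attempt passed the self-check
            memory.notes.append(f"gave up: {step}")
    return memory


for note in run("a market report").notes:
    print(note)
```

Running this prints the research step, the failed first draft (`error: timeout`), the successful retry, and the review step. The bounded retry count is what keeps an unsupervised loop from running forever on a step it cannot complete.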

Multi-agent frameworks such as crewAI and AutoGen are also gaining traction. crewAI lets developers coordinate multiple AI agents assigned to different roles within a shared crew, enabling collaborative problem-solving on complex tasks. AutoGen, developed by Microsoft Research, orchestrates conversations between agents with specialized functions, enabling more scalable and modular workflows. Both aim to bring structured multi-agent systems into mainstream application development.
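The core idea of role-based collaboration can be shown with a sequential hand-off, where each role works on the previous role’s output. This is a minimal sketch, not the API of crewAI or AutoGen: `RoleAgent` and `run_crew` are hypothetical names, and each role’s `act` function is a plain lambda standing in for an LLM call with a role-specific prompt.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RoleAgent:
    role: str
    act: Callable[[str], str]  # stand-in for an LLM call with a role-specific prompt


def run_crew(agents: list[RoleAgent], task: str) -> list[tuple[str, str]]:
    """Sequential hand-off: each role works on the previous role's output."""
    transcript: list[tuple[str, str]] = []
    current = task
    for agent in agents:
        current = agent.act(current)
        transcript.append((agent.role, current))
    return transcript


crew = [
    RoleAgent("researcher", lambda t: f"facts about {t}"),
    RoleAgent("writer", lambda t: f"draft using {t}"),
    RoleAgent("editor", lambda t: t.replace("draft", "final draft")),
]
for role, output in run_crew(crew, "AI agents"):
    print(f"{role}: {output}")
```

A fixed sequence is the simplest orchestration strategy. Conversation-style frameworks instead let agents reply to one another over several turns, with a manager or termination rule deciding when the exchange ends.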

What Comes Next?

Three trends define the next phase of agent development:

- Enterprise Integration: Agents are being embedded in productivity tools, security software, and cloud environments.

- Collaboration & Communication: Multi-agent systems are beginning to work in teams, with protocols like A2A enabling inter-agent dialogue.

- Autonomy with Oversight: While agents act more independently, transparency, logging, and permissioning systems are being built in to ensure control and alignment.
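The oversight mechanisms in the last trend (permissioning plus logging) can be illustrated with a small gate that sits between an agent and its tools. This is a minimal sketch under stated assumptions: `GatedExecutor`, `PermissionDenied`, and the tool names are hypothetical, and the `approve` callback stands in for whatever human-in-the-loop review a real product would use. It relies only on Python’s standard `logging` module.

```python
import logging
from typing import Any, Callable

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("agent.audit")


class PermissionDenied(Exception):
    pass


class GatedExecutor:
    """Runs an agent's tool calls only when policy allows; logs every attempt."""

    def __init__(self, allowed: set[str], needs_approval: set[str],
                 approve: Callable[[str, dict], bool]) -> None:
        self.allowed = allowed                # tools the agent may use at all
        self.needs_approval = needs_approval  # tools that also need a reviewer's OK
        self.approve = approve                # e.g. a human-in-the-loop prompt
        self.audit: list[tuple[str, str]] = []

    def call(self, tool_name: str, fn: Callable[..., Any], **kwargs: Any) -> Any:
        if tool_name not in self.allowed:
            self._record(tool_name, "denied")
            raise PermissionDenied(tool_name)
        if tool_name in self.needs_approval and not self.approve(tool_name, kwargs):
            self._record(tool_name, "rejected by reviewer")
            raise PermissionDenied(tool_name)
        self._record(tool_name, "allowed")  # logged before the tool runs
        return fn(**kwargs)

    def _record(self, tool_name: str, outcome: str) -> None:
        self.audit.append((tool_name, outcome))
        log.info("tool=%s outcome=%s", tool_name, outcome)


executor = GatedExecutor(allowed={"search", "send_email"},
                         needs_approval={"send_email"},
                         approve=lambda name, args: False)  # reviewer declines
print(executor.call("search", lambda q: f"results for {q}", q="agents"))
for name in ("send_email", "delete_files"):
    try:
        executor.call(name, lambda **kw: None)
    except PermissionDenied as exc:
        print("blocked:", exc)
print(executor.audit)
```

Here the search runs, the email is blocked because the reviewer declines, and the file deletion is blocked outright because it was never on the allow list. Every decision lands in both the log and the `audit` list.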

Looking ahead, we can expect agents with longer-term memory, improved reasoning, and even the ability to interface with physical systems (like robots). As autonomous agents evolve from assistants to collaborators, they could reshape knowledge work, software usage, and human-computer interaction at large.

The age of passive chatbots is over. Autonomous agents are here – and they’re ready to work.


The post The AI Agent Race Heats Up: Who’s Leading in 2025? appeared first on TOPBOTS.