Presentation of the best paper award at the RoboCup 2025 symposium.

An important aspect of autonomous soccer-playing robots concerns accurate detection of the ball. This is the focus of work by Can Lin, Daniele Affinita, Marco Zimmatore, Daniele Nardi, Domenico Bloisi, and Vincenzo Suriani, which won the best paper award at the recent RoboCup symposium. The symposium takes place alongside the annual RoboCup competition, which this year was held in Salvador, Brazil. We caught up with some of the authors to find out more about the work, how their method can be transferred to applications beyond RoboCup, and their future plans for the competition.

Could you start by giving us a brief description of the problem that you were trying to solve in your paper “Self-supervised Feature Extraction for Enhanced Ball Detection on Soccer Robots”?

Daniele Affinita: The main challenge we faced was that deep learning generally requires a large amount of labeled data. This is not a major problem for common tasks that have already been studied, because you can usually find labeled datasets online. But when the task is highly specific, like in RoboCup, you need to collect and label the data yourself. That means gathering the data and manually annotating it before you can even start applying deep learning. This process is not scalable and demands a significant human effort.

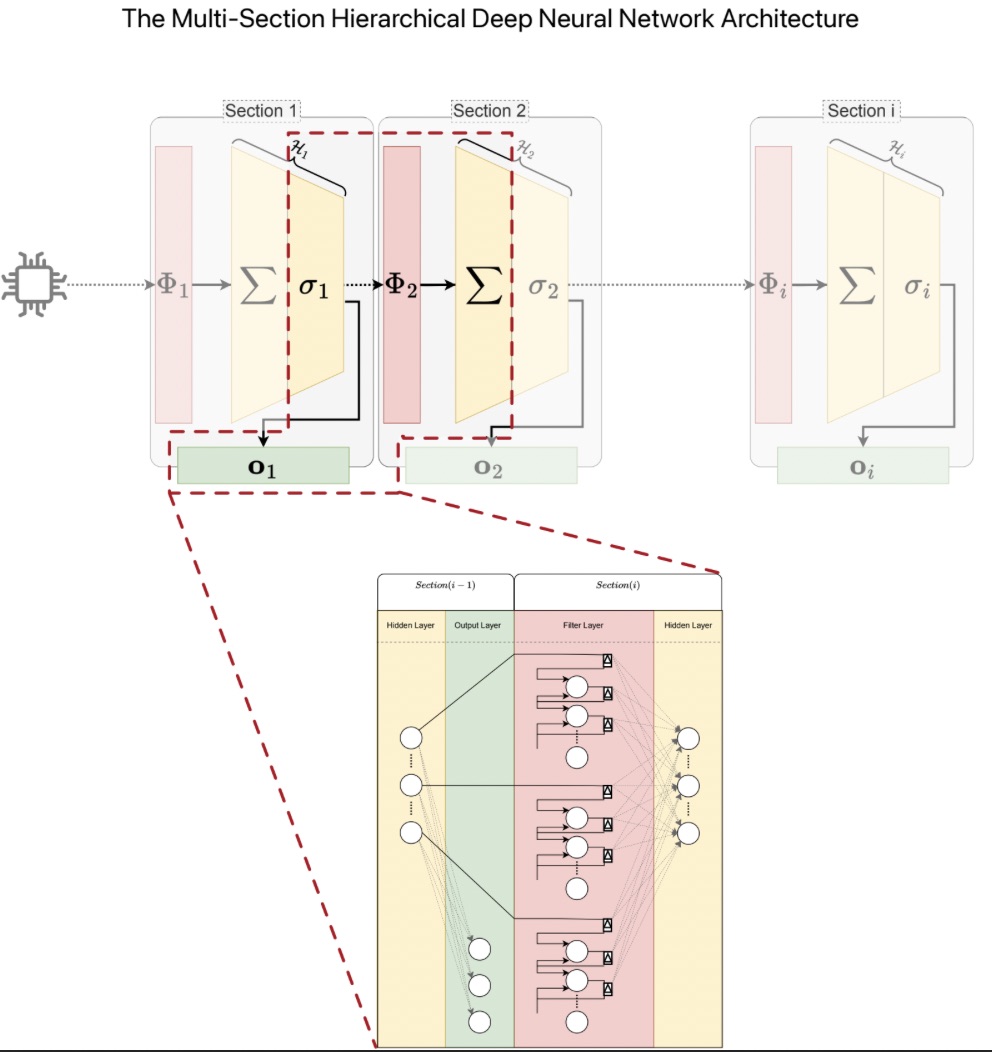

The idea behind our paper was to reduce this human effort. We approached the problem through self-supervised learning, which aims to learn useful representations of the data. After all, deep learning is essentially about learning latent representations from the available data.

Could you tell us a bit more about your self-supervised learning framework and how you went about developing it?

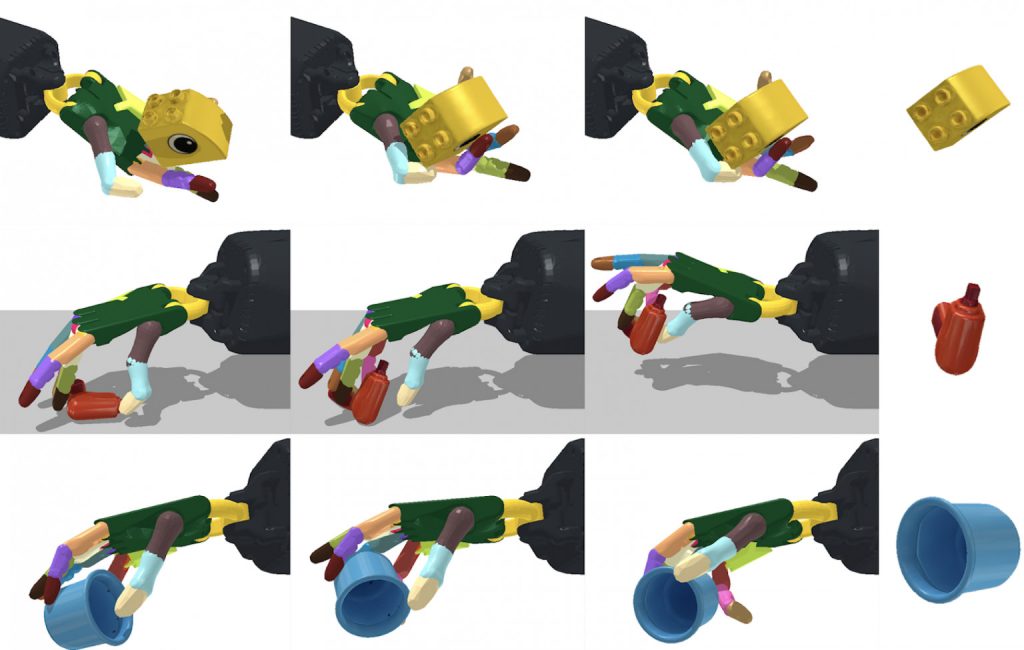

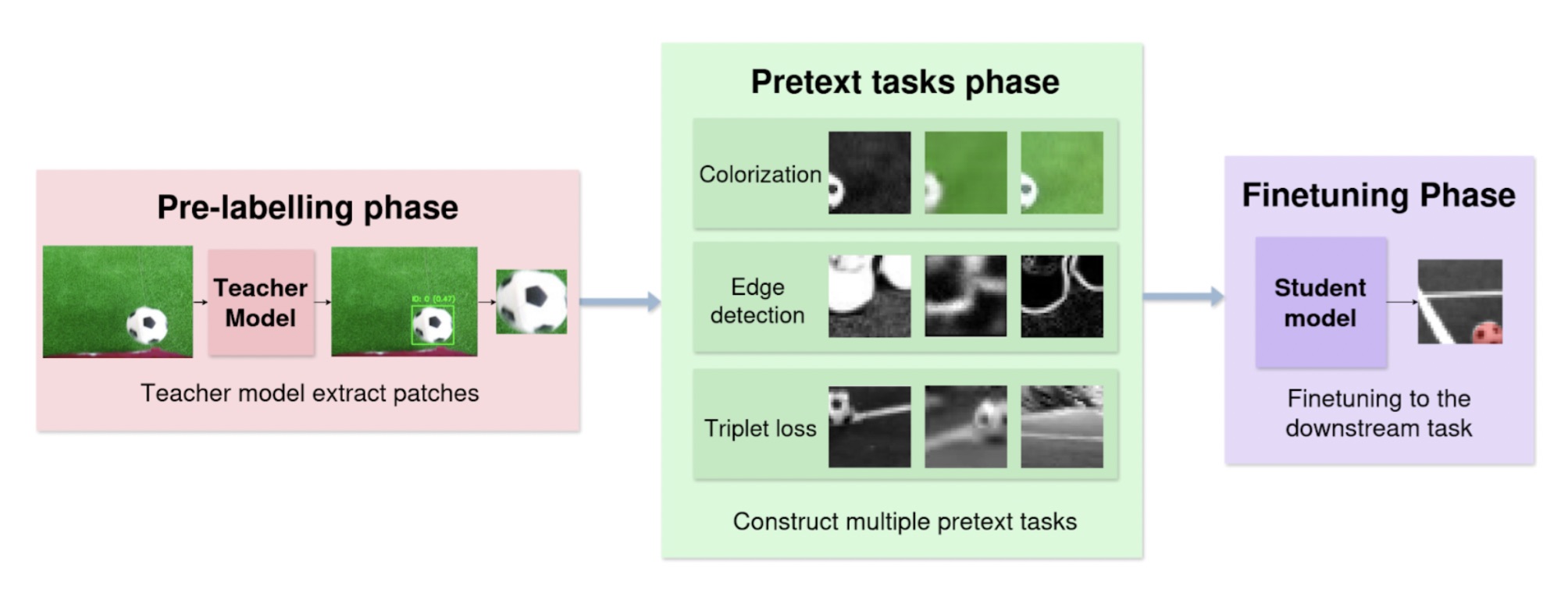

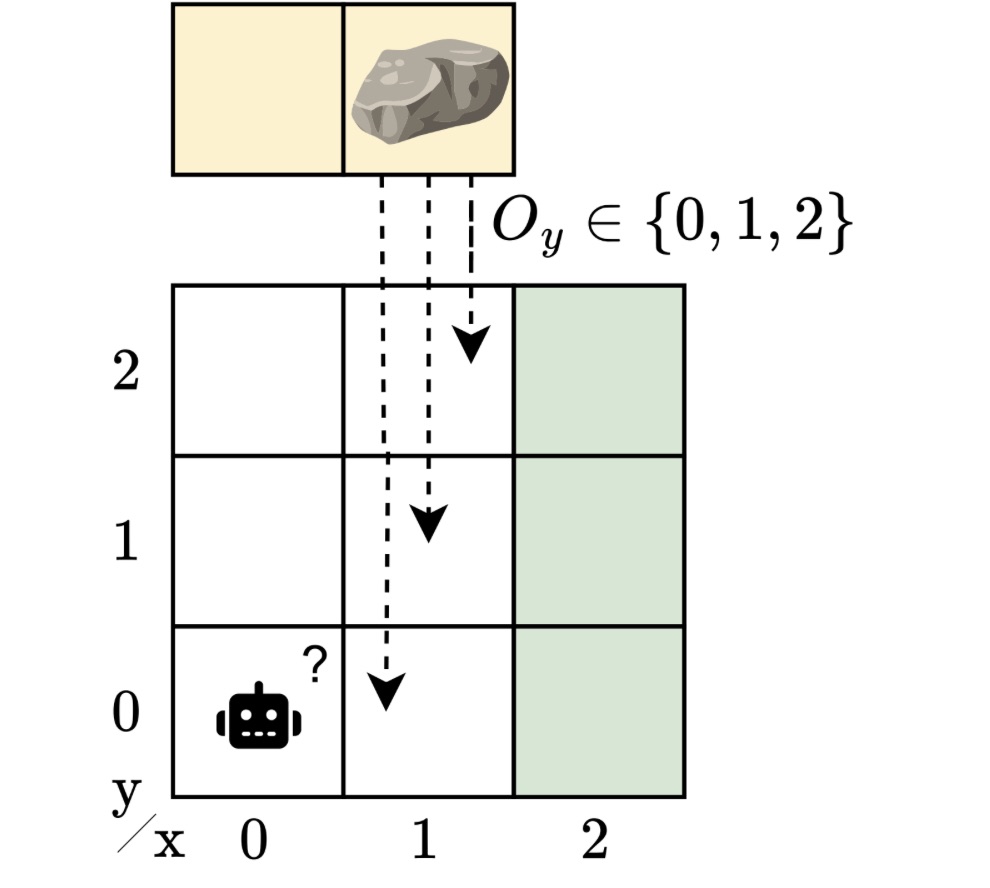

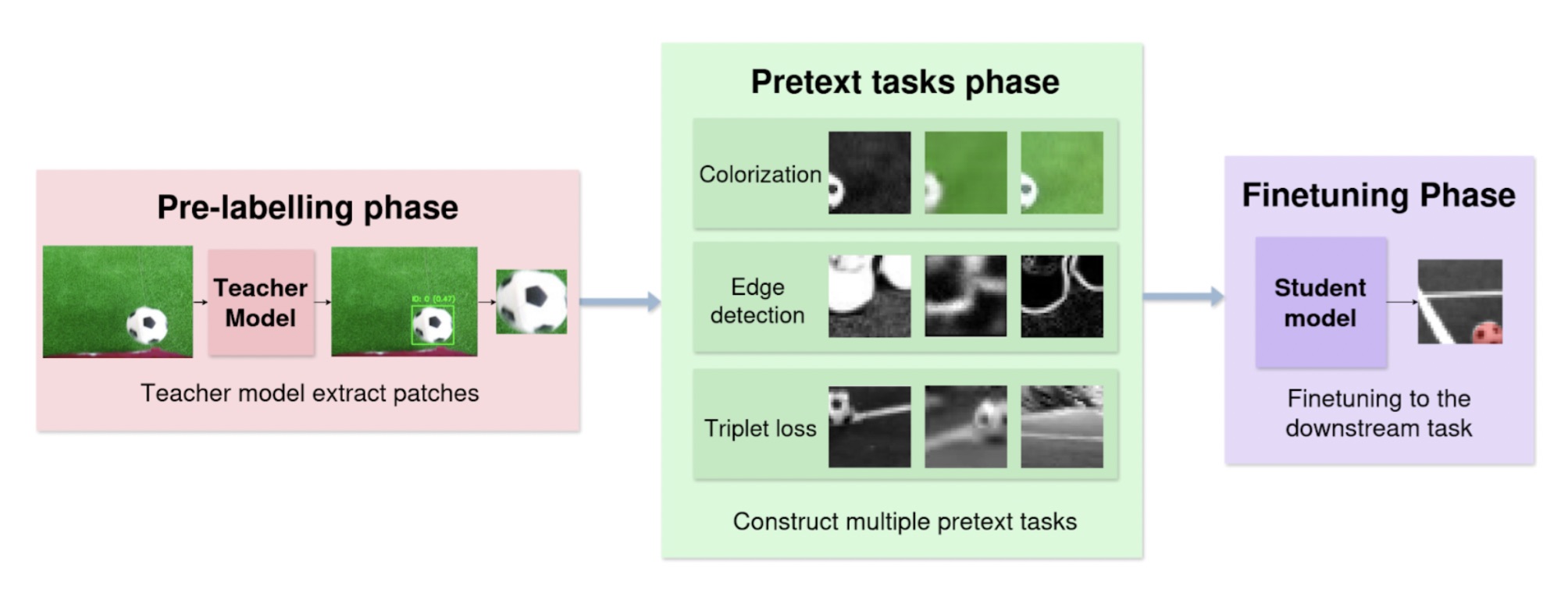

Daniele: First of all, let me introduce what self-supervised learning is. It is a way of learning the structure of the data without having access to labels. This is usually done through what we call pretext tasks. These are tasks that don’t require explicit labels, but instead exploit the structure of the data. For example, in our case we worked with images. You can randomly mask some patches and train the model to predict the missing parts. By doing so, the model is forced to learn meaningful features from the data.
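To make the masking pretext task concrete, here is a minimal NumPy sketch: random square patches are hidden, and a reconstruction loss is computed only over the hidden patches. It is a generic masked-image example, not the SPQR training code. The 16-pixel patch size, the 50% masking ratio and the function names are illustrative assumptions.

```python
import numpy as np


def mask_random_patches(image, patch_size=16, mask_ratio=0.5, rng=None):
    """Hide a random subset of non-overlapping square patches by setting them to 0.

    Returns the masked copy of the image and a boolean (rows, cols) grid that is
    True for every hidden patch. Pixels beyond the last full patch are never masked.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]
    rows, cols = h // patch_size, w // patch_size
    n_patches = rows * cols
    n_hidden = int(round(mask_ratio * n_patches))

    # Pick the patches to hide, without replacement.
    hidden = rng.choice(n_patches, size=n_hidden, replace=False)
    mask = np.zeros(n_patches, dtype=bool)
    mask[hidden] = True
    mask = mask.reshape(rows, cols)

    masked = image.copy()
    for r, c in zip(*np.nonzero(mask)):
        masked[r * patch_size:(r + 1) * patch_size,
               c * patch_size:(c + 1) * patch_size] = 0
    return masked, mask


def masked_reconstruction_loss(prediction, target, mask, patch_size=16):
    """Mean squared error over the hidden patches only (the pretext-task loss)."""
    # Expand the patch grid to a per-pixel mask.
    pixel_mask = mask.repeat(patch_size, axis=0).repeat(patch_size, axis=1)
    h, w = pixel_mask.shape
    diff = (prediction[:h, :w].astype(float) - target[:h, :w].astype(float)) ** 2
    return float(diff[pixel_mask].mean())


rng = np.random.default_rng(0)
image = rng.random((64, 64))  # stand-in for a camera frame
masked, mask = mask_random_patches(image, rng=rng)
# A model would be trained to predict `image` from `masked`;
# a perfect prediction gives zero loss.
print(int(mask.sum()))                                 # 8 (of 16 patches hidden)
print(masked_reconstruction_loss(image, image, mask))  # 0.0
```

Because no label is involved, the image itself supplies the training signal. To reconstruct the hidden patches, the network has to learn what balls, lines and field textures look like.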

In our paper, we enriched the data by using not only raw images but also external guidance. This came from a larger model which we refer to as the teacher. This model was trained on a different task which is more general than the target task we aimed for. This way the larger model can provide guidance (an external signal) that helps the self-supervision to focus more on the specific task we care about.

In our case, we wanted to predict a tight circle around the ball. To guide this, we used an external pretrained model (YOLO) for object detection, which instead predicts a loose bounding box around the ball. We can arguably say that the bounding box, a rectangle, is more general than a circle. So in this sense, we were trying to use external guidance that doesn’t solve exactly the underlying task.
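One simple way a detector's box could be turned into circle guidance is sketched below. It assumes the circle inscribed in the box, centred on the box with a radius of half the shorter side. This is an illustrative assumption, not the conversion used in the paper. Because the teacher's box is loose, such a circle is only a coarse target that the self-supervised stage would still need to tighten. The `Box`, `Circle`, `box_to_circle` and `circle_mask` names are hypothetical.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Box:
    """Axis-aligned bounding box in pixels, e.g. from a YOLO-style detector (assumed format)."""
    x1: float
    y1: float
    x2: float
    y2: float


@dataclass
class Circle:
    cx: float
    cy: float
    r: float


def box_to_circle(box: Box) -> Circle:
    """Coarse circle estimate: the circle inscribed in the box (assumption)."""
    w = box.x2 - box.x1
    h = box.y2 - box.y1
    return Circle(cx=box.x1 + w / 2, cy=box.y1 + h / 2, r=min(w, h) / 2)


def circle_mask(circle: Circle, height: int, width: int) -> np.ndarray:
    """Boolean mask that is True where a pixel centre lies inside the circle."""
    ys, xs = np.mgrid[0:height, 0:width]
    return (xs + 0.5 - circle.cx) ** 2 + (ys + 0.5 - circle.cy) ** 2 <= circle.r ** 2


# Example: a 20 x 24 px box gives a circle of radius 10 centred on the box.
print(box_to_circle(Box(10, 20, 30, 44)))  # Circle(cx=20.0, cy=32.0, r=10.0)
```

The mask from `circle_mask` is one possible per-pixel form of the guidance signal. A rectangle carries less shape information than a circle, which is why it works only as a weaker, more general hint.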

Overview of the data preparation pipeline.

Overview of the data preparation pipeline.

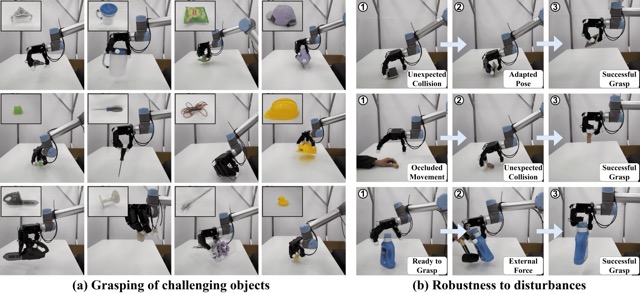

Were you able to test this model out at RoboCup 2025?

Daniele: Yes, we deployed it at RoboCup 2025 and showed great improvements over our previous benchmark, which was the model we used in 2024. In particular, we noticed that the final training requires much less data. The model was also more robust under different lighting conditions. The issue we had with previous models was that they were tailored for specific situations. But of course, all the venues are different, the lighting and the brightness are different, there might be shadows on the field. So it’s really important to have a reliable model and we really noticed a great improvement this year.

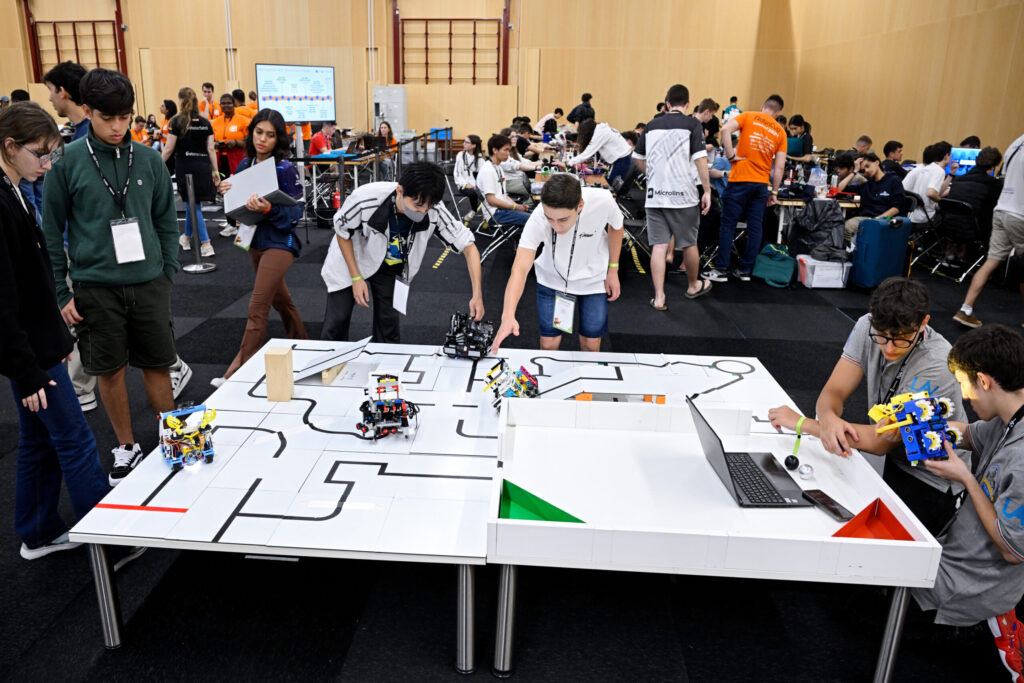

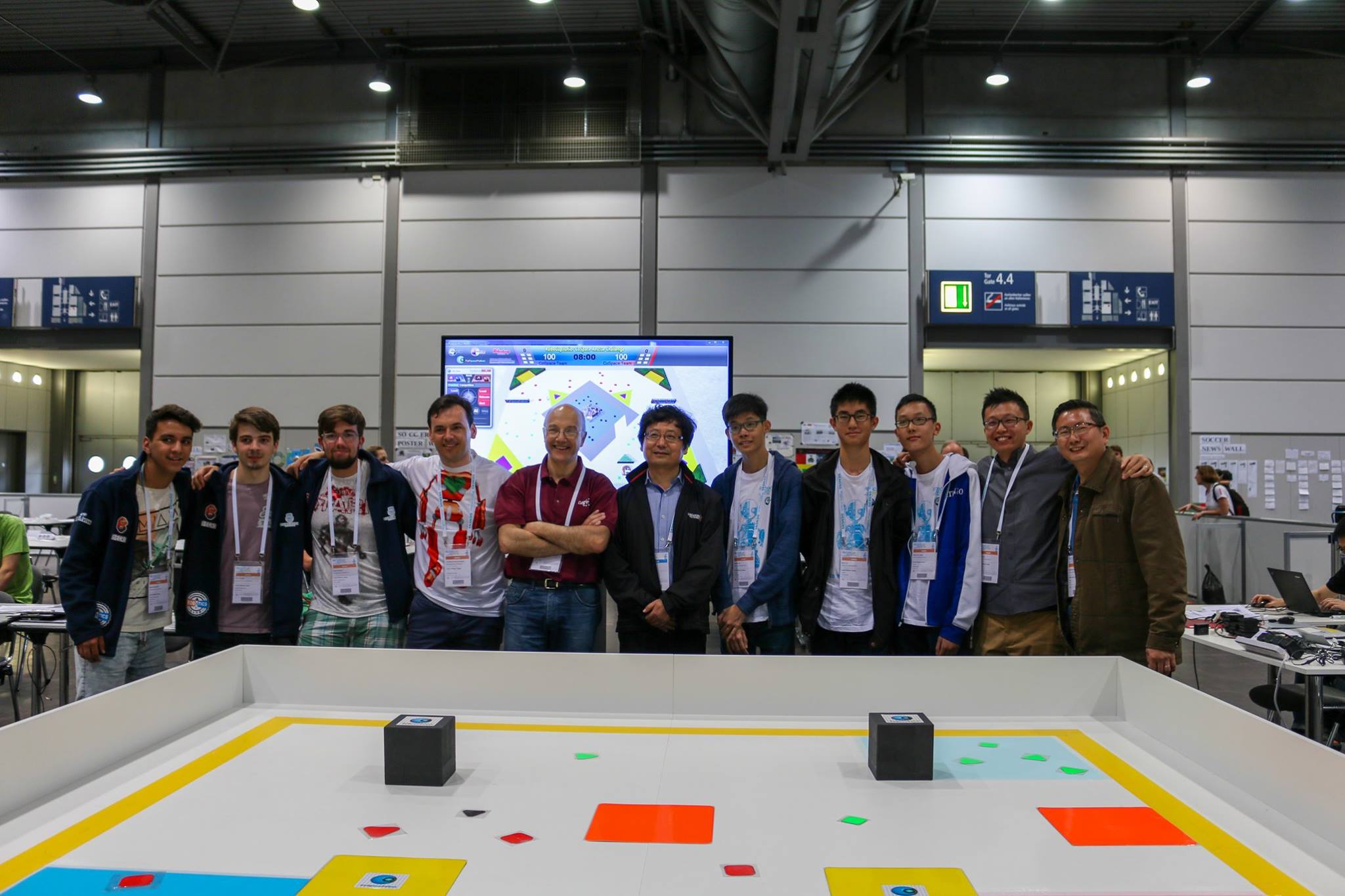

What’s your team name, and could you talk a bit about the competition and how it went?

Daniele: So our team is SPQR. We are from Rome, and we have been competing in RoboCup for a long time.

Domenico Bloisi: We started in 1998, so we are one of the oldest teams in RoboCup.

Daniele: Yeah, I wasn’t even born then! Our team started with the four-legged robots. Then the league shifted towards biped robots because they are more challenging: they require balance and, overall, it’s harder to walk on just two legs.

Our team has grown a lot in recent years. We have been following a very positive trend, going from 9th place in 2019 to 3rd place at the German Open 2025 and 4th place at RoboCup 2025. Our recent success has attracted more students to the team. So it’s kind of a loop: you win more, you attract more students, and you can work more on the challenges proposed by RoboCup.

SPQR team.

SPQR team.

Domenico: I want to add that also, from a research point of view, we have won three best paper awards in the last five years, and we have been proposing some new trends towards, for example, the use of LLMs for coding (as a robot’s behaviour generator under the supervision of a human coach). So we are trying to keep the open research field active in our team. We want to win the matches but we also want to solve the research problems that are bound together with the competition.

One of the important contributions of our paper is towards the use of our algorithms outside RoboCup. For example, we are trying to apply the ball detector in precision farming. We want to use the same approach to detect rounded fruits. This is something that is really important for us: to go beyond the context of RoboCup and to use RoboCup tools for new approaches in other fields. So if we lose a match, it’s not a big deal for us. We want our students, our team members, to be open-minded towards the use of RoboCup as a starting point for understanding teamwork and for understanding how to deal with strict deadlines. This is something that RoboCup can give us. We try to have a team that is ready for every type of challenge, not only within RoboCup, but also in other types of AI applications. Winning is not everything for us. We’d prefer to use our own code and not win, than win using code developed by others. This is not optimal for achieving first place, but we want to prepare our students for research outside of RoboCup.

You said that you’ve previously won two other best paper awards. What did those papers cover?

Domenico: So the last two best papers were kind of visionary papers. In one paper, we wanted to give an insight into how spectators could help the robots score. For example, if you cheer louder, the robots tend to kick the ball. This is not actually used in the competition now, but it points towards the 2050 challenge. We want to imagine what things will be like 10 years from now.

The other paper was called “play everywhere”, so you can, for example, play with different types of ball, you can play outside, you can even play without a specific goal, you can play using Coca-Cola cans as goalposts. So the robot has to have a general approach that is not related to the specific field used in RoboCup. This is in contrast to other teams that are very specific. We have a different approach and this is something that makes it harder for us to win the competition. However, we don’t want to win the competition, we want to achieve this goal of having, in 2050, this match between the RoboCup winners and the FIFA World Cup winners.

I’m interested in what you said about transferring the method for ball detection to farming and other applications. Could you say more about that research?

Vincenzo Suriani: Our lab has been involved in several projects relating to farming applications. The Flourish project ran from 2015 to 2018. More recently, the CANOPIES project has focused on precision agriculture for permanent crops, where farmworkers can efficiently work together with teams of robots to perform agronomic interventions, like harvesting or pruning.

We have another project that is about detecting and harvesting grapes. There is a huge effort in bringing knowledge back from RoboCup to other projects, and vice versa.

Domenico: Our vision now is to focus on the new generation of humanoid robots. We participated in a new event, the World Humanoid Robot Games, held in Beijing in August 2025, because we want to use the platform of RoboCup for other kinds of applications. The idea is to have a single platform with software that is derived from RoboCup code that can be used for other applications. If you have a humanoid robot that needs to move, you can reuse the same code from RoboCup because you can use the same stabilization, the same vision core, the same framework (more or less), and you can just change some modules and you can have a completely different type of application with the same robot with more or less the same code. We want to go towards this idea of reusing code and having RoboCup as a test bed. It is a very tough test bed, but you can use the results in other fields and in other applications.

Looking specifically at RoboCup, what are your future plans for the team? There are some big changes planned for the RoboCup Leagues, so could you also say how this might affect your plans?

Domenico: We have a very strong team and some of the team members will do a PhD in the coming years. One of our targets was to keep the students inside the university and the research world, and we were successful in this, because now they are very passionate about the RoboCup competition and about AI in general.

In terms of the changes, there will be a new league within RoboCup that is a merger of the standard platform league (SPL) and the humanoid kid-size league. The humanoid adult-size league will remain, so we need to decide whether to join the new merged league, or move to adult-sized robots. At the moment we don’t have too many details, but what we know is that we will go towards a new era of robots. We acquired robots from Booster and we are now acquiring another G1 robot from Unitree. So we are trying to have a complete family of new robots. And then I think we will go towards the league that is chosen by the other teams in the SPL league. But for now we are trying to organize an event in October in Rome with two other teams to exchange ideas and to understand where we want to go. There will also be a workshop to discuss the research side.

Vincenzo: We are also in discussion about the best size of robot for the competition. We are going to have two different positions, because robots are becoming cheaper and there are teams that are pushing to move more quickly to a bigger platform. On the other hand, there are teams that want to stick with a smaller platform in order to do research on multi agents. We have seen a lot of applications for a single robot but not many applications with a set of robots that are cooperating. And this has been historically one of the core parts of research we did in RoboCup, and also outside of RoboCup.

There are plenty of points of view on which robot size to use, because there are several factors, and we don’t know how fast the world will change in two or three years. We are trying to shape the rules and the conditions to play for next year, but, because of how quickly things are changing, we don’t know what the best decision will be. And also the research we are going to do will be affected by the decision we make on this.

There will be some changes to other leagues in the near future too: the small-size and middle-size leagues will probably close in two years, and so will the simulation league. A lot will happen in the next five years, probably more than during the last 10 to 15 years. This is a critical year because the decisions are based on what we can see and anticipate, but we don’t have all the information we need, so it will be challenging.

For example, the SPL has a big, probably the biggest, community among the RoboCup leagues. We have a lot of teams that are grouping by interest and so there are teams that are sticking to working on this specific problem with a specific platform and teams that are trying to move to another platform and another problem. So even inside the same community we are going to have more than one point of view and hopes for the future. At a certain point we will try to figure out what is the best for all of them.

Daniele: I just want to add that in order to achieve the 2050 challenge, in my opinion, it is necessary to have just one league encompassing everything. So up to this point, different leagues have been focusing on different research problems. There were leagues focusing only on strategy, others focusing only on the hardware, our league focusing mainly on the coordination and dynamic handling of the gameplay. But at the end of the day, in order to compete with humans, there must be only one league bringing all these single aspects together. From my point of view, it totally makes sense to keep merging leagues together.

About the authors

Daniele Affinita is a PhD student in Machine Learning at EPFL, specializing in the intersection of Machine Learning and Robotics. He has over four years of experience competing in RoboCup with the SPQR team. In 2024, he worked at Sony on domain adaptation techniques. He holds a Bachelor’s degree in Computer Engineering and a Master’s degree in Artificial Intelligence and Robotics from Sapienza University of Rome.

Vincenzo Suriani earned his Ph.D. in Computer Engineering in 2024 from Sapienza University of Rome, with a specialization in artificial intelligence, robotic vision, and multi-agent coordination. Since 2016, he has served as Software Development Leader of the Sapienza Soccer Robot Team, contributing to major robotic competitions and international initiatives such as EUROBENCH, SciRoc, and Tech4YOU. He is currently a Research Fellow at the University of Basilicata, where he focuses on developing intelligent environments for software testing automation. His research, recognized with award-winning papers at the RoboCup International Symposium (2021, 2023, 2025), centers on robotic semantic mapping, object recognition, and human–robot interaction.

Domenico Daniele Bloisi is an associate professor of Artificial Intelligence at the International University of Rome UNINT. Previously, he was associate professor at the University of Basilicata, assistant professor at the University of Verona, and assistant professor at Sapienza University of Rome. He received his PhD, master’s and bachelor’s degrees in Computer Engineering from Sapienza University of Rome in 2010, 2006 and 2004, respectively. He is the author of more than 80 peer-reviewed papers published in international journals and conferences in the field of artificial intelligence and robotics, with a focus on image analysis, multi-robot coordination, visual perception and information fusion. Dr. Bloisi conducts research in the field of melanoma and oral carcinoma prevention through automatic medical image analysis in collaboration with specialized medical teams in Italy. In addition, Dr. Bloisi is WP3 leader of the EU H2020 SOLARIS project, unit leader for the PRIN PNRR RETINA project, and unit leader for the PRIN 2022 AIDA project. Since 2015, he has been the team manager of the SPQR robot soccer team participating in the RoboCup world competitions.

Can Lin is a master’s student in Data Science at Sapienza University of Rome. He holds a bachelor’s degree in Computer Science and Artificial Intelligence from the same university. He joined the SPQR team in September 2024, focusing on tasks related to computer vision.

|

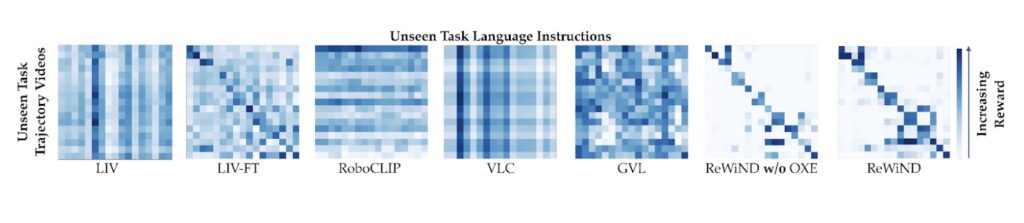

Video-language reward confusion matrix. See

Video-language reward confusion matrix. See

This week, we are participating in the IEEE/RSJ International Conference on Intelligent Robots and Systems #IROS2025 in Hangzhou #China

This week, we are participating in the IEEE/RSJ International Conference on Intelligent Robots and Systems #IROS2025 in Hangzhou #China  (IRI researchers right-left): @juliaborrassol.bsky.social, David Blanco-Mulero and Anais Garrell

(IRI researchers right-left): @juliaborrassol.bsky.social, David Blanco-Mulero and Anais Garrell

Presentation of the best paper award at the RoboCup 2025 symposium.

Presentation of the best paper award at the RoboCup 2025 symposium. Overview of the data preparation pipeline.

Overview of the data preparation pipeline. SPQR team.

SPQR team.

Roberto’s first RoboCup in 2016, Leipzig, pictured with the Singapore team celebrating after the finals.

Roberto’s first RoboCup in 2016, Leipzig, pictured with the Singapore team celebrating after the finals. EuroRoboCup 2022 in Portugal. Roberto (kneeling in photo) was part of the organising committee.

EuroRoboCup 2022 in Portugal. Roberto (kneeling in photo) was part of the organising committee.

Screenshot from Ana’s talk.

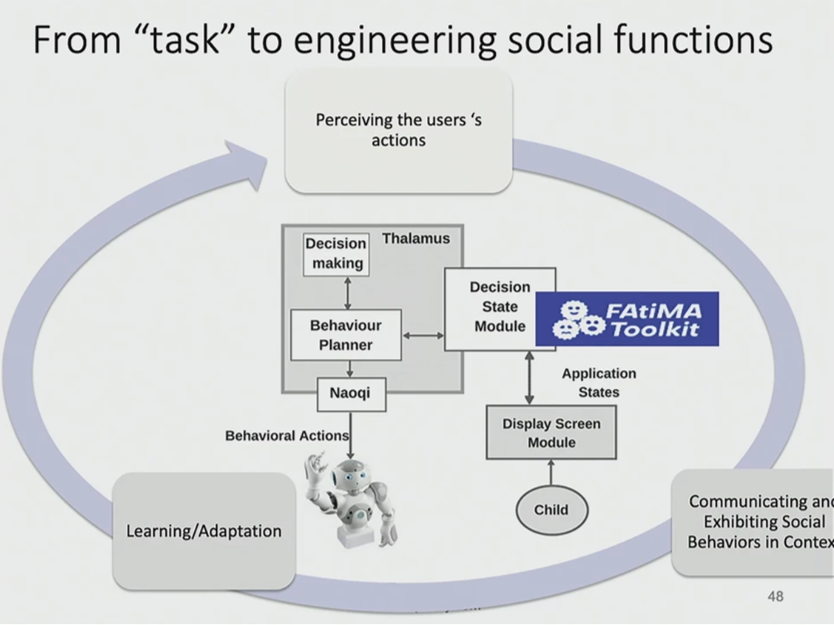

Screenshot from Ana’s talk.  Screenshot from Ana’s talk. System architecture.

Screenshot from Ana’s talk. System architecture.

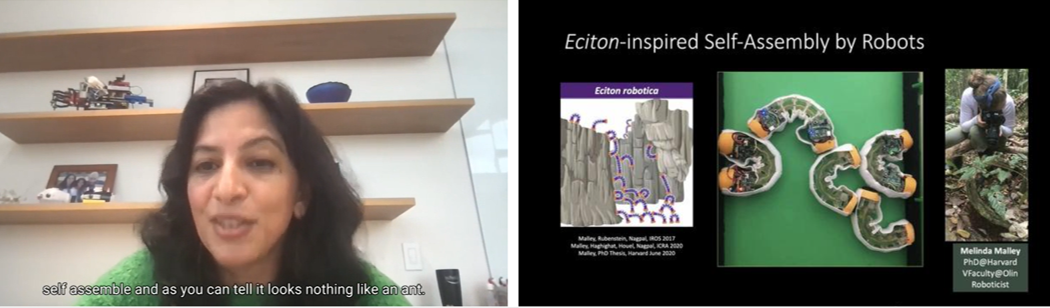

Screenshot from Radhika’s talk

Screenshot from Radhika’s talk