In this technical talk, Amanda Prorok, Assistant Professor in the Department of Computer Science and Technology at Cambridge University, and a Fellow of Pembroke College, discusses her team’s latest research on what, how and when information needs to be shared among agents that aim to solve cooperative tasks.

Abstract

Effective communication is key to successful multi-agent coordination. Yet it is far from obvious what, how and when information needs to be shared among agents that aim to solve cooperative tasks. In this talk, I discuss our recent work on using Graph Neural Networks (GNNs) to solve multi-agent coordination problems. In my first case study, I show how we use GNNs to find a decentralized solution to the multi-agent path finding problem, which is known to be NP-hard. I demonstrate how our policy achieves near-optimal performance at a fraction of the real-time computational cost. In my second case study, I show how GNN-based reinforcement learning can be leveraged to learn inter-agent communication policies, and I demonstrate how non-shared optimization objectives can lead to adversarial communication strategies. Finally, I address the challenge of learning robust communication policies, enabling a multi-agent system to maintain high performance in the presence of anonymous non-cooperative agents that communicate faulty, misleading or manipulative information.

Biography

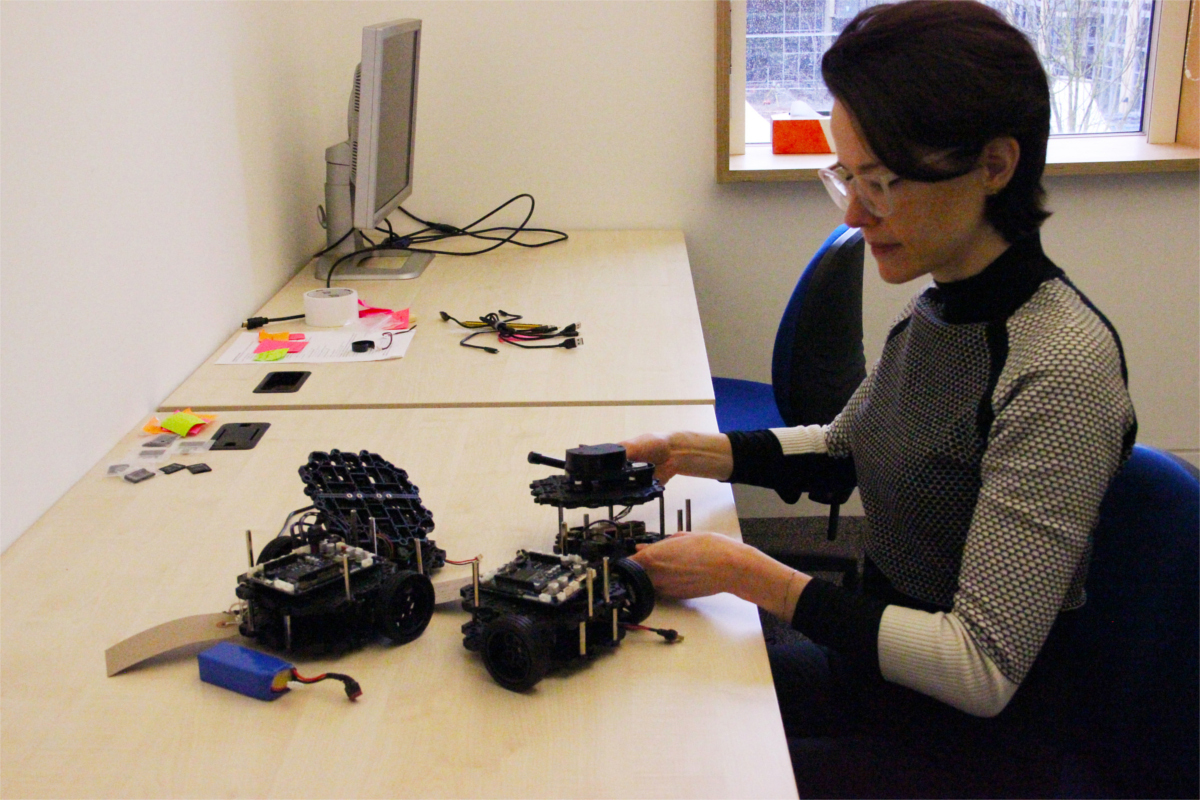

Amanda Prorok is an Assistant Professor in the Department of Computer Science and Technology at Cambridge University, UK, and a Fellow of Pembroke College. Her mission is to find new ways of coordinating artificially intelligent agents (e.g., robots, vehicles, machines) to achieve common goals in shared physical and virtual spaces. Amanda Prorok has been honored with an ERC Starting Grant, an Amazon Research Award, an EPSRC New Investigator Award, an Isaac Newton Trust Early Career Award, and the Asea Brown Boveri (ABB) Award for the best thesis at EPFL in Computer Science. Further awards include Best Paper at DARS 2018, Finalist for Best Multi-Robot Systems Paper at ICRA 2017, Best Paper at BICT 2015, and MIT Rising Stars 2015. She serves as Associate Editor for IEEE Robotics and Automation Letters (RA-L) and for Autonomous Robots (AURO). Prior to joining Cambridge, Amanda Prorok was a postdoctoral researcher at the General Robotics, Automation, Sensing and Perception (GRASP) Laboratory at the University of Pennsylvania, USA. She completed her PhD at EPFL, Switzerland.

Featuring Guest Panelists: Stephanie Gil, Joey Durham

The next technical talk will be delivered by Koushil Sreenath from UC Berkeley, and it will take place on April 23 at 3pm EDT. Keep up to date on this website.