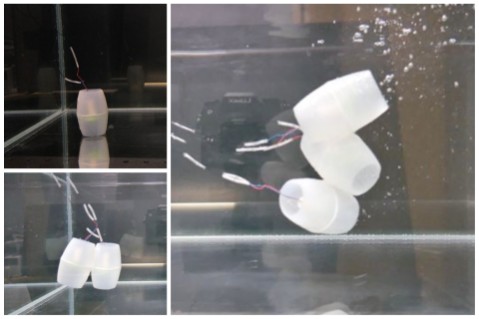

Suction cup grasping a stone – Image credit: Tianqi Yue

The team, based at Bristol Robotics Laboratory, studied the structure of octopus suckers, whose superb adaptive suction abilities enable the animals to anchor to rock.

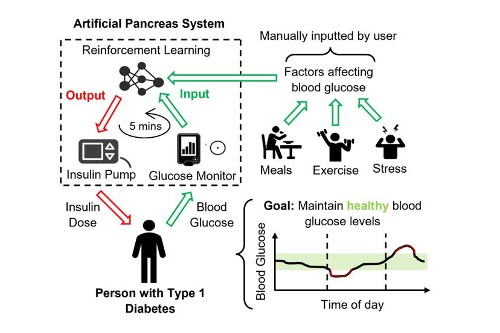

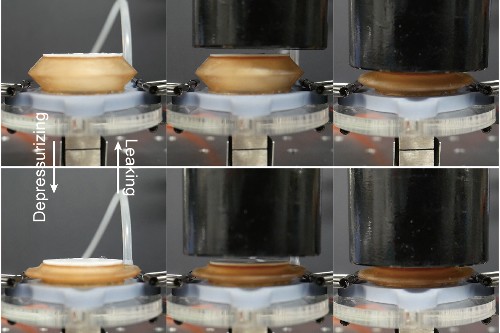

In their findings, published in the journal PNAS today, the researchers show how they were able to create a multi-layer soft structure and an artificial fluidic system to mimic the musculature and mucus structures of biological suckers.

Suction is a highly evolved biological adhesion strategy for soft-body organisms to achieve strong grasping on various objects. Biological suckers can adaptively attach to dry complex surfaces such as rocks and shells, which are extremely challenging for current artificial suction cups. Although the adaptive suction of biological suckers is believed to be the result of their soft body’s mechanical deformation, some studies imply that in-sucker mucus secretion may be another critical factor in helping attach to complex surfaces, thanks to its high viscosity.

Lead author Tianqi Yue explained: “The most important development is that we successfully demonstrated the effectiveness of the combination of mechanical conformation – the use of soft materials to conform to surface shape – and liquid seal – the spread of water onto the contacting surface – for improving the suction adaptability on complex surfaces. This may also be the secret behind biological organisms’ ability to achieve adaptive suction.”

Their multi-scale suction mechanism combines mechanical conformation with a regulated water seal. Multi-layer soft materials first conform roughly to the substrate, reducing the leaking apertures to just micrometres in size. These remaining micron-sized apertures are then sealed by regulated water secretion from an artificial fluidic system based on a physical model. As a result, the suction cup holds its suction for a long time on diverse surfaces with minimal overflow.

Tianqi added: “We believe the presented multi-scale adaptive suction mechanism is a powerful new adaptive suction strategy which may be instrumental in the development of versatile soft adhesion.

“Current industrial solutions use always-on air pumps to actively generate the suction; however, these are noisy and waste energy.

“With no need for a pump, it is well known that many natural organisms with suckers, including octopuses, some fishes such as suckerfish and remoras, leeches, gastropods and echinoderms, can maintain their superb adaptive suction on complex surfaces by exploiting their soft body structures.”

The findings have great potential for industrial applications, such as providing a next-generation robotic gripper for grasping a variety of irregular objects.

The team now plan to build a more intelligent suction cup by embedding sensors into it to regulate its behaviour.

Paper

‘Bioinspired multiscale adaptive suction on complex dry surfaces enhanced by regulated water secretion’ by Tianqi Yue, Weiyong Si, Alex Keller, Chenguang Yang, Hermes Bloomfield-Gadêlha and Jonathan Rossiter in PNAS.