A robot that can help firefighters during indoor emergencies

RoboHouse Interview Trilogy, part II: Wendel Postma and Project MARCH

For the second part of our RoboHouse Interview Trilogy, The Working Life of the Robotics Engineer, we speak with Wendel Postma, chief engineer at Project MARCH VIII. How does he resolve the conundrum of integration: getting a bunch of single-minded engineers to ultimately serve the needs of a single exoskeleton user? Rens van Poppel inquires.

Wendel oversees technical engineering quality and shares responsibility for on-time, within-budget delivery with the other project managers. He spends his days wandering around the Dream Hall on the TU Delft campus, encouraging his team to explore new avenues for developing the exoskeleton. What is possible within the time that we have? Can conflicting design solutions work together?

There is no shortage of hobbies and activities for chief engineer Wendel. Sitting still is something he can’t do, which is why, outside of Project MARCH, he does a lot of sports. This year, Wendel is making sure the team has one exoskeleton at the end of the year instead of many different parts. He also makes sure communication within the team is good, so that all technological advances are understood, and organises a yoga class so everyone can relax again. Wendel has many different goals: for example, he would later like to work in the health industry and to complete an Ironman. Source: Project MARCH website.

In daily life, Arnhem-based Project MARCH pilot Koen van Zeeland works as an executive laying fibre-optic cable in the Utrecht area. He was diagnosed with a spinal cord injury in 2013. Koen is a hard worker and his phone is always ringing, yet he likes to make time for a drink with his friends at the pub. Besides the pub, you might also find him on the moors, where he likes to walk his dog Turbo. Koen is also very sporty: besides working out three times a week, he is an avid cyclist whose goal is to cycle up the mountains in Austria on his handbike. Source: Project MARCH website.

Koen van Zeeland is the primary test user of the exoskeleton and has control over the movements he makes. Project MARCH therefore calls him the ‘pilot’ of the exoskeleton. As the twenty-seventh and perhaps most important team member, Koen is valued highly within Project MARCH VIII. Source: Project MARCH website.

Project MARCH is an iterative enterprise.

Most of its workplace drama comes from the urgency to deliver at least one significant improvement on the existing prototype. This year’s obsession is weight: a lighter exoskeleton would require less power from both pilot and motors, and would make self-balancing easier to realise.

In order not to weaken the frame of the exoskeleton, there was a lot of enthusiasm to experiment with carbon fibre, which is both a light and strong material. Something, however, got in the way: the team struggled to find a pilot.

My job is making sure that in the end we don’t have 600 separate parts, but one exoskeleton.

“Having a test pilot is crucial if we are to reach our goals,” Wendel says. “Our current exoskeleton is built to fit the particular body shape of the person controlling it. The design is not yet adjustable to a different body shape. So it is crucial to get the pilot involved as quickly as possible.”

Not having a pilot was stressful for the entire team.

Their dream of creating a self-balancing exoskeleton was in danger. Wendel had to step up: “As chief engineer you have to make tough decisions. Carbon fibre is strong, but not flexible and difficult to machine. That is why we switched to aluminium, because it is easier to modify even after it is finished.”

“It was a huge disappointment,” Wendel says. “Some of us had already finished training in carbon manufacturing. Carbon parts had already been ordered. The team felt let down. We had spent so much time on something that was now impossible, because of the delays caused by having no pilot.”

“I learnt that bringing bad news is part of the chief engineer’s job. The next step is to look at how to convert the engineers’ enthusiasm for carbon fibre into new solutions and to redeploy their personal qualities.”

Wendel says the job has also taught him to consider a hundred things at the same time, and to make sacrifices. Project MARCH involves long workdays and perhaps not seeing your friends and roommates as much as you would like.

As a naturally curious person, Wendel found out that curiosity must be complemented by grit to make it in robotics. You often need to go deeper and study in more detail to make a good decision. “It is hard work. However, that is also what makes the job so much fun. You work in such a highly motivated team.”

The carbon story ended well, though.

When the team did find a pilot, hard-working Koen van Zeeland, the choice of aluminium as a base material paid off. Through weight analysis, parts can now be optimised for an ever lighter exoskeleton.

The Project MARCH team continues to grow through setbacks and has doubled down on its efforts to create the world’s first self-balancing exoskeleton. If the team succeeds, it will be a huge success for this unique way of running a business.

The post RoboHouse Interview Trilogy, Part II: Wendel Postma and Project MARCH appeared first on RoboHouse.

Third Wave Automation Announces Strategic Investment from Qualcomm Ventures and Zebra Technologies

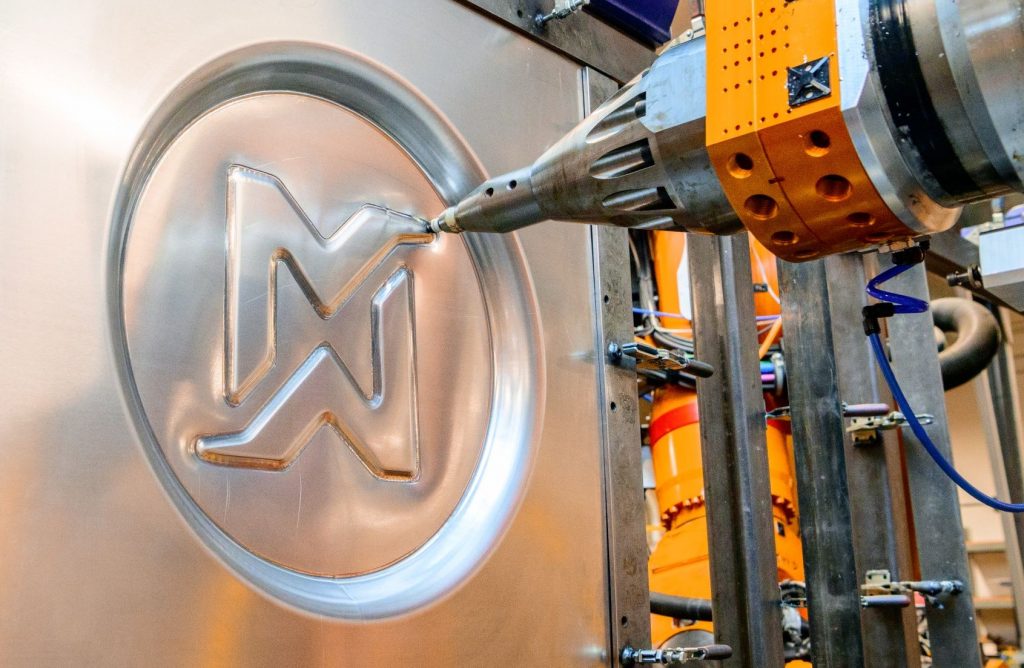

ep.364: Shaking Up The Sheetmetal Industry, with Ed Mehr

Conventional sheet metal manufacturing is highly inefficient for the low-volume production typical of the space industry. Machina Labs has developed a novel method of forming sheet metal that uses two robotic arms to bend the metal into different geometries. This method cuts the time needed to produce large sheet metal parts from several months to a few hours. Ed Mehr, Co-Founder and CEO of Machina Labs, explains this revolutionary manufacturing process.

Ed Mehr

Ed Mehr is the co-founder and CEO of Machina Labs. He has an engineering background in smart manufacturing and artificial intelligence. In his previous position at Relativity Space, he led a team in charge of developing the world’s largest metal 3D printer. Relativity Space uses 3D printing to make rocket parts rapidly and with the flexibility to iterate multiple times. Ed was previously CTO at Cloudwear (now Averon) and has also worked at SpaceX, Google, and Microsoft.

Links

- Machina Labs

Robot Talk Episode 37 – Interview with Yang Gao

Claire chatted to Professor Yang Gao from the University of Surrey all about space robotics and planetary exploration.

Yang Gao is Professor of Space Autonomous Systems and Founding Head of the STAR LAB, which specializes in robotic sensing, perception, visual guidance, navigation and control (GNC), and biomimetic mechanisms for industrial applications in extreme environments. She brings over 20 years of research experience in developing robotics and autonomous systems, during which she has been the principal investigator of over 30 nationally and internationally teamed projects and has been involved in real-world mission development.

Using the cuttlefish eye as a template for robot eyes that can see better in murky conditions

How a Government Drone Blacklist Could Impact Farms

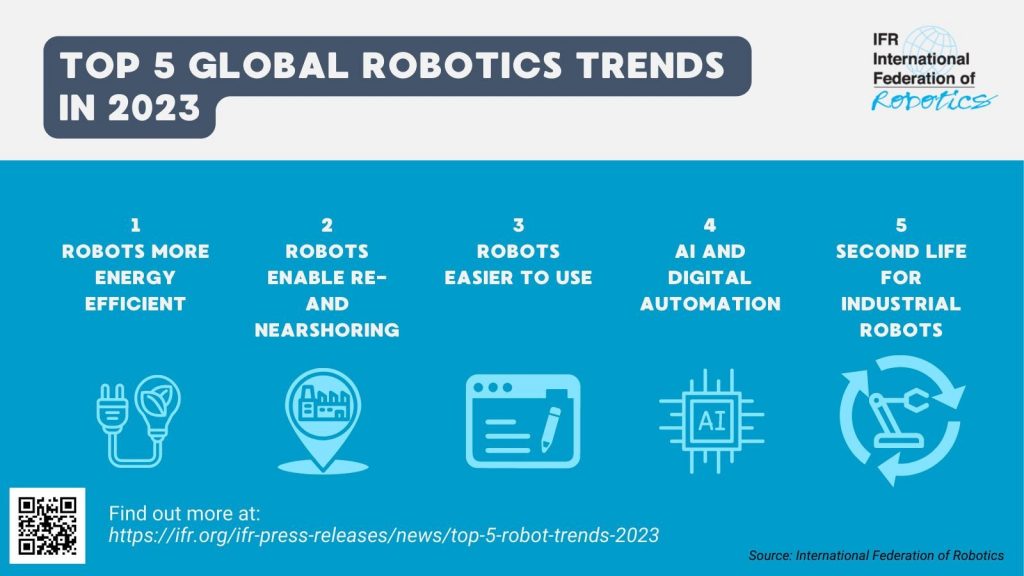

Top 5 robot trends 2023

Top 5 Robot Trends 2023 © International Federation of Robotics

The stock of operational robots around the globe hit a new record of about 3.5 million units – the value of installations reached an estimated 15.7 billion USD. The International Federation of Robotics analyzes the top 5 trends shaping robotics and automation in 2023.

“Robots play a fundamental role in securing the changing demands of manufacturers around the world,” says Marina Bill, President of the International Federation of Robotics. “New trends in robotics attract users from small enterprise to global OEMs.”

1 – Energy Efficiency

Energy efficiency is key to improving companies’ competitiveness amid rising energy costs. The adoption of robotics helps lower energy consumption in manufacturing in many ways. Compared to traditional assembly lines, considerable energy savings can be achieved through reduced heating. At the same time, robots work at high speed, increasing production rates so that manufacturing becomes more time- and energy-efficient.

Today’s robots are designed to consume less energy, which leads to lower operating costs. To meet sustainability targets for their production, companies use industrial robots equipped with energy-saving technology: robot controls can, for example, convert kinetic energy into electricity and feed it back into the power grid. This technology significantly reduces the energy required to run a robot. Another feature is a smart power-saving mode that controls the robot’s energy supply on demand throughout the workday. Since industrial facilities already need to monitor their energy consumption today, connected power sensors that track a robot’s consumption are likely to become an industry standard for robotic solutions.

2 – Reshoring

Resilience has become an important driver for reshoring in various industries. Car manufacturers, for example, invest heavily in short supply lines to bring processes closer to their customers. These manufacturers use robot automation to manufacture powerful batteries cost-effectively and in large quantities to support their electric vehicle projects. These investments make shipping heavy batteries unnecessary. This is important as more and more logistics companies refuse to ship batteries for safety reasons.

Relocating microchip production back to the US and Europe is another reshoring trend. Since most industrial products nowadays require a semiconductor chip to function, a chip supply close to the customer is crucial. Robots play a vital role in chip manufacturing, as they meet its extreme precision requirements. Specially designed robots automate silicon wafer fabrication, take over cleaning and cleansing tasks, or test integrated circuits. Recent examples of reshoring are Intel’s new chip factories in Ohio and the recently announced chip plant in the Saarland region of Germany, run by chipmaker Wolfspeed and automotive supplier ZF.

3 – Robots easier to use

Robot programming has become easier and more accessible to non-experts. Providers of software-driven automation platforms support companies by letting users manage industrial robots with no prior programming experience. Original equipment manufacturers work hand-in-hand with low-code or even no-code technology partners that allow users of all skill levels to program a robot.

Easy-to-use software paired with an intuitive user experience replaces extensive robot programming and opens up new robotic automation opportunities. Software start-ups are entering this market with specialized solutions for the needs of small and medium-sized companies. For example, a traditional heavy-weight industrial robot can be equipped with sensors and new software that allows collaborative setup operation. This makes it easy for workers to adjust heavy machinery to different tasks. Companies thus get the best of both worlds: robust and precise industrial robot hardware and state-of-the-art cobot software.

Easy-to-use programming interfaces that allow customers to set up robots themselves also drive the emerging segment of low-cost robotics. Many new customers reacted to the pandemic in 2020 by trying out robotic solutions. Robot suppliers acknowledged this demand: easy setup and installation, for instance with pre-configured software to handle grippers, sensors or controllers, supports lower-cost robot deployment. Such robots are often sold through web shops, and program routines for various applications can be downloaded from an app store.

4 – Artificial Intelligence (AI) and digital automation

Propelled by advances in digital technologies, robot suppliers and system integrators offer new applications and improve the speed and quality of existing ones. Connected robots are transforming manufacturing. Robots will increasingly operate as part of a connected digital ecosystem: cloud computing, big data analytics and 5G mobile networks provide the technological base for optimized performance. The 5G standard will enable fully digitalized production, making cables on the shop floor obsolete.

Artificial Intelligence (AI) holds great potential for robotics, enabling a range of benefits in manufacturing. The main aim of using AI in robotics is to better manage variability and unpredictability in the external environment, either in real time or offline. As a result, AI-supported machine learning plays an increasing role in software offerings, where running systems benefit from, for example, optimized processes, predictive maintenance or vision-based gripping.

This technology helps manufacturers, logistics providers and retailers dealing with frequently changing products, orders and stock. The greater the variability and unpredictability of the environment, the more likely it is that AI algorithms will provide a cost-effective and fast solution – for example, for manufacturers or wholesalers dealing with millions of different products that change on a regular basis. AI is also useful in environments in which mobile robots need to distinguish between the objects or people they encounter and respond differently.

5 – Second life for industrial robots

Since an industrial robot has a service lifetime of up to thirty years, upgrading old robots with new technology is a great opportunity to give them a “second life”. Industrial robot manufacturers such as ABB, Fanuc, KUKA and Yaskawa run specialized repair centers close to their customers to refurbish or upgrade used units in a resource-efficient way. This prepare-to-repair strategy saves costs and resources for both robot manufacturers and their customers. Offering long-term repair to customers is an important contribution to the circular economy.

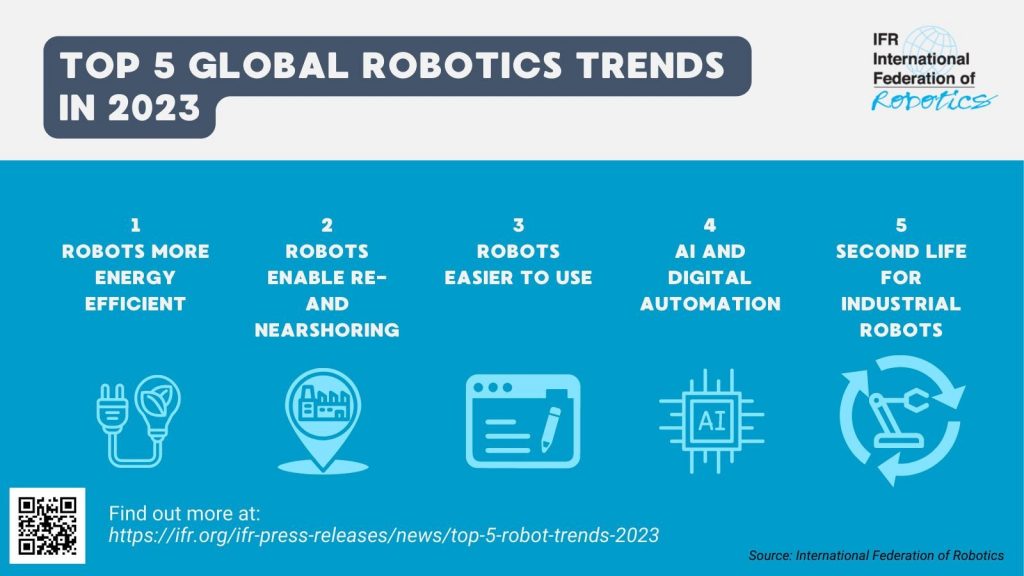

Top 5 robot trends 2023

Top 5 Robot Trends 2023 © International Federation of Robotics

The stock of operational robots around the globe hit a new record of about 3.5 million units – the value of installations reached an estimated 15.7 billion USD. The International Federation of Robotics analyzes the top 5 trends shaping robotics and automation in 2023.

“Robots play a fundamental role in securing the changing demands of manufacturers around the world,” says Marina Bill, President of the International Federation of Robotics. “New trends in robotics attract users from small enterprise to global OEMs.”

1 – Energy Efficiency

Energy efficiency is key to improve companies’ competitiveness amid rising energy costs. The adoption of robotics helps in many ways to lower energy consumption in manufacturing. Compared to traditional assembly lines, considerable energy savings can be achieved through reduced heating. At the same time, robots work at high speed thus increasing production rates so that manufacturing becomes more time- and energy-efficient.

Today’s robots are designed to consume less energy, which leads to lower operating costs. To meet sustainability targets for their production, companies use industrial robots equipped with energy saving technology: robot controls are able to convert kinetic energy into electricity, for example, and feed it back into the power grid. This technology significantly reduces the energy required to run a robot. Another feature is the smart power saving mode that controls the robot´s energy supply on-demand throughout the workday. Since industrial facilities need to monitor their energy consumption even today, such connected power sensors are likely to become an industry standard for robotic solutions.

2 – Reshoring

Resilience has become an important driver for reshoring in various industries: Car manufacturers e.g. invest heavily in short supply lines to bring processes closer to their customers. These manufacturers use robot automation to manufacture powerful batteries cost-effectively and in large quantities to support their electric vehicle projects. These investments make the shipment of heavy batteries redundant. This is important as more and more logistics companies refuse to ship batteries for safety reasons.

Relocating microchip production back to the US and Europe is another reshoring trend. Since most industrial products nowadays require a semiconductor chip to function, their supply close to the customer is crucial. Robots play a vital role in chip manufacturing, as they live up to the extreme requirements of precision. Specifically designed robots automate the silicon wafer fabrication, take over cleaning and cleansing tasks or test integrated circuits. Recent examples of reshoring are Intel´s new chip factories in Ohio or the recently announced chip plant in the Saarland region of Germany run by chipmaker Wolfspeed and automotive supplier ZF.

3 – Robots easier to use

Robot programming has become easier and more accessible to non-experts. Providers of software-driven automation platforms support companies, letting users manage industrial robots with no prior programming experience. Original equipment manufacturers work hand-in-hand with low code or even no-code technology partners that allow users of all skill levels to program a robot.

The easy-to-use software paired with an intuitive user experience replaces extensive robotics programming and opens up new robotics automation opportunities: Software start-ups are entering this market with specialized solutions for the needs of small and medium-sized companies. For example: a traditional heavy-weight industrial robot can be equipped with sensors and a new software that allows collaborative setup operation. This makes it easy for workers to adjust heavy machinery to different tasks. Companies will thus get the best of both worlds: robust and precise industrial robot hardware and state-of-the-art cobot software.

Easy-to-use programming interfaces, that allow customers to set up the robots themselves, also drive the emerging new segment of low-cost robotics. Many new customers reacted to the pandemic in 2020 by trying out robotic solutions. Robot suppliers acknowledged this demand: Easy setup and installation, for instance, with pre-configured software to handle grippers, sensors or controllers support lower-cost robot deployment. Such robots are often sold through web shops and program routines for various applications are downloadable from an app store.

4 – Artificial Intelligence (AI) and digital automation

Propelled by advances in digital technologies, robot suppliers and system integrators offer new applications and improve existing ones regarding speed and quality. Connected robots are transforming manufacturing. Robots will increasingly operate as part of a connected digital ecosystem: Cloud Computing, Big Data Analytics or 5G mobile networks provide the technological base for optimized performance. The 5G standard will enable fully digitalized production, making cables on the shopfloor obsolete.

Artificial Intelligence (AI) holds great potential for robotics, enabling a range of benefits in manufacturing. The main aim of using AI in robotics is to better manage variability and unpredictability in the external environment, either in real-time, or off-line. This makes AI supporting machine learning play an increasing role in software offerings where running systems benefit, for example with optimized processes, predictive maintenance or vision-based gripping.

This technology helps manufacturers, logistics providers and retailers dealing with frequently changing products, orders and stock. The greater the variability and unpredictability of the environment, the more likely it is that AI algorithms will provide a cost-effective and fast solution – for example, for manufacturers or wholesalers dealing with millions of different products that change on a regular basis. AI is also useful in environments in which mobile robots need to distinguish between the objects or people they encounter and respond differently.

5 – Second life for industrial robots

Since an industrial robot has a service lifetime of up to thirty years, new tech equipment is a great opportunity to give old robots a “second life”. Industrial robot manufacturers like ABB, Fanuc, KUKA or Yaskawa run specialized repair centers close to their customers to refurbish or upgrade used units in a resource-efficient way. This prepare-to-repair strategy for robot manufacturers and their customers also saves costs and resources. To offer long-term repair to customers is an important contribution to the circular economy.

To help recover balance, robotic exoskeletons have to be faster than human reflexes

Bionic fingers create 3D maps of human tissue, electronics and other complex objects

Learning challenges shape a mechanical engineer’s path

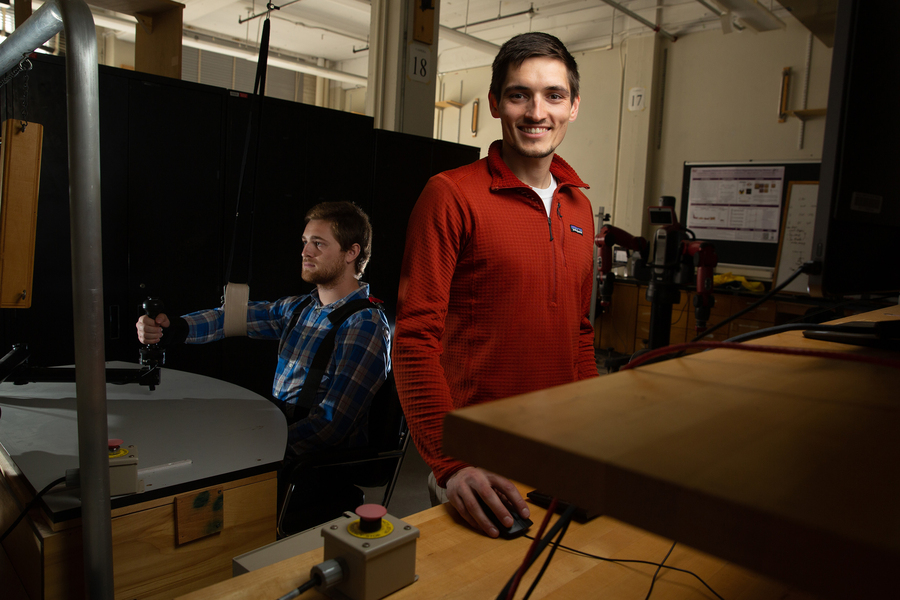

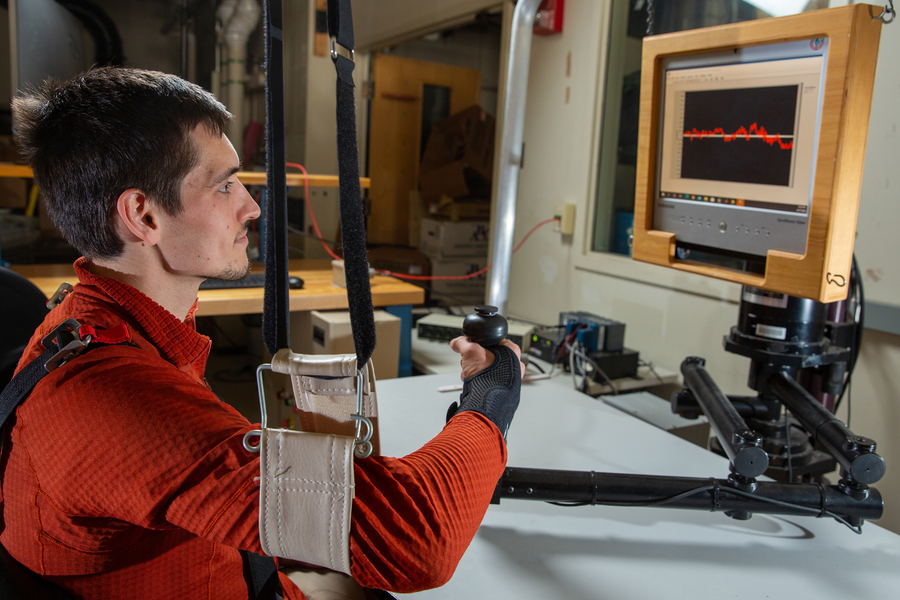

“I observed assistive technologies — developed by scientists and engineers my friends and I never met — which liberated us. My dream has always been to be one of those engineers,” Hermus says. Credit: Tony Pulsone

By Michaela Jarvis | Department of Mechanical Engineering

Before James Hermus started elementary school, he was a happy, curious kid who loved to learn. By the end of first grade, however, all that started to change, he says. As his schoolbooks became more advanced, Hermus could no longer memorize the words on each page and pretend to be reading. He clearly knew the material the teacher presented in class, and his teachers could not understand why he was unable to read and write his assignments. He was accused of being lazy and not trying hard enough.

Hermus was fortunate to have parents who sought out neuropsychology testing — which documented an enormous discrepancy between his native intelligence and his symbol decoding and phonemic awareness. Yet despite receiving a diagnosis of dyslexia, Hermus and his family encountered resistance at his school. According to Hermus, the school’s reading specialist did not “believe” in dyslexia, and, he says, the principal threatened his family with truancy charges when they took him out of school each day to attend tutoring.

Hermus’ school, like many across the country, was reluctant to provide accommodations for students with learning disabilities who were not two years behind in two subjects, Hermus says. For this reason, obtaining and maintaining accommodations, such as extended time and a reader, was a constant battle from first through 12th grade: Students who performed well lost their right to accommodations. Only through persistence and parental support did Hermus succeed in an educational system which he says all too often fails students with learning disabilities.

By the time Hermus was in high school, he had to become a strong self-advocate. In order to access advanced courses, he needed to be able to read more and faster, so he sought out adaptive technology — Kurzweil, a text-to-audio program. This, he says, was truly life-changing. At first, to use this program he had to disassemble textbooks, feed the pages through a scanner, and digitize them.

After working his way to the University of Wisconsin at Madison, Hermus found a research opportunity in medical physics and then later in biomechanics. Interestingly, the steep challenges that Hermus faced during his education had developed in him “the exact skill set that makes a successful researcher,” he says. “I had to be organized, advocate for myself, seek out help to solve problems that others had not seen before, and be excessively persistent.”

While working as a member of Professor Darryl Thelen’s Neuromuscular Biomechanics Lab at Madison, Hermus helped design and test a sensor for measuring tendon stress. He recognized his strengths in mechanical design. During this undergraduate research, he co-authored numerous journal and conference papers. These experiences and a desire to help people with physical disabilities propelled him to MIT.

“MIT is an incredible place. The people in MechE at MIT are extremely passionate and unassuming. I am not unusual at MIT,” Hermus says. Credit: Tony Pulsone

In September 2022, Hermus completed his PhD in mechanical engineering at MIT. He has been an author on seven papers in peer-reviewed journals, three as first author and four published while he was an undergraduate. He has won awards for his academics and for his mechanical engineering research and has served as a mentor and an advocate for disability awareness in several different contexts.

His work as a researcher stems directly from his personal experience, Hermus says. As a student in a special education classroom, “I observed assistive technologies — developed by scientists and engineers my friends and I never met — which liberated us. My dream has always been to be one of those engineers.”

Hermus’ work aims to investigate and model human interaction with objects where both substantial motion and force are present. His research has demonstrated that the way humans perform everyday actions such as turning a steering wheel or opening a door is very different from the way much of robotics approaches them. He showed that specific patterns exist in this behavior that provide insight into neural control. In 2020, Hermus was the first author on a paper on this topic, which was published in the Journal of Neurophysiology and later won first place in the MIT Mechanical Engineering Research Exhibition. Using this insight, Hermus and his colleagues implemented these strategies on a Kuka LBR iiwa robot to learn about how humans regulate their many degrees of freedom. This work was published in IEEE Transactions on Robotics in 2022. More recently, Hermus has collaborated with researchers at the University of Pittsburgh to see whether these ideas prove useful in the development of brain-computer interfaces that use electrodes implanted in the brain to control a prosthetic robotic arm.

While the hardware of prosthetics and exoskeletons is advancing, Hermus says, there are daunting limitations to the field in the descriptive modeling of human physical behavior, especially during contact with objects. Without these descriptive models, developing generalizable implementations of prosthetics, exoskeletons, and rehabilitation robotics will prove challenging.

“We need competent descriptive models of human physical interaction,” he says.

While earning his master’s and doctoral degrees at MIT, Hermus worked with Neville Hogan, the Sun Jae Professor of Mechanical Engineering, in the Eric P. and Evelyn E. Newman Laboratory for Biomechanics and Human Rehabilitation. Hogan has high praise for the research Hermus has conducted over his six years in the Newman lab.

“James has done superb work for both his master’s and doctoral theses. He tackled a challenging problem and made excellent and timely progress towards its solution. He was a key member of my research group,” Hogan says. “James’ commitment to his research is unquestionably a reflection of his own experience.”

Following postdoctoral research at MIT, where he has also been a part-time lecturer, Hermus is now beginning postdoctoral work with Professor Aude Billard at EPFL in Switzerland, where he hopes to gain experience with learning and optimization methods to further his human motor control research.

Hermus’ enthusiasm for his research is palpable, and his zest for learning and life shines through despite the hurdles his dyslexia presented. He demonstrates a similar kind of excitement for ski-touring and rock-climbing with the MIT Outing Club, working at MakerWorkshop, and being a member of the MechE community.

“MIT is an incredible place. The people in MechE at MIT are extremely passionate and unassuming. I am not unusual at MIT,” he says. “Nearly every person I know well has a unique story with an unconventional path.”