Intel RealSense 3D Camera for robotics & SLAM (with code)

D400 Depth Cameras. Credit: Intel Corporation

The Intel RealSense cameras have been gaining popularity over the past few years as 3D cameras and for visual odometry. I had the chance to hear a presentation from Daniel Piro about using the Intel RealSense cameras in general and for SLAM (Simultaneous Localization and Mapping). The following post is based on his talk.

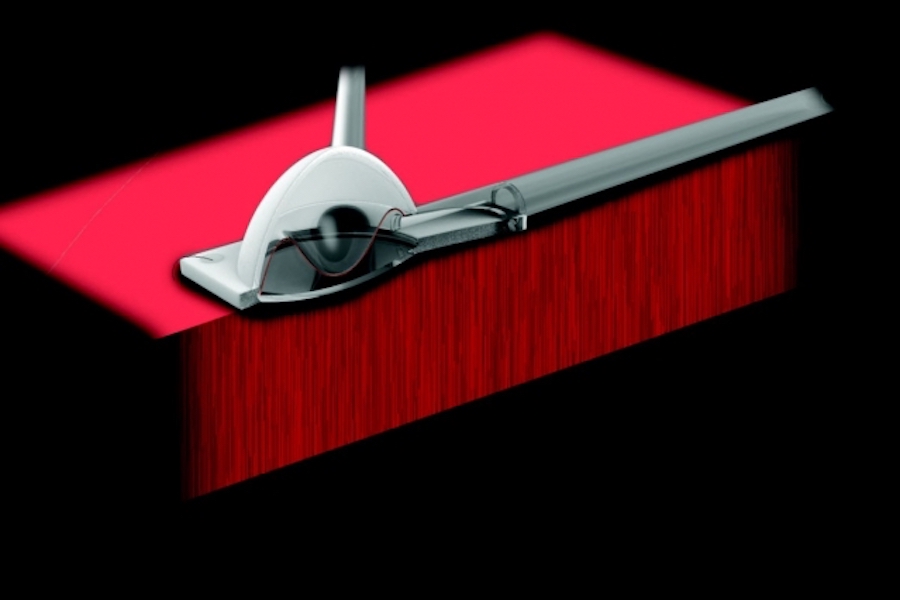

Depth Camera (D400 series)

Depth information is important because it tells us about shapes, sizes, and distances. It lets us (or a robot) know how far away items are, so the robot can avoid running into things and plan paths around obstacles in the camera's field of view. Traditionally this information has come from RADAR or LIDAR; however, in some applications we can also get it from cameras, most often by using two cameras for stereo vision.

The Intel RealSense Depth camera (D400 series) uses stereoscopic depth sensing to determine the range to an item. In essence, it has two cameras and triangulates range from the pair. The two stereo cameras are infrared cameras, and there is also an onboard RGB camera. The sensor therefore provides four data products: an RGB image, a depth image, a left infrared image, and a right infrared image. Think of each image frame as a 3D snapshot of the environment, where each color (RGB) pixel also has a range value (depth) to the item in the image. The farther items are from the camera, the greater the range/depth error.
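To make the triangulation, and why error grows with distance, a little more concrete, here is a minimal sketch of the standard rectified-stereo relations: depth Z = f·B/d (focal length f in pixels, baseline B in meters, disparity d in pixels) and its first-order uncertainty ΔZ ≈ Z²/(f·B)·Δd. The function names and the focal length, baseline, and disparity-error values are illustrative assumptions, not D400 specifications.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (m) of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        return 0.0  # no valid match; reported as 0, like invalid RealSense depth
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float, disparity_err_px: float) -> float:
    """First-order depth uncertainty: dZ = Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    f, b = 640.0, 0.05  # assumed focal length (px) and baseline (m), for illustration only
    for z in (0.5, 1.0, 2.0, 4.0):
        print(f"Z = {z:.1f} m -> error ~ {depth_error(f, b, z, 0.1) * 1000:.1f} mm")
```

Because the error term scales with Z², doubling the distance quadruples the expected depth error in this model.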

The D400 cameras have an infrared projector that adds texture to surfaces with few features of their own, which helps the infrared cameras compute the stereo reconstruction. The projector can be turned on and off. Disabling it is often useful for tracking applications, since the projected dots do not move with the items being tracked.
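A sketch of toggling the projector from Python: pyrealsense2 exposes it as the `rs.option.emitter_enabled` option on the depth sensor. The hypothetical `set_emitter` helper takes the sensor and the option as arguments so it can be exercised without a camera; `disable_projector_on_camera` assumes a D400 camera is connected and is not called automatically.

```python
def set_emitter(depth_sensor, emitter_option, enabled: bool) -> bool:
    """Turn the IR projector on or off if the sensor supports it; return True on success."""
    if not depth_sensor.supports(emitter_option):
        return False
    depth_sensor.set_option(emitter_option, 1.0 if enabled else 0.0)
    return True


def disable_projector_on_camera():
    """Hardware glue (assumes a connected D400 camera); call it explicitly."""
    import pyrealsense2 as rs

    pipeline = rs.pipeline()
    profile = pipeline.start()
    try:
        sensor = profile.get_device().first_depth_sensor()
        # Projector off, e.g. for tracking moving objects
        if set_emitter(sensor, rs.option.emitter_enabled, False):
            print("emitter_enabled =", sensor.get_option(rs.option.emitter_enabled))
    finally:
        pipeline.stop()
```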

One thing to be aware of is that the infrared images are rectified inside the camera (reprojected onto a common image plane), but the RGB image is not. This means that if you want the depth and RGB images to line up well, you need to rectify the RGB image yourself.
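Lining up depth with RGB comes down to three steps: deproject each depth pixel to a 3D point using the depth camera's intrinsics, move that point into the color camera's frame using the depth-to-color extrinsics, and project it with the color intrinsics. The sketch below shows that math for an ideal pinhole model with no lens distortion. All names are hypothetical and any calibration values you feed in are up to you. In practice, pyrealsense2's `rs.align` class (or `rs.rs2_deproject_pixel_to_point` and `rs.rs2_project_point_to_pixel`) does this using the calibration stored on the camera.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    fx: float   # focal length in pixels, x
    fy: float   # focal length in pixels, y
    ppx: float  # principal point, x
    ppy: float  # principal point, y


def deproject(intr, u, v, depth_m):
    """Depth pixel (u, v) at depth_m meters -> 3D point in the depth camera's frame."""
    return ((u - intr.ppx) / intr.fx * depth_m,
            (v - intr.ppy) / intr.fy * depth_m,
            depth_m)


def transform(rotation, translation, p):
    """Rigid transform R @ p + t, with R given as a 3x3 row-major nested list."""
    return tuple(sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
                 for i in range(3))


def project(intr, p):
    """3D point in the color camera's frame -> color pixel (u, v)."""
    x, y, z = p
    return (x / z * intr.fx + intr.ppx, y / z * intr.fy + intr.ppy)


def depth_to_color_pixel(depth_intr, color_intr, rotation, translation, u, v, depth_m):
    """Where a depth pixel lands in the color image (pinhole model, no distortion)."""
    point = deproject(depth_intr, u, v, depth_m)
    return project(color_intr, transform(rotation, translation, point))
```

Because the color camera is offset from the depth camera, the correction depends on depth: nearby points shift more in the color image than distant ones.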

Pro Tip 1: The driver provides a UV map that maps each depth pixel to a location in the RGB image, accounting for the difference in image sizes. This lets you match depth to RGB data points more accurately.
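In pyrealsense2 this mapping is available through `rs.pointcloud`: after `map_to(color_frame)` and `calculate(depth_frame)`, the resulting points carry one normalized texture (u, v) coordinate per depth pixel. The hypothetical helper below converts such a coordinate into a color-pixel index and rejects coordinates that fall outside the RGB image, since the two cameras' fields of view differ. This is a sketch assuming valid coordinates lie in [0, 1); `uv_for_center_pixel` assumes a connected camera and is not called automatically.

```python
import math


def uv_to_color_pixel(u: float, v: float, width: int, height: int):
    """Map a normalized texture coordinate to an integer (col, row) in the color image.

    Returns None when the depth pixel has no corresponding color pixel.
    """
    col = math.floor(u * width)
    row = math.floor(v * height)
    if 0 <= col < width and 0 <= row < height:
        return col, row
    return None


def uv_for_center_pixel():
    """Hardware glue (assumes a connected D400 camera); call it explicitly."""
    import numpy as np
    import pyrealsense2 as rs

    pipeline = rs.pipeline()
    pipeline.start()
    try:
        frames = pipeline.wait_for_frames()
        depth, color = frames.get_depth_frame(), frames.get_color_frame()
        pc = rs.pointcloud()
        pc.map_to(color)
        points = pc.calculate(depth)
        # One (u, v) pair per depth pixel, stored row by row
        uv = np.asanyarray(points.get_texture_coordinates()).view(np.float32).reshape(-1, 2)
        center = (depth.get_height() // 2) * depth.get_width() + depth.get_width() // 2
        return uv_to_color_pixel(float(uv[center, 0]), float(uv[center, 1]),
                                 color.get_width(), color.get_height())
    finally:
        pipeline.stop()
```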

Pro Tip 2: If you are using the D435i (the IMU version), use the timestamps from the images and the IMU to synchronize the two sensors. If you use system time (from your computer), there will be more error, partly due to irregular USB timing.
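A minimal sketch of timestamp-based matching, pairing each camera frame with the nearest IMU sample. On a real D435i, the timestamps would come from each frame's `get_timestamp()` (milliseconds), and `get_frame_timestamp_domain()` reports whether that value comes from the device's hardware clock (`rs.timestamp_domain.hardware_clock`) or the host. The `nearest_sample` helper and its 5 ms tolerance are assumptions for illustration.

```python
import bisect


def nearest_sample(sample_times_ms, frame_time_ms, max_gap_ms=5.0):
    """Index of the IMU sample closest in time to a frame, or None if none is close enough.

    sample_times_ms must be sorted ascending (IMU samples arrive in order).
    max_gap_ms is an assumed tolerance, not an SDK value.
    """
    i = bisect.bisect_left(sample_times_ms, frame_time_ms)
    # The closest sample is either just before or just after the insertion point
    candidates = [j for j in (i - 1, i) if 0 <= j < len(sample_times_ms)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(sample_times_ms[j] - frame_time_ms))
    if abs(sample_times_ms[best] - frame_time_ms) > max_gap_ms:
        return None
    return best
```

Binary search keeps the lookup cheap even at IMU rates of several hundred samples per second.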

Pro Tip 3: The cameras have Depth Presets. These are profiles that optimize various settings for different conditions, such as High Density or High Accuracy.
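A sketch of selecting a preset from Python, assuming a D400 camera: presets are set through the depth sensor's `rs.option.visual_preset` option, and `get_option_value_description()` returns a readable name for each value. Available presets vary by model, so the hypothetical `find_preset_index` helper looks one up by name instead of hard-coding a number. `apply_preset_on_camera` is also hypothetical and is not called automatically.

```python
def find_preset_index(descriptions, wanted):
    """Position of the preset whose name matches `wanted` (case-insensitive), or None."""
    wanted = wanted.strip().lower()
    for index, name in enumerate(descriptions):
        if name.strip().lower() == wanted:
            return index
    return None


def apply_preset_on_camera(preset_name="High Accuracy"):
    """Hardware glue (assumes a connected D400 camera); call it explicitly."""
    import pyrealsense2 as rs

    pipeline = rs.pipeline()
    profile = pipeline.start()
    try:
        sensor = profile.get_device().first_depth_sensor()
        rng = sensor.get_option_range(rs.option.visual_preset)
        first = int(rng.min)
        # Ask the sensor for the human-readable name of every preset value
        names = [sensor.get_option_value_description(rs.option.visual_preset, value)
                 for value in range(first, int(rng.max) + 1)]
        index = find_preset_index(names, preset_name)
        if index is not None:
            sensor.set_option(rs.option.visual_preset, first + index)
        return names
    finally:
        pipeline.stop()
```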

Pro Tip 4: Make sure your exposure is set correctly. If you are using auto exposure, try changing the Mean Intensity Set Point. This setting is not next to the exposure setting but under AE Control, which is not the most obvious place.

If you want to use manual exposure, the settings to adjust are the Exposure setpoint and the Gain constant. Start with the exposure setpoint, then adjust the gain.
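For manual exposure from code, the depth sensor exposes `rs.option.enable_auto_exposure`, `rs.option.exposure`, and `rs.option.gain`, and `get_option_range()` reports each option's min, max, and step. The sketch below clamps requested values into those ranges before applying them, setting exposure first and then gain as suggested above. `clamp_to_range` and `set_manual_exposure_on_camera` are hypothetical names, and the 8500 / 16 starting values are assumptions to tune from, not recommendations.

```python
def clamp_to_range(value, option_range):
    """Clamp `value` into [min, max] of an option range and snap it to the range's step."""
    lo, hi, step = option_range.min, option_range.max, option_range.step
    value = min(max(value, lo), hi)
    if step > 0:
        value = lo + round((value - lo) / step) * step
    return min(value, hi)


def set_manual_exposure_on_camera(exposure=8500, gain=16):
    """Hardware glue (assumes a connected D400 camera); call it explicitly."""
    import pyrealsense2 as rs

    pipeline = rs.pipeline()
    profile = pipeline.start()
    try:
        sensor = profile.get_device().first_depth_sensor()
        sensor.set_option(rs.option.enable_auto_exposure, 0)  # switch to manual
        # Exposure first, then gain
        for option, requested in ((rs.option.exposure, exposure), (rs.option.gain, gain)):
            sensor.set_option(option, clamp_to_range(requested, sensor.get_option_range(option)))
            print(option, "->", sensor.get_option(option))
    finally:
        pipeline.stop()
```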

You might also want to see this whitepaper for more methods of tuning the cameras for better performance.

Visual Odometry & SLAM (T265)

Visual odometry is the generic term for estimating how far you have moved using a camera. This is as opposed to “standard” odometry from sensors such as wheel encoders, or inertial odometry from an IMU.
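Whatever the sensor, odometry works by chaining small relative motions into a global pose. A minimal 2D sketch, assuming each step reports a motion (dx, dy) and a heading change dθ in the robot's own frame. The step format and function names are hypothetical.

```python
import math


def compose(pose, step):
    """Apply a relative motion (dx, dy, dtheta), expressed in the robot frame, to a global pose."""
    x, y, theta = pose
    dx, dy, dtheta = step
    # Rotate the body-frame translation into the world frame, then add it
    x += dx * math.cos(theta) - dy * math.sin(theta)
    y += dx * math.sin(theta) + dy * math.cos(theta)
    theta = (theta + dtheta + math.pi) % (2 * math.pi) - math.pi  # wrap to [-pi, pi)
    return (x, y, theta)


def integrate(steps, start=(0.0, 0.0, 0.0)):
    """Chain a sequence of relative motions into a final pose."""
    pose = start
    for step in steps:
        pose = compose(pose, step)
    return pose
```

Each step is applied as a translation followed by a rotation. Moving 1 m and turning 90°, then moving another 1 m, ends at (1, 1) facing +y. Any small error in a step is carried into every later pose, which is why odometry drifts and why loop closure helps.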

The RealSense T265 is a tracking camera designed specifically for visual odometry and SLAM (it has a wider field of view and does not use infrared light). It can run SLAM onboard, including loop closure. However, this camera cannot return RGB images (it has no RGB camera), and its depth output is not as good as that of the D400 series (and can be a little trickier to get).

Using both a RealSense D435i and a RealSense T265 can provide both the maps and better-quality visual odometry for a full SLAM system: the D435i is used for mapping and the T265 for tracking.
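To combine the two, each point measured by the D435i is moved into the world frame using the T265's current pose. In pyrealsense2 the pose comes from `frames.get_pose_frame().get_pose_data()`, whose `translation` has x, y, z fields and whose `rotation` is a quaternion with x, y, z, w fields. The T265 pose frame and the D435i camera frame do not share the same axes, so a real rig also needs a fixed camera-to-camera extrinsic from your mounting; this sketch assumes that has already been applied. The function names are hypothetical.

```python
def quat_rotate(q, v):
    """Rotate vector v by unit quaternion q = (x, y, z, w)."""
    qx, qy, qz, qw = q
    vx, vy, vz = v
    # t = 2 * cross(q_vec, v)
    tx = 2.0 * (qy * vz - qz * vy)
    ty = 2.0 * (qz * vx - qx * vz)
    tz = 2.0 * (qx * vy - qy * vx)
    # v' = v + w * t + cross(q_vec, t)
    return (
        vx + qw * tx + (qy * tz - qz * ty),
        vy + qw * ty + (qz * tx - qx * tz),
        vz + qw * tz + (qx * ty - qy * tx),
    )


def to_world(translation, rotation, point):
    """Map a point from the tracked camera frame into the world frame: R * p + t."""
    rx, ry, rz = quat_rotate(rotation, point)
    tx, ty, tz = translation
    return (rx + tx, ry + ty, rz + tz)
```

Applying `to_world` to every valid depth point in each frame, using the pose captured at the same time, is the core of building a map from the pair.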

Software

Intel provides the RealSense SDK 2.0 library for using the RealSense cameras. It is open source and works on Mac, Windows, Linux, and Android. There are also ROS and OpenCV wrappers.

Click here for the developers page with the SDK.

The SDK (software development kit) includes a viewer that lets you view images, record images, change settings, and update the firmware.

Pro Tip 5: When designing your system, spend some time in the viewer looking at the camera and depth images so you can compare different mounting angles, heights, and so on.

The RealSense SDK also has a few post-processing filters that run on your computer to improve the returned depth map. You can turn these on and off in the viewer. They include:

- Decimation Filter

- Spatial Edge-Preserving Filter

- Temporal Filter

- Holes Filling Filter
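The same filters are available in Python as `rs.decimation_filter`, `rs.spatial_filter`, `rs.temporal_filter`, and `rs.hole_filling_filter`, each with a `process(frame)` method. This sketch chains them in the order Intel's post-processing guidance commonly suggests: decimation, then spatial and temporal filtering in the disparity domain (via `rs.disparity_transform`), then hole filling. Treat that order as a starting point to check in the viewer. `apply_filters` and `filter_width_on_camera` are hypothetical names.

```python
def apply_filters(frame, filters):
    """Run a depth frame through a sequence of post-processing filters, in order."""
    for f in filters:
        frame = f.process(frame)
    return frame


def filter_width_on_camera(num_frames=30):
    """Hardware glue (assumes a connected D400 camera); call it explicitly."""
    import pyrealsense2 as rs

    chain = [
        rs.decimation_filter(),
        rs.disparity_transform(True),   # depth -> disparity
        rs.spatial_filter(),
        rs.temporal_filter(),
        rs.disparity_transform(False),  # disparity -> depth
        rs.hole_filling_filter(),
    ]
    pipeline = rs.pipeline()
    pipeline.start()
    try:
        filtered = None
        for _ in range(num_frames):  # the temporal filter works across successive frames
            depth = pipeline.wait_for_frames().get_depth_frame()
            filtered = apply_filters(depth, chain)
        return filtered.as_video_frame().get_width()  # decimation lowers the resolution
    finally:
        pipeline.stop()
```

Order matters: each filter sees the output of the previous one, so swapping filters changes the result.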

Within ROS there is a realsense-ros package that provides a wrapper for working with the cameras in ROS and lets you view images and other data in RVIZ.

ROS RealSense Occupancy Map package is available as an experimental feature in a separate branch of the RealSense git repo. This uses both the D400 and T265 cameras for creating the map.

For SLAM with just the D435i sensor, see here.

Pro Tip 6: You can use multiple T265 sensors for better accuracy. For example, with one sensor pointed forward and another backward, you can feed the confidence values from each sensor into a filter.
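A minimal sketch of the idea: it assumes each T265's position has already been transformed into a common frame, and it uses each pose's `tracker_confidence` (0 = failed up to 3 = high in pyrealsense2) as a weight. A confidence-weighted average is only the simplest possible "filter"; a Kalman filter would be the more usual choice. `fuse_positions` is a hypothetical helper.

```python
def fuse_positions(estimates):
    """Confidence-weighted average of position estimates.

    estimates: iterable of ((x, y, z), confidence) pairs, confidence in 0..3.
    Returns None if no estimate has non-zero confidence.
    """
    total = 0.0
    acc = [0.0, 0.0, 0.0]
    for position, confidence in estimates:
        if confidence <= 0:
            continue  # tracking failed on this camera; ignore it
        total += confidence
        for i in range(3):
            acc[i] += confidence * position[i]
    if total == 0.0:
        return None
    return tuple(a / total for a in acc)
```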

I know this is starting to sound like a sales pitch.

Pro Tip 7: If you have multiple cameras, you can query each one's serial number to know which camera is which.
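A sketch of telling cameras apart by serial number: `rs.context().query_devices()` lists the connected devices, `get_info(rs.camera_info.serial_number)` reads each serial, and `config.enable_device(serial)` binds a pipeline to one specific camera. The role table, its placeholder serials, and the `check_cameras` / `start_by_role` helpers are hypothetical.

```python
# Hypothetical role table: replace the placeholders with your cameras' serial numbers
ROLES = {"front": "SERIAL_FRONT", "rear": "SERIAL_REAR"}


def check_cameras(connected_serials, roles):
    """Return (roles whose camera is missing, connected serials not in the table)."""
    connected = set(connected_serials)
    missing = sorted(role for role, serial in roles.items() if serial not in connected)
    unknown = sorted(connected - set(roles.values()))
    return missing, unknown


def start_by_role(roles=ROLES):
    """Hardware glue (assumes connected cameras); call it explicitly."""
    import pyrealsense2 as rs

    serials = [d.get_info(rs.camera_info.serial_number) for d in rs.context().query_devices()]
    missing, unknown = check_cameras(serials, roles)
    print("missing:", missing, "unrecognized:", unknown)
    pipelines = {}
    for role, serial in roles.items():
        if role in missing:
            continue
        config = rs.config()
        config.enable_device(serial)  # this pipeline only talks to this camera
        pipeline = rs.pipeline()
        pipeline.start(config)
        pipelines[role] = pipeline
    return pipelines  # caller is responsible for calling stop() on each
```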

Also, if you remove the little connector at the top of the camera, you can wire and chain multiple cameras together to synchronize them. (This should work with SDK 2.0.)

See this whitepaper for working with multiple camera configurations. https://simplecore.intel.com/realsensehub/wp-content/uploads/sites/63/Multiple_Camera_WhitePaper04.pdf
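Once the cameras are wired together, each one's role is set in software through the depth sensor's `rs.option.inter_cam_sync_mode` option. In the multi-camera whitepaper, 1 designates the master and 2 a slave, with 0 as the default unsynchronized mode. The sketch below assumes that numbering. It also uses a purely hypothetical rule, that the lowest serial number becomes the master, so that the assignment is repeatable.

```python
MASTER, SLAVE = 1, 2  # inter_cam_sync_mode values, assumed from Intel's whitepaper


def sync_modes(serials):
    """Hypothetical rule: the lowest serial number is the master, all others are slaves."""
    ordered = sorted(serials)
    return {s: (MASTER if i == 0 else SLAVE) for i, s in enumerate(ordered)}


def configure_sync_on_cameras():
    """Hardware glue (assumes cameras connected with a sync cable); call it explicitly."""
    import pyrealsense2 as rs

    devices = list(rs.context().query_devices())
    modes = sync_modes(d.get_info(rs.camera_info.serial_number) for d in devices)
    for d in devices:
        mode = modes[d.get_info(rs.camera_info.serial_number)]
        d.first_depth_sensor().set_option(rs.option.inter_cam_sync_mode, mode)
```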

Pro Tip 8: Invalid (“infinite”) depth points, such as points too close to or too far from the sensor, have a depth value of 0. This is good to know for filtering.
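With NumPy, for example, the zeros can be masked out before computing statistics such as the distance to the nearest obstacle. This is a sketch and both helpers are hypothetical. On a real camera, the depth scale (meters per raw z16 unit) comes from `depth_sensor.get_depth_scale()`; the 0.001 used in the test is only a typical D400 value.

```python
import numpy as np


def nearest_valid_distance(depth_image, depth_scale):
    """Distance in meters to the closest valid pixel, ignoring zeros; None if all are invalid."""
    valid = depth_image[depth_image > 0]  # 0 means no depth for that pixel
    if valid.size == 0:
        return None
    return float(valid.min()) * depth_scale


def invalid_fraction(depth_image):
    """Fraction of pixels with no depth (value 0)."""
    return np.count_nonzero(depth_image == 0) / depth_image.size
```

Without the mask, `depth_image.min()` would simply return 0 whenever any pixel is invalid, making every obstacle look like it is touching the camera.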

Here are two code snippets that Daniel provided for using the cameras. The first one displays the color and depth images using Python. The second, also in Python, additionally uses OpenCV to detect blobs in a thresholded depth image.

```python
## License: Apache 2.0. See LICENSE file in root directory.
## Copyright(c) 2015-2017 Intel Corporation. All Rights Reserved.

###############################################
## Open CV and Numpy integration ##
###############################################

import pyrealsense2 as rs
import numpy as np
import cv2

cv2.namedWindow('RealSense', cv2.WINDOW_AUTOSIZE)

# Configure depth and color streams (640x480 at 30 fps)
pipeline = rs.pipeline()
config = rs.config()
config.enable_stream(rs.stream.depth, 640, 480, rs.format.z16, 30)
config.enable_stream(rs.stream.color, 640, 480, rs.format.bgr8, 30)

# Start streaming
pipeline.start(config)

try:
    while True:
        # Wait for a coherent pair of frames: depth and color
        frames = pipeline.wait_for_frames()
        depth_frame = frames.get_depth_frame()
        color_frame = frames.get_color_frame()
        if not depth_frame or not color_frame:
            continue

        # Convert images to numpy arrays
        depth_image = np.asanyarray(depth_frame.get_data())
        color_image = np.asanyarray(color_frame.get_data())

        # Apply colormap on depth image (image must be converted to 8-bit per pixel first)
        scaled_depth = cv2.convertScaleAbs(depth_image, alpha=0.08)
        depth_colormap = cv2.applyColorMap(scaled_depth, cv2.COLORMAP_JET)

        # Stack both images horizontally (both are 480 rows high)
        images = np.hstack((color_image, depth_colormap))

        # Show images; press Esc (key code 27) to quit
        cv2.imshow('RealSense', images)
        k = cv2.waitKey(1) & 0xFF
        if k == 27:
            break
finally:
    # Stop streaming and close the window
    pipeline.stop()
    cv2.destroyAllWindows()
```

```python
## License: Apache 2.0. See LICENSE file in root directory.
## Copyright(c) 2015-2017 Intel Corporation. All Rights Reserved.

###############################################
## Open CV and Numpy integration ##
###############################################

import pyrealsense2 as rs
import numpy as np
import cv2


def nothing(args):
    # No-op callback required by cv2.createTrackbar
    pass


def detectBlobs(mask):
    # Set up the SimpleBlobDetector with custom parameters
    params = cv2.SimpleBlobDetector_Params()

    # Change thresholds
    params.minThreshold = 1
    params.maxThreshold = 255

    # Look for white (near) regions; by default the detector finds dark blobs
    params.filterByColor = True
    params.blobColor = 255

    # Filter by Area (in pixels)
    params.filterByArea = True
    params.maxArea = 4000
    params.minArea = 300

    # Filter by Circularity
    params.filterByCircularity = True
    params.minCircularity = 0.1

    # Filter by Convexity
    params.filterByConvexity = True
    params.minConvexity = 0.5

    # Filter by Inertia
    params.filterByInertia = True
    params.minInertiaRatio = 0.1

    detector = cv2.SimpleBlobDetector_create(params)

    # Detect blobs and draw them as red circles sized to each blob
    keypoints = detector.detect(mask)
    im_with_keypoints = cv2.drawKeypoints(mask, keypoints, np.array([]),
                                          (0, 0, 255), cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
    return im_with_keypoints


def thresholdDepth(depth):
    depth = depth.copy()
    depth[depth == 0] = 255  # treat invalid (zero) depth pixels as far away
    threshold_value = cv2.getTrackbarPos('Threshold', 'Truncated Depth')
    # Zero if dist > TH; nearer pixels become 255 (white)
    ret, truncated_depth = cv2.threshold(depth, threshold_value, 255, cv2.THRESH_BINARY_INV)
    return truncated_depth


cv2.namedWindow('RealSense', cv2.WINDOW_AUTOSIZE)
cv2.namedWindow('Truncated Depth', cv2.WINDOW_AUTOSIZE)
cv2.createTrackbar('Threshold', 'Truncated Depth', 30, 255, nothing)

# Configure depth and color streams (640x480 at 30 fps)
pipeline = rs.pipeline()
config = rs.config()
config.enable_stream(rs.stream.depth, 640, 480, rs.format.z16, 30)
config.enable_stream(rs.stream.color, 640, 480, rs.format.bgr8, 30)

# Start streaming
pipeline.start(config)

try:
    while True:
        # Wait for a coherent pair of frames: depth and color
        frames = pipeline.wait_for_frames()
        depth_frame = frames.get_depth_frame()
        color_frame = frames.get_color_frame()
        if not depth_frame or not color_frame:
            continue

        # Convert images to numpy arrays
        depth_image = np.asanyarray(depth_frame.get_data())
        color_image = np.asanyarray(color_frame.get_data())

        # Apply colormap on depth image (image must be converted to 8-bit per pixel first)
        scaled_depth = cv2.convertScaleAbs(depth_image, alpha=0.08)
        depth_colormap = cv2.applyColorMap(scaled_depth, cv2.COLORMAP_JET)

        # Stack both images horizontally and show them
        images = np.hstack((color_image, depth_colormap))
        cv2.imshow('RealSense', images)

        # Threshold the 8-bit depth at the trackbar value, then detect blobs in it
        truncated_depth = thresholdDepth(scaled_depth)
        truncated_depth = detectBlobs(truncated_depth)
        cv2.imshow('Truncated Depth', truncated_depth)

        # Press Esc (key code 27) to quit
        k = cv2.waitKey(1) & 0xFF
        if k == 27:
            break
finally:
    # Stop streaming and close the windows
    pipeline.stop()
    cv2.destroyAllWindows()
```

I hope you found this informative and can make use of the Pro Tips.

Thank you to Daniel for presenting this information and allowing me to share it. This content is based on his talk. Daniel has also provided the full slide set that can be accessed by clicking here.

Disclaimer: I have not received any funding or free items from Intel.

Liked this article? Take a second to support me on Patreon! This post appeared first on Robots For Roboticists.


Encouraging robot uptake through Europe’s network of Digital Innovation Hubs

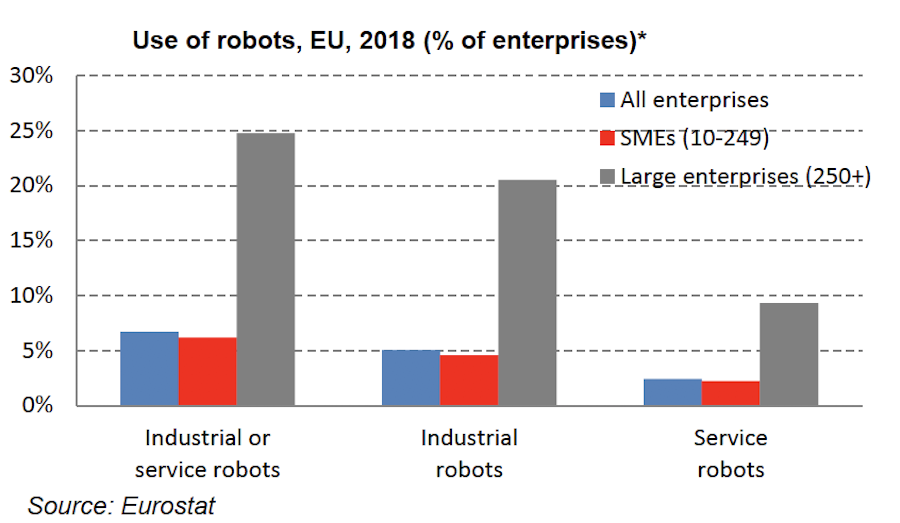

A recent report by the OECD showed that Europe is only capitalising on a fraction (12%) of its digital potential. Most companies limit their digital use to email and internet. Small and Medium Sized Enterprises (SMEs) are particularly slow to integrate digital technologies. The recent Digital Economy and Society Index Report found that “Less than a fifth of companies in the EU-28 are highly digitised“ and that “Use of robots is low on an EU level, with 6.7% of all enterprises using industrial or service robots. The share of large enterprises that use robots is four times higher than the share of SMEs.”

Yet advances in robotics, AI, cloud computing, and big data have the potential to boost productivity and change the industrial landscape.

Digital Innovation Hubs

In an effort to increase uptake of digital technologies in companies, the European Commission promised 500M € in 2016, over five years, to launch Digital Innovation Hubs (DIHs). DIHs are regional one-stop shops that help companies access the latest knowledge, expertise, and technology to prototype and test their own digital innovations, build networks, and receive financing advice and market intelligence.

Key to the success of DIHs is building on core technology infrastructures, so-called Competence Centres (CC), and other existing structures, and linking them at a regional level. The idea is that every company should be able to access at least one DIH within its region. This is especially important since most SMEs admit that they do not know where to start when it comes to integrating digital technologies into their companies.

“The DIHs funded by the EU Commission can boost digitalisation in Europe in an effective way by supporting both the public sector and SMEs to become more competitive in a global market” says Francesco Ferro, CEO of the Spanish SME PAL Robotics.

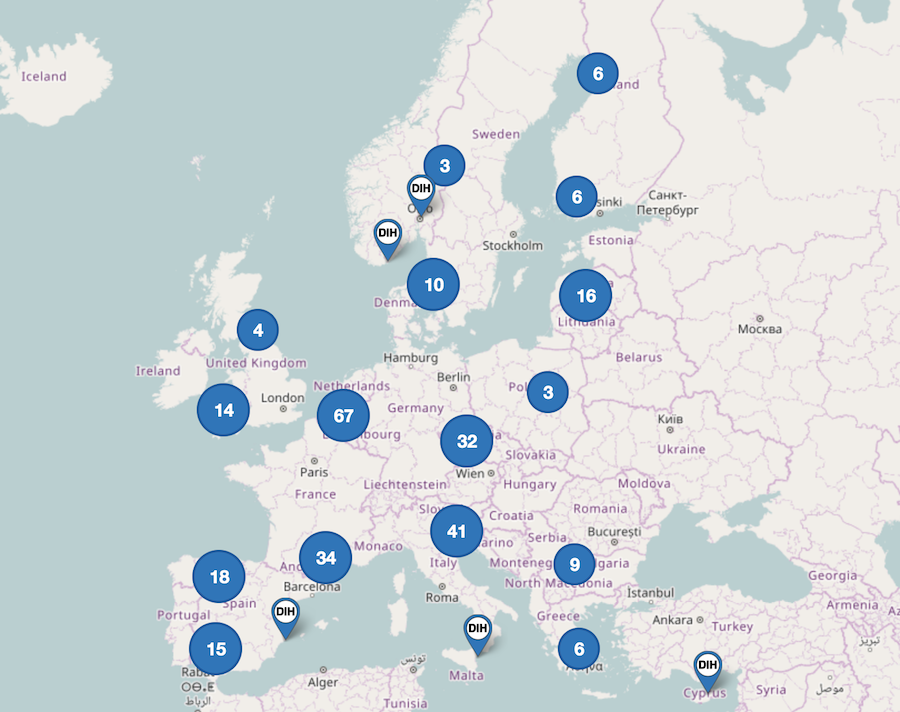

The catalogue of Digital Innovation Hubs, hosted by the Joint Research Centre in Sevilla, currently includes over 290 operational hubs across Europe, with 200 more in preparation. The hubs span the full digital landscape, including robotics, artificial intelligence, big data, high-performance computing and cybersecurity, as well as its applications in healthcare, manufacturing, agriculture and food production, maintenance and inspection.

Focus on Robotics

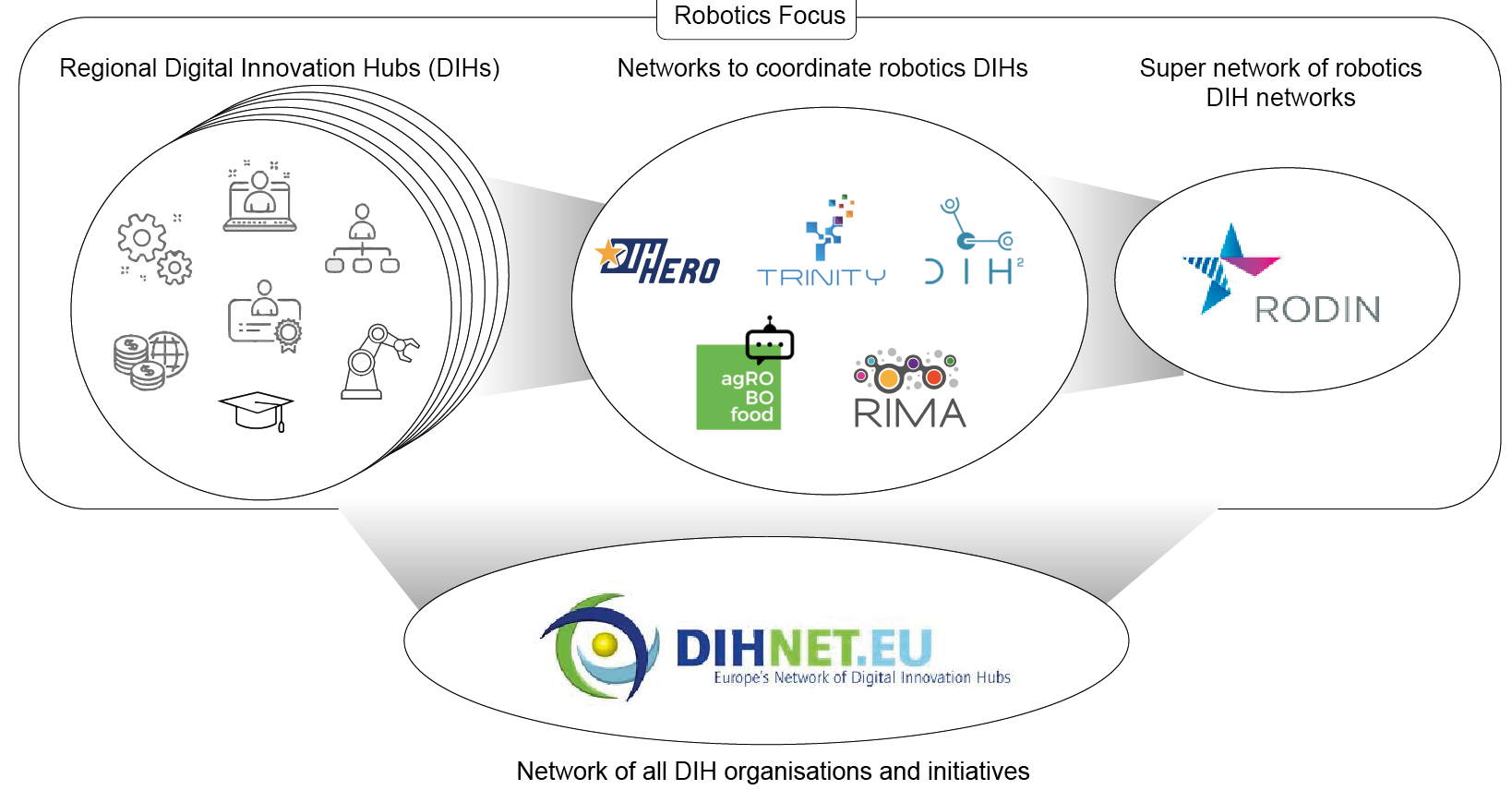

Robotics is central to Europe’s Digital Strategy and 196 of the registered DIHs have identified robotics as an area of expertise. In addition, the European Commission awarded 80M € since 2018 to 5 DIH networks with a robotics focus. Each of these brings together one or two dozen DIHs, with the aim of expanding to even more during the project.

The Horizon 2020 projects Trinity and DIH² focus on Agile production, RIMA promotes the uptake of robotics for inspection and maintenance applications, DIH-HERO facilitates the healthcare robotics sector, and agROBOfood enables the adoption of robotics technologies in the agri-food sector. To coordinate these projects, the Coordination and Support Action (CSA) RODIN started last November with funding of 2M € over five years to cover the whole life-cycle of the network projects. The aim is to present a harmonised interface to the overall landscape of robotics DIHs, coordinate events and calls, share best practice, and connect with similar networks in other areas, such as DIHNET.EU (Next Generation European DIH Network).

Open calls for funding from robotics DIHs

Networks of Networks

DIHNET.EU, the 1M € three-year project launched in November, coordinates the whole network of DIHs in different domains such as robotics, cybersecurity, photonics, advanced manufacturing, artificial intelligence, high-performance-computing, software, big data, agriculture, and more. Think of it as a pan-European network of networks. DIHNET.EU promotes collaboration between DIHs through workshops and events, provides tools and an on-line community platform for DIHs, and is the link to the JRC that maintains the DIH catalogue. The project provides an overview of DIHs to the outside world and helps the European Commission shape DIH-related workshops. To promote the sharing of best practice, DIHNET.EU just launched the Champions Challenge to identify, show and support success stories from mature DIHs that can inspire and guide other DIHs in their developments.

“The DIHNET.EU project is meant to link all relevant initiatives around DIHs together and to create sustainability. Sharing of best practice, creation of awareness and synergies across application sectors, technologies, and regions will help shape a pan-European business ecosystem” says Reinhard Lafrenz, Secretary General of euRobotics.

Robo-Exoticism is the theme for 2019/20 Art, Technology and Culture Colloquiums

Madeline Gannon’s “Robots Are Creatures, Not Things” will be the first work of the Fall 2019-Spring 2020 season of the Colloquiums at UC Berkeley’s Center for New Media at 6pm on Sept 9th.

Dr. Madeline Gannon is a multidisciplinary designer inventing better ways to communicate with machines. In her work, Gannon seeks to blend knowledge from design, robotics, and human-computer interaction to innovate at the intersection of art and technology. Gannon designs her research to engage with wide audiences across scientific and cultural communities: her work has been exhibited at international cultural institutions, published at ACM conferences, and covered by diverse global media outlets. Her 2016 interactive installation, Mimus, even earned her the nickname, “The Robot Whisperer.”

She is a three-time World Economic Forum Cultural Leader and serves as a council member on the World Economic Forum Global Council for IoT, Robotics, & Smart Cities. Gannon holds a Ph.D. in computational design from Carnegie Mellon University, a master’s in architecture from Florida International University, and is a Research Fellow at the Frank-Ratchye STUDIO for Creative Inquiry at Carnegie Mellon University.

Her work “Robots Are Creatures, Not Things” questions how we should coexist with intelligent, autonomous machines. After 50 years of promises and potential, robots are beginning to leave the lab to live in the wild with us. In this lecture, Dr. Madeline Gannon discusses how art and technology are merging to forge new futures for human-robot relations. She shares her work in convincing robots to do things they were never intended to do: from transforming a giant industrial robot into a living, breathing mechanical creature to taming a horde of autonomous robots to behave more like a pack of animals. By pushing the boundaries of human-robot interaction, her work shows that robots can be not only useful but also meaningful additions to our everyday lives.

Founded in 1997, the ATC series is an internationally respected forum for creative ideas. The ATC series, free of charge and open to the public, is coordinated by the Berkeley Center for New Media and has presented over 170 leading artists, writers, and critical thinkers who question assumptions and push boundaries at the forefront of art, technology, and culture including: Vito Acconci, Laurie Anderson, Sophie Calle, Bruno Latour, Maya Lin, Doug Aitken, Pierre Huyghe, Miranda July, Billy Kluver, David Byrne, Gary Hill, and Charles Ray.

Current ATC Director is robotics professor Ken Goldberg, who is behind this season’s “Robo-Exotica” theme as well as being the Director of the CITRIS People and Robots Initiative and head of the AutoLab at UC Berkeley.

In 1920, Karel Čapek coined the term “robot” in a play about mechanical workers organizing a rebellion to defeat their human overlords. A century later, increasing populism, inequality, and xenophobia require us to reconsider our assumptions about labor, trade, political stability, and community. At the same time, advances in artificial intelligence and robotics, fueled by corporations and venture capital, challenge our assumptions about the distinctions between humans and machines. To explore potential linkages between these trends, “Robo-Exoticism” characterizes a range of human responses to AI and robots that exaggerate both their negative and positive attributes and reinforce fears, fantasies, and stereotypes.

“A Century of Art and Technology in the Bay Area” (essay)

Location:

Monday Evenings, 6:30-8:00pm

Osher Auditorium

BAMPFA, Berkeley, CA

More information

Lectures are free and open to the public. Sign up for the ATC Mailing List!


Soft robotics breakthrough manages immune response for implanted devices

Image courtesy of the researchers.

Researchers from the Institute for Medical Engineering and Science (IMES) at MIT; the National University of Ireland Galway (NUI Galway); and AMBER, the SFI Research Centre for Advanced Materials and BioEngineering Research, recently announced a significant breakthrough in soft robotics that could help patients requiring in-situ (implanted) medical devices such as breast implants, pacemakers, neural probes, glucose biosensors, and drug and cell delivery devices.

The implantable medical devices market is currently estimated at approximately $100 billion, with significant growth potential into the future as new technologies for drug delivery and health monitoring are developed. These devices are not without problems, caused in part by the body’s own protection responses. These complex and unpredictable foreign-body responses impair device function and drastically limit the long-term performance and therapeutic efficacy of these devices.

One such foreign body response is fibrosis, a process whereby a dense fibrous capsule surrounds the implanted device, which can cause device failure or impede its function. Implantable medical devices have various failure rates that can be attributed to fibrosis, ranging from 30-50 percent for implantable pacemakers to 30 percent for mammoplasty prosthetics. In the case of biosensors or drug/cell delivery devices, the dense fibrous capsule that can build up around the implanted device can seriously impede its function, with consequences for the patient and costs to the health care system.

A radical new vision for medical devices to address this problem was published in the internationally respected journal, Science Robotics. The study was led by researchers from NUI Galway, IMES, and the SFI research center AMBER, among others. The research describes the use of soft robotics to modify the body’s response to implanted devices. Soft robots are flexible devices that can be implanted into the body.

The transatlantic partnership of scientists has created a tiny, mechanically actuated soft robotic device known as a dynamic soft reservoir (DSR) that has been shown to significantly reduce the build-up of the fibrous capsule by manipulating the environment at the interface between the device and the body. The device uses mechanical oscillation to modulate how cells respond around the implant. In a bio-inspired design, the DSR can change its shape at a microscopic scale through an actuating membrane.

IMES core faculty member, assistant professor at the Department of Mechanical Engineering, and W.M. Keck Career Development Professor in Biomedical Engineering Ellen Roche, the senior co-author of the study, is a former researcher at NUI Galway who won international acclaim in 2017 for her work in creating a soft robotic sleeve to help patients with heart failure. Of this research, Roche says “This study demonstrates how mechanical perturbations of an implant can modulate the host foreign body response. This has vast potential for a range of clinical applications and will hopefully lead to many future collaborative studies between our teams.”

Garry Duffy, professor in anatomy at NUI Galway and AMBER principal investigator, and a senior co-author of the study, adds “We feel the ideas described in this paper could transform future medical devices and how they interact with the body. We are very excited to develop this technology further and to partner with people interested in the potential of soft robotics to better integrate devices for longer use and superior patient outcomes. It’s fantastic to build and continue the collaboration with the Dolan and Roche labs, and to develop a trans-Atlantic network of soft roboticists.”

The first author of the study, Eimear Dolan, lecturer of biomedical engineering at NUI Galway and former researcher in the Roche and Duffy labs at MIT and NUI Galway, says “We are very excited to publish this study, as it describes an innovative approach to modulate the foreign-body response using soft robotics. I recently received a Science Foundation Ireland Royal Society University Research Fellowship to bring this technology forward with a focus on Type 1 diabetes. It is a privilege to work with such a talented multi-disciplinary team, and I look forward to continuing working together.”