Slotless Brushless Servo Motors Improve Machine Performance.

Vision in Challenging Lighting Environments

ABB demonstrates concept of mobile laboratory robot for Hospital of the Future

Smart Gripper for Small Collaborative Robots

World’s first haptic telerobot hand (Tactile Telerobot) to officially launch at first public event at CEATEC 2019 in Japan

Robofill 4.0 – Robot and Gripper-assisted Filling Concept for Customized Bottle Supply

Rapid Launches and Strategic Acquisitions Propel Growth of Warehouse Robotics

30 women in robotics you need to know about – 2019

From Mexican immigrant to MIT, from Girl Power in Latin America to robotics entrepreneurs in Africa and India, the 2019 annual “women in robotics you need to know about” list is here! We’ve featured 150 women so far, from 2013 to 2018, and this time we’re not stopping at 25. We’re featuring 30 badass #womeninrobotics because robotics is growing and there are many new stories to be told.

So, without further ado, here are the 30 Women In Robotics you need to know about – 2019 edition!

Alice Agogino

CEO & CTO – Squishy Robotics

Squishy robots are rapidly deployable mobile sensing robots for disaster rescue, remote monitoring and space exploration, developed from research at the Berkeley Emergent Space Tensegrities (BEST) Lab. Prof. Alice Agogino is the Roscoe and Elizabeth Hughes Professor of Mechanical Engineering, Product Design Concentration Founder and Head Advisor, MEng Program at the University of California, Berkeley, and has a long history of combining research, entrepreneurship and inclusion in engineering. Agogino won the AAAS Lifetime Mentor Award in 2012 and the Presidential Award for Excellence in Science, Mathematics and Engineering Mentoring in 2018.

Danielle Applestone

CEO & CoFounder – Daughters of Rosie

While working at Otherlab, Danielle Applestone developed the Other Machine, a DARPA-funded desktop CNC machine and machine control software suitable for students. The company is now known as Bantam Tools, and was acquired by Bre Pettis. Currently, Applestone is CEO and CoFounder of Daughters of Rosie, on a mission to solve the labor shortage in the U.S. manufacturing industry by getting more women into stable manufacturing jobs with purpose, growth potential, and benefits.

Cindy Bethel

Professor and Billie J. Ball Endowed Professorship in Engineering – Mississippi State University

Prof. Cindy Bethel’s research at MSU ranges from designing social robots for trauma victims to mobile robots for law enforcement and first responders. She focuses on human-robot interaction, human-computer interaction and interface design, robotics, affective computing, and cognitive science. Bethel was an NSF Computing Innovation Postdoctoral Research Fellow (CIFellow) at Yale University, holds the Billie J. Ball Endowed Professorship in Engineering, directs the Social, Therapeutic, and Robotic Systems (STaRS) Lab, and is the 2019 U.S. – Australian Fulbright Senior Scholar at the University of Technology, Sydney.

Sonja Betschart

Co-Founder & Chief Entrepreneurship Officer – WeRobotics

Sonja Betschart is the Co-Founder and Chief Entrepreneurship Officer of WeRobotics, a US/Swiss-based non-profit organization that addresses the Digital Divide through local capacity and inclusive participation in the application of emerging technologies in Africa, Latin America, Asia and Oceania. Betschart is a passionate “Tech for Good” entrepreneur with a longstanding career in SMEs, multinationals and start-ups, including in the drone industry and in digital transformation initiatives. She holds Master’s degrees in both Marketing and SME Management and was voted one of Switzerland’s Digital Shapers in 2018.

Susanne Bieller

General Secretary – International Federation of Robotics (IFR)

Dr. Susanne Bieller is General Secretary of the International Federation of Robotics (IFR), a non-profit organization representing more than 50 manufacturers of industrial robots and national robot associations from over twenty countries. Before that, Dr. Bieller was project manager of the European Robotics Association EUnited Robotics. After completing her PhD in Chemistry, she began her professional career at the European Commission in Brussels, then managed the flat-panel display group at the German Engineering Federation (VDMA) in Frankfurt.

Noramay Cadena

Managing Partner – MiLA Capital

Noramay Cadena is an engineer, entrepreneur, investor, and former nonprofit leader. She’s the Cofounder and Managing Director of Make in LA, an early stage hardware accelerator and venture fund in Los Angeles. Since launching in 2015, Make in LA’s venture fund has invested over a million dollars in seed stage companies that have collectively raised over 25 million dollars and created jobs across the United States and in several other countries. Previously, Cadena worked in aerospace with The Boeing Company, and cofounded the Latinas in STEM Foundation in 2013 to inspire and empower Latinas to pursue and thrive in STEM fields.

Madeline Gannon

Principal Researcher – ATONATON

Madeline Gannon is a multidisciplinary designer inventing better ways to communicate with machines. Her recent works taming giant industrial robots focus on developing new frontiers in human-robot relations. Her interactive installation, Mimus, was awarded a 2017 Ars Electronica STARTS Prize Honorable Mention. She was also named a 2017/2018 World Economic Forum Cultural Leader. She holds a PhD in Computational Design from Carnegie Mellon University, where she explored human-centered interfaces for autonomous fabrication machines. She also holds a Master’s in Architecture from Florida International University.

Colombia Girl Powered Program

Girl Powered – VEX

The Girl Powered Program is a recent initiative from VEX and the Robotics Education and Competition Foundation, showcasing examples of how women can change the world, providing tools to enable girls to succeed, and providing safe spaces for them to do it in. Girl Powered focuses on supporting diverse creative teams, building inclusive environments, and redefining what a roboticist looks like.

Verity Harding

Co-Lead, DeepMind Ethics and Society – DeepMind

Verity Harding is Co-Lead of DeepMind Ethics & Society, a research unit established to explore the real-world impacts of artificial intelligence. The unit has a dual aim: to help technologists put ethics into practice, and to help society anticipate and direct the impact of AI so that it works for the benefit of all. Prior to this, Verity was Head of Security Policy for Google in Europe, and previously the Special Adviser to the Deputy Prime Minister, the Rt Hon Sir Nick Clegg MP, with responsibility for Home Affairs and Justice. She is a graduate of Pembroke College, Oxford University, and was a Michael Von Clemm Fellow at Harvard University. In her spare time, Verity sits on the Board of the Friends of the Royal Academy of Arts.

Lydia Kavraki

Nora Harding Professor – Rice University

Prof. Lydia Kavraki is known for her pioneering work on robot path planning, reflected in her influential book Principles of Robot Motion. A professor of Computer Science and Bioengineering at Rice University, she is the developer of the Probabilistic Roadmap Method (PRM), a sampling-based approach to motion planning that uses randomization to find collision-free paths for robots. She’s also the recipient of numerous accolades, including an ACM Grace Murray Hopper Award, an NSF CAREER Award, a Sloan Fellowship and the ACM Athena Award in 2017/2018.

Dana Kulić

Professor – Monash University

Prof. Dana Kulić develops autonomous systems that can operate in concert with humans, using natural and intuitive interaction strategies while learning from user feedback to improve and individualise operation over long-term use. She serves as the Global Innovation Research Visiting Professor at the Tokyo University of Agriculture and Technology, and the August-Wilhelm Scheer Visiting Professor at the Technical University of Munich. Before coming to Monash, she established the Adaptive Systems Lab at the University of Waterloo, and collaborated with colleagues to establish Waterloo as one of Canada’s leading research centers in robotics.

Jean Liu

President – Didi Chuxing

Jean Liu runs the largest mobility company in China, rapidly innovating in the smart cityscape. A native of China, Liu, 40, studied at Peking University and earned a master’s degree in computer science at Harvard. After a decade at Goldman Sachs, Liu joined Didi in 2014 as chief operating officer. During Liu’s tenure, Didi secured investments from all three of China’s largest internet service companies: Baidu, Alibaba and Tencent. It also bought Uber’s operations in China and has announced a joint venture with Japan’s SoftBank. Liu is outspoken about the need for inclusion and women’s empowerment, and about the role of technology in creating a better society.

Amy Loutfi

Professor at the AASS Research Center, Department of Science and Technology – Örebro University

Sheila McIlraith

Professor – University of Toronto

Malika Meghjani

Assistant Professor – Singapore University of Technology

Cristina Olaverri Monreal

BMVIT Endowed Professorship and Chair for Sustainable Transport Logistics 4.0 – Johannes Kepler University

Wendy Moyle

Program Director – Menzies Health Institute

Yukie Nagai

Project Professor and Director of Cognitive Developmental Robotics Lab – University of Tokyo

Temitope Oladokun

Robotics Trainer – TechieGeeks

Svetlana Potyagaylo

SLAM Algorithm Engineer – Indoor Robotics

Suriya Prabha

Founder & CEO – YouCode

Amanda Prorok

Assistant Professor – University of Cambridge

Ellen Purdy

Director, Emerging Capabilities & Prototyping Initiatives & Analysis – Office of the Assistant Secretary of Defense

Signe Redfield

Engineer – Naval Research Laboratory

Marcela Riccillo

Specialist in Artificial Intelligence & Robotics – Professor Machine Learning & Data Science

Selma Sabanovic

Associate Professor – Indiana University Bloomington

Maria Telleria

Cofounder and CTO – Canvas

Ann Whittaker

Head of People and Culture – Vicarious Surgical

Jinger Zeng

Head of China Ecosystem – Auterion |

Want to keep reading? There are 150 more stories on our 2013 to 2018 lists. Why not nominate someone for inclusion next year! Want to show your support in another fashion? Join the fashionistas with your very own #womeninrobotics tshirt, scarf or mug.

And we encourage #womeninrobotics and women who’d like to work in robotics to join our professional network at https://womeninrobotics.org

Where Is The Industry Leading In Terms Of Autonomous Car Tech?

#295: inVia Robotics: Product-Picking Robots for the Warehouse, with Rand Voorhies

In this episode, Lauren Klein speaks with Dr. Rand Voorhies, co-founder and CTO of inVia Robotics. In a world where consumers expect fast home delivery of a variety of goods, inVia’s mission is to help warehouse workers package diverse sets of products quickly using a system of autonomous mobile robots. Voorhies describes how inVia’s robots pick and deliver boxes or totes of products to and from human workers, eliminating the need for people to walk throughout the warehouse, and how the robots’ actions are optimized.

Rand Voorhies

Rand Voorhies is the CTO and a founder of inVia Robotics, where he leads the engineering team in developing the software which drives their system of autonomous robots. Voorhies received his PhD in computer science from the University of Southern California, where he conducted robotics research with NASA’s Jet Propulsion Laboratory.

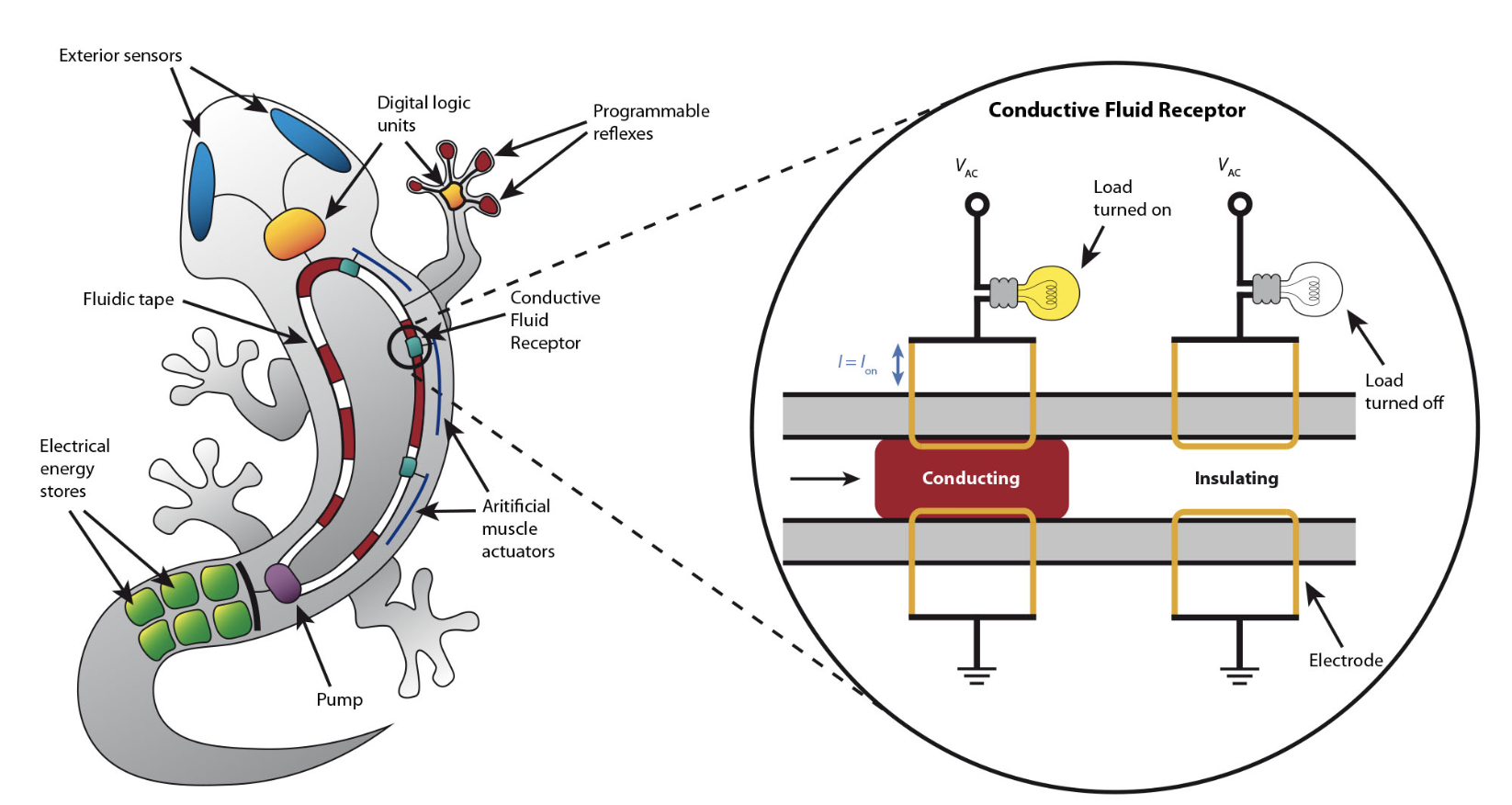

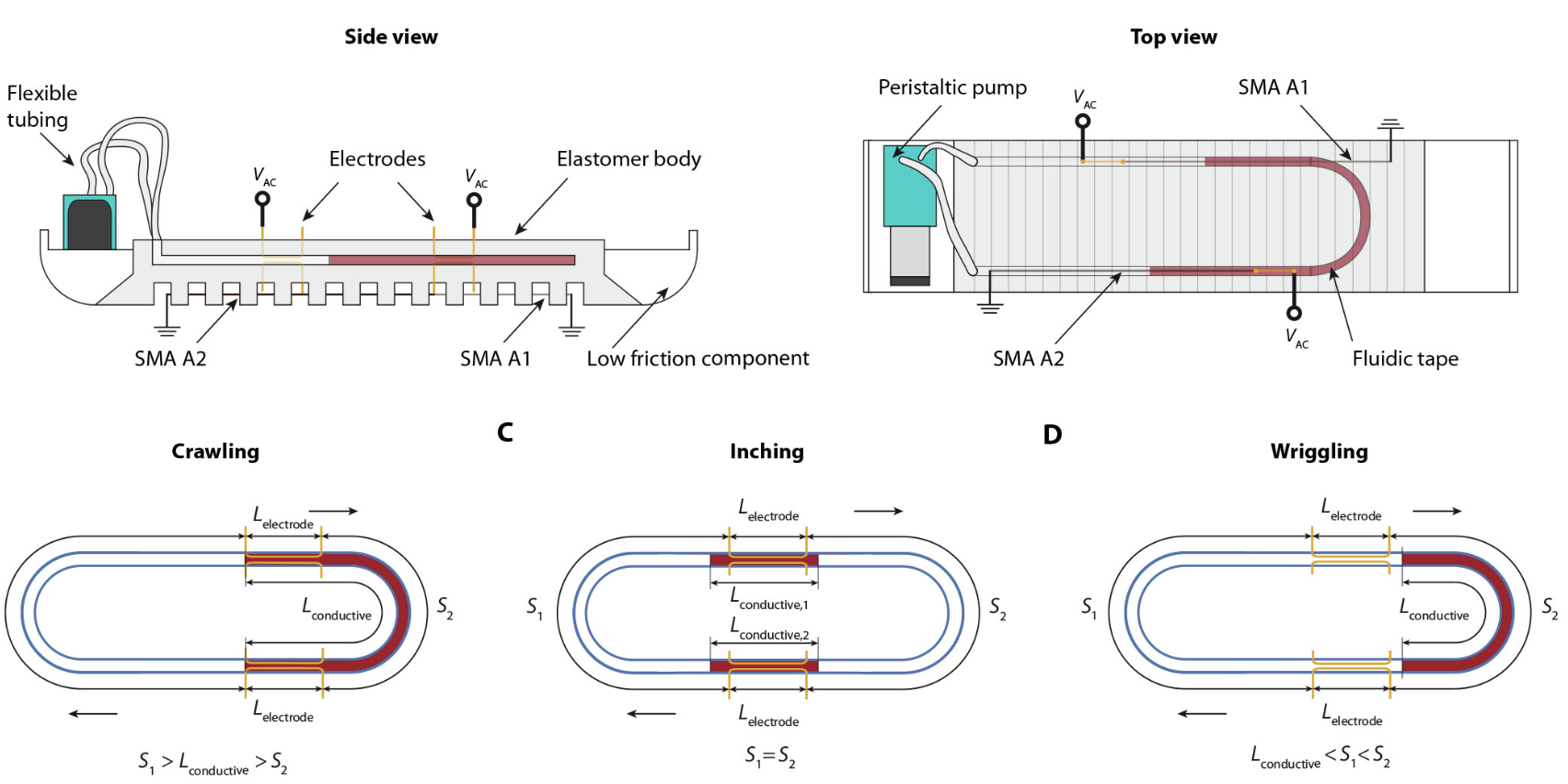

A soft matter computer for soft robots

Our work published recently in Science Robotics describes a new form of computer, ideally suited to controlling soft robots. Our Soft Matter Computer (SMC) is inspired by the way information is encoded and transmitted in the vascular system.

Soft robotics has exploded in popularity over the last decade. In part, this is because robots made with soft materials can easily adapt and conform to their environment. This makes soft robots particularly suited to tasks that require a delicate touch, such as handling fragile materials or operating close to the (human) body.

However, until now, most soft robotic systems have been controlled by conventional electronics, made from hard materials such as silicon. This means putting stiff components into an otherwise soft system, limiting its overall flexibility. Our SMC instead uses only flexible materials, allowing soft robots to retain the many benefits of softness. Here’s how it works.

The building block of our soft matter computer is the conductive fluid receptor (CFR). A CFR consists of two electrodes, placed on opposite sides of a soft tube, parallel to the direction of fluid flow. We inject a pattern of insulating (air, clear) and conducting (saltwater, red) fluids into the CFR. When the saltwater connects the two electrodes, the CFR is switched on. By connecting a soft actuator to a CFR, we have a simple control system.
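As a rough illustration of the switching behaviour described above, here is a minimal Python sketch. It is not the authors’ model: the string encoding of the fluid pattern (`S` for saltwater, `A` for air), the one-segment-per-step flow, the looping pattern, and the `electrode_len` parameter are all assumptions made for this example.

```python
def cfr_output(pattern: str, steps: int, electrode_len: int = 1) -> list[bool]:
    """Simulate the on/off state of one CFR as a fluid pattern flows past it.

    pattern: sequence of fluid segments, 'S' = saltwater (conducting),
             'A' = air (insulating). The pattern is assumed to loop.
    steps: number of time steps; the fluid advances one segment per step.
    electrode_len: how many segments the electrode pair spans (assumed).
    """
    n = len(pattern)
    states = []
    for t in range(steps):
        # Segments currently sitting between the two electrodes.
        window = [pattern[(t + k) % n] for k in range(electrode_len)]
        # The CFR is on whenever saltwater bridges the electrodes.
        states.append("S" in window)
    return states


# An actuator wired to this CFR would be driven during the True steps.
print(cfr_output("SSAASA", steps=6))
# → [True, True, False, False, True, False]
```

In this toy picture, the "program" is simply the order and length of the saltwater and air segments injected into the tube.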

By connecting multiple CFRs together, we can create SMCs that perform more complex calculations. In our paper, we show SMC architectures for performing both analogue and digital computation. This means that in theory, SMCs could be used to implement any algorithm used on an electronic computer.

SMCs can be easily integrated into the body of a soft robot. For example, softworms [1] are powered by two shape memory alloy (SMA) actuators. These actuators contract when current flows through them; by controlling the activation pattern of the two actuators, three distinct gaits can be produced. We show that we can integrate an SMC into the body of a softworm and produce each of the three gaits by varying the programming of the SMC. The video below shows an SMC-Softworm, with the saltwater dyed red.

The SMC is not the first soft matter control system designed for soft robots. Other research groups have developed fluidic [2] and microfluidic [3, 4] control systems. These approaches, however, are limited to controlling fluidic actuators. The SMC outputs an electrical current, meaning it can interface with most soft actuators.

A grand challenge for soft robotics is the development of an autonomous and intelligent robotic system fabricated entirely out of soft materials. We believe that the SMC is an important step towards such a system, while also enabling new possibilities in environmental monitoring, smart prosthetic devices, wearable biosensing and self-healing composites.

You can read more about this work in the Science Robotics paper “A soft matter computer for soft robots”, by M. Garrad, G. Soter, A.T. Conn, H. Hauser, and J. Rossiter.

[1] Umedachi, T., V. Vikas, and B. A. Trimmer. “Softworms: the design and control of non-pneumatic, 3D-printed, deformable robots.” Bioinspiration & biomimetics 11.2 (2016): 025001.

[2] Preston, Daniel J., et al. “Digital logic for soft devices.” Proceedings of the National Academy of Sciences 116.16 (2019): 7750-7759.

[3] Wehner, Michael, et al. “An integrated design and fabrication strategy for entirely soft, autonomous robots.” Nature 536.7617 (2016): 451.

[4] Mahon, Stephen T., et al. “Soft Robots for Extreme Environments: Removing Electronic Control.” 2019 2nd IEEE International Conference on Soft Robotics (RoboSoft). IEEE, 2019.

Deep dynamics models for dexterous manipulation

By Anusha Nagabandi

Dexterous manipulation with multi-fingered hands is a grand challenge in robotics: the versatility of the human hand is as yet unrivaled by the capabilities of robotic systems, and bridging this gap will enable more general and capable robots. Although some real-world tasks (like picking up a television remote or a screwdriver) can be accomplished with simple parallel jaw grippers, there are countless tasks (like functionally using the remote to change the channel or using the screwdriver to drive in a screw) in which dexterity enabled by redundant degrees of freedom is critical. In fact, dexterous manipulation is defined as being object-centric, with the goal of controlling object movement through precise control of forces and motions, something that is not possible without the ability to simultaneously impact the object from multiple directions. For example, using only two fingers to attempt common tasks such as opening the lid of a jar or hitting a nail with a hammer would quickly encounter the challenges of slippage, complex contact forces, and underactuation. Although dexterous multi-fingered hands can indeed enable flexibility and success of a wide range of manipulation skills, many of these more complex behaviors are also notoriously difficult to control: they require finely balancing contact forces, breaking and reestablishing contacts repeatedly, and maintaining control of unactuated objects. Success in such settings requires a sufficiently dexterous hand, as well as an intelligent policy that can endow such a hand with the appropriate control strategy. We study precisely this in our work on Deep Dynamics Models for Learning Dexterous Manipulation.

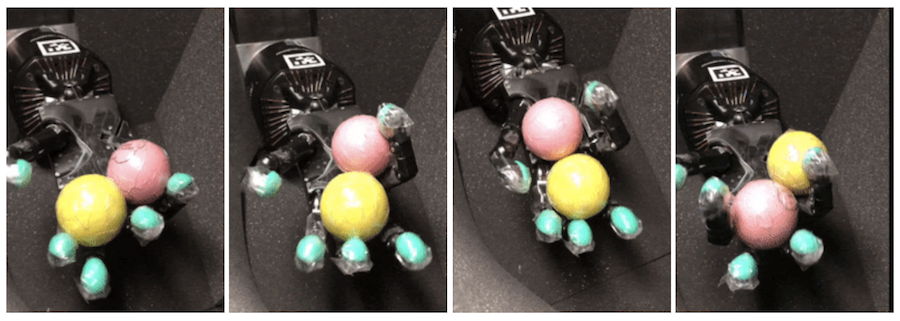

Figure 1: Our approach (PDDM) can efficiently and effectively learn complex dexterous manipulation skills in both simulation and the real world. Here, the learned model is able to control the 24-DoF Shadow Hand to rotate two free-floating Baoding balls in the palm, using just 4 hours of real-world data with no prior knowledge/assumptions of system or environment dynamics.

Common approaches for control include modeling the system as well as the relevant objects in the environment, planning through this model to produce reference trajectories, and then developing a controller to actually achieve these plans. However, the success and scale of these approaches have been restricted thus far due to their need for accurate modeling of complex details, which is especially difficult for such contact-rich tasks that call for precise fine-motor skills. Learning has thus become a popular approach, offering a promising data-driven method for directly learning from collected data rather than requiring explicit or accurate modeling of the world. Model-free reinforcement learning (RL) methods, in particular, have been shown to learn policies that achieve good performance on complex tasks; however, we will show that these state-of-the-art algorithms struggle when a high degree of flexibility is required, such as moving a pencil to follow arbitrary user-specified strokes instead of a fixed one. Model-free methods also require large amounts of data, often making them infeasible for real-world applications. Model-based RL methods, on the other hand, can be much more efficient, but have not yet been scaled up to similarly complex tasks. Our work aims to push the boundary on this task complexity, enabling a dexterous manipulator to turn a valve, reorient a cube in-hand, write arbitrary motions with a pencil, and rotate two Baoding balls around the palm. We show that our method of online planning with deep dynamics models (PDDM) addresses both of the aforementioned limitations: improvements in learned dynamics models, together with improvements in online model-predictive control, can indeed enable efficient and effective learning of flexible contact-rich dexterous manipulation skills, even on a 24-DoF anthropomorphic hand in the real world, using ~4 hours of purely real-world data to coordinate multiple free-floating objects.

Method Overview

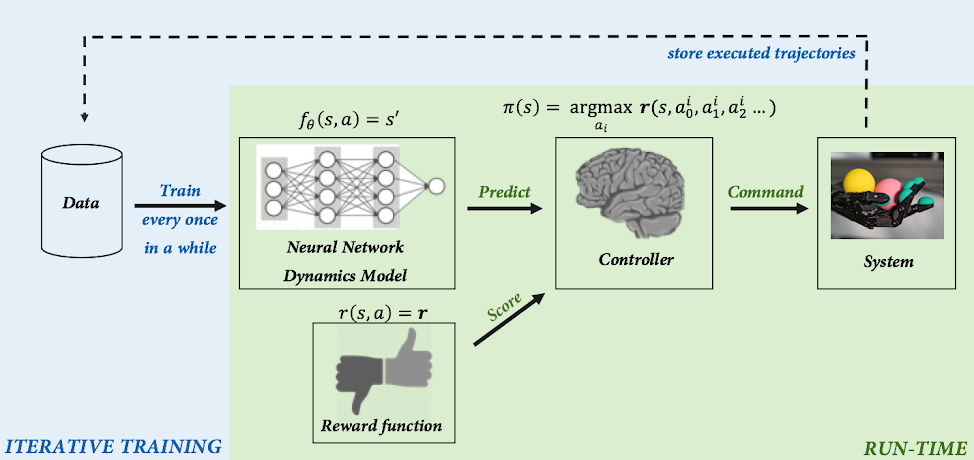

Figure 2: Overview of our PDDM algorithm for online planning with deep dynamics models.

Learning complex dexterous manipulation skills on a real-world robotic system requires an algorithm that is (1) data-efficient, (2) flexible, and (3) general-purpose. First, the method must be efficient enough to learn tasks in just a few hours of interaction, in contrast to methods that utilize simulation and require hundreds of hours, days, or even years to learn. Second, the method must be flexible enough to handle a variety of tasks, so that the same model can be used to perform various different tasks. Third, the method must be general and make relatively few assumptions: It should not require a known model of the system, which can be very difficult to obtain for arbitrary objects in the world.

To this end, we adopt a model-based reinforcement learning approach for dexterous manipulation. Model-based RL methods work by learning a predictive model of the world, which predicts the next state given the current state and action. Such algorithms are more efficient than model-free learners because every trial provides rich supervision: even if the robot does not succeed at performing the task, it can use the trial to learn more about the physics of the world. Furthermore, unlike model-free learning, model-based algorithms are “off-policy,” meaning that they can use any (even old) data for learning. Typically, it is believed that this efficiency of model-based RL algorithms comes at a price: since they must go through this intermediate step of learning the model, they might not perform as well at convergence as model-free methods, which more directly optimize the reward. However, our simulated comparative evaluations show that our model-based method actually performs better than model-free alternatives when the desired tasks are very diverse (e.g., writing different characters with a pencil). This separation of modeling from control allows the model to be easily reused for different tasks – something that is not as straightforward with learned policies.
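To make the idea of learning a predictive model from arbitrary (off-policy) data concrete, here is a minimal sketch on a toy problem. It is not the PDDM implementation: the one-dimensional point mass, the random-action data collection, and the linear least-squares model (standing in for a deep network) are all assumptions chosen to keep the example short.

```python
import numpy as np


def true_step(s, a, dt=0.1):
    """Ground-truth toy dynamics (assumed): a 1-D point mass pushed by force a."""
    pos, vel = s
    return np.array([pos + dt * vel, vel + dt * a])


def collect(n, rng):
    """Gather (state, action, next state) tuples using random actions.

    The data does not come from a good policy; any transitions will do,
    which is what makes model learning off-policy.
    """
    states, actions, next_states = [], [], []
    s = np.zeros(2)
    for _ in range(n):
        a = rng.uniform(-1.0, 1.0)
        s_next = true_step(s, a)
        states.append(s)
        actions.append(a)
        next_states.append(s_next)
        s = s_next
    return np.array(states), np.array(actions)[:, None], np.array(next_states)


def fit_model(states, actions, next_states):
    """Fit s' = [s, a] @ W by least squares (a linear stand-in for f_theta)."""
    inputs = np.hstack([states, actions])
    weights, *_ = np.linalg.lstsq(inputs, next_states, rcond=None)
    return lambda s, a: np.concatenate([s, [a]]) @ weights


rng = np.random.default_rng(0)
S, A, S_next = collect(200, rng)
f = fit_model(S, A, S_next)

# The fitted model predicts the next state for a state-action pair it never saw.
pred = f(np.array([1.0, 0.5]), 0.2)
print(pred, true_step(np.array([1.0, 0.5]), 0.2))
```

Every transition contributes a supervised training example regardless of whether the trial succeeded at any task, which is the source of the data efficiency discussed above.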

Our complete method (Figure 2) consists of learning a predictive model of the environment (denoted $f_\theta(s,a) = s'$), which can then be used to control the robot by planning a course of action at every time step through a sampling-based planning algorithm. Learning proceeds as follows: data is iteratively collected by attempting the task using the latest model, updating the model using this experience, and repeating. Although the basic design of our model-based RL algorithm has been explored in prior work, the particular design decisions that we made were crucial to its performance. We utilize an ensemble of models, which accurately fits the dynamics of our robotic system, and we also utilize a more powerful sampling-based planner that preferentially samples temporally correlated action sequences as well as performs reward-weighted updates to the sampling distribution. Overall, we see effective learning, a nice separation of modeling and control, and an intuitive mechanism for iteratively learning more about the world while simultaneously reasoning at each time step about what to do.
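The planner described above can be sketched on a toy problem as follows. This is one plausible reading, not the authors’ code: the point-mass "ensemble" (hand-written models with slightly perturbed gains, standing in for learned networks), the smoothing filter, and the values of `beta`, `gamma`, `sigma`, and the horizon are all assumptions made for illustration.

```python
import numpy as np

DT = 0.1


def make_ensemble(rng, size=3):
    """Hypothetical stand-in for a learned ensemble: perturbed point-mass models."""
    gains = 1.0 + 0.05 * rng.standard_normal(size)

    def make(gain):
        def f(s, a):  # s: (N, 2) states, a: (N,) actions
            return np.stack([s[:, 0] + DT * s[:, 1], s[:, 1] + DT * gain * a], axis=1)
        return f

    return [make(g) for g in gains]


def reward(s, goal):
    return -np.abs(s[:, 0] - goal)


def plan(models, s0, mean, goal, rng, n_samples=200, beta=0.6, gamma=10.0, sigma=0.5):
    horizon = mean.shape[0]
    # Temporally correlated noise: each step blends fresh noise with the previous step.
    u = sigma * rng.standard_normal((n_samples, horizon))
    noise = np.zeros_like(u)
    noise[:, 0] = beta * u[:, 0]
    for t in range(1, horizon):
        noise[:, t] = beta * u[:, t] + (1 - beta) * noise[:, t - 1]
    actions = np.clip(mean + noise, -1.0, 1.0)
    # Score each candidate sequence by its return averaged over the ensemble.
    returns = np.zeros(n_samples)
    for f in models:
        s = np.tile(s0, (n_samples, 1))
        for t in range(horizon):
            s = f(s, actions[:, t])
            returns += reward(s, goal)
    returns /= len(models)
    # Reward-weighted update of the sampling mean (softmax over returns).
    w = np.exp(gamma * (returns - returns.max()))
    return (w[:, None] * actions).sum(axis=0) / w.sum()


rng = np.random.default_rng(0)
models = make_ensemble(rng)
state, goal = np.array([0.0, 0.0]), 1.0
mean = np.zeros(10)
for _ in range(60):
    mean = plan(models, state, mean, goal, rng)
    a = mean[0]  # execute only the first planned action, then replan
    state = np.array([state[0] + DT * state[1], state[1] + DT * a])  # "real" system
    mean = np.append(mean[1:], 0.0)  # shift the plan forward one step
print("final position:", state[0])
```

Replanning at every step means model errors are corrected as soon as new observations arrive, while the shifted mean carries the previous plan forward as a warm start.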

Baoding Balls

For a true test of dexterity, we look to the task of Baoding balls. Also referred to as Chinese relaxation balls, these two free-floating spheres must be rotated around each other in the palm. Requiring both dexterity and coordination, this task is commonly used for improving finger coordination, relaxing muscular tensions, and recovering muscle strength and motor skills after surgery. Baoding behaviors evolve in the high dimensional workspace of the hand and exhibit contact-rich (finger-finger, finger-ball, and ball-ball) interactions that are hard to reliably capture, either analytically or even in a physics simulator. Successful baoding behavior on physical hardware requires not only learning about these interactions via real world experiences, but also effective planning to find precise and coordinated maneuvers while avoiding task failure (e.g., dropping the balls).

For our experiments, we use the ShadowHand — a 24-DoF five-fingered anthropomorphic hand. In addition to ShadowHand’s inbuilt proprioceptive sensing at each joint, we use a 280×180 RGB stereo image pair that is fed into a separately pretrained tracker to produce 3D position estimates for the two Baoding balls. To enable continuous experimentation in the real world, we developed an automated reset mechanism (Figure 3) that consists of a ramp and an additional robotic arm: The ramp funnels the dropped Baoding balls to a specific position and then triggers the 7-DoF Franka-Emika arm to use its parallel jaw gripper to pick them up and return them to the ShadowHand’s palm to resume training. We note that the entire training procedure is performed using the hardware setup described above, without the aid of any simulation data.

Figure 3: Automated reset procedure, where the Franka-Emika arm gathers and resets the Baoding Balls, in order for the ShadowHand to continue its training.

During the initial phase of learning, the hand repeatedly drops both balls, since that is the most likely outcome before it knows how to solve the task. Later, it learns to keep the balls in the palm to avoid the penalty incurred by dropping them. As learning improves, progress in terms of half-rotations starts to emerge around 30 minutes into training. Getting the balls past this 90-degree orientation is a difficult maneuver, and PDDM spends a moderate amount of time here: to get past this point, notice the transition that must happen (in the 3rd video panel of Figure 4), from first controlling the objects with the pinky, then controlling them indirectly through hand motion, and finally controlling them with the thumb. By ~2 hours, the hand can reliably make 90-degree turns, frequently make 180-degree turns, and sometimes even make turns with multiple rotations.

Figure 4: Training progress on the ShadowHand hardware. From left to right: 0-0.25 hours, 0.25-0.5 hours, 0.5-1.5 hours, ~2 hours.

Simulated Tasks

Although we presented the PDDM algorithm in light of the Baoding task, it is very generic, and we show it below in Figure 5 working on a suite of simulated dexterous manipulation tasks. These tasks illustrate various challenges presented by contact-rich dexterous manipulation tasks — high dimensionality of the hand, intermittent contact dynamics involving hand and objects, prevalence of constraints that must be respected and utilized to effectively manipulate objects, and catastrophic failures from dropping objects from the hand. These tasks not only require precise understanding of the rich contact interactions but also require carefully coordinated and planned movements.

Figure 5: Result of PDDM solving simulated dexterous manipulation tasks. From left to right: 9-DoF D’Claw turning valve to random (green) targets (~20 min of data), 16-DoF D’Hand pulling a weight via the manipulation of a flexible rope (~1 hour of data), 24-DoF ShadowHand performing in-hand reorientation of a free-floating cube to random (shown) targets (~1 hour of data), 24-DoF ShadowHand following desired trajectories with tip of a free-floating pencil (~1-2 hours of data). Note that the amount of data is measured in terms of the real-world equivalent (e.g., 100 data points where each step represents 0.1 seconds would represent 10 seconds’ worth of data).

Model Reuse

Since PDDM learns dynamics models as opposed to task-specific policies or policy-conditioned value functions, a given model can then be reused when planning for different but related tasks. In Figure 6 below, we demonstrate that the model trained for the Baoding task of performing counterclockwise rotations (left) can be repurposed to move a single ball to a goal location in the hand (middle) or to perform clockwise rotations (right) instead of the learned counterclockwise ones.
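A toy sketch of why a dynamics model is reusable: the same `model` function below is planned through with two different reward functions, and only the reward changes between tasks. The point-mass model, the simple random-shooting planner, and both "reach" tasks are assumptions for illustration; they are not the Baoding setup or the PDDM planner.

```python
import numpy as np

DT = 0.1


def model(states, actions):
    """Stand-in for a learned dynamics model (assumed): 1-D point mass."""
    pos, vel = states[:, 0], states[:, 1]
    return np.stack([pos + DT * vel, vel + DT * actions], axis=1)


def plan_action(state, reward_fn, rng, n_samples=500, horizon=10, n_elite=20):
    """Random-shooting planner: the task enters only through reward_fn."""
    actions = rng.uniform(-1.0, 1.0, size=(n_samples, horizon))
    states = np.tile(state, (n_samples, 1))
    returns = np.zeros(n_samples)
    for t in range(horizon):
        states = model(states, actions[:, t])
        returns += reward_fn(states)
    elite = np.argsort(returns)[-n_elite:]  # best-scoring sequences
    return actions[elite, 0].mean()  # average their first actions


def run(reward_fn, seed, steps=20):
    rng = np.random.default_rng(seed)
    state = np.zeros(2)
    for _ in range(steps):
        a = plan_action(state, reward_fn, rng)
        # For simplicity the "real" system here is the model itself.
        state = model(state[None, :], np.array([a]))[0]
    return state


def reach_right(s):
    return -np.abs(s[:, 0] - 1.0)  # task A: move toward +1


def reach_left(s):
    return -np.abs(s[:, 0] + 1.0)  # task B: move toward -1


right = run(reach_right, seed=0)
left = run(reach_left, seed=1)
print(right[0], left[0])  # same model, opposite behaviours
```

A learned policy, by contrast, bakes the task into its weights, so switching from one task to the other would typically require retraining.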

Figure 6: Model reuse on simulated tasks. Left: train model on CCW Baoding task. Middle: reuse that model for go-to single location task. Right: reuse that same model for CW Baoding task.

Flexibility

We study the flexibility of PDDM by experimenting with handwriting, where the base of the hand is fixed and arbitrary characters need to be written through the coordinated movement of the fingers and wrist. Although even writing a fixed trajectory is challenging, we see that writing arbitrary trajectories requires a degree of flexibility and coordination that is exceptionally challenging for prior methods. PDDM’s separation of modeling and task-specific control allows for generalization across behaviors, as opposed to discovering and memorizing the answer to a specific task/movement. In Figure 7 below, we show PDDM’s handwriting results that were trained on random paths for the green dot but then tested in a zero-shot fashion to write numerical digits.

Figure 7: Flexibility of the learned handwriting model, which was trained to follow random paths of the green dot, but shown here to write some digits.

Future Directions

Our results show that PDDM can be used to learn challenging dexterous manipulation tasks, including controlling free-floating objects, agile finger gaits for repositioning objects in the hand, and precise control of a pencil to write user-specified strokes. In addition to testing PDDM on our simulated suite of tasks to analyze various algorithmic design decisions as well as to perform comparisons to other state-of-the-art model-based and model-free algorithms, we also show PDDM learning the Baoding Balls task on a real-world 24-DoF anthropomorphic hand using just a few hours of entirely real-world interaction. Since model-based techniques do indeed show promise on complex tasks, exciting directions for future work would be to study methods for planning at different levels of abstraction to enable success on sparse-reward or long-horizon tasks, as well as to study the effective integration of additional sensing modalities, such as vision and touch, into these models to better understand the world and expand the boundaries of what our robots can do. Can our robotic hand braid someone’s hair? Crack an egg and carefully handle the shell? Untie a knot? Button up all the buttons of a shirt? Tie shoelaces? With the development of models that can understand the world, along with planners that can effectively use those models, we hope the answer to all of these questions will become ‘yes.’

Acknowledgements

This work was done at Google Brain, and the authors are Anusha Nagabandi, Kurt Konolige, Sergey Levine, and Vikash Kumar. The authors would also like to thank Michael Ahn for his frequent software and hardware assistance, and Sherry Moore for her work on setting up the drivers and code for working with our ShadowHand.

This article was initially published on the BAIR blog, and appears here with the authors’ permission.

Complex lattices that change in response to stimuli open a range of applications in electronics, robotics, and medicine

By Leah Burrows

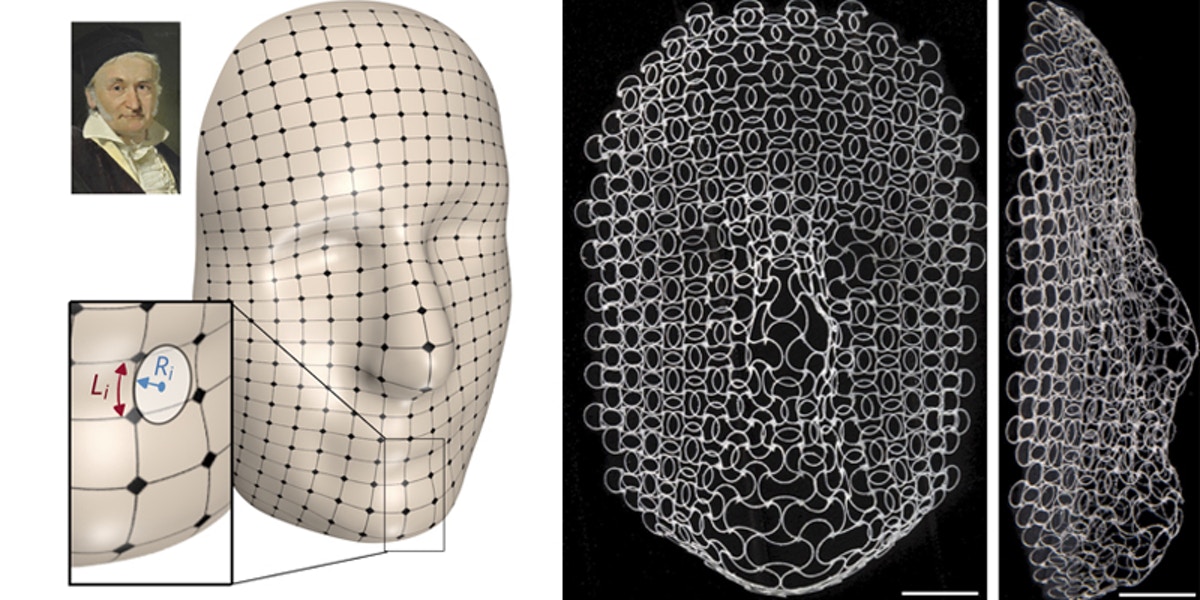

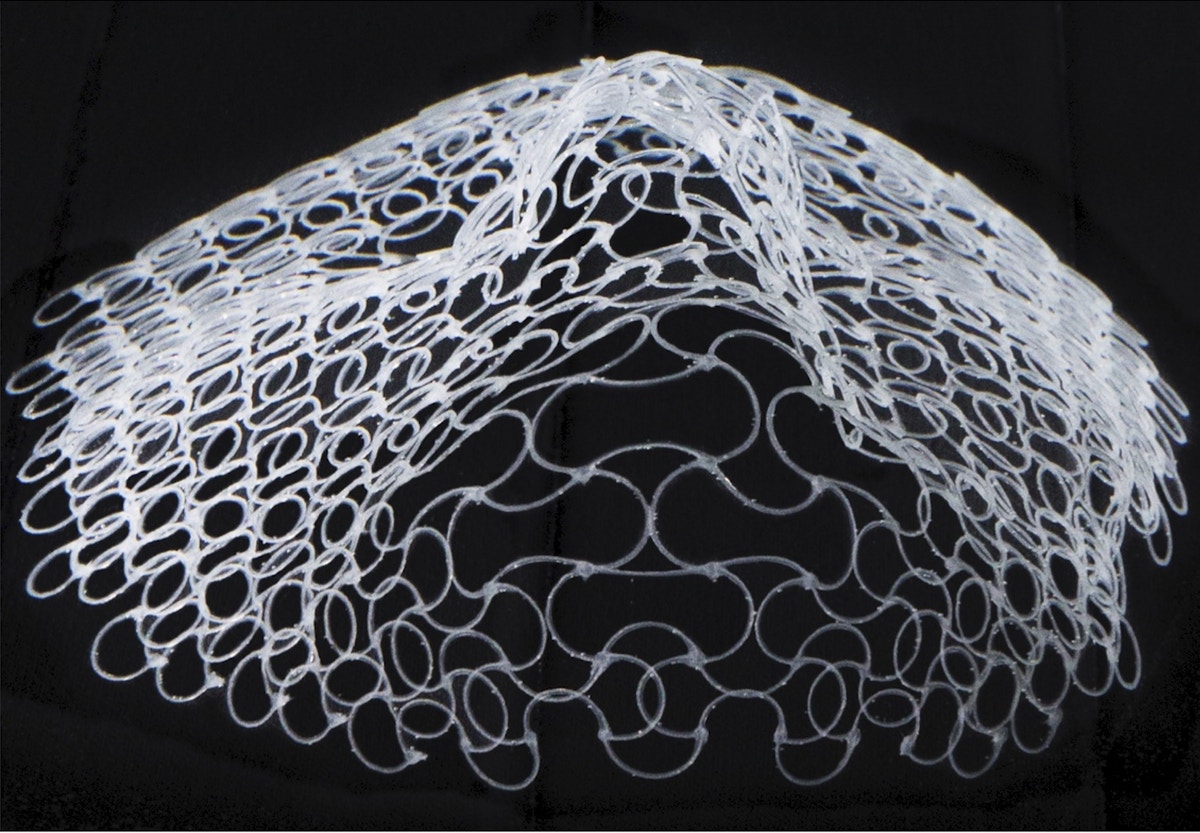

What would it take to transform a flat sheet into a human face? How would the sheet need to grow and shrink to form eyes that are concave into the face and a convex nose and chin that protrude?

How to encode and release complex curves in shape-shifting structures is at the center of research led by the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) and Harvard’s Wyss Institute for Biologically Inspired Engineering.

Over the past decade, theorists and experimentalists have found inspiration in nature as they have sought to unravel the physics, build mathematical frameworks, and develop materials and 3D and 4D-printing techniques for structures that can change shape in response to external stimuli.

However, complex multi-scale curvature has remained out of reach.

Now, researchers have created the most complex shape-shifting structures to date: lattices composed of multiple materials that grow or shrink in response to changes in temperature. To demonstrate their technique, the team printed flat lattices that shape-morph into a frequency-shifting antenna or the face of pioneering mathematician Carl Friedrich Gauss in response to a change in temperature.

The research is published in the Proceedings of the National Academy of Sciences.

“Form both enables and constrains function,” said L. Mahadevan, Ph.D., the de Valpine Professor of Applied Mathematics, and Professor of Physics and Organismic and Evolutionary Biology at Harvard. “Using mathematics and computation to design form, and a combination of multi-scale geometry and multi-material printing to realize it, we are now able to build shape-shifting structures with the potential for a range of functions.”

“Together, we are creating new classes of shape-shifting matter,” said Jennifer A. Lewis, Sc.D., the Hansjörg Wyss Professor of Biologically Inspired Engineering at Harvard. “Using an integrated design and fabrication approach, we can encode complex ‘instruction sets’ within these printed materials that drive their shape-morphing behavior.”

Lewis is also a Core Faculty member of the Wyss Institute.

To create complex and doubly-curved shapes — such as those found on a face — the team turned to a bilayer, multimaterial lattice design.

“The open cells of the curved lattice give it the ability to grow or shrink a lot, even if the material itself undergoes limited extension,” said co-first author Wim M. van Rees, Ph.D., who was a postdoctoral fellow at SEAS and is now an Assistant Professor at MIT.

To achieve complex curves, growing and shrinking the lattice on its own isn’t enough. You need to be able to direct the growth locally.

“That’s where the materials palette that we’ve developed comes in,” said J. William Boley, Ph.D., a former postdoctoral fellow at SEAS and co-first author of the paper. “By printing materials with different thermal expansion behavior in pre-defined configurations, we can control the growth and shrinkage of each individual rib of the lattice, which in turn gives rise to complex bending of the printed lattice both within and out of plane.” Boley is now an Assistant Professor at Boston University.
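To make the idea of directing growth locally more concrete, here is a minimal sketch based on Timoshenko's classical formula for a two-layer strip, a textbook model and not the researchers' own design code. It estimates how strongly a single bilayer rib would bend when its two layers expand by different amounts. The function name, the parameter names, and every numerical value are illustrative assumptions, not figures from the paper.

```python
def bilayer_curvature(alpha_1, alpha_2, delta_t, t_1, t_2, e_1, e_2):
    """Curvature (1/m) of a two-layer strip after a temperature change.

    Timoshenko's bimetal-strip formula:
      alpha_1, alpha_2 : thermal expansion coefficients of each layer (1/K)
      delta_t          : temperature change (K)
      t_1, t_2         : layer thicknesses (m)
      e_1, e_2         : Young's moduli of each layer (Pa)
    """
    m = t_1 / t_2      # thickness ratio
    n = e_1 / e_2      # stiffness ratio
    h = t_1 + t_2      # total thickness
    numerator = 6.0 * (alpha_2 - alpha_1) * delta_t * (1.0 + m) ** 2
    denominator = h * (
        3.0 * (1.0 + m) ** 2 + (1.0 + m * n) * (m ** 2 + 1.0 / (m * n))
    )
    return numerator / denominator


# Hypothetical rib: two 0.5 mm layers of equal stiffness, heated by 100 K.
# Only the expansion mismatch between the layers is varied.
for mismatch in (0.0, 0.5e-4, 1.0e-4):
    kappa = bilayer_curvature(0.0, mismatch, 100.0, 0.5e-3, 0.5e-3, 1e9, 1e9)
    print(f"expansion mismatch {mismatch:.1e} 1/K -> curvature {kappa:.2f} 1/m")
```

In this simplified model a rib with no mismatch stays straight, and swapping the two materials reverses the bending direction, which is the kind of per-rib control the quote describes.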

The researchers used four different materials and programmed each rib of the lattice to change shape in response to a change in temperature. Using this method, they printed a shape-shifting patch antenna, which can change resonant frequencies as it changes shape.

To showcase the ability of the method to create a complex surface with multiscale curvature, the researchers decided to print a human face. They chose the face of the 19th-century mathematician who laid the foundations of differential geometry: Carl Friedrich Gauss. The researchers began with a 2D portrait of Gauss, painted in 1840, and generated a 3D surface using an open-source artificial intelligence algorithm. They then programmed the ribs in the different layers of the lattice to grow and shrink, mapping the curves of Gauss’ face.

This inverse design approach and multimaterial 4D printing method could be extended to other stimuli-responsive materials and be used to create scalable, reversible, shape-shifting structures with unprecedented complexity.

“Application areas include soft electronics, smart fabrics, tissue engineering, robotics and beyond,” said Boley.

“This work was enabled by recent advances in posing and solving geometric inverse problems combined with 4D-printing technologies using multiple materials. Going forward, our hope is that this multi-disciplinary approach for shaping matter will be broadly adopted,” said Mahadevan.

This research was co-authored by Charles Lissandrello, Mark Horenstein, Ryan Truby, and Arda Kotikian. It was supported by the National Science Foundation and Draper Laboratory.

Sample efficient evolutionary algorithm for analog circuit design

By Kourosh Hakhamaneshi

In this post, we share some recent promising results regarding the applications of Deep Learning in analog IC design. While this work targets a specific application, the proposed methods can be used in other black box optimization problems where the environment lacks a cheap/fast evaluation procedure.

So let’s break down how the analog IC design process is usually done, and then how we incorporated deep learning to ease the flow.