DOE’s E-ROBOT Prize targets robots for construction and built environment

Silicon Valley Robotics is pleased to announce that we are a Connector organization for the E-ROBOT Prize and other DOE competitions on the American-Made Network. There is \$2 million USD available in up to ten prizes for Phase One of the E-ROBOT Prize, and \$10 million USD available in Phase Two. Individuals or teams can sign up for the competition; the online platform offers opportunities to connect with potential team members, as do competition events organized by Connector organizations. Please cite Silicon Valley Robotics as your Connector organization when entering the competition.

Silicon Valley Robotics will be hosting E-ROBOT Prize information and connection events as part of our calendar of networking and Construction Robotics Network events. The first event will be on February 3rd at 7pm PST, during our monthly robot ‘show and tell’ event “Bots&Beer”, and you can register here. We’ll be announcing more Construction Robotics Network events very soon.

E-ROBOT stands for Envelope Retrofit Opportunities for Building Optimization Technologies. Phase One of the E-ROBOT Prize looks for solutions in sensing, inspection, mapping or retrofitting in building envelopes, and the deadline is May 19, 2021. Phase Two will focus on holistic rather than individual solutions, i.e., bringing together the full stack of sensing, inspection, mapping and retrofitting.

The overarching goal of E-ROBOT is to catalyze the development of minimally invasive, low-cost, and holistic building envelope retrofit solutions that make retrofits easier, faster, safer, and more accessible for workers. Successful competitors will deliver significant advancements in robot technologies that advance the energy efficiency retrofit industry, and will develop building envelope retrofit technologies that meet the following criteria:

- Holistic: The solution must include mapping, retrofit, sensing, and inspection.

- Low cost: The solution should reduce costs significantly when compared to current state-of-the-art solutions. The target for reduction in costs should be based on a 50% reduction from the baseline costs of a fully implemented solution (not just hardware, software, or labor; the complete fully implemented solution must be considered). If costs are not at the 50% level, there should be a significant energy efficiency gain achieved.

- Minimally invasive: The solution must not require building occupants to vacate the premises or require envelope teardown or significant envelope damage.

- Utilizes long-lasting materials: Retrofit is done with safe, nonhazardous, and durable (30+ year lifespan) materials.

- Completes time-efficient, high-quality installations: The results of the retrofit must meet common industry quality standards and be completed in a reasonable timeframe.

- Provides opportunities to workers: The solution enables a net positive gain in terms of the workforce by bringing high tech jobs to the industry, improving worker safety, enabling workers to be more efficient with their time, improving envelope accessibility for workers, and/or opening up new business opportunities or markets.

The E-ROBOT Prize provides a total of \$5 million in funding, including \$4 million in cash prizes for competitors and an additional \$1 million in awards and support to network partners.

Through this prize, the U.S. Department of Energy (DOE) will stimulate technological innovation, create new opportunities for the buildings and construction workforce, reduce building retrofit costs, create a safer and faster retrofit process, ensure consistent, high-quality installations, enhance construction retrofit productivity, and improve overall energy savings of the built environment.

The E-ROBOT Prize is made up of two phases that will fast-track efforts to identify, develop, and validate disruptive solutions to meet building industry needs. Each phase will include a contest period when participants will work to rapidly advance their solutions. DOE invites anyone, individually or as a team, to compete to transform a conceptual solution into product reality.

American Robotics Becomes First Company Approved by the FAA To Operate Automated Drones Without Human Operators On-Site

The future of robotics research: Is there room for debate?

By Brian Wang, Sarah Tang, Jaime Fernandez Fisac, Felix von Drigalski, Lee Clement, Matthew Giamou, Sylvia Herbert, Jonathan Kelly, Valentin Peretroukhin, and Florian Shkurti

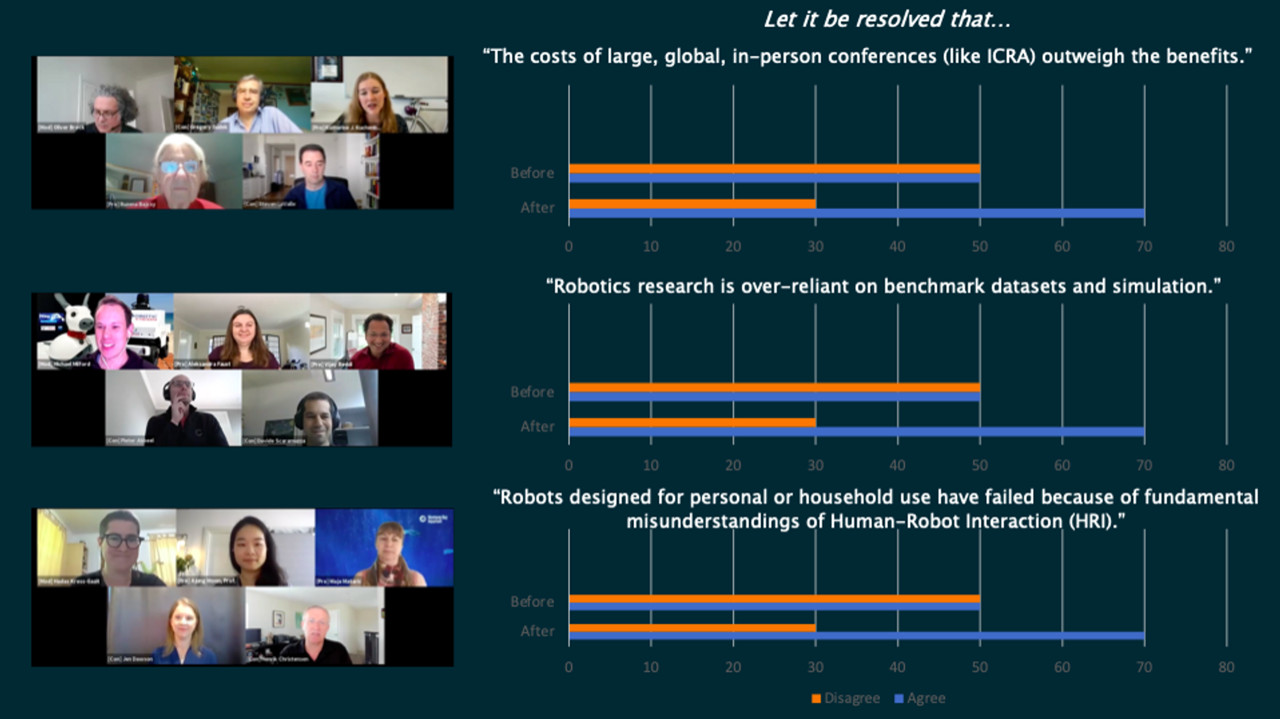

As the field of robotics matures, our community must grapple with the multifaceted impact of our research; in this article, we describe two previous workshops hosting robotics debates and advocate for formal debates to become an integral, standalone part of major international conferences, whether as a plenary session or as a parallel conference track.

As roboticists build increasingly complex systems for applications spanning manufacturing, personal assistive technologies, transportation and others, we face not only technical challenges, but also the need to critically assess how our work can advance societal good. Our rapidly growing and uniquely multidisciplinary field naturally cultivates diverse perspectives, and informal dialogues about our impact, ethical responsibilities, and technologies. Indeed, such discussions have become a cornerstone of the conference experience, but there has been relatively little formal programming in this direction at major technical conferences like the IEEE International Conference on Robotics and Automation (ICRA) and Robotics: Science and Systems (RSS) Conference.

To fill this void, we organized two workshops entitled “Debates on the Future of Robotics Research” at ICRA 2019 and 2020, inspired by a similar workshop at the 2018 International Conference on Machine Learning (ICML). In these workshops, panellists from industry and academia debated key issues in a structured format, with groups of two arguing either “for” or “against” resolutions relating to these issues. The workshops featured three 80-minute sessions modelled roughly after the Oxford Union debate format, consisting of:

- An initial audience poll asking whether they “agree” or “disagree” with the proposed resolution

- Opening statements from all four panellists, alternating for and against

- Moderated discussion including audience questions

- Closing remarks

- A final audience poll

- Panel discussion and audience Q&A

The “Debates” workshops attracted approximately 400 attendees in 2019 and 1100 in 2020 (ICRA 2020 was held virtually in response to the COVID-19 pandemic). In some instances, panellists took positions out of line with their personally held beliefs and nonetheless swayed audience members to their side, with audience polls displaying notable shifts in majority opinion. These results demonstrate the ability of debate to engage our community, elucidate complex issues, and challenge ourselves to adopt new ways of thinking. Indeed, the popularity of the format is on the rise within the robotics community, as other recent workshops have also adopted a debates format, for instance the 2nd Workshop on Closing the Reality Gap in Sim2Real Transfer for Robotics or the Soft Robotics Debates.

Given this positive community response, we argue that major robotics conferences should begin to organize structured debates as a core element of their programming. We believe a debate-style plenary session or parallel track would provide a number of benefits to conference attendees and the wider robotics community:

- Exposure to ideas: Attendees would have more opportunities to be exposed to unfamiliar ideas and perspectives in a well-attended plenary session, while minimizing overlap with technical sessions.

- Equity: Panellists and moderators no longer have to choose between accepting a debate invitation, accepting a workshop speaking engagement, supporting their students’ workshop presentations, and/or hosting a workshop of their own. Some of these conflicting responsibilities disproportionately affect early career researchers.

- Inclusion: Conference organizers have the discretion to provide travel support, enabling panellists and moderators from a wider range of countries, career stages, and backgrounds to participate.

- Impact: A notable Science Robotics article cited debates as a necessary driver of progress towards solving robotics’ grand challenges. By facilitating structured and critical self-reflection, a widely accessible debates plenary could help identify avenues for future work and move the field forward.

The driving force behind such an event should be a diverse debates committee responsible for inviting representatives within and outside academia, with different research interests, at different career stages, and from different parts of the world. The committee will also lead the selection of appropriate debate topics. Historically, our debate propositions revolved around three key questions: “what problems should we solve?”, “how should we solve them?”, and “how do we measure success and progress?”.

Recent high-profile shutterings of several prominent robotics companies testify to the importance of identifying the right problem. How do we transform our research into products that provide value to end-users, while keeping in mind our environmental and economic impact? How can we quickly introspect, learn from, and pivot in response to failures, as well as avoid repeating past mistakes? Our 2020 resolution, “robots designed for personal or household use have failed because of fundamental misunderstandings of Human-Robot Interaction (HRI),” provided an opportunity to discuss such questions.

As a multidisciplinary field drawing on advances in machine learning, computer science, mechanical design, control, optimization, biology, and more, robotics has a wealth of tools at its disposal. A central problem in robotics research is then to identify the right tool for the right problem. Our 2019 debate proposition, “The pervasiveness of deep learning in robotics research is an impediment to gaining scientific insights into robotics problems”, probed the ascendance of data-driven approaches to robotics problems, and attracted a large audience.

In addition to identifying the right problems and the right tools to solve them, it is equally important to discuss how best to evaluate and compare proposed solutions. A central tension in robotics research is how to strike a balance between accessible and replicable benchmark datasets, and real world experiments. Our 2020 debate proposition, “Robotics research is over reliant on benchmark datasets and simulation”, challenged the audience to consider how to rigorously and accessibly evaluate the performance of robotic systems without overfitting to a particular benchmark. Indeed, failures to adequately evaluate robotics algorithms have already led to tragic loss of life, underscoring the importance of establishing common standards for measuring the performance and safety of our technologies in the context of rapid commercialization and real-world deployments. These standards must be informed by regular and rigorous critical reflection on our ethical obligations as researchers, practitioners and policymakers. The fact that our 2019 debate proposition, “Robotics needs a similar level of regulation and certification as other engineering disciplines (e.g., aviation), even if this results in slower technological innovation”, attracted and resonated with a significant audience is evidence of demand for structured discussion of these topics.

As robotics technologies continue to move from the research laboratory to the real world, we believe there should be room for debate at major robotics conferences. Enshrining debate as a core element of major robotics conferences will serve to create opportunities for self-reflection, establish institutional memory, and drive the field forward.

For more information, please see https://roboticsdebates.org/.

Designing customized ‘brains’ for robots

Smooth touchdown: Novel camera-based system for automated landing of drone on a fixed spot

Talking to Machines – New Operating Concepts With Artificial Intelligence

Bio-inspired robotics: Learning from dragonflies

Behind those dancing robots, scientists had to bust a move

Best deep CNN architectures and their principles: from AlexNet to EfficientNet

Squid-inspired robot swims with nature’s most efficient marine animals

A MiR500 Robot Lowers Transport Costs at Schneider Electric

How do you know where a drone is flying without a GPS signal?

Self-supervised learning of visual appearance solves fundamental problems of optical flow

Flying insects as inspiration to AI for small drones

How do honeybees land on flowers or avoid obstacles? One would expect such questions to be mostly of interest to biologists. However, the rise of small electronics and robotic systems has also made them relevant to robotics and Artificial Intelligence (AI). For example, small flying robots are extremely restricted in terms of the sensors and processing that they can carry onboard. If these robots are to be as autonomous as the much larger self-driving cars, they will have to use an extremely efficient type of artificial intelligence – similar to the highly developed intelligence possessed by flying insects.

Optical flow

One of the main tricks up the insect’s sleeve is the extensive use of ‘optical flow’: the way in which objects move in their view. They use it to land on flowers and avoid obstacles or predators. Insects use surprisingly simple and elegant optical flow strategies to tackle complex tasks. For example, for landing, honeybees keep the optical flow divergence (how quickly things get bigger in view) constant when going down. By following this simple rule, they automatically make smooth, soft landings.
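As a minimal sketch of the constant-divergence rule (an illustration under simplifying assumptions, not the honeybees' or the authors' actual controller): if divergence is taken as descent speed divided by height, holding it at a constant value D implies dh/dt = -D·h, so both height and descent speed shrink exponentially and the touchdown is soft. The function name, the parameter values, and the forward-Euler integration are all assumptions made for this example.

```python
import math


def simulate_constant_divergence_landing(h0=10.0, divergence=0.5, dt=0.01, t_end=10.0):
    """Integrate dh/dt = -divergence * h with forward Euler.

    Returns a list of (time, height, vertical_velocity) tuples.
    Vertical velocity is negative while descending.
    """
    h = h0
    trajectory = []
    steps = int(round(t_end / dt))
    for k in range(steps + 1):
        # Velocity that keeps the divergence (-v / h) at the chosen constant.
        v = -divergence * h
        trajectory.append((k * dt, h, v))
        h += v * dt
    return trajectory


if __name__ == "__main__":
    for t, h, v in simulate_constant_divergence_landing()[::200]:
        print(f"t={t:5.2f} s  height={h:7.4f} m  velocity={v:8.4f} m/s")
```

In this idealized model the drone slows down in proportion to its remaining height, which is why no explicit height measurement is needed to land gently.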

I started my work on optical flow control from enthusiasm about such elegant, simple strategies. However, developing the control methods to actually implement these strategies in flying robots turned out to be far from trivial. For example, when I first worked on optical flow landing, my flying robots would not actually land; instead, they started to oscillate, continuously going up and down just above the landing surface.

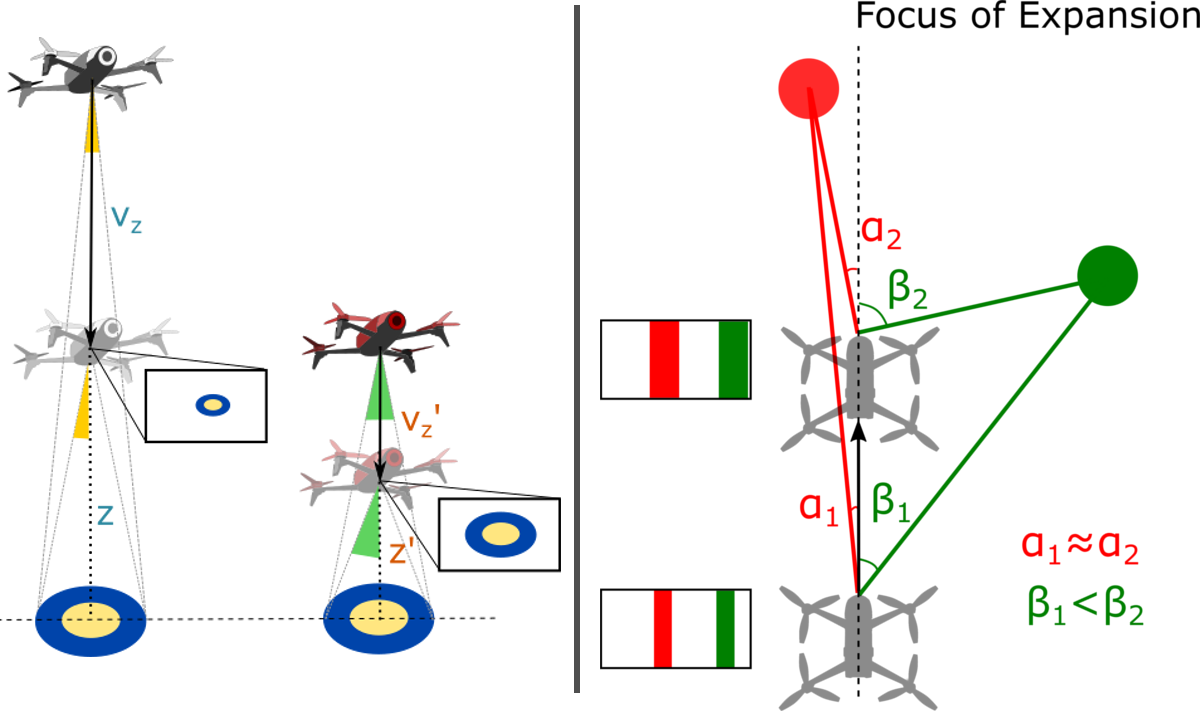

Fundamental problems

Optical flow has two fundamental problems that have been widely described in the growing literature on bio-inspired robotics. The first problem is that optical flow only provides mixed information on distances and velocities, and not on distance or velocity separately. To illustrate, if there are two landing drones and one of them flies twice as high and twice as fast as the other, then they experience exactly the same optical flow. However, for good control these two drones should actually react differently to deviations in the optical flow divergence. If a drone does not adapt its reactions to its height when landing, it will never touch down and will instead start to oscillate above the landing surface.
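The first problem can be made concrete with a small sketch. It assumes a deliberately simple model that is not taken from the article: divergence is descent speed divided by height, and a proportional controller with a hypothetical gain commands vertical acceleration once per control step of length `dt`. Under that assumption the velocity error is multiplied by `1 - gain * dt / height` at every step, so the same gain that is well behaved high up overshoots close to the ground.

```python
def divergence(height, descent_speed):
    """Optical flow divergence (1/s) seen by a downward-looking camera."""
    return descent_speed / height


def velocity_error_factor(gain, height, dt):
    """Per-step multiplier of the velocity error in the assumed model.

    |factor| < 1: the error shrinks.  factor < -1: the error flips sign
    and grows each step, i.e. a growing up-and-down oscillation.
    """
    return 1.0 - gain * dt / height


if __name__ == "__main__":
    # Twice as high and twice as fast: identical divergence.
    print(divergence(10.0, 1.0), divergence(20.0, 2.0))

    # Same gain, different heights: stable at 10 m, oscillating at 1 m.
    for h in (10.0, 1.0):
        print(h, velocity_error_factor(gain=50.0, height=h, dt=0.05))
```

The point of the sketch is only that the appropriate gain scales with height, which optical flow alone cannot reveal.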

The second problem is that optical flow is very small, and therefore carries little information, in the direction in which a robot is moving. This is very unfortunate for obstacle avoidance, because it means that the obstacles straight ahead of the robot are the hardest ones to detect! The problems are illustrated in the figures below.

Right: Problem 2: The drone moves straight forward, in the direction of its forward-looking camera. Hence, the focus of expansion is straight ahead. Objects close to this direction, like the red obstacle, have very little flow. This is illustrated by the red lines in the figure: The angles of these lines with respect to the camera are very similar. Objects further from this direction, like the green obstacle, have considerable flow. Indeed, the green lines show that the angle quickly gets bigger when the drone moves forward.
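The second problem follows from simple geometry. For a camera translating at speed v past a static point at distance d, seen at angle θ from the direction of motion, the angle changes at a rate of v·sin(θ)/d. The sketch below evaluates this for two obstacles at the same distance; the function name and the numbers are illustrative assumptions, not values from the article.

```python
import math


def bearing_rate(speed, distance, bearing_deg):
    """Angular rate (rad/s) of a static point seen from a translating camera.

    bearing_deg is the angle between the flight direction and the point.
    """
    return speed * math.sin(math.radians(bearing_deg)) / distance


if __name__ == "__main__":
    speed, distance = 2.0, 5.0  # m/s and m, arbitrary example values
    for bearing in (0.0, 2.0, 30.0):
        rate = bearing_rate(speed, distance, bearing)
        print(f"bearing {bearing:4.1f} deg -> flow {rate:.4f} rad/s")
```

An obstacle almost straight ahead produces only a small fraction of the flow of one well off to the side, even though both are equally close.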

Learning visual appearance as the solution

In an article published in Nature Machine Intelligence today [1], we propose a solution to both problems. The main idea was that both problems of optical flow would disappear if the robots were able to interpret not only optical flow, but also the visual appearance of objects in their environment. This solution becomes evident from the above figures. The rectangular insets show the images captured by the drones. For the first problem it is evident that the image perfectly captures the difference in height between the white and red drone: The landing platform is simply larger in the red drone’s image. For the second problem the red obstacle is as large as the green one in the drone’s image. Given their identical size, the obstacles are equally close to the drone.

Exploiting visual appearance as captured by an image would allow robots to see distances to objects in the scene similarly to how we humans can estimate distances in a still picture. This would allow drones to immediately pick the right control gain for optical flow control and it would allow them to see obstacles in the flight direction. The only question was: How can a flying robot learn to see distances like that?

The key to this question lay in a theory I devised a few years back [2], which showed that flying robots can actively induce optical flow oscillations to perceive distances to objects in the scene. In the approach proposed in the Nature Machine Intelligence article the robots use such oscillations in order to learn what the objects in their environment look like at different distances. In this way, the robot can for example learn how fine the texture of grass is when looking at it from different heights during landing, or how thick tree barks are at different distances when navigating in a forest.
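The idea of perceiving distance through self-induced oscillations can be illustrated with a toy simulation. It is a sketch under strong assumptions and not the method of [1] or [2]: the drone hovers, a proportional controller with a hypothetical gain tries to null the divergence once per time step, and there is no sensor noise or drag. In this toy model, oscillations start to grow once the gain exceeds roughly 2·height/dt, so finding that critical gain yields a height estimate. All names and parameter values are made up for the example.

```python
def oscillation_grows(gain, height, dt=0.05, steps=200, v0=0.01):
    """Simulate the hover loop and report whether a small disturbance grows."""
    h, v = height, v0
    for _ in range(steps):
        observed_divergence = -v / h           # positive when descending
        v += dt * gain * observed_divergence   # command pushes divergence to zero
        h += dt * v
        if abs(v) > 10.0 * abs(v0):            # clearly unstable, stop early
            return True
    return abs(v) > abs(v0)


def critical_gain(height, dt=0.05, gain_hi=1000.0, tol=1e-3):
    """Bisect for the smallest gain at which the oscillation grows."""
    lo, hi = 0.0, gain_hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if oscillation_grows(mid, height, dt):
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


def height_from_critical_gain(gain, dt=0.05):
    """Invert the toy-model relation gain_critical = 2 * height / dt."""
    return 0.5 * gain * dt


if __name__ == "__main__":
    for true_height in (2.0, 5.0, 10.0):
        k = critical_gain(true_height)
        print(true_height, k, height_from_critical_gain(k))
```

In the article's approach, the robot would then associate such gain-based distance estimates with what the scene looks like, so that later a single image suffices; that learning step is not modelled here.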

Relevance to robotics and applications

Implementing this learning process on flying robots led to much faster, smoother optical flow landings than we had ever achieved before. Moreover, for obstacle avoidance, the robots were now also able to see obstacles in the flight direction very clearly. This not only improved obstacle detection performance, but also allowed our robots to speed up. We believe that the proposed methods will be very relevant to resource-constrained flying robots, especially when they operate in rather confined environments, such as flying in greenhouses to monitor crops or keeping track of stock in warehouses.

It is interesting to compare our way of distance learning with recent methods in the computer vision domain for single camera (monocular) distance perception. In the field of computer vision, self-supervised learning of monocular distance perception is done with the help of projective geometry and the reconstruction of images. This results in impressively accurate, dense distance maps. However, these maps are still “unscaled” – they can show that one object is twice as far as another one but cannot convey distances in an absolute sense.

In contrast, our proposed method provides “scaled” distance estimates. Interestingly, the scaling is not in terms of meters but in terms of the control gains that would lead the drone to oscillate. This makes it very relevant for control, and it feels very much like the way in which we humans perceive distances. For us, too, it may be more natural to reason in terms of actions (“Is an object within reach?”, “How many steps do I roughly need to get to a place?”) than in terms of meters. It is hence strongly reminiscent of the perception of “affordances”, a concept put forward by Gibson, who introduced the concept of optical flow [3].

Relevance to biology

The findings are not only relevant to robotics, but also provide a new hypothesis for insect intelligence. Typical honeybee experiments start with a learning phase, in which honeybees exhibit various oscillatory behaviors when they get acquainted with a new environment and related novel cues like artificial flowers. The final measurements presented in articles typically take place after this learning phase has finished and focus predominantly on the role of optical flow. The presented learning process forms a novel hypothesis on how flying insects improve their navigational skills over their lifetime. This suggests that we should set up more studies to investigate and report on this learning phase.

On behalf of all authors,

Guido de Croon, Christophe De Wagter, and Tobias Seidl.

References

- [1] G.C.H.E. de Croon, C. De Wagter, and T. Seidl. Enhancing optical-flow-based control by learning visual appearance cues for flying robots. Nature Machine Intelligence, 3(1), 2021.

- [2] G.C.H.E. de Croon. Monocular distance estimation with optical flow maneuvers and efference copies: a stability-based strategy. Bioinspiration & Biomimetics, 11(1), 016004.

- [3] J.J. Gibson. The Ecological Approach to Visual Perception. 1979.