Robotics is both an exciting and intimidating field because it takes so many different capabilities and disciplines to spin up a robot. This goes all the way from mechatronic design and hardware engineering to some of the more philosophical parts of software engineering.

Those who know me also know I’m a total “high-level guy”. I’m fascinated by how complex a modern robotic system is and how all the pieces come together to make something that’s truly impressive. I don’t have particularly deep knowledge in any specific area of robotics, but I appreciate the work of countless brilliant people that give folks like me the ability to piece things together.

In this post, I will go through what it takes to put together a robot that is highly capable by today’s standards. This is very much an opinion article that tries to slice robotic skills along various dimensions. I found it challenging to find a single “correct” taxonomy, so I look forward to hearing from you about anything you might disagree with or have done differently.

What defines a robot?

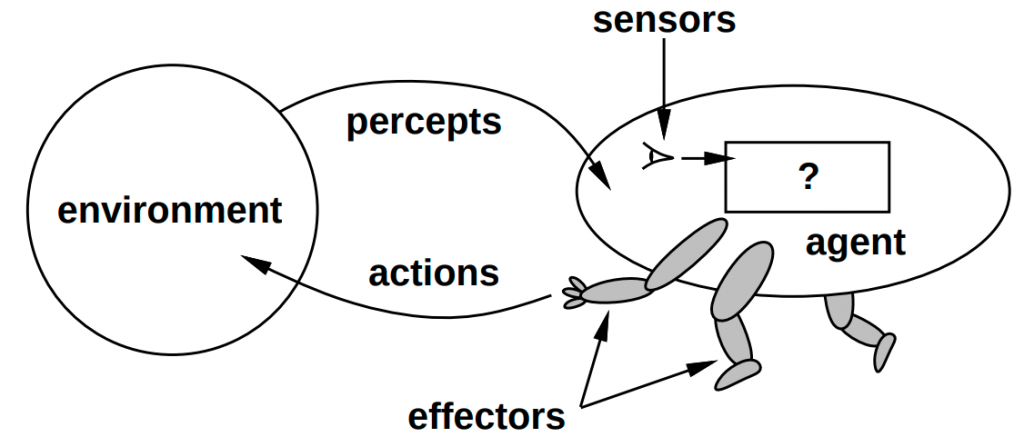

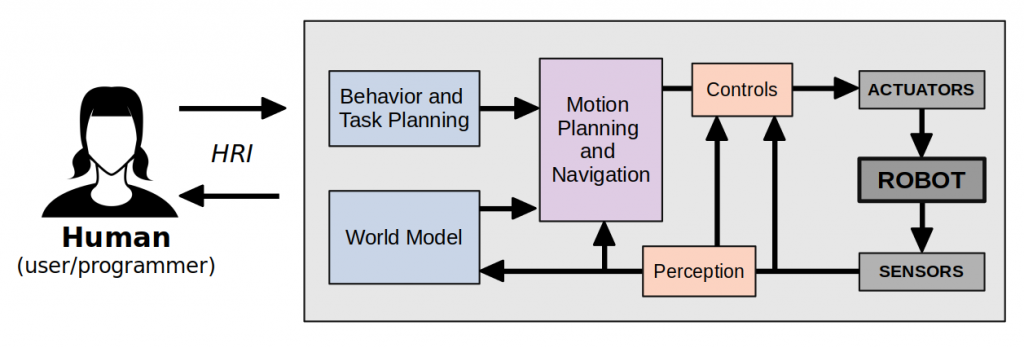

Roughly speaking, a robot is a machine with sensors, actuators, and some computing device that has been programmed to perform some level of autonomous behavior. Below is the essential “getting started” diagram that anyone in AI should recognize.

This opens up the ever-present “robot vs. machine” debate that I don’t want to spend too much time on, but my personal distinction between a robot and a machine includes a couple of specifications.

- A robot has at least one closed feedback loop between sensing and actuation that does not require human input. This discounts things like a remote-controlled car, or a machine that constantly executes a repetitive motion but will never recover if you nudge it slightly — think, for example, Theo Jansen’s kinetic sculptures.

- A robot is an embodied agent that operates in the physical world. This discounts things like chatbots, or even smart speakers, which (while awesome displays of artificial intelligence) I wouldn’t consider to be robots… yet.
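To make the first specification concrete, here is a minimal sketch of the difference between replaying a fixed motion and closing a loop around a sensor reading. Everything in it (the 1-D "robot", the gain, the size of the nudge) is a made-up toy for illustration, not a model of any real system.

```python
def final_position(closed_loop: bool, steps: int = 50, nudge_at: int = 10) -> float:
    """Move a 1-D toy robot toward a target and return where it ends up."""
    target = 1.0
    position = 0.0
    gain = 0.5  # hypothetical proportional gain, chosen for illustration
    # Open-loop plan: ten fixed steps of 0.1, computed ahead of time.
    planned = [0.1] * 10 + [0.0] * (steps - 10)
    for k in range(steps):
        if k == nudge_at:
            position += 0.3  # someone nudges the robot
        if closed_loop:
            # Sense the current position and correct toward the target.
            command = gain * (target - position)
        else:
            # Replay the precomputed motion, ignoring what actually happened.
            command = planned[k]
        position += command
    return position


open_loop_result = final_position(closed_loop=False)   # stays off target after the nudge
closed_loop_result = final_position(closed_loop=True)  # recovers from the nudge
```

The open-loop version never notices the nudge and finishes off target, while the closed-loop version pulls itself back. By the definition above, only the second one counts as a robot.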

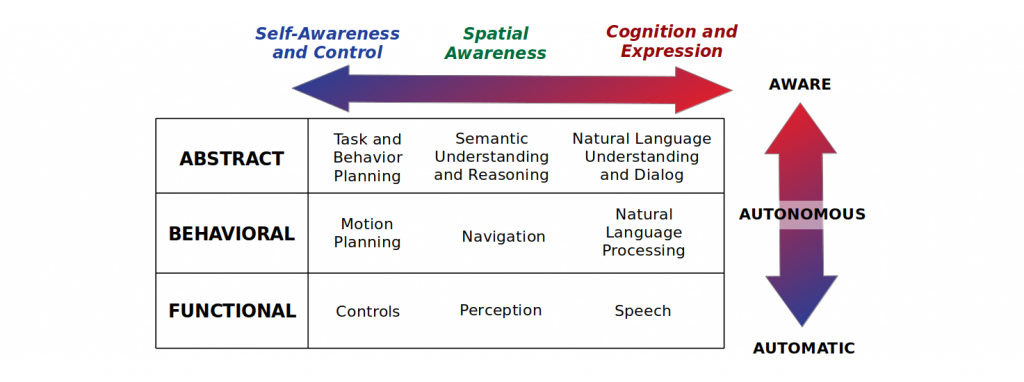

The big picture: Automatic, Autonomous, and Aware

Robots are not created equal. Not to say that simpler robots don’t have their purpose — knowing how simple or complex (technology- and budget-wise) to build a robot given the problem at hand is a true engineering challenge. To say it a different way: overengineering is not always necessary.

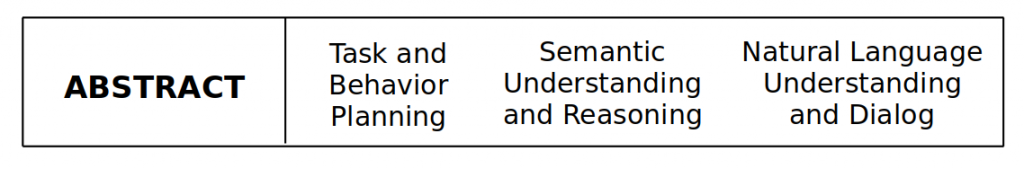

I will now categorize the capabilities of a robot into three bins: Automatic, Autonomous, and Aware. This roughly corresponds to how low- or high-level a robot’s skills have been developed, but it’s not an exact mapping as you will see.

Automatic: The robot is controllable and it can execute motion commands provided by a human in a constrained environment. Think, for example, of industrial robots in an assembly line. You don’t need to program very complicated intelligence for a robot to put together a piece of an airplane from a fixed set of instructions. What you need is fast, reliable, and sustained operation. The motion trajectories of these robots are often trained and calibrated by a technician for a very specific task, and the surroundings are tailored for robots and humans to work with minimal or no conflict.

Autonomous: The robot can now execute tasks in an uncertain environment with minimal human supervision. One of the most pervasive examples of this is self-driving cars (which I absolutely label as robots). Today’s autonomous vehicles can detect and avoid other cars and pedestrians, perform lane-change maneuvers, and navigate the rules of traffic with fairly high success rates despite the negative media coverage they may get for not being 100% perfect.

Aware: This gets a little bit into the “sci-fi” camp of robots, but rest assured that research is bringing this closer to reality day by day. You could tag a robot as aware when it can establish two-way communication with humans. An aware robot, unlike the previous two categories, is not only a tool that receives commands and makes mundane tasks easier, but one that is perhaps more collaborative. In theory, aware robots can understand the world at a higher level of abstraction than their more primitive counterparts, and process humans’ intentions from verbal or nonverbal cues, still under the general goal of solving a real-life problem.

A good example is a robot that can help humans assemble furniture. It operates in the same physical and task space as us humans, so it should adapt to where we choose to position ourselves or what part of the furniture we are working on without getting in the way. It can listen to our commands or requests, learn from our demonstrations, and tell us what it sees or what it might do next in language we understand so we can also take an active part in ensuring the robot is being used effectively.

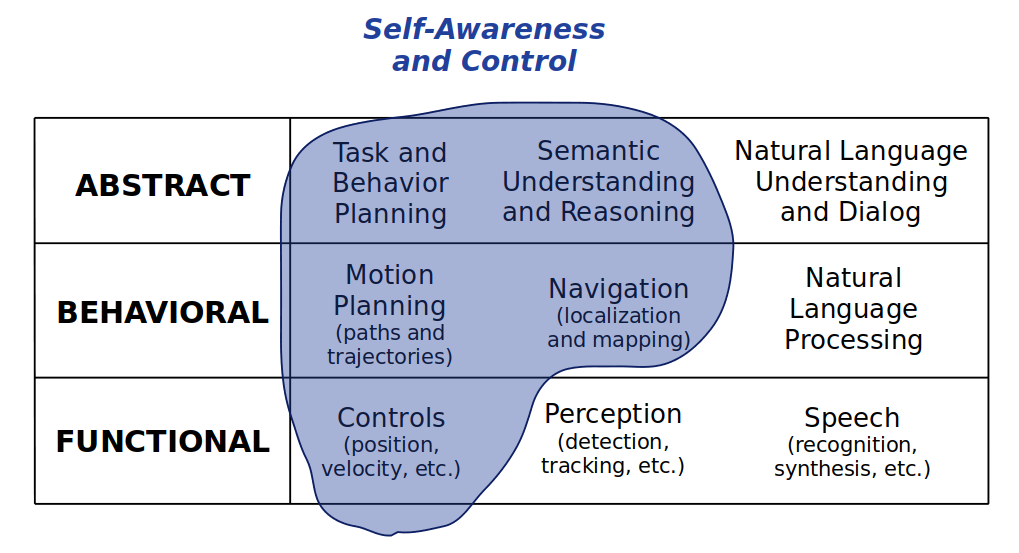

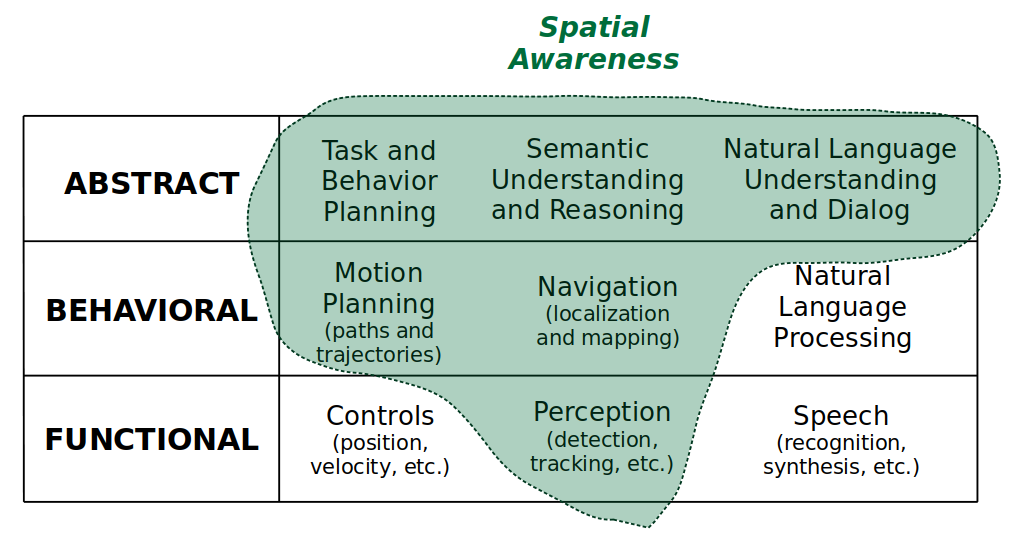

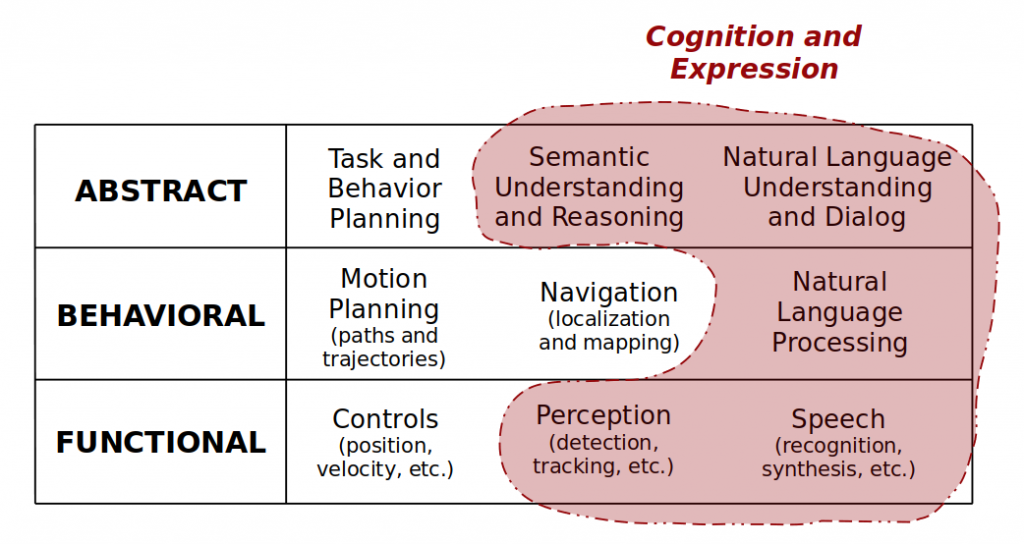

Above you can see an orthogonal axis for categorizing robot awareness. Each highlighted area represents the subset of skills it may require for a robot to achieve awareness on that particular dimension. Notice how forming abstractions (i.e., semantic understanding and reasoning) is crucial for all forms of awareness. That is no coincidence.

The most important takeaway as we move from automatic to aware is the increasing ability of robots to operate “in the wild”. Whereas an industrial robot may be designed to perform repetitive tasks with superhuman speed and strength, a home service robot will often sacrifice this kind of task-specific performance for the more generalized skills needed for human interaction and navigating uncertain and/or unknown environments.

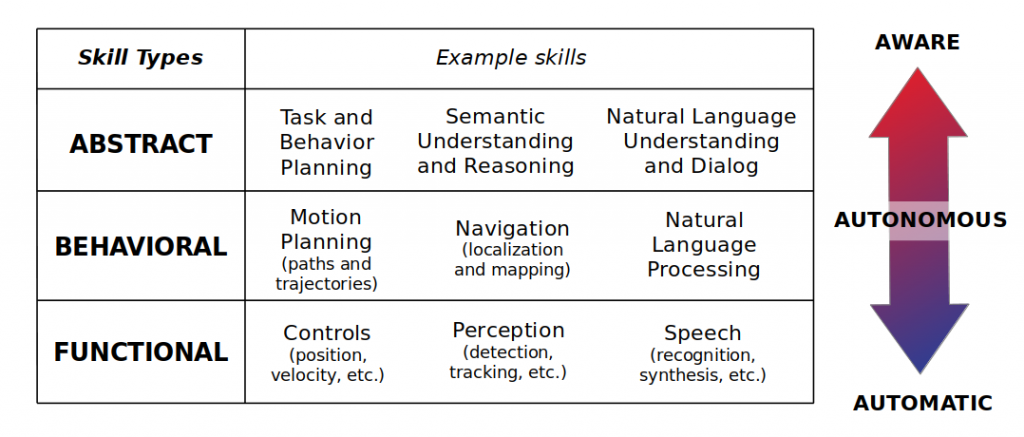

A Deeper Dive Into Robot Skills

Spinning up a robot requires a combination of skills at different levels of abstraction. These skills are all important aspects of a robot’s software stack and require vastly different areas of expertise. This goes back to the central point of this blog post: it’s not easy to build a highly capable robot, and it’s certainly not easy to do it alone!

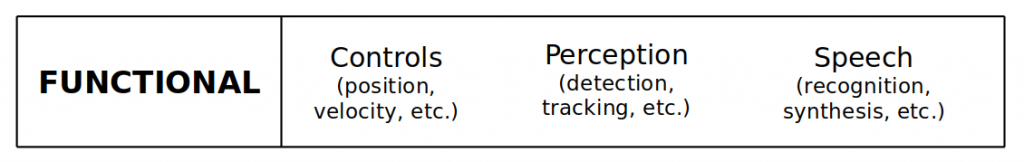

Functional Skills

This denotes the low-level foundational skills of a robot. Without a robust set of functional skills, you would have a hard time getting your robot to be successful at anything else higher up the skill chain.

Being at the lowest level of abstraction, functional skills are closely tied to direct interaction with the actuators and sensors of the robot. Indeed, these skills can be discussed along the acting and sensing modalities.

Acting

- Controls is all about ensuring the robot can reliably execute physical commands — whether this is a wheeled robot, flying robot, manipulator, legged robot, soft robot (… you get the point …) it needs to respond to inputs in a predictable way if it is to interact with the world. It’s a simple concept, but a challenging task that ranges from controlling electrical current/fluid pressure/etc. to multi-actuator coordination towards executing a full motion trajectory.

- Speech synthesis acts on the physical world in a different way — this is more on the human-robot interaction (HRI) side of things. You can think of these capabilities as the robot’s ability to express its state, beliefs, and intentions in a way that is interpretable by humans. Imagine speech synthesis as more than speaking in a monotone robotic voice, but maybe simulating emotion or emphasis to help get information across to the human. This is not limited to speech. Many social robots will also employ visual cues like facial expressions, lights and colors, or movements — for example, look at MIT’s Leonardo.

Sensing

- Controls requires some level of proprioceptive (self) sensing. To regulate the state of a robot, we need to employ sensors like encoders, inertial measurement units, and so on.

- Perception, on the other hand, deals with exteroceptive (environmental) sensing. This mainly deals with line-of-sight sensors like sonar, radar, and lidar, as well as cameras. Perception algorithms require significant processing to make sense of a bunch of noisy pixels and/or distance/range readings. The act of abstracting this data to recognize and locate objects, track them over space and time, and use them for higher-level planning is what makes it exciting and challenging. Finally, back on the social robotics topic, vision can also enable robots to infer the state of humans for nonverbal communication.

- Speech recognition is another form of exteroceptive sensing. Getting from raw audio to accurate enough text that the robot can process is not trivial, despite how easy smart assistants like Google Assistant, Siri, and Alexa have made it look. This field of work is officially known as automatic speech recognition (ASR).

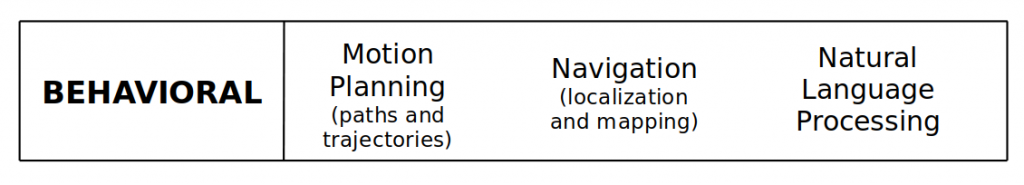

Behavioral Skills

Behavioral skills are a step above the more “raw” sensor-to-actuator processing loops that we explored in the Functional Skills section. Creating a solid set of behavioral skills simplifies our interactions with robots both as users and programmers.

At the functional level, we have perhaps demonstrated capabilities for the robot to respond to very concrete, mathematical goals. For example,

- Robot arm: “Move the elbow joint to 45 degrees and the shoulder joint to 90 degrees in less than 2.5 seconds with less than 10% overshoot. Then, apply a force of 2 N on the gripper.”

- Autonomous car: “Speed up to 40 miles per hour without exceeding an acceleration limit of 0.1 g and turn the steering wheel to attain a turning radius of 10 m.”
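As a rough sketch of what checking a goal like the robot arm one could look like, the snippet below simulates a single joint under a PD controller and measures overshoot and settling time. The joint model (unit inertia, no friction or gravity), the gains `kp` and `kd`, and the 2% settling band are all assumptions picked for illustration; a real arm would need a proper dynamic model and tuning.

```python
def simulate_joint(target_deg, kp=25.0, kd=7.0, dt=0.001, duration=2.5):
    """Simulate a toy joint (unit inertia) under PD control; return the angle history."""
    theta, omega = 0.0, 0.0  # joint angle [deg] and angular velocity [deg/s]
    history = []
    for _ in range(round(duration / dt)):
        # PD law: push toward the target, damp the velocity.
        torque = kp * (target_deg - theta) - kd * omega
        # Explicit Euler integration of the toy dynamics.
        theta, omega = theta + omega * dt, omega + torque * dt
        history.append(theta)
    return history


def overshoot_and_settling(history, target, dt=0.001, band=0.02):
    """Return (fractional overshoot, time after which the error stays inside the band)."""
    overshoot = max(0.0, (max(history) - target) / target)
    settle_index = 0
    for i, value in enumerate(history):
        if abs(value - target) > band * target:
            settle_index = i + 1  # last sample outside the band so far
    return overshoot, settle_index * dt


elbow = simulate_joint(target_deg=45.0)
overshoot, settling_time = overshoot_and_settling(elbow, target=45.0)
meets_spec = overshoot < 0.10 and settling_time < 2.5
```

With these particular gains the toy joint is slightly underdamped, so it overshoots a little but stays within the 10% limit and settles well before the 2.5 second deadline.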

At the behavioral level, commands may take the form of:

- Robot arm: “Grab the door handle.”

- Autonomous car: “Turn left at the next intersection while following the rules of traffic and passenger ride comfort limits.”

Abstracting away these motion planning and navigation tasks requires a combination of models of the robot and/or world, and of course, our set of functional skills like perception and control.

- Motion planning seeks to coordinate multiple actuators in a robot to execute higher-level tasks. Instead of moving individual joints to setpoints, we now employ kinematic and dynamic models of our robot to operate in the task space — for example, the pose of a manipulator’s end effector or the traffic lane a car occupies in a large highway. Additionally, to go from a start to a goal configuration requires path planning and a trajectory that dictates how to execute the planned path over time. I like this set of slides as a quick intro to motion planning.

- Navigation seeks to build a representation of the environment (mapping) and knowledge of the robot’s state within the environment (localization) to enable the robot to operate in the world. This representation could be in the form of simple primitives like polygonal walls and obstacles, an occupancy grid, a high-definition map of highways, etc.
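To illustrate the jump from joint space to task space mentioned in the motion planning bullet, here is a small sketch using the textbook kinematics of a two-link planar arm. The link lengths and function names are assumptions for this example, and the inverse solution returns only one of the two possible elbow configurations.

```python
import math


def forward_kinematics(q1, q2, l1=1.0, l2=1.0):
    """Joint angles [rad] -> end-effector position (x, y) of a planar 2-link arm."""
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return x, y


def inverse_kinematics(x, y, l1=1.0, l2=1.0):
    """End-effector position -> joint angles, picking the solution with q2 >= 0."""
    cos_q2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_q2) > 1.0:
        raise ValueError("target is outside the arm's reachable workspace")
    q2 = math.acos(cos_q2)
    q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return q1, q2


# Task-space goal: put the end effector at (1, 1).
q1, q2 = inverse_kinematics(1.0, 1.0)
reached = forward_kinematics(q1, q2)
```

A task-space command like "put the gripper at (1, 1)" gets translated into joint setpoints, which the functional-level controllers can then track.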

If this hasn’t come across yet, behavioral skills and functional skills definitely do not work in isolation. Motion planning in a space with obstacles requires perception and navigation. Navigating in a world requires controls and motion planning.

On the language side, Natural Language Processing (NLP) is what takes us from raw text input, whether it came from speech or was typed in directly, to something more actionable for the robot. For instance, if a robot is given the command “bring me a snack from the kitchen”, the NLP engine needs to interpret this at the appropriate level to perform the task. Putting it all together,

- Going to the kitchen is a navigation problem that likely requires a map of the house.

- Locating the snack and getting its 3D position relative to the robot is a perception problem.

- Picking up the snack without knocking other things over is a motion planning problem.

- Returning to wherever the human was when the command was issued is again a navigation problem. Perhaps someone closed a door along the way, or left something in the way, so the robot may have to replan based on these changes to the environment.
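The replanning in the last step can be sketched on an occupancy grid, one of the map representations mentioned earlier. This is a minimal example assuming a tiny hand-made grid (1 = occupied, 0 = free) and plain breadth-first search; real navigation stacks typically use richer maps and planners such as A*.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected occupancy grid; returns a list of cells or None."""
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to recover the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # no route exists


# Hypothetical house: row 1 is a wall with two open doors at columns 1 and 3.
house = [
    [0, 0, 0, 0, 0],
    [1, 0, 1, 0, 1],
    [0, 0, 0, 0, 0],
]
kitchen, human = (0, 0), (2, 0)

first_plan = plan_path(house, kitchen, human)   # goes through the near door
house[1][1] = 1                                 # someone closes that door
second_plan = plan_path(house, kitchen, human)  # detours through the far door
```

The interesting part is not the search itself but the loop around it: perception updates the map, and navigation plans again on the updated map.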

Abstract Skills

Simply put, abstract skills are the bridge between humans and robot behaviors. All the skills in the row above perform some transformation that lets humans more easily express their commands to robots, and similarly lets robots more easily express themselves to humans.

Task and Behavior Planning operates on the key principles of abstraction and composition. A command like “get me a snack from the kitchen” can be broken down into a set of fundamental behaviors (navigation, motion planning, perception, etc.) that can be parameterized and thus generalized to other types of commands such as “put the bottle of water in the garbage”. Having a common language like this makes it useful for programmers to add capabilities to robots, and for users to leverage their robot companions to solve a wider set of problems. Modeling tools such as finite-state machines and behavior trees have been integral in implementing such modular systems.
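Here is a minimal sketch of that idea of composition using a stripped-down behavior tree. The node classes, the `fetch` template, and the stubbed-out actions are all hypothetical and written for this post; real behavior tree libraries also have a "running" status and many more node types.

```python
SUCCESS, FAILURE = "SUCCESS", "FAILURE"


class Action:
    """Leaf node: runs a function and reports success or failure."""
    def __init__(self, name, fn):
        self.name, self.fn = name, fn

    def tick(self, log):
        log.append(self.name)
        return SUCCESS if self.fn() else FAILURE


class Sequence:
    """Succeeds only if every child succeeds, in order."""
    def __init__(self, children):
        self.children = children

    def tick(self, log):
        for child in self.children:
            if child.tick(log) == FAILURE:
                return FAILURE
        return SUCCESS


class Fallback:
    """Tries children in order until one succeeds."""
    def __init__(self, children):
        self.children = children

    def tick(self, log):
        for child in self.children:
            if child.tick(log) == SUCCESS:
                return SUCCESS
        return FAILURE


def fetch(obj, source, destination, world):
    """Parameterized template: bring `obj` from `source` to `destination`."""
    return Sequence([
        Action(f"navigate_to({source})", lambda: True),  # stub: always succeeds
        Fallback([
            Action(f"locate({obj})", lambda: obj in world.get(source, set())),
            Action(f"ask_human_where({obj})", lambda: False),  # stub: no answer
        ]),
        Action(f"pick({obj})", lambda: True),
        Action(f"navigate_to({destination})", lambda: True),
    ])


world = {"kitchen": {"snack", "water bottle"}}
snack_log, bottle_log = [], []
snack_result = fetch("snack", "kitchen", "human", world).tick(snack_log)
bottle_result = fetch("water bottle", "kitchen", "garbage", world).tick(bottle_log)
```

The same template serves both commands from the paragraph above; only the parameters change, which is exactly what makes this kind of structure attractive.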

Semantic Understanding and Reasoning is about bringing abstract knowledge to a robot’s internal model of the world. For example, in navigation we saw that a map can be represented as “occupied vs. unoccupied”. In reality, there are more semantics that can enrich the task space of the robot besides “move here, avoid there”. Does the environment have separate rooms? Is some of the “occupied space” movable if needed? Are there elements in the world where objects can be stored and retrieved? Where are certain objects typically found such that the robot can perform a more targeted search? This recent paper from Luca Carlone’s group is a neat exploration into maps with rich semantic information, and it opens the door to plenty of future work that could build on it.
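As a toy illustration of how those questions could be encoded, the sketch below attaches a few semantic labels to places in a map and uses them to order a search. The `Place` fields, the example places, and especially the likelihood numbers are invented for this example; they are not taken from the paper mentioned above.

```python
from dataclasses import dataclass, field


@dataclass
class Place:
    name: str
    room: str
    is_container: bool = False   # can objects be stored and retrieved here?
    movable: bool = False        # could this "occupied space" be moved if needed?
    likely_objects: dict = field(default_factory=dict)  # object -> made-up likelihood


def search_order(places, obj):
    """Return place names to check for `obj`, most likely first."""
    candidates = [p for p in places if p.likely_objects.get(obj, 0.0) > 0.0]
    ranked = sorted(candidates, key=lambda p: p.likely_objects[obj], reverse=True)
    return [p.name for p in ranked]


# Hypothetical semantic map of a small home.
home = [
    Place("kitchen cabinet", "kitchen", is_container=True, likely_objects={"mug": 0.3}),
    Place("coffee table", "living room", likely_objects={"mug": 0.5, "remote": 0.6}),
    Place("desk", "office", likely_objects={"mug": 0.2}),
    Place("armchair", "living room", movable=True),
]

mug_search = search_order(home, "mug")
```

Instead of sweeping every free cell of an occupancy grid, the robot can start at the coffee table and work down the list.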

Natural Language Understanding and Dialog is effectively two-way communication of semantic understanding between humans and robots. After all, the point of abstracting away our world model was so we humans could work with it more easily. Here are examples of both directions of communication:

- Robot-to-human: If a robot failed to execute a plan or understand a command, can it actually tell the human why it failed? Maybe a door was locked on the way to the goal, or the robot did not know what a certain word meant and it can ask you to define it.

- Human-to-robot: The goal here is to share knowledge with the robot to enrich its semantic understanding of the world. Some examples might be teaching new skills (“if you ever see a mug, grab it by the handle”) or reducing uncertainty about the world (“the last time I saw my mug it was on the coffee table”).

This is all a bit of a pipe dream: can a robot really be programmed to work with humans at such a high level of interaction? It’s not easy, but research tries to tackle problems like these every day. I believe a good measure of effective human-robot interaction is whether the human and robot jointly learn not to run into the same problem over and over, thus improving the user experience.

Conclusion

Thanks for reading, even if you just skimmed through the pictures. I hope this was a useful guide to navigating robotic systems that was somewhat worth its length. As I mentioned earlier, no categorization is perfect and I invite you to share your thoughts.

Going back to one of my specifications: A robot has at least one closed feedback loop between sensing and actuation that does not require human input. Let’s try to put our taxonomy in an example set of feedback loops below. Note that I’ve condensed the natural language pipeline into the “HRI” arrows connecting to the human.

Because no post today can evade machine learning, I also want to take some time to make readers aware of machine learning’s massive role in modern robotics.

- Processing vision and audio data has been an active area of research for decades, but the rise of neural networks as function approximators for machine learning (i.e. “deep learning”) has made recent perception and ASR systems more capable than ever.

- Additionally, learning has demonstrated its use at higher levels of abstraction. Text processing with neural networks has moved the needle on natural language processing and understanding. Similarly, neural networks have enabled end-to-end systems that can learn to produce motion, task, and/or behavior plans from complex observation sources like images and range sensors.

The truth is, our human knowledge is being outperformed by machine learning for processing such high-dimensional data. Always remember that machine learning shouldn’t be a crutch due to its biggest pitfall: We can’t (yet) explain why learned systems behave the way they do, which means we can’t (yet) formulate the kinds of guarantees that we can with traditional methods. The hope is to eventually refine our collective scientific knowledge so we’re not relying on data-driven black-box approaches. After all, knowing how and why robots learn will only make them more capable in the future.

On that note, you have earned yourself a break from reading. Until next time!