Obstacles no problem for smart robots

Normally, students and scientists walk here, but today a drone is flying through a corridor on TU Delft Campus. Seemingly effortlessly, it whizzes past and between a variety of obstacles: rubbish bins, stacked boxes and poles. But then suddenly a person appears, walking straight towards the drone in the same space. This is not a stationary object but an actual moving person. ‘This is much more difficult for the drone to process. Because how fast is someone moving, and are they going to make unexpected movements?’ asks Javier Alonso-Mora. This goes well too. As the drone approaches the walker, it moves to the side and flies on. The flying robot completes the obstacle course without a hitch.

This experiment, conducted by Alonso-Mora and his colleagues, is a good reflection of their research field. They investigate mobile robots that move on wheels or fly through the air and take their surroundings into account – which is why they can move safely alongside us in a corridor, room or hallway.

That’s something new. For decades, robots were mainly used in factories, where they were separated from people and could assemble cars in a screened-off area or put something on a conveyor belt, for example. ‘The new generation of robots that we’re now developing work with people and other robots. So they have to take into account how others behave. They deliver packages, for example, or cooperate by assembling or delivering something. Moreover, they’re not fixed but can move freely in space,’ he says.

Adapting at lightning speed

For this to work, it’s important that the robots always make the right decision. They do so using models that scientists, including Alonso-Mora, are currently developing. ‘They perceive their environment on the basis of these models. It helps them to complete a task safely,’ he says.

Humans are highly skilled at this. We’re masters at correctly predicting what will happen and avoiding other people in time or adjusting our route accordingly to avoid a collision if things don’t happen as predicted after all. Busy intersections in major cities are good examples. Cyclists, cars, pedestrians, scooters, trams and trucks weave in and out of each other. It looks chaotic, but usually goes smoothly. ‘This is more difficult for a robot. It has to constantly predict what action is safe. There are many potential outcomes, and the robot has to calculate the various permutations. That takes time. Humans, on the other hand, have so much experience that we can assess a situation at lightning speed and adapt immediately if necessary. Our models are trying to achieve the same with robots.’
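To make the prediction problem concrete, here is a minimal Python sketch of one common textbook approach, not the method used by Alonso-Mora’s group: assume each person keeps walking at a constant velocity, roll a set of candidate robot velocities forward over a short horizon, discard any that come too close to a predicted person, and pick the safe one that makes the most progress towards the goal. The names (`Agent`, `is_safe`, `choose_velocity`), the 3-second horizon and the 0.8 m safety radius are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Agent:
    """A moving person: position (m) and velocity (m/s). Hypothetical model."""
    x: float
    y: float
    vx: float
    vy: float


def predict(agent, t):
    """Constant-velocity guess of where the agent will be after t seconds."""
    return agent.x + agent.vx * t, agent.y + agent.vy * t


def is_safe(robot_xy, robot_v, people, horizon=3.0, dt=0.1, radius=0.8):
    """Roll a candidate velocity forward and check clearance to every predicted person."""
    steps = round(horizon / dt)
    for k in range(1, steps + 1):
        t = k * dt
        rx = robot_xy[0] + robot_v[0] * t
        ry = robot_xy[1] + robot_v[1] * t
        for p in people:
            px, py = predict(p, t)
            if math.hypot(rx - px, ry - py) < radius:
                return False
    return True


def choose_velocity(robot_xy, goal_xy, people, speed=1.0, n_headings=16):
    """Among evenly spaced headings, pick the safe one ending closest to the goal after 1 s."""
    best, best_cost = (0.0, 0.0), float("inf")  # stop if nothing passes (not itself checked here)
    for i in range(n_headings):
        theta = 2 * math.pi * i / n_headings
        v = (speed * math.cos(theta), speed * math.sin(theta))
        if not is_safe(robot_xy, v, people):
            continue
        end = (robot_xy[0] + v[0], robot_xy[1] + v[1])
        cost = math.hypot(goal_xy[0] - end[0], goal_xy[1] - end[1])
        if cost < best_cost:
            best, best_cost = v, cost
    return best
```

For a walker approaching head-on, flying straight ahead is rejected and the sketch swerves diagonally, much like the drone in the corridor test. Even in this toy version, every candidate action has to be checked against every predicted position at every time step, which illustrates why the article says that calculating the permutations takes time.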

An interesting example of Alonso-Mora’s research is the Harmony project he’s working on. Scientists are developing robots that help nurses, doctors and patients. For example, they bring food to patients in bed or medicine to nurses. ‘They need to calculate the best route and how to behave on the way. How do they safely navigate an area with a moving bed, a patient on crutches or a surgeon who’s in a hurry?’

Testing the robots in hospitals

Not only do the robots have to cover small distances, but they also have to open doors and plan a route. Moreover, it’s important that they understand whether their task needs to be carried out quickly or can wait a while. ‘So the robots have to process a great deal of information, and on top of that, they have to analyse their environment and work with people and other robots. We’ve just launched this international project, and our goal is to start using demonstration robots in hospitals in Switzerland and Sweden in three years’ time. We’re creating and testing the algorithm that will allow the robots to move safely.’
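Route planning of this kind is often done with a graph search such as A*. The sketch below is a generic illustration, not the Harmony project’s algorithm: it plans a shortest path on a hypothetical grid map of a ward, where `#` marks blocked cells such as walls or a parked bed. The map layout and the function name `astar` are assumptions made for illustration.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of strings; '#' cells are blocked.

    Returns the list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: never overestimates for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk the parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > g[cell]:
            continue  # stale entry: a cheaper route to this cell was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < g.get((nr, nc), float("inf")):
                    g[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_cost + h((nr, nc)), new_cost, (nr, nc)))
    return None
```

A real hospital robot would also have to re-plan as beds and people move and weigh urgent tasks against routine ones; this sketch covers only the static shortest-route part.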

It’s no coincidence that Alonso-Mora is working on this particular project. He was already fascinated by robots as a young boy. ‘I used to love playing with Lego; I could spend hours building things with it. We’re now doing something similar with robots, but in a more complicated way, because they too consist of many different parts. I’m interested in whether we can make the robots of the future intelligent. As a child, I loved reading Isaac Asimov’s science fiction, which often featured robots. The book I, Robot, which was made into a film starring Will Smith, is a good example. It’s fiction, of course, but we’re getting ever closer to robots that are smart and can work with us, whether they’re autonomous cars, drones or robots moving around in hospitals. It’s important that the robots that complete difficult tasks work as well and safely as possible.’

More information

J. Alonso Mora, Cognitive Robotics

https://www.tudelft.nl/staff/j.alonsomora/

Autonomous Multi-Robots Laboratory, TUD

https://www.autonomousrobots.nl/

H2020 Harmony

https://harmony-eu.org/

The post Obstacles no problem for smart robots appeared first on RoboValley.


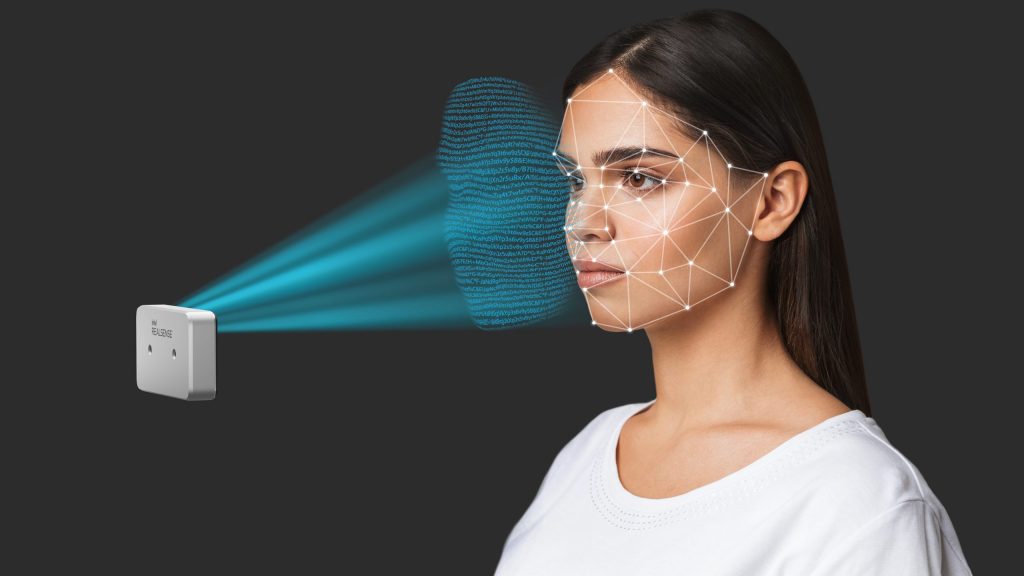

#334: Intel RealSense Enabling Computer Vision and Machine Learning At The Edge, with Joel Hagberg

Intel RealSense is known in the robotics community for its plug-and-play stereo cameras. These cameras make gathering 3D depth data a seamless process, with easy integrations into ROS to simplify the software development for your robots. From the RealSense team, Joel Hagberg talks about how they built this product, which allows roboticists to perform computer vision and machine learning at the edge.
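Depth cameras such as RealSense report a distance for each pixel; turning that into a 3D point uses the camera’s intrinsic parameters. Below is a minimal sketch of the ideal pinhole back-projection, assuming no lens distortion. The `Intrinsics` class and the example values are hypothetical; on real hardware the intrinsics come from the camera, and the RealSense SDK ships its own helper for this step (`rs2_deproject_pixel_to_point`), which also accounts for the supported distortion models.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera intrinsics in pixels (hypothetical stand-in for the SDK's type)."""
    fx: float   # focal length along x
    fy: float   # focal length along y
    ppx: float  # principal point, x
    ppy: float  # principal point, y


def deproject(intr, u, v, depth_m):
    """Back-project pixel (u, v) at depth_m metres to (x, y, z) in the camera frame.

    Ideal pinhole model: lens distortion is ignored.
    """
    x = (u - intr.ppx) / intr.fx * depth_m
    y = (v - intr.ppy) / intr.fy * depth_m
    return (x, y, depth_m)
```

Applying this to every pixel of a depth frame yields a point cloud, the kind of 3D data a robot can use for obstacle avoidance or mapping.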

Joel Hagberg

Joel Hagberg leads the Intel® RealSense Marketing, Product Management and Customer Support teams. He joined Intel in 2018 after a few years as an Executive Advisor working with startups in the IoT, AI, Flash Array, and SaaS markets. Before his Executive Advisor role, Joel spent two years as Vice President of Product Line Management at Seagate Technology with responsibility for their $13B product portfolio. He joined Seagate from Toshiba, where Joel spent 4 years as Vice President of Marketing and Product Management for Toshiba’s HDD and SSD product lines. Joel joined Toshiba with Fujitsu’s Storage Business acquisition, where Joel spent 12 years as Vice President of Marketing, Product Management, and Business Development. Joel’s Business Development efforts at Fujitsu focused on building emerging market business units in Security, Biometric Sensors, H.264 HD Video Encoders, 10GbE chips, and Digital Signage. Joel earned his bachelor’s degree in Electrical Engineering and Math from the University of Maryland. Joel also graduated from Fujitsu’s Global Knowledge Institute Executive MBA leadership program.

Links

- Download mp3 (14.6 MB)

- Subscribe to Robohub using iTunes, RSS, or Spotify

- Support us on Patreon

A camera that knows exactly where it is

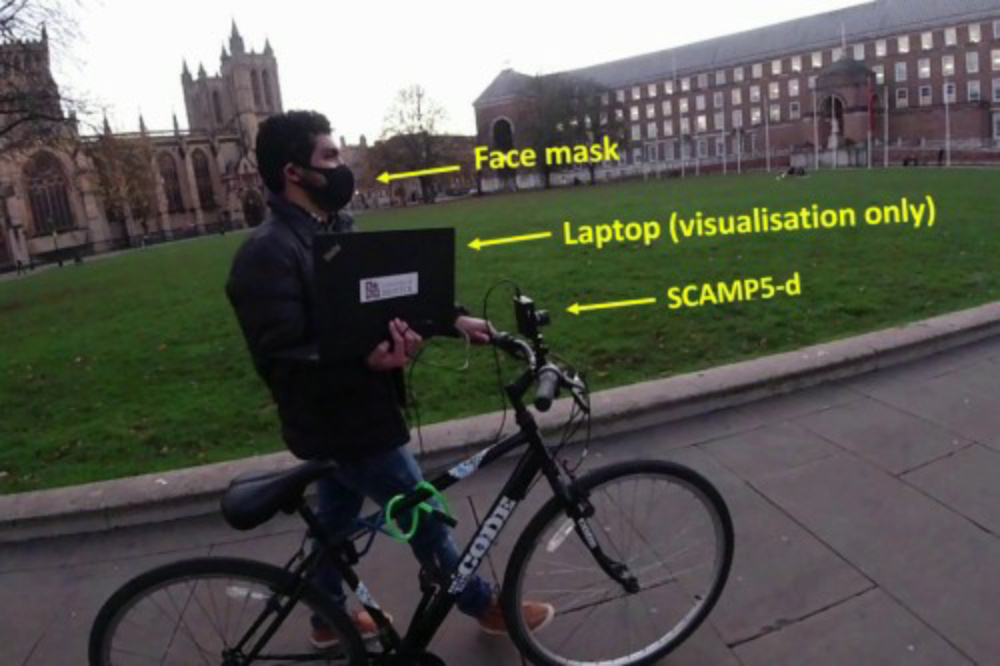

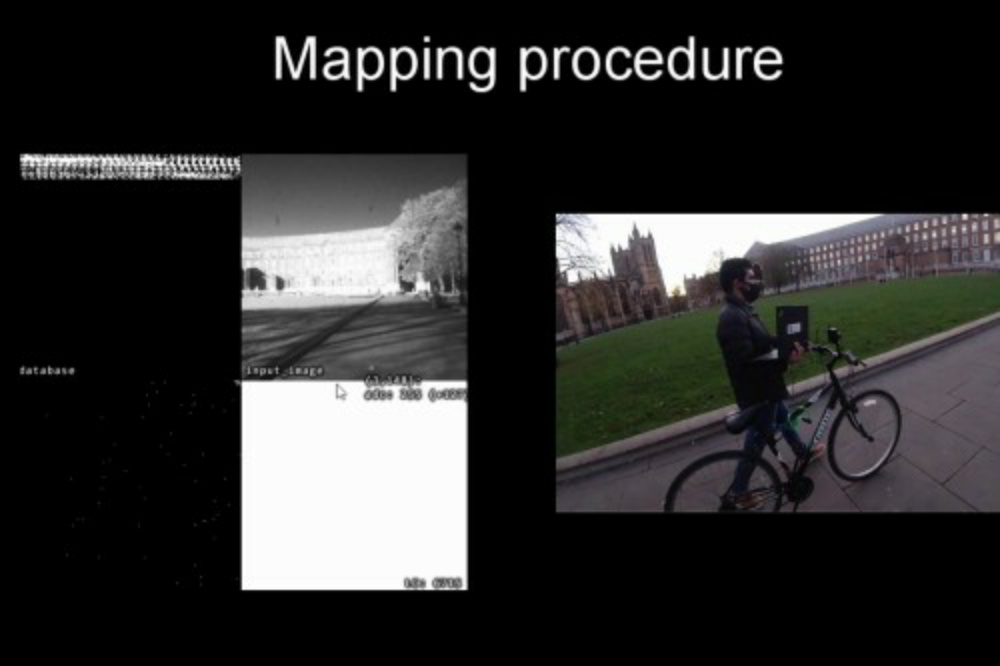

Image credit: University of Bristol

Knowing where you are on a map is one of the most useful pieces of information when navigating journeys. It allows you to plan where to go next and also tracks where you have been before. This is essential for smart devices from robot vacuum cleaners to delivery drones to wearable sensors keeping an eye on our health.

One important obstacle, however, is that systems that need to build or use maps are very complex: they commonly rely on external signals such as GPS, which do not work indoors, or they require a great deal of energy because of the large number of components involved.

Walterio Mayol-Cuevas, Professor in Robotics, Computer Vision and Mobile Systems at the University of Bristol’s Department of Computer Science, led the team that has been developing this new technology.

He said: “We often take for granted things like our impressive spatial abilities. Take bees or ants as an example. They have been shown to be able to use visual information to move around and achieve highly complex navigation, all without GPS or much energy consumption.

“In great part this is because their visual systems are extremely efficient and well-tuned to making and using maps, and robots can’t compete there yet.”

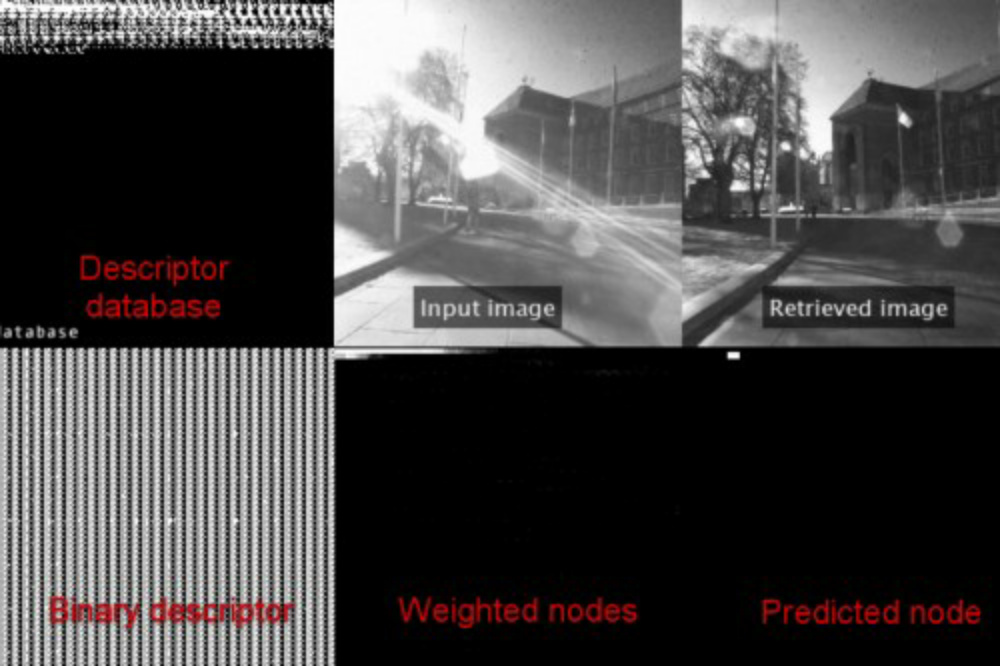

However, a new breed of sensor-processor devices, which the team calls Pixel Processor Arrays (PPAs), allows processing on the sensor itself. This means that as images are sensed, the device can decide what information to keep and what to discard, and use only what it needs for the task at hand.

One example of such a PPA device is the SCAMP architecture, developed by the team’s colleagues at the University of Manchester: Piotr Dudek, Professor of Circuits and Systems, and his team. This PPA has one small processor for every pixel, which allows massively parallel computation on the sensor itself.

The team at the University of Bristol has previously demonstrated how these new systems can recognise objects at thousands of frames per second, but the new research shows how a sensor-processor device can make maps and use them, all at the time of image capture.

This work was part of the MSc dissertation of Hector Castillo-Elizalde, who did his MSc in Robotics at the University of Bristol. He was co-supervised by Yanan Liu, who is also doing his PhD on the same topic, and Dr Laurie Bose.

Hector Castillo-Elizalde and the team developed a mapping algorithm that runs entirely on board the sensor-processor device.

The algorithm is deceptively simple: when a new image arrives, the algorithm decides whether it is sufficiently different from what it has seen before. If it is, it stores some of its data; if not, it discards it.

As the PPA device is moved around, for example by a person or a robot, it collects a visual catalogue of views. This catalogue can then be used to match any new image when the device is in localisation mode.
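The store-if-novel, match-to-localise loop described above can be sketched in a few lines of Python with NumPy. This is an illustration under stated assumptions, not the Bristol team’s code: their algorithm runs in parallel on the PPA’s per-pixel processors, whereas this version runs on an ordinary CPU. The 8x8 binary descriptor, the fraction-of-differing-bits distance, the 0.2 novelty threshold and the names `descriptor` and `ViewCatalogue` are all hypothetical choices.

```python
import numpy as np


def descriptor(image, size=8):
    """Hypothetical compact view code: block-average to size x size, threshold at the mean."""
    h, w = image.shape
    bh, bw = h // size, w // size
    blocks = image[:bh * size, :bw * size].reshape(size, bh, size, bw)
    small = blocks.mean(axis=(1, 3))
    return small > small.mean()


class ViewCatalogue:
    def __init__(self, novelty_threshold=0.2):
        self.views = []
        # fraction of differing bits above which a view counts as "sufficiently different"
        self.threshold = novelty_threshold

    def _distances(self, d):
        return [np.mean(d != v) for v in self.views]

    def observe(self, image):
        """Mapping mode: keep the view only if it differs enough from every stored view."""
        d = descriptor(image)
        if not self.views or min(self._distances(d)) > self.threshold:
            self.views.append(d)
            return True
        return False  # too similar to something already stored: discard

    def localise(self, image):
        """Localisation mode: return only the index of the best-matching stored view."""
        return int(np.argmin(self._distances(descriptor(image))))
```

Note that `localise` hands back only an index into the catalogue rather than any image, in the same spirit as the privacy point made in the next paragraph.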

Importantly, no images go out of the PPA, only the key data that indicates where it is with respect to the visual catalogue. This makes the system more energy efficient and also helps with privacy.

The team believes that this type of artificial visual system, developed for visual processing rather than necessarily to record images, is a first step towards more efficient smart systems that can use visual information to understand and move in the world. Tiny, energy-efficient robots or smart glasses doing useful things for the planet and for people will need spatial understanding, which will come from being able to make and use maps.

The research has been partially funded by the Engineering and Physical Sciences Research Council (EPSRC), by a CONACYT scholarship to Hector Castillo-Elizalde and a CSC scholarship to Yanan Liu.