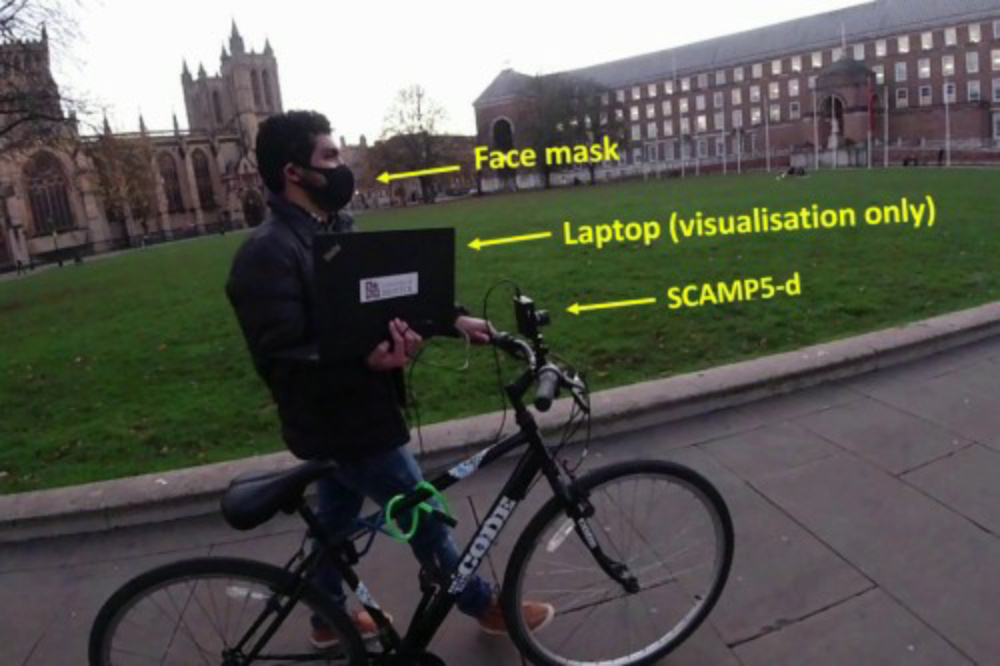

A camera that knows exactly where it is

Image credit: University of Bristol

Knowing where you are on a map is one of the most useful pieces of information when navigating journeys. It allows you to plan where to go next and to keep track of where you have been before. This is essential for smart devices, from robot vacuum cleaners to delivery drones to wearable sensors keeping an eye on our health.

But one important obstacle is that systems that need to build or use maps are very complex: they commonly rely on external signals like GPS, which do not work indoors, or require a great deal of energy because of the large number of components involved.

Walterio Mayol-Cuevas, Professor in Robotics, Computer Vision and Mobile Systems at the University of Bristol’s Department of Computer Science, led the team that has been developing this new technology.

He said: “We often take for granted things like our impressive spatial abilities. Take bees or ants as an example. They have been shown to be able to use visual information to move around and achieve highly complex navigation, all without GPS or much energy consumption.

“In great part this is because their visual systems are extremely efficient and well-tuned to making and using maps, and robots can’t compete there yet.”

However, a new breed of sensor-processor devices, which the team calls Pixel Processor Arrays (PPAs), allows processing on-sensor. This means that as images are sensed, the device can decide what information to keep and what to discard, and use only what it needs for the task at hand.

An example of such a PPA device is the SCAMP architecture, developed by the team’s colleagues at the University of Manchester, led by Piotr Dudek, Professor of Circuits and Systems. This PPA has one small processor for every pixel, which allows for massively parallel computation on the sensor itself.

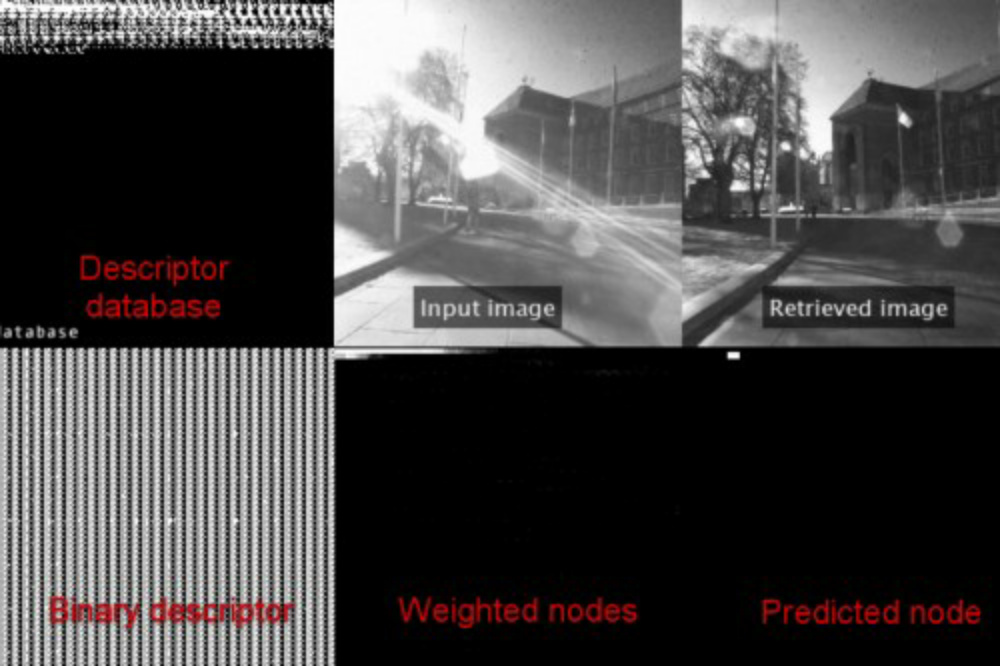

The team at the University of Bristol has previously demonstrated how these new systems can recognise objects at thousands of frames per second, but the new research shows how a sensor-processor device can make maps and use them, all at the time of image capture.

This work was part of the MSc dissertation of Hector Castillo-Elizalde, who did his MSc in Robotics at the University of Bristol. He was co-supervised by Yanan Liu, who is doing his PhD on the same topic, and Dr Laurie Bose.

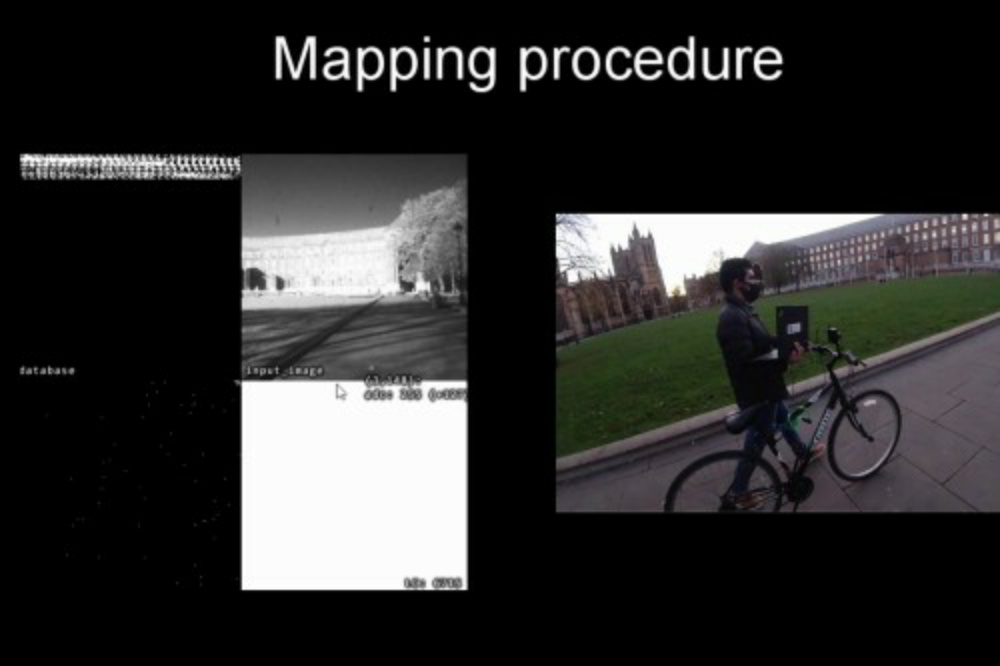

Hector Castillo-Elizalde and the team developed a mapping algorithm that runs entirely on board the sensor-processor device.

The algorithm is deceptively simple: when a new image arrives, the algorithm decides whether it is sufficiently different from what it has seen before. If it is, it stores some of the image's data; if not, it discards the image.
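The store-or-discard rule described above can be sketched in a few lines of Python. This is only an illustration and not the team's on-sensor implementation: the binary descriptor format, the Hamming-distance comparison, the choice to compare against every stored view, and the names `hamming`, `update_catalogue` and `threshold` are all assumptions made for the example.

```python
from typing import List, Sequence

# Assumption: each view is summarised as a short list of 0/1 values.
Descriptor = Sequence[int]


def hamming(a: Descriptor, b: Descriptor) -> int:
    """Count the positions where two equal-length descriptors differ."""
    return sum(x != y for x, y in zip(a, b))


def update_catalogue(catalogue: List[Descriptor],
                     new_view: Descriptor,
                     threshold: int) -> bool:
    """Store new_view only if it differs enough from every stored view.

    Returns True if the view was stored and False if it was discarded.
    """
    if all(hamming(new_view, stored) >= threshold for stored in catalogue):
        catalogue.append(new_view)
        return True
    return False


# Three incoming views: the second is almost identical to the first.
catalogue: List[Descriptor] = []
views = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 1],  # differs in 1 position: discarded
    [1, 1, 1, 1, 0, 0, 0, 0],  # differs in 4 positions: stored
]
decisions = [update_catalogue(catalogue, v, threshold=3) for v in views]
print(decisions)        # [True, False, True]
print(len(catalogue))   # 2
```

The threshold controls how dense the catalogue becomes: a lower value stores more views and uses more memory, while a higher value keeps only clearly distinct places.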

As the PPA device is moved around by, for example, a person or a robot, it collects a visual catalogue of views. In localisation mode, this catalogue can then be used to match any new image.

Importantly, no images go out of the PPA, only the key data that indicates where it is with respect to the visual catalogue. This makes the system more energy efficient and also helps with privacy.
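To illustrate the localisation mode, the sketch below matches a new view against a stored catalogue and returns only an index, never the image itself. It is a simplified example under stated assumptions: the binary descriptors, the Hamming-distance matching, and the names `localise` and `max_distance` are hypothetical and not taken from the team's work.

```python
from typing import List, Optional, Sequence

# Assumption: each view is summarised as a short list of 0/1 values.
Descriptor = Sequence[int]


def hamming(a: Descriptor, b: Descriptor) -> int:
    """Count the positions where two equal-length descriptors differ."""
    return sum(x != y for x, y in zip(a, b))


def localise(catalogue: List[Descriptor],
             query: Descriptor,
             max_distance: int) -> Optional[int]:
    """Return the index of the closest stored view.

    Returns None when the catalogue is empty or no stored view is
    within max_distance of the query.
    """
    if not catalogue:
        return None
    distances = [hamming(query, stored) for stored in catalogue]
    best_index = min(range(len(distances)), key=distances.__getitem__)
    return best_index if distances[best_index] <= max_distance else None


catalogue = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 0, 0, 0, 0],
    [1, 1, 1, 1, 1, 1, 1, 1],
]
near = localise(catalogue, [1, 1, 1, 0, 0, 0, 0, 0], max_distance=2)
far = localise(catalogue, [0, 0, 0, 0, 1, 1, 1, 1], max_distance=2)
print(near)  # 1
print(far)   # None
```

The only value that leaves the function is a catalogue index (or nothing at all), which mirrors the idea that only key data, not images, goes out of the device.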

The team believes that this type of artificial visual system, developed for visual processing and not necessarily to record images, is a first step towards making more efficient smart systems that can use visual information to understand and move in the world. Tiny, energy-efficient robots or smart glasses doing useful things for the planet and for people will need spatial understanding, which will come from being able to make and use maps.

The research has been partially funded by the Engineering and Physical Sciences Research Council (EPSRC), by a CONACYT scholarship to Hector Castillo-Elizalde and a CSC scholarship to Yanan Liu.

Paper

Expectations and perceptions of healthcare professionals for robot deployment in hospital environments during the COVID-19 pandemic

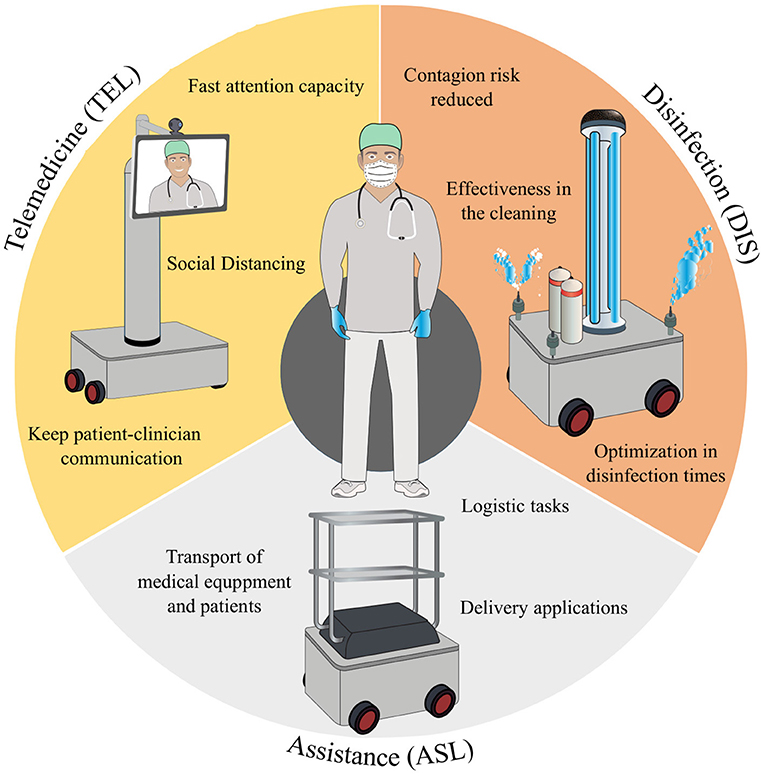

Image extracted from [1].

The recent outbreak of coronavirus disease 2019 (COVID-19), caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), has spread globally in an unprecedented way. In response, the efforts of most countries have been focused on containing and mitigating the effects of the pandemic. Given the transmission rate of the virus, the World Health Organization (WHO) recommended several strategies, such as physical distancing, to contain its spread. Driven by other factors, including the effects of this pandemic on the economy, some countries are now resuming economic activities, making it all the more necessary to ensure compliance with bio-safety protocols to contain further spread of the virus. The background to our project is therefore this diverse landscape of public health measures being adopted around the world, often iteratively refined by the authorities as their impacts on the social, economic, and political sectors become clearer.

In the health sector, stakeholders at all levels of the world’s health systems have been unwaveringly focused on providing medical care during this pandemic. The demands on health systems remain high despite the successful rollout of vaccination to many people across the globe. Numerous challenges have arisen, such as (1) the vulnerability and overloading of healthcare professionals, (2) the need for decongestion and reduction in the risk of contagion in intra-hospital environments, (3) the availability of biomedical technology, and (4) the sustainability of patient care. As a consequence, multiple strategies have been proposed to address these challenges. For instance, robotics is a promising part of the solution to help control and mitigate the effects of COVID-19.

Although the literature has shown that some robotics applications can overcome potential hazards and risks in hospital environments, actual deployments of those developments are limited. Moreover, few studies measure clinicians' perception and acceptance of these robotic platforms. This work [1] presents the design and implementation of several perception questionnaires to assess healthcare providers’ level of acceptance and awareness of robotics for COVID-19 control in clinical scenarios. The resulting data are available in a public repository. Specifically, 41 healthcare professionals (nurses, doctors, and biomedical engineers, among others) from two private healthcare institutions in the city of Bogotá D.C., Colombia, completed the surveys, assessing three categories: (DIS) Disinfection and cleaning robots, (ASL) Assistance, Service, and Logistics robots, and (TEL) Telemedicine and Telepresence robots. The surveys revealed a relatively low level of knowledge about robotics applications among the target population. They also revealed that some fear of being replaced by robots remains in the medical community. However, 82.9% of participants indicated a positive perception of the development and implementation of robotics in clinical environments.

The outcomes showed that healthcare staff expect these robots to interact socially within hospital environments and to communicate with users, connecting patients and doctors. Related work [2] on Socially Assistive Robotics presents a potential tool to support clinical care areas, promoting physical distancing and reducing the contagion rate. The paper [2] presents a long-term evaluation of a social robotic platform for gait neurorehabilitation.

The social robot is located in front of the patient during the exercise, guiding their performance through verbal and non-verbal cues and monitoring physiological progress. The platform thus enables physical distancing between the clinicians and the patient. A clinical validation with ten patients over 15 sessions was conducted in a rehabilitation center in Colombia. Results showed that the robot’s support improved the patients’ physiological progress and helped them to maintain a healthy posture, as evidenced in their thoracic and cervical posture. Perception and acceptance were also measured in this work, and clinicians highlighted that they trust the system as a complementary tool in rehabilitation.

Studies conducted before the pandemic showed that clinicians were worried about being replaced by the robot before any real interaction with it in their environments [3]. Considering the robot’s role during the COVID-19 pandemic, clinicians have a positive perception of the robot as a tool to manage rehabilitation procedures. Most healthcare personnel would consent to use the robot during the pandemic, as they consider that this tool can promote physical distancing and is a secure device for carrying out the healthcare protocol. Another encouraging result is that clinicians would recommend the robot to other colleagues and institutions to support rehabilitation during the COVID-19 pandemic.

These studies are funded by the Royal Academy of Engineering – Pandemic Preparedness (Grant EXPP20211\1\183). The project aims to develop robotic strategies in developing countries for monitoring unsafe conditions related to human behavior and for planning the disinfection of outdoor and indoor environments. It forms an international cooperation network led by the Colombian School of Engineering Julio Garavito, with lead investigator Carlos A. Cifuentes, and supported by Professor Subramanian Ramamoorthy of the University of Edinburgh, along with researchers and clinicians from Latin America (Colombia, Brazil, Argentina, and Chile).

References

- [1] Sierra, S., Gomez-Vargas, D., Cespedes, N., Munera, M., Roberti, F., Barria, P., Ramamoorthy, S., Becker, M., Carelli, R., Cifuentes, C.A. (2021). Expectations and Perceptions of Healthcare Professionals for Robot Deployment in Hospital Environments during the COVID-19 Pandemic. Frontiers in Robotics and AI.

- [2] Cespedes, N., Raigoso, D., Munera, M., Cifuentes, C.A. (2021). Long-Term Social Human-Robot Interaction for Neurorehabilitation: Robots as a tool to support gait therapy in the Pandemic. Frontiers in Neurorobotics.

- [3] Casas, J., Cespedes, N., Cifuentes, C.A., Gutierrez, L., M., Rincon-Roncancio, Munera, M. (2019). Expectations vs Reality: Attitudes Towards a Socially Assistive Robot in Cardiac Rehabilitation. Appl. Sci., 9(21), 4651.