An easier way to teach robots new skills

Working With Robots With Different Levels of Collaboration

DeepMind’s latest research at ICLR 2022

MimicEducationalRobots teach robotics for the future

The robotics industry is changing. The days of industrial robot arms working behind enclosures, performing identical pre-programmed tasks, are coming to an end. Robots that can interact with each other and with other equipment are becoming standard, and robots are expanding into more aspects of our lives. My name is Brett Pipitone, and I am the founder, CEO, and sole employee of mimicEducationalRobots. I believe that robots will soon become an inescapable part of modern life, and I seek to prepare today’s students to work with these emerging technologies.

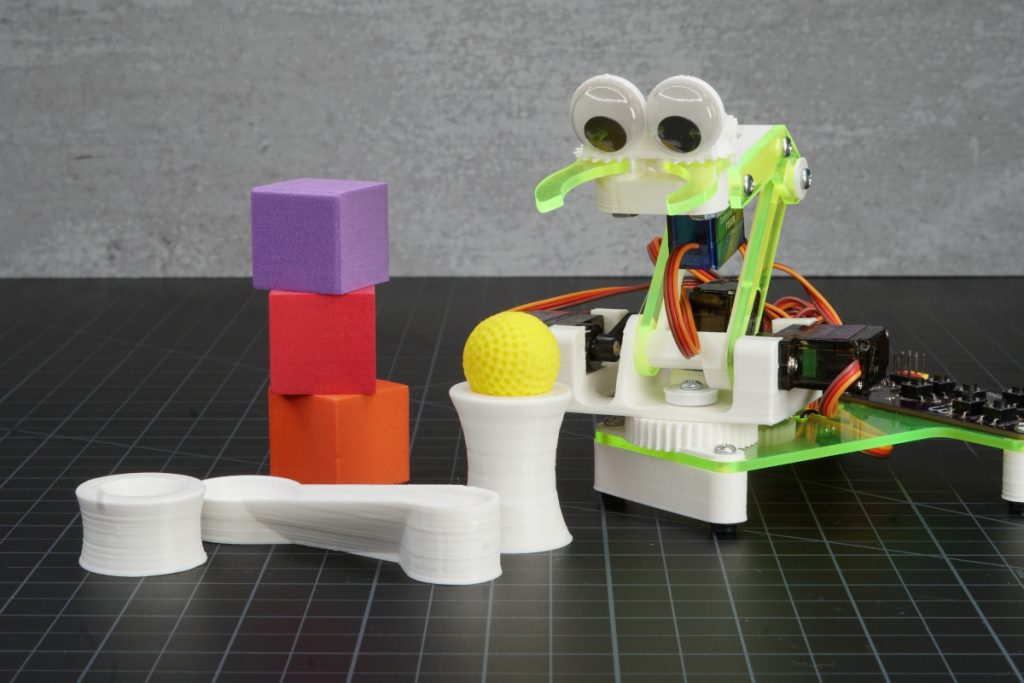

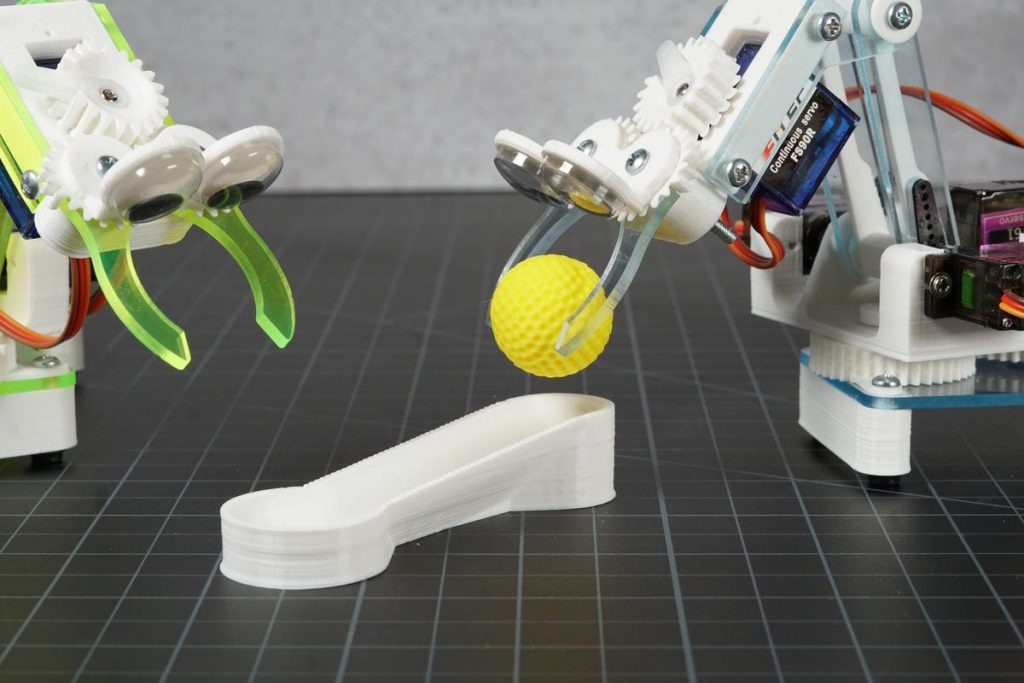

The mimicEducationalRobots product line consists of a family of three robots. The largest and most sophisticated is mimicArm. The adorable tinyBot is small and capable. The newest robot, bitsyBot (currently on Kickstarter) is perfect for those taking the first steps into the robotics world. Despite having different features, all three robots are designed to communicate with each other and use special sensors to make decisions about their environment. Interfaces are simple but powerful, allowing users to learn quickly and without frustration.

mimicEducationalRobots believes that every student will encounter robots in their everyday life, no matter their career path. Learning robotics allows students to become comfortable and familiar with technology that is rapidly becoming commonplace in day-to-day life. Through their combinations of features, the mimicEducationalRobots products introduce technology that’s still in its infancy, such as human/robot interaction and cooperative robotics, at a level students can understand. This is why every mimicEducationalRobots robot starts with manual control, allowing students to get the feel of their robot and what it can and can’t do. Once they’ve mastered manual control, programming is a smaller leap. The mimicEducationalRobots programming software simplifies this transition by reflecting the same motions the students have been making manually with simple commands like “robotMove” and “robotGrab”.

For more complex programs, mimicEducationalRobots believes that their robots should mimic industry as closely as possible. This means doing as much as possible with the simplest possible sensor. Things start small, with a great big tempting pushable button called, incidentally, “greatBigButton”. This is the students’ first introduction to human interaction as they program their robot to react to a button press. From there things get much more exciting without getting very much more complicated. For example, an array of non-contact IR thermometers called Grid-EYE allows mimicArm to detect faces using nothing but body heat. A simple IR proximity sensor allows tinyBot or bitsyBot to react when offered a block before the block touches any part of the robot. There’s even a cable that allows robots to communicate with each other and react to what the other is doing. These simple capabilities allow students to create a wide range of robotic behaviors.
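To give a feel for how little processing a heat-based detector needs, here is a minimal Python sketch. It is not the mimicArm software: the function names, the 3 °C margin, and the four-pixel minimum are all hypothetical choices made for illustration. It assumes only that the sensor delivers an 8x8 grid of temperatures in degrees Celsius, and it finds a warm region rather than recognizing a face as such.

```python
from statistics import median

GRID_SIZE = 8  # assumed: the thermal sensor reports an 8x8 grid of temperatures


def warm_pixels(grid, margin_c=3.0):
    """Return (row, col) positions at least margin_c warmer than the background.

    The background is estimated as the median of all pixels, which stays
    close to room temperature as long as the warm object fills less than
    half of the frame. margin_c is a hypothetical threshold.
    """
    background = median(t for row in grid for t in row)
    return [
        (r, c)
        for r, row in enumerate(grid)
        for c, t in enumerate(row)
        if t - background >= margin_c
    ]


def heat_source_present(grid, margin_c=3.0, min_pixels=4):
    """True when enough warm pixels are seen to suggest a person is nearby."""
    return len(warm_pixels(grid, margin_c)) >= min_pixels


def heat_centroid(grid, margin_c=3.0):
    """Average (row, col) of the warm pixels, or None if there are none."""
    pixels = warm_pixels(grid, margin_c)
    if not pixels:
        return None
    mean_row = sum(r for r, _ in pixels) / len(pixels)
    mean_col = sum(c for _, c in pixels) / len(pixels)
    return (mean_row, mean_col)


# Example frame: a 22 °C room with a 2x2 warm patch at 30 °C.
frame = [[22.0] * GRID_SIZE for _ in range(GRID_SIZE)]
for r in (3, 4):
    for c in (5, 6):
        frame[r][c] = 30.0

if heat_source_present(frame):
    print("Warm object centered near", heat_centroid(frame))
```

A robot program could use the centroid to decide which way to turn toward the person, in the same spirit as reacting to a button press: one simple sensor reading, one simple decision.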

mimicEducationalRobots is a homegrown business designed and built by an engineer and dad passionate about teaching people of all ages about robotics. I created the robots’ brains using a bare circuit board, a template, some solder paste, and tweezers. Every component is added by hand and the board is soldered together with a toaster oven I modified. Once cooled, the boards are programmed using a modified Arduino UNO R3, one of the best technological tools for beginners.

Other physical robot parts are designed using 3D modeling software and made either on a 3D printer or a CNC router. I have two 3D printers in my basement running 24 hours a day, producing at least 20 robot kits a week. The CNC router requires a great deal more supervision but is capable of turning out four sets of beautiful neon plastic parts every 30 minutes.

mimicEducationalRobots is a new kind of company, producing a new kind of product, for a new kind of consumer. Its products demonstrate just how fundamentally technology, and in particular open source technology, has changed our world. I hope students learning on mimicArm, tinyBot, or bitsyBot will help create the next life-changing technological leap.

To learn more about the family of mimicEducationalRobots visit www.mimicRobots.com

Robotic Disruption in US Workforce Leads to New Opportunities and Challenges

Sensing Parkinson’s symptoms

MyoExo integrates a series of sensors into a wearable device capable of detecting slight changes in muscle strain and bulging, enabling it to measure and track the symptoms of Parkinson’s disease. Credit: Oluwaseun Araromi

By Matthew Goisman/SEAS Communications

Nearly one million people in the United States live with Parkinson’s disease. The degenerative condition affects the neurons in the brain that produce the neurotransmitter dopamine, which can impact motor function in multiple ways, including muscle tremors, limb rigidity and difficulty walking.

There is currently no cure for Parkinson’s disease, and current treatments are limited by a lack of quantitative data about the progress of the disease.

MyoExo, a translation-focused research project based on technology developed at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) and the Wyss Institute for Biologically Inspired Engineering, aims to provide that data. The team is refining the technology and starting to develop a business plan as part of the Harvard Innovation Lab’s venture program. The MyoExo wearable device aims not only to provide remote monitoring for patients in an at-home setting but also to be sensitive enough to aid early diagnosis of Parkinson’s disease.

“This is a disease that’s affecting a lot of people and it seems like the main therapeutics that tackle this have not changed significantly in the past several decades,” said Oluwaseun Araromi, Research Associate in Materials Science and Mechanical Engineering at SEAS and the Wyss Institute.

The MyoExo technology consists of a series of wearable sensors, each one capable of detecting slight changes in muscle strain and bulging. When integrated into a wearable device, the data can provide what Araromi described as “muscle-centric physiological signatures.”

“The enabling technology underlying this is a sensor that detects small changes in the shape of an object,” he said. “Parkinson’s disease, especially in its later stages, really expresses itself as a movement disorder, so sensors that can detect shape changes can also detect changes in the shape of muscle as people move.”

MyoExo emerged from research done in the Harvard Biodesign Lab of Conor Walsh, the Paul A. Maeder Professor of Engineering and Applied Sciences, and the Microrobotics Lab of Rob Wood, the Charles River Professor of Engineering and Applied Sciences at SEAS. Araromi, Walsh and Wood co-authored a paper on their research into resilient wearable sensors in November 2020, around the same time the team began to focus on medical applications of the technology.

“If we had these hypersensitive sensors in something that a person was wearing, we could detect how their muscles were bulging,” Walsh said. “That was more application-agnostic. We didn’t know exactly where that would be the most important, and I credit Seun and our Wyss collaborators for being the ones to think about identifying Parkinson’s applications.”

Araromi sees the MyoExo technology as having value for three major stakeholders: the pharmaceutical industry, clinicians and physicians, and patients. Pharmaceutical companies could use data from the wearable system to quantify their medications’ effect on Parkinson’s symptoms, while clinicians could determine if one treatment regimen is more effective than another for a specific patient. Patients could use the system to track their own treatment, whether that’s medication, physical therapy, or both.

“Some patients are very incentivized to track their progress,” Araromi said. “They want to know that if they were really good last week and did all of the exercises that they were prescribed, their wearable device would tell them their symptomatology has reduced by 5% compared to the week before. We envision that as something that would really encourage individuals to keep and adhere to their treatment regimens.”

MyoExo’s sensor technology is based on research conducted in the Harvard Biodesign Lab of Conor Walsh and the Microrobotics Lab of Rob Wood at SEAS, and further developed through the Wyss Institute for Biologically Inspired Engineering and Harvard Innovation Labs venture program. Credit: Oluwaseun Araromi

Araromi joined SEAS and the Wyss Institute as a postdoctoral researcher in 2016, having earned a Ph.D. in mechanical engineering from the University of Bristol in England and completed a postdoc at the Swiss Federal Institute of Technology Lausanne.

His interest in sensor technology made him a great fit for research spanning the Biodesign and Microrobotics labs, and his early work included helping develop an exosuit to aid with walking.

“I was initially impressed with Seun’s strong background in materials, transduction and physics,” Walsh said. “He really understood how you’d think about creating novel sensors with soft materials. Seun’s really the translation champion for the project in terms of driving forward the technology, but at the same time trying to think about the need in the market, and how we demonstrate that we can meet that.”

The technology is currently in the human testing phase, with support from the Wyss Institute Validation Project program, to demonstrate proof-of-concept detection of clinically relevant metrics. Araromi wants to show that the wearable device can quantify the difference between the muscle movements of someone with Parkinson’s and someone without. From there, the goal is to demonstrate that the device can quantify whether a person has early- or late-stage symptoms of the disease, as well as their response to treatment.

“We are evaluating our technology and validating our technical approach, making sure that as it’s currently constructed, even in this crude form, we can get consistent data and results,” Araromi said. “We’re doing this in a small pilot phase, such that if there are issues, we can fix those issues, and then expand to a larger population where we would test our device on more individuals with Parkinson’s disease. That should really convince ourselves and hopefully the community that we are able to reach a few key technical milestones, and then garner more interest and potentially investment and partnership.”

Interactive Perception at Toyota Research Institute

Dr. Carolyn Matl, Research Scientist at Toyota Research Institute, explains why Interactive Perception and soft tactile sensors are critical for manipulating challenging objects such as liquids, grains, and dough. She also dives into “StRETcH”, a Soft to Resistive Elastic Tactile Hand: a variable-stiffness soft tactile end-effector presented by her research group.

Carolyn Matl

Carolyn Matl is a research scientist at the Toyota Research Institute, where she works on robotic perception and manipulation with the Mobile Manipulation Team. She received her B.S.E. in Electrical Engineering from Princeton University in 2016, and her Ph.D. in Electrical Engineering and Computer Sciences from the University of California, Berkeley in 2021. At Berkeley, she was awarded the NSF Graduate Research Fellowship and was advised by Ruzena Bajcsy. Her dissertation work focused on developing and leveraging non-traditional sensors for robotic manipulation of complicated objects and substances like liquids and doughs.

Links

- StRETcH: a Soft to Resistive Elastic Tactile Hand

- Carolyn Matl’s personal website

Engineers Meet Industry Demand By Growing Their Robotics & IAS Expertise

Molecular robots that work cooperatively in swarms

High Performance Inertial Sensors for Robotic Systems

New method allows robot vision to identify occluded objects

#ICRA2022 Science Communication Awards

Number of awards: 2

Amount: travel and accommodation (up to 2k) + free registration

Application deadline: 22 April 2022

We are offering up to 2 Science Communication Awards to motivated roboticists keen on helping us cover ICRA. Your coverage could include videos, interviews, podcasts, blogs, social media, or art. Please apply by sending the following information by April 22 to sabine.hauert@bristol.ac.uk with the subject [ICRA SciCommAward]:

- Name

- Who you are (one paragraph)

- Previous experience in science communication (one paragraph with links to material)

- What you’d like to cover at ICRA and where you will publish material produced (one paragraph)

- Agreement to cross-post your content to the ICRA website or other third-party channels (yes/no).

- Estimated travel budget

Awards will be announced on 25 April.