Open-sourcing MuJoCo

ep.352: Robotics Grasping and Manipulation Competition Spotlight, with Yu Sun

Yu Sun, Professor of Computer Science and Engineering at the University of South Florida, created and organized the Robotic Grasping and Manipulation Competition. Yu talks about the impact robots will have in domestic environments, the disparity between industry and academia showcased by competitions, and the commercialization of research.

Yu Sun

Yu Sun is a Professor in the Department of Computer Science and Engineering at the University of South Florida (Assistant Professor 2009-2015, Associate Professor 2015-2020, Associate Chair of Graduate Affairs 2018-2020). He was a Visiting Associate Professor at Stanford University from 2016 to 2017, and received his Ph.D. degree in Computer Science from the University of Utah in 2007. He then completed postdoctoral training at Mitsubishi Electric Research Laboratories (MERL), Cambridge, MA (2007-2008) and at the University of Utah (2008-2009).

He initiated the IEEE RAS Technical Committee on Robotic Hands, Grasping, and Manipulation and served as its first co-Chair. Yu Sun has also served as an Associate Editor and Senior Editor on several editorial boards, including those of IEEE Transactions on Robotics, IEEE Robotics and Automation Letters (RA-L), ICRA, and IROS.

ep.351: Early Days of ICRA Competitions, with Bill Smart

Bill Smart, Professor of Mechanical Engineering and Robotics at Oregon State University, helped start competitions as part of ICRA. In this episode, Bill dives into the high-level decisions involved with creating a meaningful competition. The conversation explores how competitions are there to showcase research, potential ideas for future competitions, the exciting phase of robotics we are currently in, and the intersection of robotics, ethics, and law.

Bill Smart

Dr. Smart does research in the areas of robotics and machine learning. In robotics, Smart is particularly interested in improving the interactions between people and robots; enabling robots to be self-sufficient for weeks and months at a time; and determining how they can be used as personal assistants for people with severe motor disabilities. In machine learning, Smart is interested in developing strategies for teaching robots to act effectively (or even optimally), based on long-term interactions with the world and given intermittent and at times incorrect feedback on their performance.

Soft Grippers Can Handle Small and Delicate Parts With Greater Ease

New imaging method makes tiny robots visible in the body

By Florian Meyer

How can a blood clot be removed from the brain without any major surgical intervention? How can a drug be delivered precisely into a diseased organ that is difficult to reach? Those are just two examples of the countless innovations envisioned by researchers in the field of medical microrobotics. Tiny robots promise to fundamentally change future medical treatments: one day, they could move through a patient’s vasculature to eliminate malignancies, fight infections or provide precise diagnostic information entirely noninvasively. In principle, the researchers argue, the circulatory system might serve as an ideal delivery route for the microrobots, since it reaches all organs and tissues in the body.

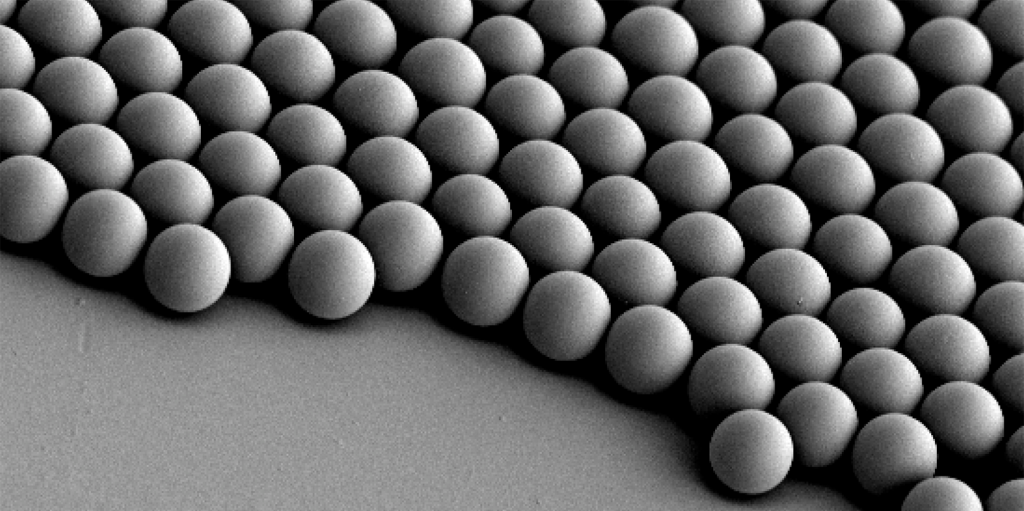

For such microrobots to be able to perform the intended medical interventions safely and reliably, they must not be larger than a biological cell. In humans, a cell has an average diameter of 25 micrometres – a micrometre is one millionth of a metre. The smallest blood vessels in humans, the capillaries, are even thinner: their average diameter is only 8 micrometres. The microrobots must be correspondingly small if they are to pass through the smallest blood vessels unhindered. However, such a small size also makes them invisible to the naked eye, and science, too, has not yet found a technical solution to detect and track the micron-sized robots individually as they circulate in the body.

Tracking circulating microrobots for the first time

“Before this future scenario becomes reality and microrobots are actually used in humans, the precise visualisation and tracking of these tiny machines is absolutely necessary,” says Paul Wrede, who is a doctoral fellow at the Max Planck ETH Center for Learning Systems (CLS). “Without imaging, microrobotics is essentially blind,” adds Daniel Razansky, Professor of Biomedical Imaging at ETH Zurich and the University of Zurich and a member of the CLS. “Real-time, high-resolution imaging is thus essential for detecting and controlling cell-sized microrobots in a living organism.” Imaging is also a prerequisite for monitoring therapeutic interventions performed by the robots and for verifying that they have carried out their task as intended. “The inability to provide real-time feedback on the microrobots was therefore a major obstacle on the way to clinical application.”

Together with Metin Sitti, a world-leading microrobotics expert who is also a CLS member as Director at the Max Planck Institute for Intelligent Systems (MPI-IS) and ETH Professor of Physical Intelligence, and other researchers, the team has now achieved an important breakthrough in efficiently merging microrobotics and imaging. In a study just published in the scientific journal Science Advances, they managed for the first time to clearly detect and track tiny robots as small as five micrometres in real time in the brain vessels of mice using a non-invasive imaging technique.

The researchers used microrobots with sizes ranging from 5 to 20 micrometres. The tiniest robots are about the size of red blood cells, which are 7 to 8 micrometres in diameter. This size makes it possible for the intravenously injected microrobots to travel even through the thinnest microcapillaries in the mouse brain.

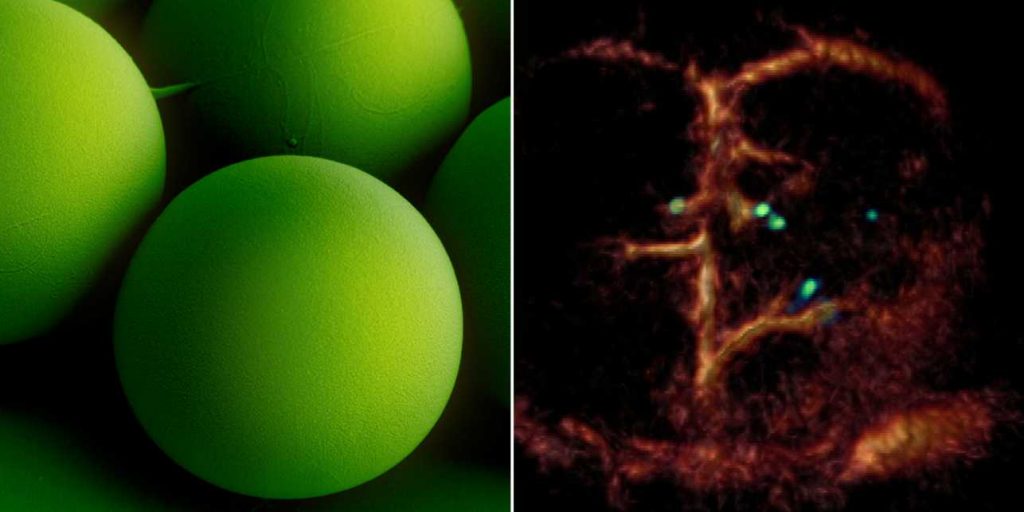

A breakthrough: Tiny circulating microrobots, which are as small as red blood cells (left picture), were visualised one-by-one in the blood vessels of mice with optoacoustic imaging (right picture). Image: ETH Zurich / Max Planck Institute for Intelligent Systems

The researchers also developed a dedicated optoacoustic tomography technology to detect the tiny robots one by one, in high resolution and in real time. This unique imaging method makes it possible to detect the tiny robots in deep and hard-to-reach regions of the body and brain, which would not have been possible with optical microscopy or any other imaging technique. The method is called optoacoustic because it combines light and sound: light is first emitted into the tissue and absorbed there, and the absorption produces tiny ultrasound waves that can be detected and analysed to produce high-resolution volumetric images.
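The reconstruction step can be illustrated with delay-and-sum backprojection, a standard textbook approach to optoacoustic tomography. The sketch below is a toy 2D version, not the dedicated reconstruction developed by the ETH Zurich and MPI-IS team: it assumes a single ideal point absorber, a ring of 64 ideal ultrasound sensors, a uniform speed of sound of 1500 m/s (roughly that of soft tissue), a 40 MHz sampling rate and noiseless signals. All function names and parameter values are hypothetical.

```python
import math

SPEED_OF_SOUND = 1500.0  # m/s, assumed uniform (roughly soft tissue)
FS = 40e6                # sampling rate in Hz (assumed)
STEP = 0.5e-3            # image pixel spacing in metres (assumed)


def delay_samples(p, s):
    """Acoustic time of flight from point p to sensor s, in whole samples."""
    return round(math.dist(p, s) / SPEED_OF_SOUND * FS)


def simulate(source, sensors, n_samples):
    """Each ideal sensor records a unit spike when the ultrasound wave,
    generated by light absorption at `source`, reaches it."""
    signals = []
    for s in sensors:
        sig = [0.0] * n_samples
        k = delay_samples(source, s)
        if k < n_samples:
            sig[k] = 1.0
        signals.append(sig)
    return signals


def delay_and_sum(signals, sensors, grid):
    """For each pixel, sum every sensor's sample at that pixel's time of flight.
    Contributions add up coherently only where the absorber actually is."""
    image = {}
    for p in grid:
        total = 0.0
        for sig, s in zip(signals, sensors):
            k = delay_samples(p, s)
            if k < len(sig):
                total += sig[k]
        image[p] = total
    return image


if __name__ == "__main__":
    R = 0.01  # radius of the sensor ring in metres (assumed)
    sensors = [(R * math.cos(2 * math.pi * i / 64), R * math.sin(2 * math.pi * i / 64))
               for i in range(64)]
    grid = [(i * STEP, j * STEP) for i in range(-10, 11) for j in range(-10, 11)]
    source = (2 * STEP, -4 * STEP)  # hidden absorber, placed on a grid point
    signals = simulate(source, sensors, n_samples=1000)
    image = delay_and_sum(signals, sensors, grid)
    best = max(image, key=image.get)
    print(best == source, image[best])  # True 64.0
```

Because each sensor's signal is summed back only at pixels whose time of flight matches, the contributions pile up at the absorber's true location and scatter elsewhere. Practical systems typically add filtering, noise handling and a 3D sensor geometry on top of this basic idea.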

Janus-faced robots with gold layer

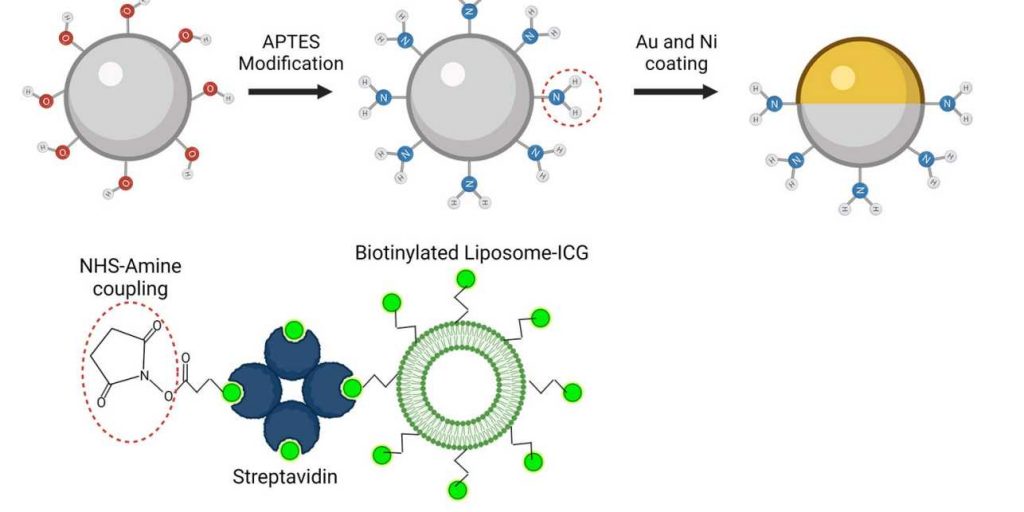

To make the microrobots highly visible in the images, the researchers needed a suitable contrast material. For their study, they therefore used spherical, silica particle-based microrobots with a so-called Janus-type coating. This type of robot has a very robust design and is well suited to complex medical tasks. It is named after the Roman god Janus, who had two faces. In the robots, the two halves of the sphere are coated differently. In the current study, the researchers coated one half of the robot with nickel and the other half with gold.

The spherical microrobots consist of silica-based particles and have been coated half with nickel (Ni) and half with gold (Au) and loaded with green-dyed nanobubbles (liposomes). In this way, they can be detected individually with the new optoacoustic imaging technique. Image: ETH Zurich / MPI-IS

“Gold is a very good contrast agent for optoacoustic imaging,” explains Razansky. “Without the gold layer, the signal generated by the microrobots is just too weak to be detected.” In addition to gold, the researchers also tested the use of small bubbles called nanoliposomes, which contained a fluorescent green dye that also served as a contrast agent. “Liposomes also have the advantage that you can load them with potent drugs, which is important for future approaches to targeted drug delivery,” says Wrede, the first author of the study. The potential uses of liposomes will be investigated in a follow-up study.

Furthermore, the gold also helps minimise the cytotoxic effect of the nickel coating. After all, if microrobots are to operate in living animals or humans in the future, they must be made biocompatible and non-toxic, which is the subject of ongoing research. In the present study, the researchers used nickel as a magnetic drive medium and a simple permanent magnet to pull the robots. In follow-up studies, they want to test the optoacoustic imaging with more complex manipulations using rotating magnetic fields.

“This would give us the ability to precisely control and move the microrobots even in strongly flowing blood,” says Metin Sitti. “In the present study we focused on visualising the microrobots. The project was tremendously successful thanks to the excellent collaborative environment at the CLS that allowed combining the expertise of the two research groups at MPI-IS in Stuttgart for the robotic part and ETH Zurich for the imaging part,” Sitti concludes.

Engineers develop a way to use everyday WiFi to help robots see and navigate better indoors

A quadcopter that works in the air and underwater and also has a suction cup for hitching a ride on a host

New technique can safely guide an autonomous robot through a highly uncertain environment

Talking Automate 2022 with ATI Industrial Automation

A draft open standard for an Ethical Black Box

About 5 years ago we proposed that all robots should be fitted with the robot equivalent of an aircraft Flight Data Recorder to continuously record sensor and relevant internal status data. We call this an ethical black box (EBB). We argued that an ethical black box will play a key role in the process of discovering why and how a robot caused an accident, and is thus an essential part of establishing accountability and responsibility.
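To make the flight-data-recorder analogy concrete, here is a minimal Python sketch of the continuous-recording idea, assuming a fixed storage budget: a bounded buffer that always keeps the most recent records and overwrites the oldest. The class and field names (`EthicalBlackBox`, `EbbRecord`, `sensors`, `internal_state`) are hypothetical illustrations, not taken from the draft standard, which defines its own data specification and format.

```python
import time
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class EbbRecord:
    # Hypothetical fields for illustration only; the draft EBB standard
    # defines its own data specification.
    timestamp: float
    sensors: dict
    internal_state: dict


class EthicalBlackBox:
    """Keep only the most recent `capacity` records, overwriting the oldest,
    in the manner of an aircraft flight data recorder."""

    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        # A deque with maxlen discards from the left (oldest) when full.
        self._buffer = deque(maxlen=capacity)

    def record(self, sensors: dict, internal_state: dict,
               timestamp: Optional[float] = None) -> None:
        ts = time.time() if timestamp is None else timestamp
        # Copy the dicts so later changes by the caller cannot alter the log.
        self._buffer.append(EbbRecord(ts, dict(sensors), dict(internal_state)))

    def dump(self) -> list:
        """Return a snapshot of the stored records, oldest first,
        e.g. for an accident or near-miss investigation."""
        return list(self._buffer)


if __name__ == "__main__":
    ebb = EthicalBlackBox(capacity=3)
    for t in range(5):
        ebb.record({"bumper_pressed": t == 4}, {"action": "move_forward"},
                   timestamp=float(t))
    print([r.timestamp for r in ebb.dump()])  # [2.0, 3.0, 4.0]
```

A bounded buffer is just one way to reconcile continuous recording with finite storage; how much data an EBB must retain, and for how long, is exactly the kind of question a standard has to settle.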

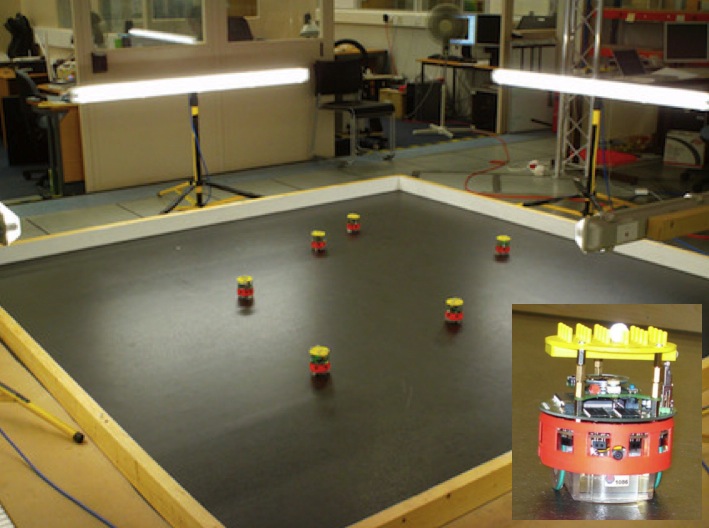

Since then, within the RoboTIPS project, we have developed and tested several model EBBs, including one for an e-puck robot that I wrote about in this blog, and another for the MIRO robot. With some experience under our belts, we have now drafted an Open Standard for the EBB for social robots, initially as a paper submitted to the International Conference on Robot Ethics and Standards. Let me now explain first why we need a standard, and second why it should be an open standard.

Why do we need a standard specification for an EBB? As we outline in our new paper, there are four reasons:

- A standard approach to EBB implementation in social robots will greatly benefit accident and incident (near miss) investigations.

- An EBB will provide social robot designers and operators with data on robot use that can support both debugging and functional improvements to the robot.

- An EBB can be used to support robot ‘explainability’ functions to allow, for instance, the robot to answer ‘Why did you just do that?’ questions from its user. And,

- a standard allows EBB implementations to be readily shared and adapted for different robots and, we hope, encourage manufacturers to develop and market general purpose robot EBBs.

And why should it be an Open Standard? Bruce Perens, author of The Open Source Definition, outlines a number of criteria an open standard must satisfy, including:

- “Availability: Open standards are available for all to read and implement.

- Maximize End-User Choice: Open Standards create a fair, competitive market for implementations of the standard.

- No Royalty: Open standards are free for all to implement, with no royalty or fee.

- No Discrimination: Open standards and the organizations that administer them do not favor one implementor over another for any reason other than the technical standards compliance of a vendor’s implementation.

- Extension or Subset: Implementations of open standards may be extended, or offered in subset form.”

These are *good* reasons.

The most famous and undoubtedly the most impactful Open Standards are those that were written for the Internet. They were, and still are, called Requests for Comments (RFCs) to reflect the fact that they were, especially in the early years, drafts for revision. As a mark of respect we also regard our draft 0.1 Open Standard for an EBB for Social Robots as an RFC. You can find draft 0.1 in Annex A of the paper here.

Not only is this a first draft, it is also incomplete: it covers only the specification of the data that should be saved in an EBB for social robots, and the format of that data. Given that the EBB data specification is at the heart of the EBB standard, we feel that this is sufficient to be opened up for comments and feedback. We will continue to extend the specification, with subsequent versions also published on arXiv.

Let me now encourage comments and feedback. Please feel free either to submit comments on this blog post (this way everyone can see them) or to contact me directly via email. All constructive comments that result in revisions to the standard will be acknowledged in the standard.