Cincoze DI-1000 Drives AGVs in Factories and Warehouses

Mechanical engineering professor to design a ‘soft’ robot that could be used in space

You’re doing it wrong: You need to compare apples to oranges

How Robots Are Redefining Health Care: 6 Recent Innovations

Robot centenary – 100 years since ‘robot’ made its debut

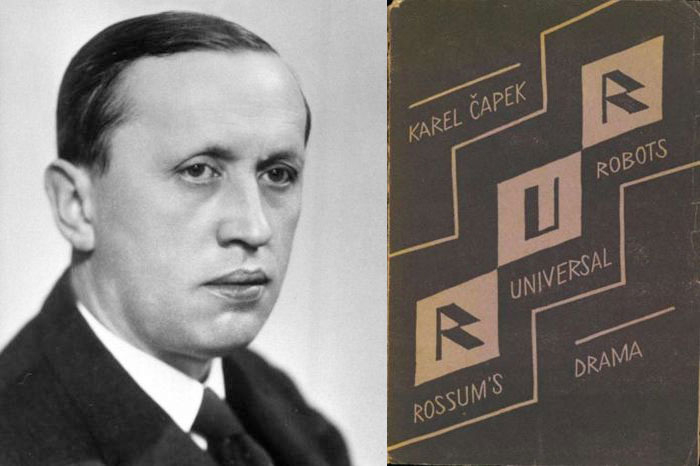

Robotics remained at the leading edge of technology development in 2021, yet it was one hundred years earlier, in 1921, that the word robot (in its modern sense) made its public debut. Czech author Karel Čapek’s play R.U.R. (Rossum’s Universal Robots) imagined a world in which humanoids called ‘roboti’ were created in a factory. Karel’s brother, the artist and writer Josef Čapek, had first coined the term robot before Karel adopted it for this theatrical vision.

The Slavic root of the word is older still, and its first known appearance in English dates back nearly two hundred years. During the time of the Habsburgs and the Austro-Hungarian Empire, robot referred to a form of forced labour similar to slavery. In Čapek’s play, the roboti were being manufactured as serfs to serve human needs.

Nowadays we would see Čapek’s creations more as androids than as robots in the modern sense. However, the outcome of the play, with the robots rebelling against the humans and taking over the world, has since become a trope of science fiction which persists to this day. And yet the intended message in Čapek’s play wasn’t about the inherent risks of robots but about the dehumanising dangers of rampant mechanisation. We know that popular culture has taken a different reading from the play, of course, with robots on screen and in print more likely to be cast as the bad guy, although there are some notable exceptions, too. It’s interesting to wonder how different the mainstream image of robots might be today if Čapek’s play had placed his roboti and their owners in a more mutually benevolent relationship.

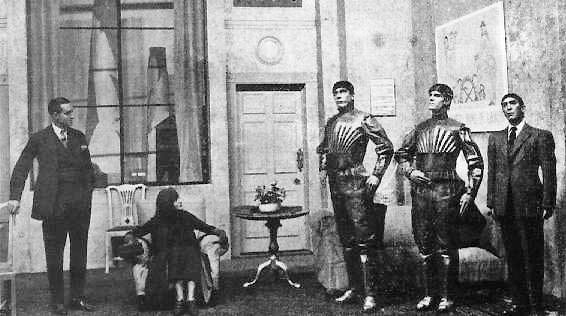

Čapek’s robots first appeared on the screen in a BBC television production of R.U.R. in 1938. Three years later the word ‘robotics’ was first used as a term for the field of research and technology that develops and manufactures robots. Like ‘robot’ in its modern sense, ‘robotics’ also has its origins in the creative imagination, thanks to a man who was born in the same year that the Čapek brothers were bringing the modern ‘robot’ into the world. Science fiction writer Isaac Asimov famously coined his three laws of robotics, which continue to resonate in discussions about the ethical use of robots, in 1941. Incidentally, Asimov wasn’t a fan of the play itself, but his laws were designed precisely to prevent the kind of tragedy imagined in Čapek’s play.

The next generation of robots will be shape-shifters

Training robots with realistic pain expressions can reduce doctors’ risk of causing pain during physical exams

New study shows methods robots can use to self-assess their own performance

A text-reading robot may help users manage negative emotions

Increase Manufacturing Efficiency with MiR Robots | AHS

Christian Fritz: Full-stack Robotics and Growing an App Marketplace | Sense Think Act Podcast #15

In this episode, Audrow Nash speaks to Christian Fritz, CEO and founder of Transitive Robotics. Transitive Robotics makes software for building full-stack robotics applications. In this conversation, they talk about how Transitive Robotics’ software works, their business model, sandboxing for security, creating a marketplace for robotics applications, and web tools in general.

Episode Links

- Download the episode

- Christian’s LinkedIn

- Transitive Robotics’ Website

- Transitive Robotics on LinkedIn

- Transitive Robotics’ Twitter

- Sign up for Transitive Robotics’ Beta

- Contact Transitive Robotics

Computer-on-Modules For Autonomous Intralogistics Vehicles

Event Cameras – An Evolution in Visual Data Capture

Over the past decade, camera technology has made gradual but significant improvements thanks to the mobile phone industry. This has accelerated multiple industries, including robotics. Today, Davide Scaramuzza discusses a step-change in camera innovation that has the potential to dramatically accelerate vision-based robotics applications.

Davide Scaramuzza takes a deep dive into event cameras, which operate fundamentally differently from traditional cameras. Instead of sampling every pixel on an imaging sensor at a fixed frequency, the “pixels” on an event camera all operate independently, and each responds to changes in illumination. This technology unlocks a multitude of benefits, including extremely high-speed imaging, removal of the concept of “framerate”, removal of data corruption due to having the sun in the sensor, reduced data throughput, and low power consumption. Tune in for more.
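To make the per-pixel behaviour more concrete, here is a minimal sketch in Python. It is not taken from the episode: it assumes the commonly used idealised model in which a pixel fires an event whenever its log intensity has changed by more than a contrast threshold since it last fired. The function name `frames_to_events`, the `threshold` value and the tiny 2x2 frames are all illustrative assumptions, and a real sensor fires asynchronously rather than at frame times.

```python
import math
from typing import List, Tuple

# (frame_index, row, col, polarity); polarity is +1 (brighter) or -1 (darker)
Event = Tuple[int, int, int, int]


def frames_to_events(
    frames: List[List[List[float]]],
    threshold: float = 0.2,  # assumed contrast threshold, in log-intensity units
    eps: float = 1e-3,       # avoids log(0) for fully dark pixels
) -> List[Event]:
    """Simulate event generation from a sequence of intensity frames."""
    # Each pixel remembers the log intensity at which it last fired.
    reference = [[math.log(v + eps) for v in row] for row in frames[0]]
    events: List[Event] = []
    for t, frame in enumerate(frames[1:], start=1):
        for r, row in enumerate(frame):
            for c, value in enumerate(row):
                current = math.log(value + eps)
                diff = current - reference[r][c]
                # Only pixels whose brightness changed enough produce output.
                if abs(diff) >= threshold:
                    events.append((t, r, c, 1 if diff > 0 else -1))
                    reference[r][c] = current
    return events


# Hypothetical 2x2 scene: one pixel brightens, later another one darkens.
frames = [
    [[0.5, 0.5], [0.5, 0.5]],
    [[0.5, 0.9], [0.5, 0.5]],
    [[0.5, 0.9], [0.2, 0.5]],
]
events = frames_to_events(frames)
print(events)  # [(1, 0, 1, 1), (2, 1, 0, -1)]
```

Only two of the eight pixel samples after the first frame produce any output, which illustrates where the reduced data throughput comes from: unchanged pixels stay silent.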

Davide Scaramuzza

Davide Scaramuzza is a Professor of Robotics and Perception at both departments of Informatics (University of Zurich) and Neuroinformatics (joint between the University of Zurich and ETH Zurich), where he directs the Robotics and Perception Group. His research lies at the intersection of robotics, computer vision, and machine learning, using standard cameras and event cameras, and aims to enable autonomous, agile navigation of micro drones in search-and-rescue applications.

Links

- Download mp3 (49.3 MB)

- Subscribe to Robohub using iTunes, RSS, or Spotify

- Support us on Patreon

Generation equality: Empowering and giving visibility to women in robotics

Photo credits: Studio Number One (SNO)

On March 8, International Women’s Day (IWD), we celebrate the political, socioeconomic and cultural achievements of women and the women’s rights movement towards gender equality. “Whilst the social and political rights of women are greater in some places than others, there is no country where gender equality has been achieved” says Mary Evans, professor at the London School of Economics and Political Science, in her book “The persistence of gender inequality” (Polity Press 2017). In 2022 this situation has not changed either globally or at the European level, as indicated in the EU Gender Equality index for 2020, where the average of the EU is 67.4% and the maximum is Sweden with 83.8%. Although there has been a clear commitment from the European Union on gender equality (especially in innovation and science), there are still structural forms of inequality that must be challenged and changed. It is not the aim of this article to analyse or comment on those, but to show what is being done and is available, especially in the European Union, for us to contribute as individuals and as a community towards gender equality in the field of robotics.

Any journey starts with a first step, so I asked Maja Hadziselimovic, member of the Board of Directors of euRobotics and active United Nations (UN) Generation Equality champion of women and girls in STEM, how a person (no matter the gender) can contribute to gender equality. She told me “There are three actions you can take to be part of Generation Equality: Empower women and girls in science; raise awareness about gender equality and raise the visibility of women in science”. So, let’s explore different activities linked to those actions.

Maja Hadziselimovic (Photo Credits: Marijana Bicvic / UN Women)

Empower women

Although inequalities still exist, the EU has a well-established regulatory framework on gender equality and has made significant progress over the last decades. In March 2020, the European Commission published the EU Gender Equality Strategy for 2020-2025, setting out their commitment towards “a Union where women and men, girls and boys, in all their diversity, are free to pursue their chosen path in life, have equal opportunities to thrive, and can equally participate in and lead our European society.”

Thus, it is not surprising that the EC is making it an eligibility criterion for all public bodies, higher education institutions and research organisations to have a Gender Equality Plan (GEP) in order to participate in Horizon Europe. Those looking for extra information on GEPs can take a look at the work of the EU-H2020 project SPEAR (Supporting and Implementing Plans for Gender Equality in Academia and Research), which provides SPEAR’s compass guide to help you draft and implement gender equality measures in your Research Performing Organisation (RPO), and GEARING ROLES (Gender Equality Actions in Research Institutions to traNsform Gender ROLES), with examples of implementation of GEPs in universities and research organisations, because ensuring effective implementation and avoiding resistance is not always easy.

Empowering women can also be done outside the EC sphere, at a national or local level. This is the case with the work of Ana-Maria Stancu, CEO of Bucharest Robots, founder of the association E-Civis, and member of the Board of Directors of euRobotics. She has a personal and professional mission to empower women and girls in the field of robotics in Romania: “One of the hashtags we are using in our work in Romania is #vinrobotii (robots are coming). We are not using this as a threat, but as a reminder that the robots are here and will develop further. So far, what I can see in my day-to-day work is that women are generally perceived as outsiders in this industry. Every time I talk to a potential client or public authority, the general discussion is led by me, but the minute they have a technical question, they unconsciously turn to my male colleagues. The main problem I see is that most of them do this unintentionally – which makes it even more problematic. It means that this perception is deeply rooted in people’s minds.” This probably sounds familiar to you, and gender bias can be found in many different fields. A good read in this regard is the book “Invisible Women” (Chatto & Windus 2019) by Caroline Criado Perez.

Ana-Maria Stancu (Photo Credits: Scuola di Robotica)

Ana-Maria underlines how important it is to be persistent in bringing women into the field of robotics: “There is no doubt that the robotics industry will see a tremendous growth in the very near future and will need lots of specialists. We have to make sure that this development will not include only men but will also reflect the activity of women. I believe this day – International Women’s Day – should be a reminder that we have to do more for more women in technical fields like robotics.”

To make sure women are not left behind in the robotics industry, Ana-Maria promotes robotics classes to all children and encourages girls to participate. The E-Civis association also organises robotics and programming summer schools just for girls, so they can learn in a pressure-free environment. From her experience in teaching programming and robotics in public schools in rural areas, she has no doubt that “girls are as interested as boys in this field. Every child loves robots! Let’s use this love to teach and encourage all of them to build them!”

Programming summer school for girls by E-Civis (Photo credits: E-Civis)

For those who do not have the time or opportunity to be involved in mentorship or to make this level of commitment, there is one thing that all of us can do in our everyday life: fight micro-aggressions, correct unconscious bias, avoid what Rebecca Solnit describes in her book “Men Explain Things To Me” (Haymarket Books, 2014), now commonly known as “mansplaining”, and provide a sexism-free workplace. An easy and hilarious read for women and men that offers different tools to fight sexism in the workplace is “Feminist Fight Club” by Jessica Bennett (Harper Wave, 2016). Of course, there is a multitude of excellent research papers, policy reports and books you can read, and just having a coffee with your female colleagues, listening to them or supporting them can already be of great help.

If you are a member of euRobotics, I would like you to encourage your female colleagues or employees to present themselves as candidates for the next elections. Our research board is screaming for some women power!

Raise awareness on gender equality

Integrating the gender dimension in research ensures that researchers question gender norms and stereotypes and address the evolving needs and social roles of women and men. It is a matter of improving scientific excellence and increasing the number of women in science, research and innovation. Networks and portals born as FP7 and Horizon 2020 projects are still in place today to raise awareness of gender equality, provide training and share knowledge. This is the case for GENDERACTION, GE Academy (Gender Equality Academy), GENDER-NET PLUS and GENPORT.

Since 2013, the European Commission has published the “She Figures” report every three years to monitor the state of gender equality in research and innovation in Europe. The ERA Progress report also monitors the implementation of the objectives set by the EC: gender equality in scientific careers, gender balance in decision making and integration of the gender dimension in R&I.

As an individual, you can publicly show your support for gender equality. You can join the Women’s Day march in your city or raise awareness on social media or in your workplace. You can download women’s rights posters and square images by Studio Number One for free here. Be part of the wave of change today.

Raise visibility of women in science

In 2014 a new grassroots community was started to support women in robotics across the world – the Women in Robotics network. Since 2020, the network founded by Andra Keay and Sabine Hauert has grown exponentially and become a non-profit organisation in the U.S. This global community supports women working (or interested in working) in the field of robotics. Their activities include local networking events, outreach, education, mentoring and the promotion of positive role models in robotics. Every year they also publish the “list of women in robotics you need to know” on Ada Lovelace Day (13 October). Why not join the network today? https://womeninrobotics.org/

On International Women’s Day (8 March), the European Commission celebrates the #EUWomen4future campaign and the EU Prize for Women Innovators with a seminar co-hosted by Commissioner Mariya Gabriel and President Roberta Metsola. The event highlights women’s professional achievements in culture, education, sport and science. You can attend the event online here and still use the hashtag.

Women in Robotics publication on Ada Lovelace’s Day. (Photo credit: Women in Robotics)

This article offers a peek at a long list of projects, activities and work concerning gender equality in the area of science and technology (mainly in the EU), and some specifically in robotics. We are in a period of change, of “Me too”, of breaking ceilings and structural inequality, of building the future we want for our sisters, our daughters, our partners, and most important of all, for ourselves. Don’t be afraid to be challenging, don’t be afraid to be loud.